|

|

|

|

@ -1,3 +1,6 @@

|

|

|

|

|

# 一、硬部署

|

|

|

|

|

无条件,可直接硬部署MYSQL与REDIS,即可使用项目。

|

|

|

|

|

|

|

|

|

|

## 01、安装MYSQL

|

|

|

|

|

|

|

|

|

|

**一**、下载并安装mysql:

|

|

|

|

|

@ -45,9 +48,67 @@ flush privileges;

|

|

|

|

|

exit

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**七**、在云服务上增加MySQL的端口

|

|

|

|

|

**七**、在云服务上增加MySQL的端口(打开防火墙对应端口)

|

|

|

|

|

|

|

|

|

|

## 02、安装REDIS

|

|

|

|

|

**一**、安装redis:

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

yum -y update

|

|

|

|

|

yum -y install redis

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**二**、修改配置文件

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

vi /etc/redis.conf

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

protected-mode no

|

|

|

|

|

port 6379

|

|

|

|

|

timeout 0

|

|

|

|

|

save 900 1

|

|

|

|

|

save 300 10

|

|

|

|

|

save 60 10000

|

|

|

|

|

rdbcompression yes

|

|

|

|

|

dbfilename dump.rdb

|

|

|

|

|

appendonly yes

|

|

|

|

|

appendfsync everysec

|

|

|

|

|

requirepass austin

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**三**、启动redis

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

systemctl start redis

|

|

|

|

|

service redis start

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**四**、检查redis状态

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

sudo systemctl status redis

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**五**、连接redis

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

# 默认端口号6379

|

|

|

|

|

redis-cli

|

|

|

|

|

|

|

|

|

|

# 验证密码

|

|

|

|

|

AUTH austin

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**六**、在云服务上增加Redis的端口(打开防火墙对应端口)

|

|

|

|

|

|

|

|

|

|

## 02、安装DOCKER和DOCKER-COMPOSE

|

|

|

|

|

---

|

|

|

|

|

|

|

|

|

|

# 二、DOCKER-COMPOSE方式部署

|

|

|

|

|

为方便管理与部署,可以选择DOCKER-COMPOSE方式部署组件,同理除了MYSQL与REDIS,其余组件都是**可选**。

|

|

|

|

|

|

|

|

|

|

## 01、安装DOCKER和DOCKER-COMPOSE

|

|

|

|

|

|

|

|

|

|

首先我们需要安装GCC相关的环境:

|

|

|

|

|

|

|

|

|

|

@ -121,17 +182,104 @@ sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

|

|

|

|

|

docker-compose --version

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(Austin项目的中间件使用docker进行部署,文件内容可以参考项目中`docker`文件夹)

|

|

|

|

|

|

|

|

|

|

## 03、安装KAFKA

|

|

|

|

|

## 02、安装MySql

|

|

|

|

|

|

|

|

|

|

新建搭建kafka环境的`docker-compose.yml`文件,内容如下:

|

|

|

|

|

`docker-compose.yaml`文件如下

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

mysql:

|

|

|

|

|

image: mysql:5.7

|

|

|

|

|

container_name: mysql

|

|

|

|

|

restart: always

|

|

|

|

|

ports:

|

|

|

|

|

- 3306:3306

|

|

|

|

|

volumes:

|

|

|

|

|

- mysql-data:/var/lib/mysql

|

|

|

|

|

environment:

|

|

|

|

|

MYSQL_ROOT_PASSWORD: root123_A

|

|

|

|

|

TZ: Asia/Shanghai

|

|

|

|

|

command: --character-set-server=utf8mb4 --collation-server=utf8mb4_unicode_ci

|

|

|

|

|

volumes:

|

|

|

|

|

mysql-data:

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

docker-compose up -d

|

|

|

|

|

|

|

|

|

|

docker ps

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

部署后,初始化SQL为./doc/sql/austin.sql,其余SQL安装对应组件才需要

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/mysql目录**

|

|

|

|

|

|

|

|

|

|

## 03、安装REDIS

|

|

|

|

|

|

|

|

|

|

新建一个文件夹`redis`,然后在该目录下创建出`data`文件夹、`redis.conf`文件和`docker-compose.yaml`文件

|

|

|

|

|

|

|

|

|

|

`redis.conf`文件的内容如下(后面的配置可在这更改,比如requirepass 我指定的密码为`austin`)

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

protected-mode no

|

|

|

|

|

port 6379

|

|

|

|

|

timeout 0

|

|

|

|

|

save 900 1

|

|

|

|

|

save 300 10

|

|

|

|

|

save 60 10000

|

|

|

|

|

rdbcompression yes

|

|

|

|

|

dbfilename dump.rdb

|

|

|

|

|

dir /data

|

|

|

|

|

appendonly yes

|

|

|

|

|

appendfsync everysec

|

|

|

|

|

requirepass austin

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

`docker-compose.yaml`的文件内容如下:

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

zookepper:

|

|

|

|

|

redis:

|

|

|

|

|

image: redis:3.2

|

|

|

|

|

container_name: redis

|

|

|

|

|

restart: always

|

|

|

|

|

ports:

|

|

|

|

|

- 6379:6379

|

|

|

|

|

volumes:

|

|

|

|

|

- ./redis.conf:/usr/local/etc/redis/redis.conf:rw

|

|

|

|

|

- ./data:/data:rw

|

|

|

|

|

command:

|

|

|

|

|

/bin/bash -c "redis-server /usr/local/etc/redis/redis.conf"

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

配置的工作就完了,如果是云服务器,记得开redis端口**6379**

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

docker-compose up -d

|

|

|

|

|

|

|

|

|

|

docker ps

|

|

|

|

|

|

|

|

|

|

docker exec -it redis redis-cli

|

|

|

|

|

|

|

|

|

|

auth austin

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/redis目录**

|

|

|

|

|

|

|

|

|

|

## 04、安装KAFKA(可选)

|

|

|

|

|

|

|

|

|

|

新建搭建kafka环境的`docker-compose.yml`文件,内容如下:

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

zookeeper:

|

|

|

|

|

image: wurstmeister/zookeeper # 原镜像`wurstmeister/zookeeper`

|

|

|

|

|

container_name: zookeeper # 容器名为'zookeeper'

|

|

|

|

|

volumes: # 数据卷挂载路径设置,将本机目录映射到容器目录

|

|

|

|

|

@ -154,10 +302,10 @@ services:

|

|

|

|

|

ports: # 映射端口

|

|

|

|

|

- "9092:9092"

|

|

|

|

|

depends_on: # 解决容器依赖启动先后问题

|

|

|

|

|

- zookepper

|

|

|

|

|

- zookeeper

|

|

|

|

|

|

|

|

|

|

kafka-manager:

|

|

|

|

|

image: sheepkiller/kafka-manager # 原镜像`sheepkiller/kafka-manager`

|

|

|

|

|

image: kafkamanager/kafka-manager # 原镜像`sheepkiller/kafka-manager`

|

|

|

|

|

container_name: kafka-manager # 容器名为'kafka-manager'

|

|

|

|

|

environment: # 设置环境变量,相当于docker run命令中的-e

|

|

|

|

|

ZK_HOSTS: zookeeper:2181

|

|

|

|

|

@ -208,65 +356,55 @@ $KAFKA_HOME/bin/kafka-topics.sh --create --topic austinRecall --partitions 1 --z

|

|

|

|

|

$KAFKA_HOME/bin/kafka-topics.sh --zookeeper zookeeper:2181 --describe --topic austinBusiness

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

## 04、安装REDIS

|

|

|

|

|

|

|

|

|

|

首先,我们新建一个文件夹`redis`,然后在该目录下创建出`data`文件夹、`redis.conf`文件和`docker-compose.yaml`文件

|

|

|

|

|

|

|

|

|

|

`redis.conf`文件的内容如下(后面的配置可在这更改,比如requirepass 我指定的密码为`austin`)

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

protected-mode no

|

|

|

|

|

port 6379

|

|

|

|

|

timeout 0

|

|

|

|

|

save 900 1

|

|

|

|

|

save 300 10

|

|

|

|

|

save 60 10000

|

|

|

|

|

rdbcompression yes

|

|

|

|

|

dbfilename dump.rdb

|

|

|

|

|

dir /data

|

|

|

|

|

appendonly yes

|

|

|

|

|

appendfsync everysec

|

|

|

|

|

requirepass austin

|

|

|

|

|

**安装文件详见./doc/docker/kafka目录**

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

## 05、安装APOLLO(可选)

|

|

|

|

|

|

|

|

|

|

`docker-compose.yaml`的文件内容如下:

|

|

|

|

|

```yaml

|

|

|

|

|

version: '2.1'

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

redis:

|

|

|

|

|

image: redis:latest

|

|

|

|

|

container_name: redis

|

|

|

|

|

restart: always

|

|

|

|

|

apollo-quick-start:

|

|

|

|

|

image: nobodyiam/apollo-quick-start

|

|

|

|

|

container_name: apollo-quick-start

|

|

|

|

|

depends_on:

|

|

|

|

|

apollo-db:

|

|

|

|

|

condition: service_healthy

|

|

|

|

|

ports:

|

|

|

|

|

- 6379:6379

|

|

|

|

|

- "8080:8080"

|

|

|

|

|

- "8090:8090"

|

|

|

|

|

- "8070:8070"

|

|

|

|

|

links:

|

|

|

|

|

- apollo-db

|

|

|

|

|

|

|

|

|

|

apollo-db:

|

|

|

|

|

image: mysql:5.7

|

|

|

|

|

container_name: apollo-db

|

|

|

|

|

environment:

|

|

|

|

|

TZ: Asia/Shanghai

|

|

|

|

|

MYSQL_ALLOW_EMPTY_PASSWORD: 'yes'

|

|

|

|

|

healthcheck:

|

|

|

|

|

test: ["CMD", "mysqladmin" ,"ping", "-h", "localhost"]

|

|

|

|

|

interval: 5s

|

|

|

|

|

timeout: 1s

|

|

|

|

|

retries: 10

|

|

|

|

|

depends_on:

|

|

|

|

|

- apollo-dbdata

|

|

|

|

|

ports:

|

|

|

|

|

- "13306:3306"

|

|

|

|

|

volumes:

|

|

|

|

|

- ./redis.conf:/usr/local/etc/redis/redis.conf:rw

|

|

|

|

|

- ./data:/data:rw

|

|

|

|

|

command:

|

|

|

|

|

/bin/bash -c "redis-server /usr/local/etc/redis/redis.conf "

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

配置的工作就完了,如果是云服务器,记得开redis端口**6379**

|

|

|

|

|

|

|

|

|

|

启动Redis跟之前安装Kafka的时候就差不多啦

|

|

|

|

|

- ./sql:/docker-entrypoint-initdb.d

|

|

|

|

|

volumes_from:

|

|

|

|

|

- apollo-dbdata

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

docker-compose up -d

|

|

|

|

|

|

|

|

|

|

docker ps

|

|

|

|

|

|

|

|

|

|

docker exec -it redis redis-cli

|

|

|

|

|

|

|

|

|

|

auth austin

|

|

|

|

|

apollo-dbdata:

|

|

|

|

|

image: alpine:latest

|

|

|

|

|

container_name: apollo-dbdata

|

|

|

|

|

volumes:

|

|

|

|

|

- /var/lib/mysql

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

## 05、安装APOLLO

|

|

|

|

|

|

|

|

|

|

部署Apollo跟之前一样直接用`docker-compose`就完事了,在GitHub已经给出了对应的教程和`docker-compose.yml`以及相关的文件,直接复制粘贴就完事咯。

|

|

|

|

|

|

|

|

|

|

**PS: Apollo 的docker配置文件可以参考:docker/apollo/文件夹, 简单来说,在 docker/apollo/docker-quick-start/文件夹下执行docker-compose up -d 执行即可.**

|

|

|

|

|

|

|

|

|

|

目录结构最好保持一致:

|

|

|

|

|

@ -295,12 +433,13 @@ apollo配置样例可看example/apollo.properties文件的内容

|

|

|

|

|

|

|

|

|

|

动态线程池样例配置可看 dynamic-tp-apollo-dtp.yml 文件的内容

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/apollo目录**

|

|

|

|

|

|

|

|

|

|

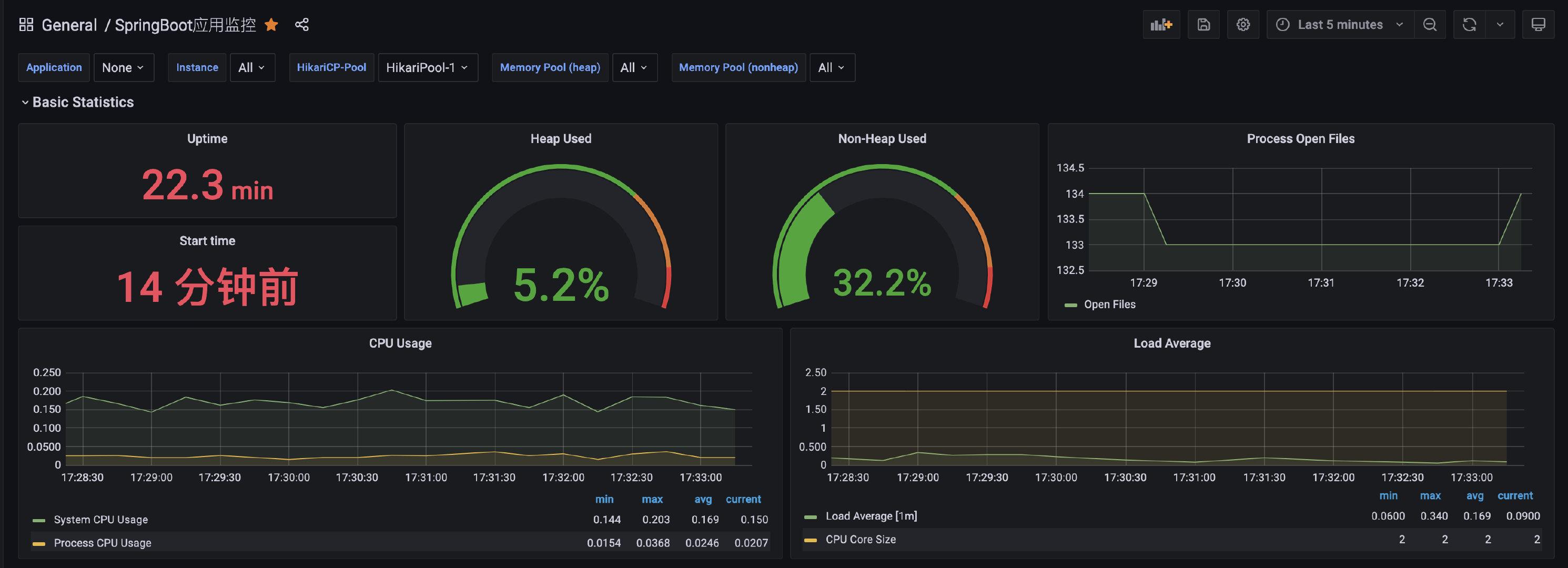

## 06、安装PROMETHEUS和GRAFANA(可选)

|

|

|

|

|

|

|

|

|

|

存放`docker-compose.yml`的信息:

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

```yaml

|

|

|

|

|

version: '2'

|

|

|

|

|

|

|

|

|

|

networks:

|

|

|

|

|

@ -368,20 +507,30 @@ services:

|

|

|

|

|

|

|

|

|

|

新建prometheus的配置文件`prometheus.yml`

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

```yaml

|

|

|

|

|

global:

|

|

|

|

|

scrape_interval: 15s

|

|

|

|

|

evaluation_interval: 15s

|

|

|

|

|

scrape_interval: 1s

|

|

|

|

|

evaluation_interval: 1s

|

|

|

|

|

scrape_configs:

|

|

|

|

|

- job_name: 'prometheus'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:9090']

|

|

|

|

|

static_configs: # TODO ip地址自己填我有相同的端口,因为是有两台机器,你们可以干掉相同的端口

|

|

|

|

|

- targets: ['ip:9090']

|

|

|

|

|

- job_name: 'cadvisor'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:8899']

|

|

|

|

|

- targets: ['ip:8899']

|

|

|

|

|

- job_name: 'node'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:9100']

|

|

|

|

|

- targets: ['ip:9100']

|

|

|

|

|

- job_name: 'cadvisor2'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:8899']

|

|

|

|

|

- job_name: 'node2'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:9100']

|

|

|

|

|

- job_name: 'austin'

|

|

|

|

|

metrics_path: '/actuator/prometheus'

|

|

|

|

|

static_configs:

|

|

|

|

|

- targets: ['ip:8888']

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

(**这里要注意端口,按自己配置的来,ip也要填写为自己的**)

|

|

|

|

|

@ -434,6 +583,8 @@ import后就能直接看到高大上的监控页面了:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/prometheus目录**

|

|

|

|

|

|

|

|

|

|

## 07、安装GRAYLOG(可选)-分布式日志收集框架

|

|

|

|

|

|

|

|

|

|

`docker-compose.yml`文件内容:

|

|

|

|

|

@ -500,32 +651,32 @@ networks:

|

|

|

|

|

|

|

|

|

|

最后配置`austin.grayLogIp`的ip即可实现分布式日志收集

|

|

|

|

|

|

|

|

|

|

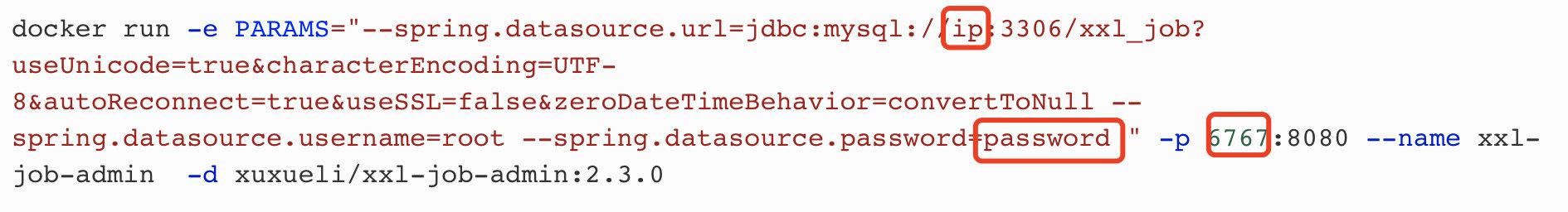

## 08、XXL-JOB

|

|

|

|

|

|

|

|

|

|

文档:[https://www.xuxueli.com/xxl-job/#2.1%20%E5%88%9D%E5%A7%8B%E5%8C%96%E2%80%9C%E8%B0%83%E5%BA%A6%E6%95%B0%E6%8D%AE%E5%BA%93%E2%80%9D](https://www.xuxueli.com/xxl-job/#2.1%20%E5%88%9D%E5%A7%8B%E5%8C%96%E2%80%9C%E8%B0%83%E5%BA%A6%E6%95%B0%E6%8D%AE%E5%BA%93%E2%80%9D)

|

|

|

|

|

|

|

|

|

|

xxl-job的部署我这边其实是依赖官网的文档的,步骤可以简单总结为:

|

|

|

|

|

**安装文件详见./doc/docker/graylog目录**

|

|

|

|

|

|

|

|

|

|

**1**、把xxl-job的仓库拉下来

|

|

|

|

|

## 08、XXL-JOB(可选)

|

|

|

|

|

|

|

|

|

|

**2**、执行`/xxl-job/doc/db/tables_xxl_job.sql`的脚本(创建对应的库、创建表以及插入测试数据记录)

|

|

|

|

|

|

|

|

|

|

**3**、如果是**本地**启动「调度中心」则在`xxl-job-admin`的`application.properties`更改相应的数据库配置,改完启动即可

|

|

|

|

|

|

|

|

|

|

**4**、如果是**云服务**启动「调度中心」,则可以选择拉取`docker`镜像进行部署,我拉取的是`2.30`版本,随后执行以下命令即可:

|

|

|

|

|

|

|

|

|

|

```shell

|

|

|

|

|

docker pull xuxueli/xxl-job-admin:2.3.0

|

|

|

|

|

|

|

|

|

|

docker run -e PARAMS="--spring.datasource.url=jdbc:mysql://ip:3306/xxl_job?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&useSSL=false&zeroDateTimeBehavior=convertToNull --spring.datasource.username=root --spring.datasource.password=password " -p 6767:8080 --name xxl-job-admin -d xuxueli/xxl-job-admin:2.3.0

|

|

|

|

|

`docker-compose.yaml`文件如下

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

austin-xxl-job:

|

|

|

|

|

image: xuxueli/xxl-job-admin:2.3.0

|

|

|

|

|

container_name: xxl-job-admin

|

|

|

|

|

restart: always

|

|

|

|

|

ports:

|

|

|

|

|

- "6767:8080"

|

|

|

|

|

environment:

|

|

|

|

|

PARAMS: '--spring.datasource.url=jdbc:mysql://ip:3306/xxl_job?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&useSSL=false&zeroDateTimeBehavior=convertToNull --spring.datasource.username=root --spring.datasource.password=root123_A'

|

|

|

|

|

# TODO 添加MySql网络,并更改ip

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**注意**:第二条命令的**ip**和**password**需要更改为自己的,并且,我开的是**6767**端口

|

|

|

|

|

**注意**:**ip**和**password**需要更改为自己的,并且,我开的是**6767**端口

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

## 09、Flink

|

|

|

|

|

**安装文件详见./doc/docker/xxljob目录**

|

|

|

|

|

|

|

|

|

|

## 09、Flink(可选)

|

|

|

|

|

|

|

|

|

|

部署Flink也是直接上docker-compose就完事了,值得注意的是:我们在部署的时候需要在配置文件里**指定时区**

|

|

|

|

|

|

|

|

|

|

@ -535,7 +686,7 @@ docker-compose.yml配置内容如下:

|

|

|

|

|

version: "2.2"

|

|

|

|

|

services:

|

|

|

|

|

jobmanager:

|

|

|

|

|

image: flink:latest

|

|

|

|

|

image: flink:1.16.1

|

|

|

|

|

ports:

|

|

|

|

|

- "8081:8081"

|

|

|

|

|

command: jobmanager

|

|

|

|

|

@ -547,7 +698,7 @@ services:

|

|

|

|

|

- CONTAINER_TIMEZONE=Asia/Shanghai

|

|

|

|

|

- TZ=Asia/Shanghai

|

|

|

|

|

taskmanager:

|

|

|

|

|

image: flink:latest

|

|

|

|

|

image: flink:1.16.1

|

|

|

|

|

depends_on:

|

|

|

|

|

- jobmanager

|

|

|

|

|

command: taskmanager

|

|

|

|

|

@ -560,10 +711,11 @@ services:

|

|

|

|

|

- CONTAINER_TIMEZONE=Asia/Shanghai

|

|

|

|

|

- TZ=Asia/Shanghai

|

|

|

|

|

```

|

|

|

|

|

**安装文件详见./doc/docker/flink目录**

|

|

|

|

|

|

|

|

|

|

## 10、HIVE

|

|

|

|

|

## 10、HIVE(可选)

|

|

|

|

|

|

|

|

|

|

部署Flink也是直接上docker-compose就完事了

|

|

|

|

|

部署Hive也是直接上docker-compose就完事了

|

|

|

|

|

|

|

|

|

|

1、把仓库拉到自己的服务器上

|

|

|

|

|

|

|

|

|

|

@ -648,7 +800,9 @@ docker-compose exec hive-server bash

|

|

|

|

|

/opt/hive/bin/beeline -u jdbc:hive2://localhost:10000

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

## 11、FLINK和HIVE融合

|

|

|

|

|

**安装文件详见./doc/docker/hive目录**

|

|

|

|

|

|

|

|

|

|

## 11、FLINK和HIVE融合(可选)

|

|

|

|

|

|

|

|

|

|

实时流处理的flink用的是docker-compose进行部署,而与hive融合的flink我这边是正常的姿势安装(主要是涉及的环境很多,用docker-compose就相对没那么方便了)

|

|

|

|

|

|

|

|

|

|

@ -881,23 +1035,154 @@ $KAFKA_HOME/bin/kafka-console-producer.sh --topic austinTraceLog --broker-list

|

|

|

|

|

{"state":"1","businessId":"2","ids":[1,2,3],"logTimestamp":"123123"}

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

## 12、安装METABASE

|

|

|

|

|

## 12、安装METABASE(可选)

|

|

|

|

|

|

|

|

|

|

部署`Metabase`很简单,也是使用`docker`进行安装部署,就两行命令(后续我会将其加入到`docker-compose`里面)。

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

|

|

|

|

|

```shell

|

|

|

|

|

docker pull metabase/metabase:latest

|

|

|

|

|

services:

|

|

|

|

|

metabase:

|

|

|

|

|

image: metabase/metabase

|

|

|

|

|

container_name: metabase

|

|

|

|

|

ports:

|

|

|

|

|

- "5001:3000"

|

|

|

|

|

restart: always

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

```shell

|

|

|

|

|

docker run -d -p 5001:3000 --name metabase metabase/metabase

|

|

|

|

|

**安装文件详见./doc/docker/metabase目录**

|

|

|

|

|

|

|

|

|

|

## 13、安装单机nacos(可选)

|

|

|

|

|

|

|

|

|

|

`docker-compose.yaml`文件如下

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: "3"

|

|

|

|

|

services:

|

|

|

|

|

nacos1:

|

|

|

|

|

container_name: nacos-server

|

|

|

|

|

hostname: nacos-server

|

|

|

|

|

image: nacos/nacos-server:v2.1.0

|

|

|

|

|

environment:

|

|

|

|

|

- MODE=standalone

|

|

|

|

|

- PREFER_HOST_MODE=hostname

|

|

|

|

|

- SPRING_DATASOURCE_PLATFORM=mysql

|

|

|

|

|

- MYSQL_SERVICE_HOST=ip # TODO ip需设置

|

|

|

|

|

- MYSQL_SERVICE_PORT=3306

|

|

|

|

|

- MYSQL_SERVICE_USER=root

|

|

|

|

|

- MYSQL_SERVICE_PASSWORD=root123_A

|

|

|

|

|

- MYSQL_SERVICE_DB_NAME=nacos_config

|

|

|

|

|

- JVM_XMS=128m

|

|

|

|

|

- JVM_XMX=128m

|

|

|

|

|

- JVM_XMN=128m

|

|

|

|

|

volumes:

|

|

|

|

|

- /home/nacos/single-logs/nacos-server:/home/nacos/logs

|

|

|

|

|

- /home/nacos/init.d:/home/nacos/init.d

|

|

|

|

|

ports:

|

|

|

|

|

- 8848:8848

|

|

|

|

|

- 9848:9848

|

|

|

|

|

- 9849:9849

|

|

|

|

|

restart: on-failure

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/nacos目录**

|

|

|

|

|

|

|

|

|

|

## 14、安装单机rabbitmq(可选)

|

|

|

|

|

|

|

|

|

|

`docker-compose.yaml`文件如下

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3'

|

|

|

|

|

services:

|

|

|

|

|

rabbitmq:

|

|

|

|

|

image: registry.cn-hangzhou.aliyuncs.com/zhengqing/rabbitmq:3.7.8-management # 原镜像`rabbitmq:3.7.8-management` 【 注:该版本包含了web控制页面 】

|

|

|

|

|

container_name: rabbitmq # 容器名为'rabbitmq'

|

|

|

|

|

hostname: my-rabbit

|

|

|

|

|

restart: unless-stopped # 指定容器退出后的重启策略为始终重启,但是不考虑在Docker守护进程启动时就已经停止了的容器

|

|

|

|

|

environment: # 设置环境变量,相当于docker run命令中的-e

|

|

|

|

|

TZ: Asia/Shanghai

|

|

|

|

|

LANG: en_US.UTF-8

|

|

|

|

|

RABBITMQ_DEFAULT_VHOST: my_vhost # 主机名

|

|

|

|

|

RABBITMQ_DEFAULT_USER: admin # 登录账号

|

|

|

|

|

RABBITMQ_DEFAULT_PASS: admin # 登录密码

|

|

|

|

|

volumes: # 数据卷挂载路径设置,将本机目录映射到容器目录

|

|

|

|

|

- "./rabbitmq/data:/var/lib/rabbitmq"

|

|

|

|

|

ports: # 映射端口

|

|

|

|

|

- "5672:5672"

|

|

|

|

|

- "15672:15672"

|

|

|

|

|

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

完了之后,我们就可以打开`5001`端口到`Metabase`的后台了。

|

|

|

|

|

**安装文件详见./doc/docker/rabbitmq目录**

|

|

|

|

|

|

|

|

|

|

## 15、安装单机rocketmq(可选)

|

|

|

|

|

|

|

|

|

|

`docker-compose.yaml`文件如下

|

|

|

|

|

|

|

|

|

|

```yaml

|

|

|

|

|

version: '3.5'

|

|

|

|

|

services:

|

|

|

|

|

# mq服务

|

|

|

|

|

rocketmq_server:

|

|

|

|

|

image: foxiswho/rocketmq:server

|

|

|

|

|

container_name: rocketmq_server

|

|

|

|

|

ports:

|

|

|

|

|

- 9876:9876

|

|

|

|

|

volumes:

|

|

|

|

|

- ./rocketmq/rocketmq_server/logs:/opt/logs

|

|

|

|

|

- ./rocketmq/rocketmq_server/store:/opt/store

|

|

|

|

|

networks:

|

|

|

|

|

rocketmq:

|

|

|

|

|

aliases:

|

|

|

|

|

- rocketmq_server

|

|

|

|

|

|

|

|

|

|

# mq中间件

|

|

|

|

|

rocketmq_broker:

|

|

|

|

|

image: foxiswho/rocketmq:broker

|

|

|

|

|

container_name: rocketmq_broker

|

|

|

|

|

ports:

|

|

|

|

|

- 10909:10909

|

|

|

|

|

- 10911:10911

|

|

|

|

|

volumes:

|

|

|

|

|

- ./rocketmq/rocketmq_broker/logs:/opt/logs

|

|

|

|

|

- ./rocketmq/rocketmq_broker/store:/opt/store

|

|

|

|

|

- ./rocketmq/rocketmq_broker/conf/broker.conf:/etc/rocketmq/broker.conf

|

|

|

|

|

environment:

|

|

|

|

|

NAMESRV_ADDR: "rocketmq_server:9876"

|

|

|

|

|

JAVA_OPTS: " -Duser.home=/opt"

|

|

|

|

|

JAVA_OPT_EXT: "-server -Xms128m -Xmx128m -Xmn128m"

|

|

|

|

|

command: mqbroker -c /etc/rocketmq/broker.conf

|

|

|

|

|

depends_on:

|

|

|

|

|

- rocketmq_server

|

|

|

|

|

networks:

|

|

|

|

|

rocketmq:

|

|

|

|

|

aliases:

|

|

|

|

|

- rocketmq_broker

|

|

|

|

|

|

|

|

|

|

# mq可视化控制台

|

|

|

|

|

rocketmq_console_ng:

|

|

|

|

|

image: styletang/rocketmq-console-ng

|

|

|

|

|

container_name: rocketmq_console_ng

|

|

|

|

|

ports:

|

|

|

|

|

- 9002:8080

|

|

|

|

|

environment:

|

|

|

|

|

JAVA_OPTS: "-Drocketmq.namesrv.addr=rocketmq_server:9876 -Dcom.rocketmq.sendMessageWithVIPChannel=false"

|

|

|

|

|

depends_on:

|

|

|

|

|

- rocketmq_server

|

|

|

|

|

networks:

|

|

|

|

|

rocketmq:

|

|

|

|

|

aliases:

|

|

|

|

|

- rocketmq_console_ng

|

|

|

|

|

|

|

|

|

|

#容器通信network

|

|

|

|

|

networks:

|

|

|

|

|

rocketmq:

|

|

|

|

|

name: rocketmq

|

|

|

|

|

driver: bridge

|

|

|

|

|

```

|

|

|

|

|

|

|

|

|

|

**安装文件详见./doc/docker/rocketmq目录**

|

|

|

|

|

|

|

|

|

|

## 13、未完待续

|

|

|

|

|

## 未完待续

|

|

|

|

|

|

|

|

|

|

安装更详细的过程以及整个文章系列的更新思路都在公众号**Java3y**连载哟!

|

|

|

|

|

|

|

|

|

|

|