commit

6fddaf27e7

@ -1,27 +0,0 @@

# Copyright © 2023 OpenIM. All rights reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

#more datasource-compose.yaml
apiVersion: 1

datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    orgId: 1
    url: http://127.0.0.1:9091
    basicAuth: false
    isDefault: true
    version: 1
    editable: true

Binary file not shown.

File diff suppressed because it is too large


File diff suppressed because it is too large


@ -1,85 +0,0 @@

# Copyright © 2023 OpenIM. All rights reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

#more prometheus-compose.yml
global:
  scrape_interval: 15s
  evaluation_interval: 15s
  external_labels:
    monitor: 'openIM-monitor'

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9091']

  - job_name: 'openIM-server'
    metrics_path: /metrics
    static_configs:
      - targets: ['localhost:10002']
        labels:
          group: 'api'

      - targets: ['localhost:20110']
        labels:
          group: 'user'

      - targets: ['localhost:20120']
        labels:
          group: 'friend'

      - targets: ['localhost:20130']
        labels:
          group: 'message'

      - targets: ['localhost:20140']
        labels:
          group: 'msg-gateway'

      - targets: ['localhost:20150']
        labels:
          group: 'group'

      - targets: ['localhost:20160']
        labels:
          group: 'auth'

      - targets: ['localhost:20170']
        labels:
          group: 'push'

      - targets: ['localhost:20120']
        labels:
          group: 'friend'

      - targets: ['localhost:20230']
        labels:
          group: 'conversation'

      - targets: ['localhost:21400', 'localhost:21401', 'localhost:21402', 'localhost:21403']
        labels:
          group: 'msg-transfer'

  - job_name: 'node'
    scrape_interval: 8s
    static_configs:
      - targets: ['localhost:9100']

@ -0,0 +1,32 @@

###################### AlertManager Configuration ######################
# AlertManager configuration using environment variables
#
# Resolve timeout
# SMTP configuration for sending alerts
# Templates for email notifications
# Routing configurations for alerts
# Receiver configurations
global:
  resolve_timeout: 5m
  smtp_from: alert@openim.io
  smtp_smarthost: smtp.163.com:465
  smtp_auth_username: alert@openim.io
  smtp_auth_password: YOURAUTHPASSWORD
  smtp_require_tls: false
  smtp_hello: xxx监控告警  # "xxx monitoring alerts"

templates:
  - /etc/alertmanager/email.tmpl

route:
  group_wait: 5s
  group_interval: 5s
  repeat_interval: 5m
  receiver: email
receivers:
  - name: email
    email_configs:
      - to: {EMAIL_TO:-'alert@example.com'}
        html: '{{ template "email.to.html" . }}'
        headers: { Subject: "[OPENIM-SERVER]Alarm" }
        send_resolved: true

@ -0,0 +1,16 @@

{{ define "email.to.html" }}
{{ range .Alerts }}
<!-- Begin of OpenIM Alert -->
<div style="border:1px solid #ccc; padding:10px; margin-bottom:10px;">
    <h3>OpenIM Alert</h3>
    <p><strong>Alert Program:</strong> Prometheus Alert</p>
    <p><strong>Severity Level:</strong> {{ .Labels.severity }}</p>
    <p><strong>Alert Type:</strong> {{ .Labels.alertname }}</p>
    <p><strong>Affected Host:</strong> {{ .Labels.instance }}</p>
    <p><strong>Affected Service:</strong> {{ .Labels.job }}</p>
    <p><strong>Alert Subject:</strong> {{ .Annotations.summary }}</p>
    <p><strong>Trigger Time:</strong> {{ .StartsAt.Format "2006-01-02 15:04:05" }}</p>
</div>
<!-- End of OpenIM Alert -->
{{ end }}
{{ end }}

@ -0,0 +1,11 @@

groups:
  - name: instance_down
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: critical
        annotations:
          summary: "Instance {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."

File diff suppressed because it is too large


@ -0,0 +1,85 @@

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets: ['172.28.0.1:19093']

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  - "instance-down-rules.yml"
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label "job='job_name'" to any timeseries scraped from this config.
  # Monitored information captured by prometheus
  - job_name: 'node-exporter'
    static_configs:
      - targets: [ '172.28.0.1:19100' ]
        labels:
          namespace: 'default'

  # prometheus fetches application services
  - job_name: 'openimserver-openim-api'
    static_configs:
      - targets: [ '172.28.0.1:20100' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-msggateway'
    static_configs:
      - targets: [ '172.28.0.1:20140' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-msgtransfer'
    static_configs:
      - targets: [ 172.28.0.1:21400, 172.28.0.1:21401, 172.28.0.1:21402, 172.28.0.1:21403 ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-push'
    static_configs:
      - targets: [ '172.28.0.1:20170' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-auth'
    static_configs:
      - targets: [ '172.28.0.1:20160' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-conversation'
    static_configs:
      - targets: [ '172.28.0.1:20230' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-friend'
    static_configs:
      - targets: [ '172.28.0.1:20120' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-group'
    static_configs:
      - targets: [ '172.28.0.1:20150' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-msg'
    static_configs:
      - targets: [ '172.28.0.1:20130' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-third'
    static_configs:
      - targets: [ '172.28.0.1:21301' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-user'
    static_configs:
      - targets: [ '172.28.0.1:20110' ]
        labels:
          namespace: 'default'

@ -0,0 +1,32 @@

###################### AlertManager Configuration ######################
# AlertManager configuration using environment variables
#
# Resolve timeout
# SMTP configuration for sending alerts
# Templates for email notifications
# Routing configurations for alerts
# Receiver configurations
global:
  resolve_timeout: ${ALERTMANAGER_RESOLVE_TIMEOUT}
  smtp_from: ${ALERTMANAGER_SMTP_FROM}
  smtp_smarthost: ${ALERTMANAGER_SMTP_SMARTHOST}
  smtp_auth_username: ${ALERTMANAGER_SMTP_AUTH_USERNAME}
  smtp_auth_password: ${ALERTMANAGER_SMTP_AUTH_PASSWORD}
  smtp_require_tls: ${ALERTMANAGER_SMTP_REQUIRE_TLS}
  smtp_hello: ${ALERTMANAGER_SMTP_HELLO}

templates:
  - /etc/alertmanager/email.tmpl

route:
  group_wait: 5s
  group_interval: 5s
  repeat_interval: 5m
  receiver: email
receivers:
  - name: email
    email_configs:
      - to: ${ALERTMANAGER_EMAIL_TO}
        html: '{{ template "email.to.html" . }}'
        headers: { Subject: "[OPENIM-SERVER]Alarm" }
        send_resolved: true

@ -0,0 +1,85 @@

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets: ['${ALERT_MANAGER_ADDRESS}:${ALERT_MANAGER_PORT}']

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  - "instance-down-rules.yml"
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label "job='job_name'" to any timeseries scraped from this config.
  # Monitored information captured by prometheus
  - job_name: 'node-exporter'
    static_configs:
      - targets: [ '${NODE_EXPORTER_ADDRESS}:${NODE_EXPORTER_PORT}' ]
        labels:
          namespace: 'default'

  # prometheus fetches application services
  - job_name: 'openimserver-openim-api'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${API_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-msggateway'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${MSG_GATEWAY_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-msgtransfer'
    static_configs:
      - targets: [ ${MSG_TRANSFER_PROM_ADDRESS_PORT} ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-push'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${PUSH_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-auth'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${AUTH_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-conversation'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${CONVERSATION_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-friend'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${FRIEND_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-group'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${GROUP_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-msg'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${MESSAGE_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-third'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${THIRD_PROM_PORT}' ]
        labels:
          namespace: 'default'
  - job_name: 'openimserver-openim-rpc-user'
    static_configs:
      - targets: [ '${OPENIM_SERVER_ADDRESS}:${USER_PROM_PORT}' ]
        labels:
          namespace: 'default'

@ -0,0 +1,323 @@

# Deployment and Design of OpenIM's Management Backend and Monitoring

<!-- vscode-markdown-toc -->
* 1. [Source Code & Docker](#SourceCodeDocker)
    * 1.1. [Deployment](#Deployment)
    * 1.2. [Configuration](#Configuration)
    * 1.3. [Monitoring Running in Docker Guide](#MonitoringRunninginDockerGuide)
        * 1.3.1. [Introduction](#Introduction)
        * 1.3.2. [Prerequisites](#Prerequisites)
        * 1.3.3. [Step 1: Clone the Repository](#Step1:ClonetheRepository)
        * 1.3.4. [Step 2: Start Docker Compose](#Step2:StartDockerCompose)
        * 1.3.5. [Step 3: Use the OpenIM Web Interface](#Step3:UsetheOpenIMWebInterface)
        * 1.3.6. [Running Effect](#RunningEffect)
        * 1.3.7. [Step 4: Access the Admin Panel](#Step4:AccesstheAdminPanel)
        * 1.3.8. [Step 5: Access the Monitoring Interface](#Step5:AccesstheMonitoringInterface)
        * 1.3.9. [Next Steps](#NextSteps)
        * 1.3.10. [Troubleshooting](#Troubleshooting)
* 2. [Kubernetes](#Kubernetes)
    * 2.1. [Middleware Monitoring](#MiddlewareMonitoring)
    * 2.2. [Custom OpenIM Metrics](#CustomOpenIMMetrics)
    * 2.3. [Node Exporter](#NodeExporter)
* 3. [Setting Up and Configuring AlertManager Using Environment Variables and `make init`](#SettingUpandConfiguringAlertManagerUsingEnvironmentVariablesandmakeinit)
    * 3.1. [Introduction](#Introduction-1)
    * 3.2. [Prerequisites](#Prerequisites-1)
    * 3.3. [Configuration Steps](#ConfigurationSteps)
        * 3.3.1. [Exporting Environment Variables](#ExportingEnvironmentVariables)
        * 3.3.2. [Initializing AlertManager](#InitializingAlertManager)
        * 3.3.3. [Key Configuration Fields](#KeyConfigurationFields)
        * 3.3.4. [Configuring SMTP Authentication Password](#ConfiguringSMTPAuthenticationPassword)
        * 3.3.5. [Useful Links for Common Email Servers](#UsefulLinksforCommonEmailServers)
    * 3.4. [Conclusion](#Conclusion)

<!-- vscode-markdown-toc-config
	numbering=true
	autoSave=true
	/vscode-markdown-toc-config -->
<!-- /vscode-markdown-toc -->

OpenIM offers several deployment options to suit different environments and requirements. They are summarized below:

1. Source Code Deployment:
   + **Regular Source Code Deployment**: Uses the `nohup` method. This basic approach suits development and testing environments. For details, refer to the [Regular Source Code Deployment Guide](https://docs.openim.io/).
   + **Production-Level Deployment**: Uses the `system` method, which offers higher stability and reliability and is better suited to production environments. For details, refer to the [Production-Level Deployment Guide](https://docs.openim.io/guides/gettingStarted/install-openim-linux-system).
2. Cluster Deployment:
   + **Kubernetes Deployment**: Supports two methods, Helm and sealos, and suits environments that require high availability and scalability. For details, refer to the [Kubernetes Deployment Guide](https://docs.openim.io/guides/gettingStarted/k8s-deployment).
3. Docker Deployment:
   + **Regular Docker Deployment**: Suitable for quick deployments and small projects. For details, refer to the [Docker Deployment Guide](https://docs.openim.io/guides/gettingStarted/dockerCompose).
   + **Docker Compose Deployment**: Provides more convenient service management and configuration, suitable for complex multi-container applications.

The following sections describe the deployment steps, monitoring setup, and management backend configuration for these deployment methods, along with usage tips to help you choose the option that best fits your needs.

## 1. <a name='SourceCodeDocker'></a>Source Code & Docker

### 1.1. <a name='Deployment'></a>Deployment

OpenIM deploys openim-server and openim-chat from source code, while other components are deployed via Docker.

For Docker deployment, you can deploy all components with a single command using the [openimsdk/openim-docker](https://github.com/openimsdk/openim-docker) repository. The deployment configuration can be found in [environment.sh](https://github.com/openimsdk/open-im-server/blob/main/scripts/install/environment.sh), which is also the place to get familiar with the available environment variables.

Prometheus is not enabled by default. To enable it, set the following environment variable before executing `make init`:

```bash
export PROMETHEUS_ENABLE=true # Default is false
```

Then, execute:

```bash
make init
docker compose up -d
```

|

||||

|

||||

### 1.2. <a name='Configuration'></a>Configuration

|

||||

|

||||

To configure Prometheus data sources in Grafana, follow these steps:

|

||||

|

||||

1. **Log in to Grafana**: First, open your web browser and access the Grafana URL. If you haven't changed the port, the address is typically [http://localhost:13000](http://localhost:13000/).

|

||||

|

||||

2. **Log in with default credentials**: Grafana's default username and password are both `admin`. You will be prompted to change the password on your first login.

|

||||

|

||||

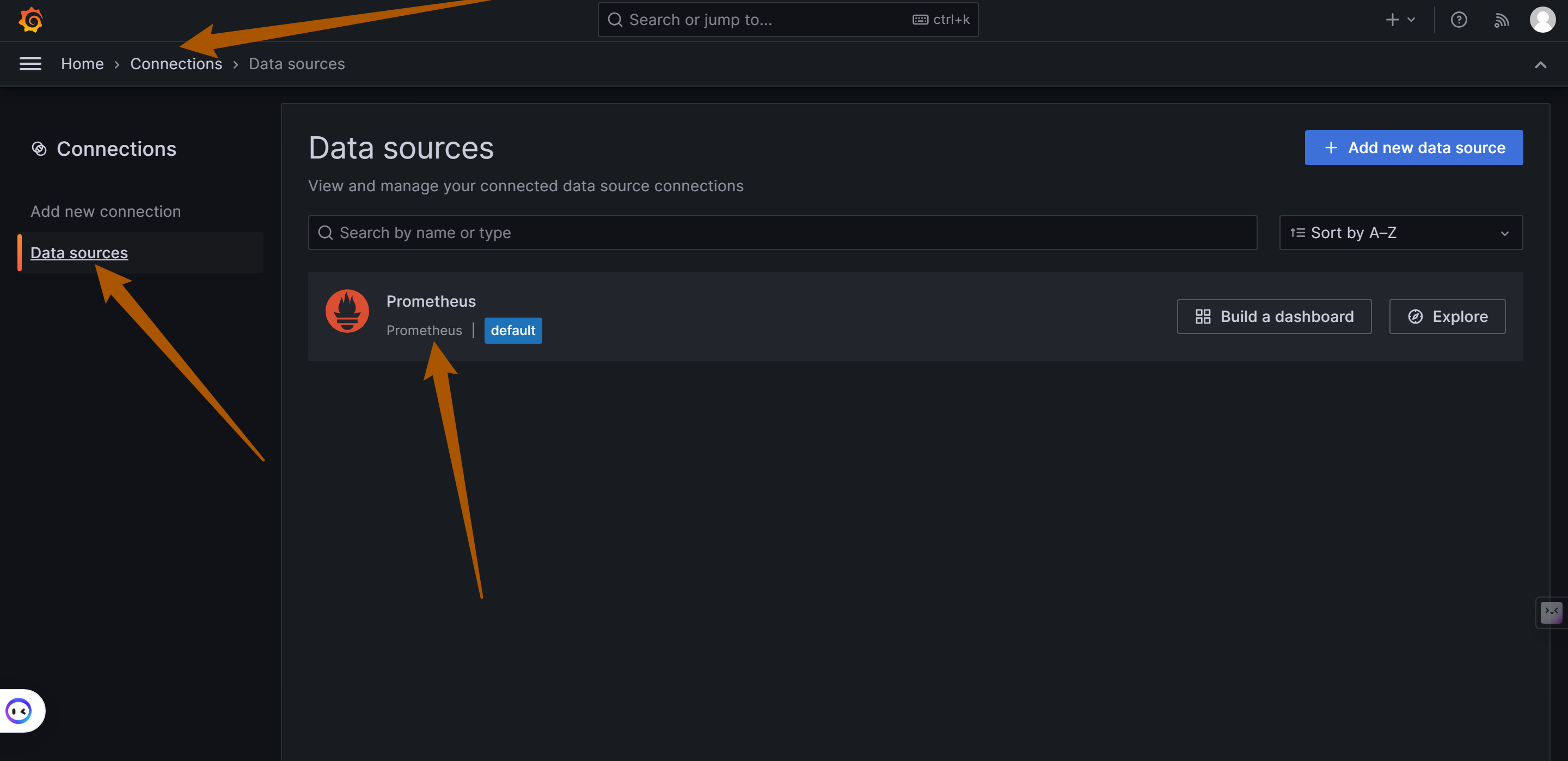

3. **Access Data Sources Settings**:

|

||||

|

||||

+ In the left menu of Grafana, look for and click the "gear" icon representing "Configuration."

|

||||

+ In the configuration menu, select "Data Sources."

|

||||

|

||||

4. **Add a New Data Source**:

|

||||

|

||||

+ On the Data Sources page, click the "Add data source" button.

|

||||

+ In the list, find and select "Prometheus."

|

||||

|

||||

|

||||

|

||||

Click `Add New connection` to add more data sources, such as Loki (responsible for log storage and query processing).

|

||||

|

||||

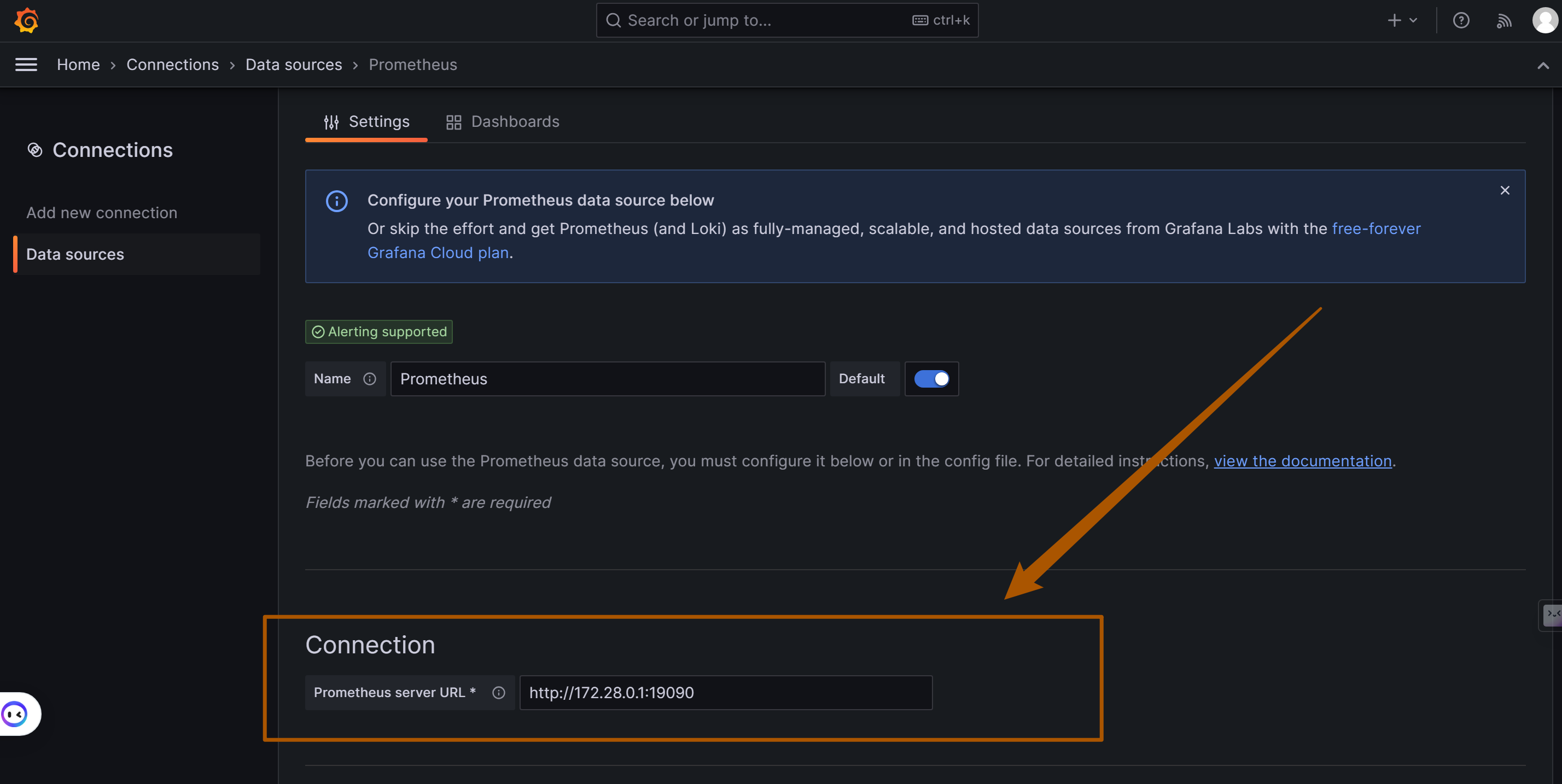

5. **Configure the Prometheus Data Source**:

|

||||

|

||||

+ On the configuration page, fill in the details of the Prometheus server. This typically includes the URL of the Prometheus service (e.g., if Prometheus is running on the same machine as OpenIM, the URL might be `http://172.28.0.1:19090`, with the address matching the `DOCKER_BRIDGE_GATEWAY` variable address). OpenIM and the components are linked via a gateway. The default port used by OpenIM is `19090`.

|

||||

+ Adjust other settings as needed, such as authentication and TLS settings.

|

||||

|

||||

|

||||

|

||||

6. **Save and Test**:

|

||||

|

||||

+ After completing the configuration, click the "Save & Test" button to ensure that Grafana can successfully connect to Prometheus.

|

||||

|

||||

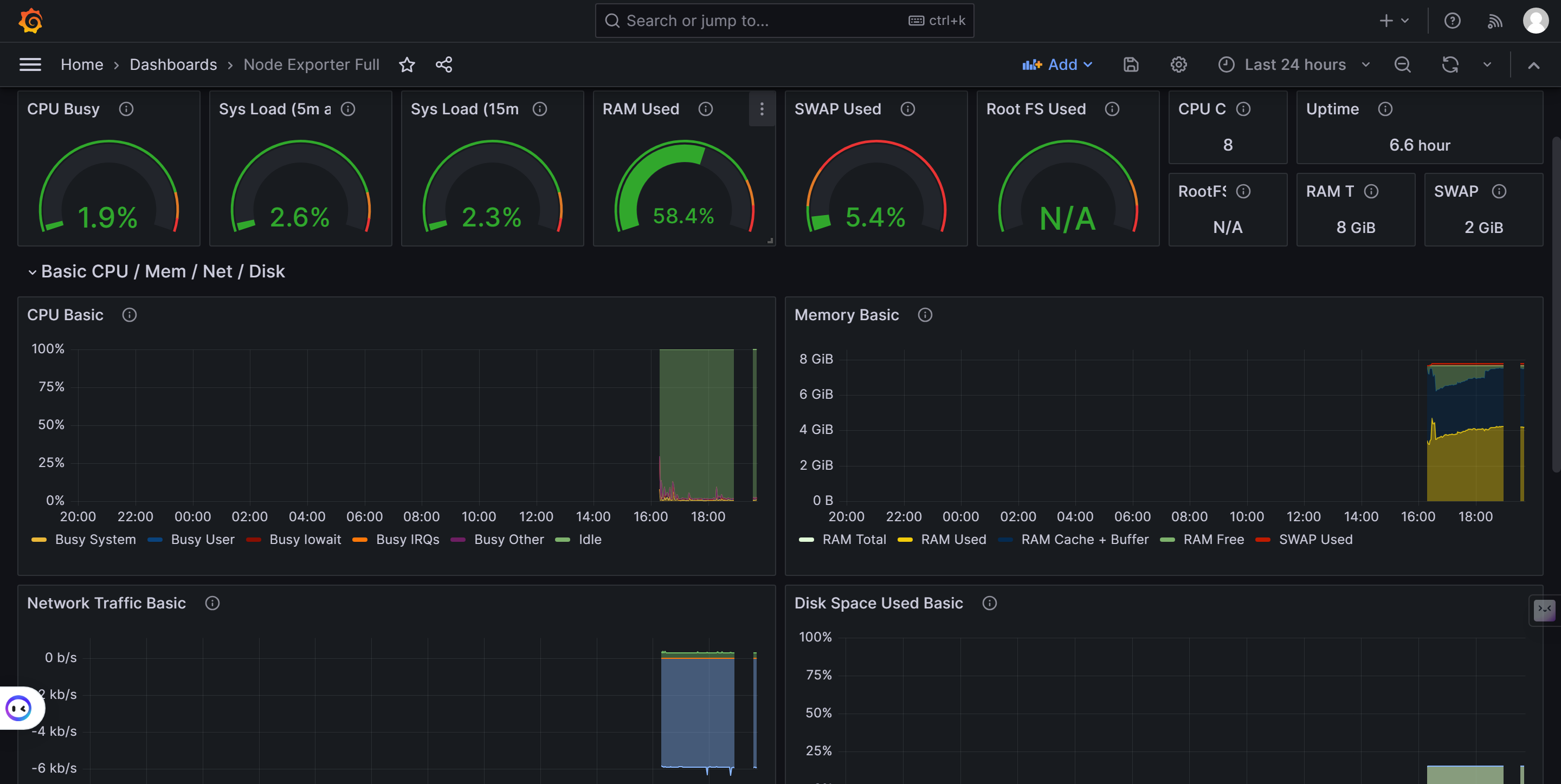
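
The Prometheus URL entered in step 5 can be derived from the same environment variables used at deployment time. The following is a minimal sketch rather than part of the OpenIM scripts: `DOCKER_BRIDGE_GATEWAY` is the variable named in step 5, while `PROMETHEUS_PORT` and the helper `prometheus_url` are assumed names used only for illustration. The fallback values mirror the address and port mentioned in this guide.

```bash
#!/usr/bin/env bash
# Build the Prometheus data source URL to paste into Grafana.
# PROMETHEUS_PORT and prometheus_url are hypothetical names for this sketch.
prometheus_url() {
  local host="${DOCKER_BRIDGE_GATEWAY:-172.28.0.1}"
  local port="${PROMETHEUS_PORT:-19090}"
  printf 'http://%s:%s\n' "$host" "$port"
}

prometheus_url
```

If the gateway address or port was changed before deployment, exporting the same values before calling the helper keeps the Grafana data source consistent with the running stack.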

**Importing Dashboards in Grafana**

Importing Grafana dashboards is straightforward and applies to both the OpenIM Server application services and Node Exporter. The detailed steps and considerations follow.

**Key Metrics Overview and Deployment Steps**

To monitor OpenIM in Grafana, you need to focus on three categories of key metrics, each with its own deployment and configuration steps:

1. **OpenIM Metrics (`prometheus-dashboard.yaml`)**:
   + **Configuration File Path**: Located at `config/prometheus-dashboard.yaml`.
   + **Enabling Monitoring**: Set the environment variable `export PROMETHEUS_ENABLE=true` to enable Prometheus monitoring.
   + **More Information**: Refer to the [OpenIM Configuration Guide](https://docs.openim.io/configurations/prometheus-integration).
2. **Node Exporter**:
   + **Container Deployment**: Deploy the `quay.io/prometheus/node-exporter` container for node monitoring.
   + **Get Dashboard**: Open the [Node Exporter Full Feature Dashboard](https://grafana.com/grafana/dashboards/1860-node-exporter-full/) and import it either by downloading its JSON file or by entering its ID (`1860`).
   + **Deployment Guide**: Refer to the [Node Exporter Deployment Documentation](https://prometheus.io/docs/guides/node-exporter/).
3. **Middleware Metrics**: Each middleware requires specific steps and configurations to enable monitoring. Here is a list of common middleware and links to their respective setup guides:
   + MySQL:
     + **Configuration**: Ensure MySQL has performance monitoring enabled.
     + **Link**: Refer to the [MySQL Monitoring Configuration Guide](https://grafana.com/docs/grafana/latest/datasources/mysql/).
   + Redis:
     + **Configuration**: Configure Redis to allow monitoring data export.
     + **Link**: Refer to the [Redis Monitoring Guide](https://grafana.com/docs/grafana/latest/datasources/redis/).
   + MongoDB:
     + **Configuration**: Set up monitoring metrics for MongoDB.
     + **Link**: Refer to the [MongoDB Monitoring Guide](https://grafana.com/grafana/plugins/grafana-mongodb-datasource/).
   + Kafka:
     + **Configuration**: Integrate Kafka with Prometheus monitoring.
     + **Link**: Refer to the [Kafka Monitoring Guide](https://grafana.com/grafana/plugins/grafana-kafka-datasource/).
   + Zookeeper:
     + **Configuration**: Ensure Zookeeper can be monitored by Prometheus.
     + **Link**: Refer to the [Zookeeper Monitoring Configuration](https://grafana.com/docs/grafana/latest/datasources/zookeeper/).

**Importing Steps**:

1. **Access the Dashboard Import Interface**:

   + Click the `+` icon on the left menu or in the top right corner of Grafana, then select "Create."
   + Choose "Import" to access the dashboard import interface.

2. **Perform Dashboard Import**:
   + **Upload via File**: Directly upload your YAML file.
   + **Paste Content**: Open the YAML file, copy its content, and paste it into the import interface.
   + **Import via Grafana.com Dashboard**: Visit [Grafana Dashboards](https://grafana.com/grafana/dashboards/), search for the desired dashboard, and import it using its ID.
3. **Configure the Dashboard**:
   + Select the appropriate data source, such as the previously configured Prometheus.
   + Adjust other settings, such as the dashboard name or folder.
4. **Save and View the Dashboard**:
   + After configuring, click "Import" to complete the process.
   + After a successful import, the new dashboard opens immediately.

**Graph Examples:**

### 1.3. <a name='MonitoringRunninginDockerGuide'></a>Monitoring Running in Docker Guide

#### 1.3.1. <a name='Introduction'></a>Introduction

This guide provides the steps to run OpenIM using Docker. OpenIM is an open-source instant messaging solution that can be quickly deployed using Docker. For more information, please refer to the [OpenIM Docker GitHub](https://github.com/openimsdk/openim-docker).

#### 1.3.2. <a name='Prerequisites'></a>Prerequisites

+ Docker and Docker Compose installed.
+ A basic understanding of Docker and containerization technology.

#### 1.3.3. <a name='Step1:ClonetheRepository'></a>Step 1: Clone the Repository

First, clone the OpenIM Docker repository:

```bash
git clone https://github.com/openimsdk/openim-docker.git
```

Navigate to the repository directory and check the `README` file for more information and configuration options.

#### 1.3.4. <a name='Step2:StartDockerCompose'></a>Step 2: Start Docker Compose

In the repository directory, run the following command to start the service:

```bash
docker-compose up -d
```

This will download the required Docker images and start the OpenIM service.
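
This guide uses both `docker compose` (the Compose plugin) and `docker-compose` (the standalone binary). As a minimal sketch, assuming either one may be installed, the helper below prints whichever is available; `compose_cmd` is a hypothetical name for this example and is not provided by the repository.

```bash
#!/usr/bin/env bash
# Print the Compose command available on this machine.
# compose_cmd is a hypothetical helper name for this sketch.
compose_cmd() {
  if docker compose version >/dev/null 2>&1; then
    echo "docker compose"
  elif command -v docker-compose >/dev/null 2>&1; then
    echo "docker-compose"
  else
    echo "Docker Compose not found" >&2
    return 1
  fi
}

# Usage, from inside the cloned repository:
#   $(compose_cmd) up -d
```

The plugin form is checked first, so the standalone binary is used only as a fallback.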

|

||||

|

||||

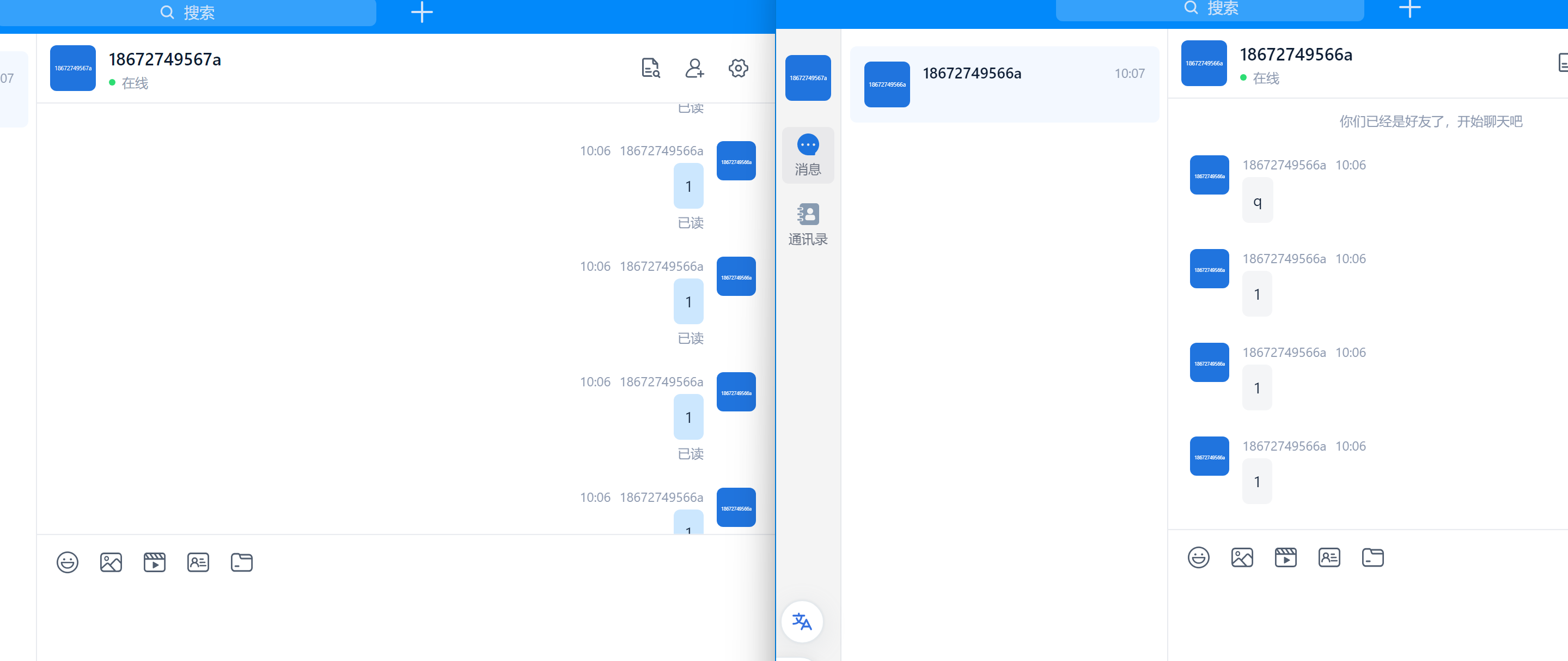

#### 1.3.5. <a name='Step3:UsetheOpenIMWebInterface'></a>Step 3: Use the OpenIM Web Interface

|

||||

|

||||

+ Open a browser in private mode and access [OpenIM Web](http://localhost:11001/).

|

||||

+ Register two users and try adding friends.

|

||||

+ Test sending messages and pictures.

|

||||

|

||||

#### 1.3.6. <a name='RunningEffect'></a>Running Effect

|

||||

|

||||

|

||||

|

||||

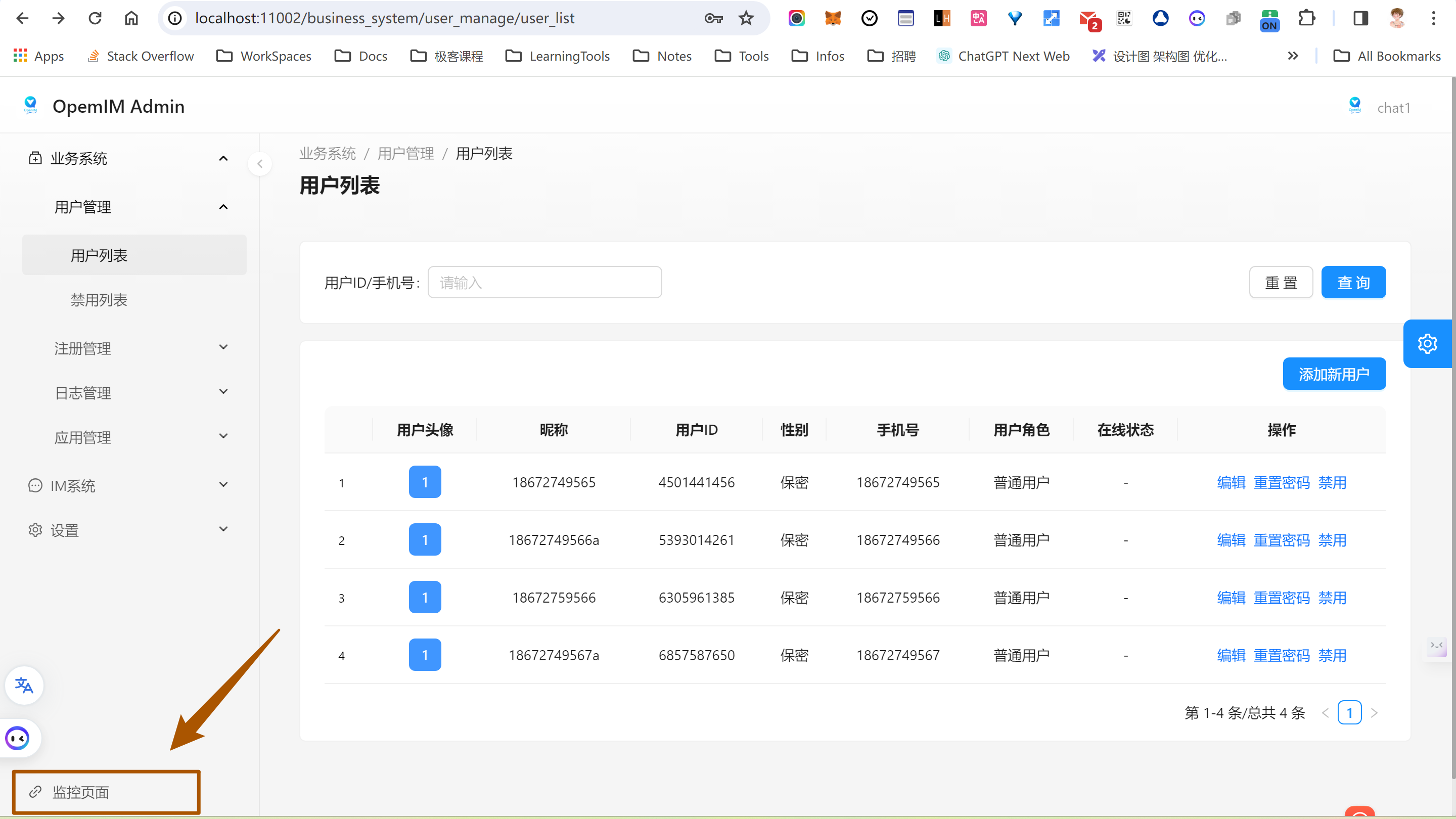

#### 1.3.7. <a name='Step4:AccesstheAdminPanel'></a>Step 4: Access the Admin Panel

|

||||

|

||||

+ Access the [OpenIM Admin Panel](http://localhost:11002/).

|

||||

+ Log in using the default username and password (`admin1:admin1`).

|

||||

|

||||

Running Effect Image:

|

||||

|

||||

|

||||

|

||||

#### 1.3.8. <a name='Step5:AccesstheMonitoringInterface'></a>Step 5: Access the Monitoring Interface

|

||||

|

||||

+ Log in to the [Monitoring Interface](http://localhost:3000/login) using the credentials (`admin:admin`).

|

||||

|

||||

#### 1.3.9. <a name='NextSteps'></a>Next Steps

|

||||

|

||||

+ Configure and manage the services following the steps provided in the OpenIM source code.

|

||||

+ Refer to the `README` file for advanced configuration and management.

|

||||

|

||||

#### 1.3.10. <a name='Troubleshooting'></a>Troubleshooting

|

||||

|

||||

+ If you encounter any issues, please check the documentation on [OpenIM Docker GitHub](https://github.com/openimsdk/openim-docker) or search for related issues in the Issues section.

|

||||

+ If the problem persists, you can create an issue on the [openim-docker](https://github.com/openimsdk/openim-docker/issues/new/choose) repository or the [openim-server](https://github.com/openimsdk/open-im-server/issues/new/choose) repository.

## 2. <a name='Kubernetes'></a>Kubernetes

Refer to [openimsdk/helm-charts](https://github.com/openimsdk/helm-charts).

When deploying and monitoring OpenIM in a Kubernetes environment, you will focus on three main categories of metrics: middleware, custom OpenIM metrics, and Node Exporter. Detailed steps and guidelines follow.

### 2.1. <a name='MiddlewareMonitoring'></a>Middleware Monitoring

Middleware monitoring is crucial to ensure the overall system's stability. Typically, this includes monitoring the following components:

+ **MySQL**: Monitor database performance, query latency, and more.
+ **Redis**: Track operation latency, memory usage, and more.
+ **MongoDB**: Observe database operations, resource usage, and more.
+ **Kafka**: Monitor message throughput, latency, and more.
+ **Zookeeper**: Keep an eye on cluster status, performance metrics, and more.

For Kubernetes environments, you can use the corresponding Prometheus Exporters to collect monitoring data for these middleware components.

### 2.2. <a name='CustomOpenIMMetrics'></a>Custom OpenIM Metrics

Custom OpenIM metrics provide essential information about the OpenIM application itself, such as user activity, message traffic, and system performance. To monitor these metrics in Kubernetes:

+ Ensure that the OpenIM application configuration exposes Prometheus metrics.
+ When deploying using Helm charts (refer to [OpenIM Helm Charts](https://github.com/openimsdk/helm-charts)), make sure the relevant monitoring settings are configured.

### 2.3. <a name='NodeExporter'></a>Node Exporter

Node Exporter collects hardware and operating-system-level metrics for Kubernetes nodes, such as CPU, memory, and disk usage. To integrate Node Exporter in Kubernetes:

+ Deploy Node Exporter using the appropriate Helm chart. You can find information and guides on [Prometheus Community](https://prometheus.io/docs/guides/node-exporter/).
+ Ensure Node Exporter's data is collected by the Prometheus instances within your cluster.

## 3. <a name='SettingUpandConfiguringAlertManagerUsingEnvironmentVariablesandmakeinit'></a>Setting Up and Configuring AlertManager Using Environment Variables and `make init`

### 3.1. <a name='Introduction-1'></a>Introduction

AlertManager, a component of the Prometheus monitoring system, handles alerts sent by client applications such as the Prometheus server. It takes care of deduplicating, grouping, and routing them to the correct receiver. This document outlines how to set up and configure AlertManager using environment variables and the `make init` command. We will focus on configuring key fields like the sender's email, SMTP settings, and SMTP authentication password.

### 3.2. <a name='Prerequisites-1'></a>Prerequisites

+ Basic knowledge of terminal and command-line operations.
+ AlertManager installed on your system.
+ Access to an SMTP server for sending emails.

### 3.3. <a name='ConfigurationSteps'></a>Configuration Steps

#### 3.3.1. <a name='ExportingEnvironmentVariables'></a>Exporting Environment Variables

Before initializing AlertManager, you need to set environment variables. These variables configure AlertManager without altering the code. Set them with the `export` command in your terminal. Here are some key variables you might set:

+ `export ALERTMANAGER_RESOLVE_TIMEOUT='5m'`
+ `export ALERTMANAGER_SMTP_FROM='alert@example.com'`
+ `export ALERTMANAGER_SMTP_SMARTHOST='smtp.example.com:465'`
+ `export ALERTMANAGER_SMTP_AUTH_USERNAME='alert@example.com'`
+ `export ALERTMANAGER_SMTP_AUTH_PASSWORD='your_password'`
+ `export ALERTMANAGER_SMTP_REQUIRE_TLS='false'`
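
The AlertManager template added in this commit also references `ALERTMANAGER_SMTP_HELLO` and `ALERTMANAGER_EMAIL_TO`, which are not in the list above. As a minimal sketch, assuming all eight variables should be non-empty before initialization, the helper below reports any that are missing; `missing_alertmanager_vars` is a hypothetical name and not part of the OpenIM scripts.

```bash
#!/usr/bin/env bash
# Print the AlertManager variables that are unset or empty; return 1 if any.
# missing_alertmanager_vars is a hypothetical helper name for this sketch.
missing_alertmanager_vars() {
  local name missing=()
  for name in \
    ALERTMANAGER_RESOLVE_TIMEOUT \
    ALERTMANAGER_SMTP_FROM \
    ALERTMANAGER_SMTP_SMARTHOST \
    ALERTMANAGER_SMTP_AUTH_USERNAME \
    ALERTMANAGER_SMTP_AUTH_PASSWORD \
    ALERTMANAGER_SMTP_REQUIRE_TLS \
    ALERTMANAGER_SMTP_HELLO \
    ALERTMANAGER_EMAIL_TO
  do
    # ${!name:-} is indirect expansion: the value of the variable named by $name.
    [ -n "${!name:-}" ] || missing+=("$name")
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    printf '%s\n' "${missing[@]}"
    return 1
  fi
}
```

Running `missing_alertmanager_vars` before initialization gives a quick list of what still needs to be exported.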

#### 3.3.2. <a name='InitializingAlertManager'></a>Initializing AlertManager

After setting the necessary environment variables, you can initialize AlertManager by running the `make init` command. This command typically runs a script that prepares AlertManager with the provided configuration.
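
To illustrate what such a preparation step could look like, the sketch below replaces `${NAME}` placeholders, like those in the AlertManager template in this commit, with the values of the matching environment variables. It is an assumption about the general technique, not the script that `make init` actually runs, and `render_template` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Read a template on stdin and replace every ${NAME} placeholder with the
# value of the variable NAME (empty if unset). Values are assumed not to
# contain ${...} placeholders themselves.
# render_template is a hypothetical helper, not the OpenIM init script.
render_template() {
  local re='[$][{]([A-Za-z_][A-Za-z0-9_]*)[}]'
  local line name pat val
  while IFS= read -r line || [ -n "$line" ]; do
    while [[ "$line" =~ $re ]]; do
      name="${BASH_REMATCH[1]}"
      pat="\${${name}}"        # the literal text ${NAME}
      val="${!name:-}"         # indirect expansion of NAME
      line=${line//"$pat"/"$val"}
    done
    printf '%s\n' "$line"
  done
}

# Usage (file names are placeholders):
#   render_template < alertmanager.template.yml > alertmanager.yml
```

Lines without placeholders, such as the Go template expression in the `html` field, pass through unchanged because `{{ ... }}` does not match the `${NAME}` pattern.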

#### 3.3.3. <a name='KeyConfigurationFields'></a>Key Configuration Fields

##### a. Sender's Email (`ALERTMANAGER_SMTP_FROM`)

This variable sets the email address that will appear as the sender in the notifications sent by AlertManager.

##### b. SMTP Configuration

+ **SMTP Server (`ALERTMANAGER_SMTP_SMARTHOST`):** Specifies the address and port of the SMTP server used for sending emails.
+ **SMTP Authentication Username (`ALERTMANAGER_SMTP_AUTH_USERNAME`):** The username for authenticating with the SMTP server.
+ **SMTP Authentication Password (`ALERTMANAGER_SMTP_AUTH_PASSWORD`):** The password for SMTP server authentication. It's crucial to keep this value secure.

#### 3.3.4. <a name='ConfiguringSMTPAuthenticationPassword'></a>Configuring SMTP Authentication Password

The SMTP authentication password is set through the `ALERTMANAGER_SMTP_AUTH_PASSWORD` environment variable. Set it in a way that avoids exposing sensitive information. For instance, you might read the password from a secure file or a secret management tool.
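
Reading the password from a file could look like the following minimal sketch. The helper name `load_smtp_password` and any file path passed to it are hypothetical; the only name taken from this guide is `ALERTMANAGER_SMTP_AUTH_PASSWORD`.

```bash
#!/usr/bin/env bash
# Export ALERTMANAGER_SMTP_AUTH_PASSWORD from the first line of a file.
# load_smtp_password is a hypothetical helper name for this sketch.
load_smtp_password() {
  local file="$1" password
  if [ ! -r "$file" ]; then
    echo "cannot read password file: $file" >&2
    return 1
  fi
  # Accept a final line without a trailing newline; reject an empty file.
  IFS= read -r password < "$file" || [ -n "$password" ] || return 1
  export ALERTMANAGER_SMTP_AUTH_PASSWORD="$password"
}

# Usage (the path is a placeholder; keep the file readable only by its owner):
#   load_smtp_password "$HOME/.config/openim/smtp_password"
```

Keeping the password in a file with restrictive permissions avoids typing it on the command line, where it could end up in shell history.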

#### 3.3.5. <a name='UsefulLinksforCommonEmailServers'></a>Useful Links for Common Email Servers

For specific configurations related to common email servers, you may refer to their respective documentation:

+ Gmail SMTP Settings:
  + [Gmail SMTP Configuration](https://support.google.com/mail/answer/7126229?hl=en)
+ Microsoft Outlook SMTP Settings:
  + [Outlook Email Settings](https://support.microsoft.com/en-us/office/pop-imap-and-smtp-settings-8361e398-8af4-4e97-b147-6c6c4ac95353)
+ Yahoo Mail SMTP Settings:
  + [Yahoo SMTP Configuration](https://help.yahoo.com/kb/SLN4724.html)

### 3.4. <a name='Conclusion'></a>Conclusion

Setting up and configuring AlertManager with environment variables provides a flexible and secure way to manage alert settings. By following the above steps, you can easily configure AlertManager for your monitoring needs. Always secure sensitive information, especially SMTP authentication credentials.
