[](https://youtu.be/pqqsm5reGvs)

## Pre-Lecture Quiz

[Pre-lecture quiz]()

@ -75,9 +76,53 @@ As we have already mentioned - data is everywhere, we just need to capture it in

There are many possible sources of data, and it will be impossible to list all of them! However, let's mention some of the typical places where you can get data:

* **Structured**

- **Internet of Things**, including data from different sensors, such as temperature or pressure sensors, provides a lot of useful data. For example, if an office building is equipped with IoT sensors, we can automatically control heating and lighting in order to minimize costs.

- **Surveys** that we ask users to complete after a purchase, or after visiting a web site.

- **Analysis of behavior** can, for example, help us understand how deeply a user goes into a site, and what the typical reason for leaving the site is.

* **Unstructured**

- **Texts** can be a rich source of insights, starting from overall **sentiment score**, up to extracting keywords and even some semantic meaning.

- **Images** or **Video**. A video from a surveillance camera can be used to estimate traffic on the road, and inform people about potential traffic jams.

- Web server **Logs** can be used to understand which pages of our site are most visited, and for how long.

* **Semi-structured**

- A **Social Network** graph can be a great source of data about user personality and potential effectiveness in spreading information around.

- When we have a bunch of photographs from a party, we can try to extract **Group Dynamics** data by building a graph of people taking pictures with each other.

By knowing the different possible sources of data, you can think about scenarios where data science techniques can be applied to understand a situation better, and to improve business processes.
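As a minimal sketch of the web server log idea from the list above, the snippet below counts successful page visits per path. The log layout and the sample lines are made up for illustration; real server logs need a parser matched to their actual format.

```python
from collections import Counter

# Hypothetical, simplified log lines: "<ip> <timestamp> <method> <path> <status>"
SAMPLE_LOG = """\
10.0.0.1 2021-05-01T10:00:00 GET /index.html 200
10.0.0.2 2021-05-01T10:00:05 GET /products.html 200
10.0.0.1 2021-05-01T10:01:10 GET /products.html 200
10.0.0.3 2021-05-01T10:02:00 GET /missing.html 404
10.0.0.2 2021-05-01T10:03:30 GET /index.html 200
10.0.0.4 2021-05-01T10:04:00 GET /products.html 200
"""


def count_page_visits(log_text):
    """Count successful (status 200) requests per path."""
    visits = Counter()
    for line in log_text.splitlines():
        parts = line.split()
        if len(parts) != 5:
            continue  # skip lines that do not match the assumed layout
        _ip, _timestamp, _method, path, status = parts
        if status == "200":
            visits[path] += 1
    return visits


visits = count_page_visits(SAMPLE_LOG)
for path, n in visits.most_common():
    print(path, n)
```

Measuring how long visitors stay on a page would additionally require grouping requests by visitor and comparing timestamps, which this sketch leaves out.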

## What you can do with Data

In Data Science, we focus on the following steps of the data journey:

<dl>

<dt>Data Acquisition</dt>

<dd>

The first step is to collect the data. While in many cases it can be a straightforward process, like data coming to a database from a web application, sometimes we need to use special techniques. For example, data from IoT sensors can be overwhelming, and it is good practice to use buffering endpoints such as IoT Hub to collect all the data before further processing.

</dd>

<dt>Data Storage</dt>

<dd>

Storing the data can be challenging, especially if we are talking about big data. When deciding how to store data, it makes sense to anticipate how you will want to query it later. There are several ways data can be stored:

<ul>

<li>A relational database stores a collection of tables, and uses a special language called SQL to query them. Typically, tables are connected to each other using a schema. In many cases we need to convert the data from its original form to fit the schema.</li>

<li>A <a href="https://en.wikipedia.org/wiki/NoSQL">NoSQL</a> database, such as <a href="https://azure.microsoft.com/services/cosmos-db/?WT.mc_id=acad-31812-dmitryso">CosmosDB</a>, does not enforce a schema on data, and allows storing more complex data, for example, hierarchical JSON documents or graphs. However, a NoSQL database typically does not have the rich querying capabilities of SQL, and does not enforce referential integrity between data.</li>

<li><a href="https://en.wikipedia.org/wiki/Data_lake">Data Lake</a> storage is used for large collections of data in raw form. Data lakes are often used with big data, where the data cannot fit on one machine, and has to be stored and processed by a cluster. <a href="https://en.wikipedia.org/wiki/Apache_Parquet">Parquet</a> is a data format that is often used in conjunction with big data.</li>

</ul>

</dd>

<dt>Data Processing</dt>

<dd>

This is the most exciting part of the data journey, which involves converting the data from its original form into a form that can be used for visualization or model training. When dealing with unstructured data such as text or images, we may need to use some AI techniques to extract **features** from the data, thus converting it to structured form.

</dd>

<dt>Visualization / Human Insights</dt>

<dd>

Often, to understand the data, we need to visualize it. With many different visualization techniques in our toolbox, we can find the right view to gain an insight. Often, a data scientist needs to "play with data", visualizing it many times and looking for relationships. We may also use techniques from statistics to test hypotheses or establish correlations between different pieces of data.

</dd>

<dt>Training predictive model</dt>

<dd>

Because the ultimate goal of data science is to be able to make decisions based on data, we may want to use the techniques of <a href="http://github.com/microsoft/ml-for-beginners">Machine Learning</a> to build a predictive model that will be able to solve our problem.

</dd>

</dl>
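To make the storage options above more concrete, here is a small sketch that keeps the same information in a relational form (two tables queried with SQL) and in a document form (one hierarchical JSON document). The table names, columns and values are invented for illustration, and Python's built-in `sqlite3` and `json` modules stand in for a real database server or document store.

```python
import json
import sqlite3

# Relational form: two tables linked by a key, queried with SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, "
    "user_id INTEGER REFERENCES users(id), "
    "amount REAL)"
)
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 1, 20.0), (2, 1, 5.5), (3, 2, 12.0)],
)
totals = conn.execute(
    "SELECT u.name, SUM(o.amount) "
    "FROM users u JOIN orders o ON o.user_id = u.id "
    "GROUP BY u.name ORDER BY u.name"
).fetchall()
print(totals)

# Document form: one hierarchical JSON document per user, no fixed schema.
doc = {"id": 1, "name": "Ann", "orders": [{"amount": 20.0}, {"amount": 5.5}]}
restored = json.loads(json.dumps(doc))
ann_total = sum(order["amount"] for order in restored["orders"])
print(ann_total)
```

The relational version answers the question with a single declarative query across tables, while the document version keeps related data together but leaves aggregation to application code.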

Of course, depending on the actual data, some steps might be missing (e.g., when we already have the data in the database, or when we do not need model training), or some steps might be repeated several times (such as data processing).
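The data processing step described above, turning unstructured text into structured features, can be sketched as follows. The word lists are a tiny made-up lexicon and the "sentiment" value is a naive count difference, not a real sentiment model; the function and field names are hypothetical.

```python
import re
from collections import Counter

# Tiny made-up sentiment lexicon, for illustration only.
POSITIVE = {"good", "great", "love"}
NEGATIVE = {"bad", "slow", "broken"}


def extract_features(text):
    """Convert free text into a small, structured feature record."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    n_positive = sum(counts[w] for w in POSITIVE)
    n_negative = sum(counts[w] for w in NEGATIVE)
    return {
        "n_words": len(words),
        "n_positive": n_positive,
        "n_negative": n_negative,
        "sentiment": n_positive - n_negative,
    }


reviews = [
    "Great product, I love it!",
    "Delivery was slow and the box was broken.",
]
table = [extract_features(review) for review in reviews]
for row in table:
    print(row)
```

Each review becomes one row with the same columns, which is the structured form that visualization or model training can work with.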

## Digitalization and Digital Transformation

@ -102,8 +147,6 @@ In this challenge, we will try to find concepts relevant to the field of Data Sc

[Post-lecture quiz]()

## Review & Self Study

## Assignment

[Assignment Title](assignment.md)

[Think About Data Science Scenarios](assignment.md)

We are all data citizens living in a datafied world.

Market trends tell us that by 2022, 1-in-3 large organizations will buy and sell their data through online [Marketplaces and Exchanges](https://www.gartner.com/smarterwithgartner/gartner-top-10-trends-in-data-and-analytics-for-2020/). As **App Developers**, we'll find it easier and cheaper to integrate data-driven insights and algorithm-driven automation into daily user experiences. But as AI becomes pervasive, we'll also need to understand the potential harms caused by the [weaponization](https://www.youtube.com/watch?v=TQHs8SA1qpk) of such algorithms at scale.

Trends also indicate that we will create and consume over [180 zettabytes](https://www.statista.com/statistics/871513/worldwide-data-created/) of data by 2025. As **Data Scientists**, this gives us unprecedented levels of access to personal data. This means we can build behavioral profiles of users and influence decision-making in ways that create an [illusion of free choice](https://www.datasciencecentral.com/profiles/blogs/the-illusion-of-choice) while potentially nudging users towards outcomes we prefer. It also raises broader questions on data privacy and user protections.

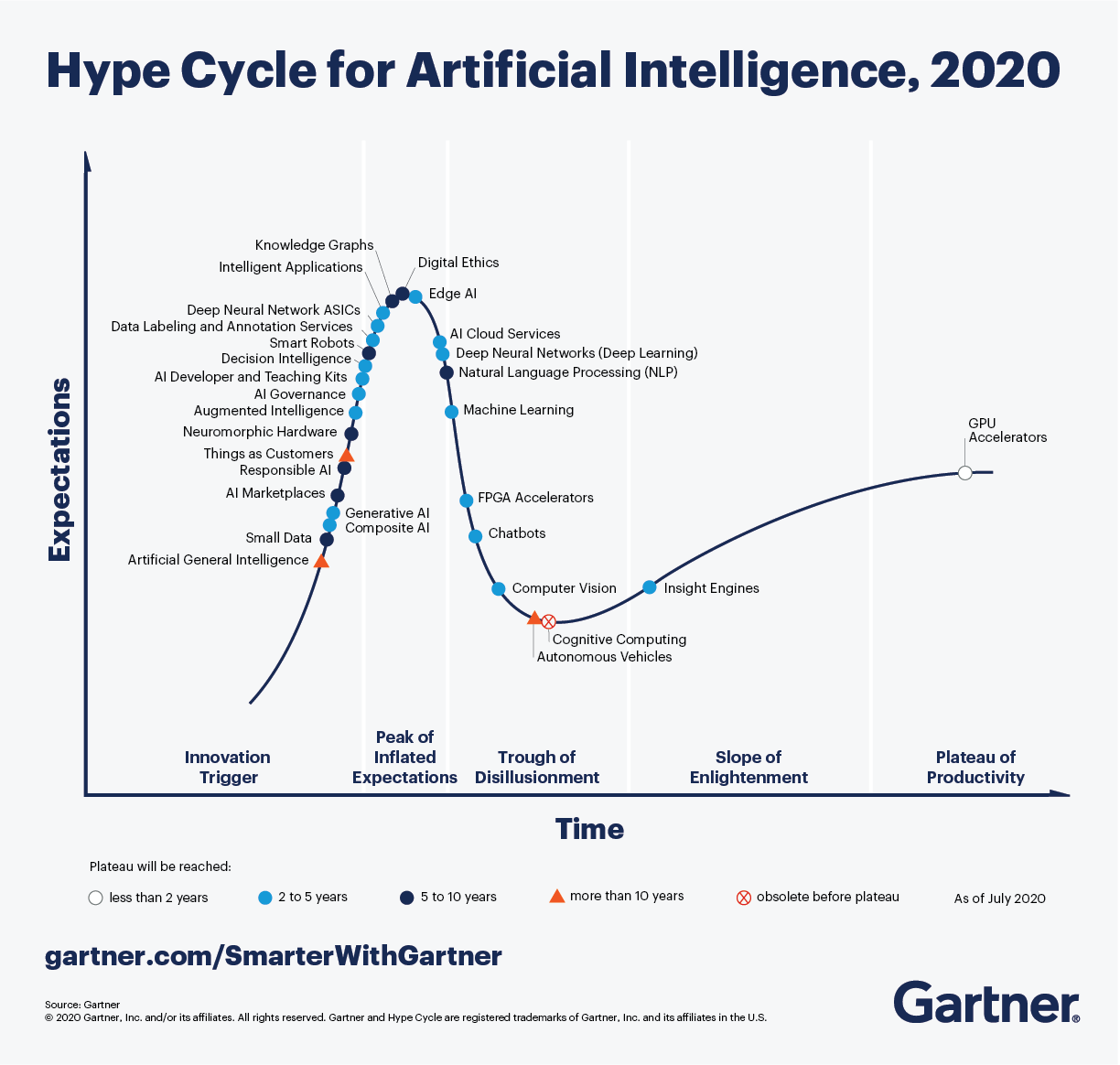

Data ethics are now _necessary guardrails_ for data science and engineering, helping us minimize potential harms and unintended consequences from our data-driven actions. The [Gartner Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/) identifies relevant trends in digital ethics, responsible AI and AI governance as key drivers for larger megatrends around _democratization_ and _industrialization_ of AI.

In this lesson, we'll explore the fascinating area of data ethics - from core concepts and challenges, to case studies and applied AI concepts like governance - that help establish an ethics culture in teams and organizations that work with data and AI.

## Pre-Lecture Quiz 🎯

@ -9,122 +22,186 @@

> A Visual Guide to Data Ethics by [Nitya Narasimhan](https://twitter.com/nitya) / [(@sketchthedocs)](https://sketchthedocs.dev)

## 1. Introduction

---

## Basic Definitions

Let's start by understanding the basic terminology.

The word "ethics" comes from the [Greek word "ethikos"](https://en.wikipedia.org/wiki/Ethics) (and its root "ethos") meaning _character or moral nature_.

**Ethics** is about the shared values and moral principles that govern our behavior in society. Ethics is based not on laws but on widely-accepted norms of what is "right vs. wrong". However, ethical considerations can influence corporate governance initiatives and government regulations that create more incentives for compliance.

**Data Ethics** is a [new branch of ethics](https://royalsocietypublishing.org/doi/full/10.1098/rsta.2016.0360#sec-1) that "studies and evaluates moral problems related to _data, algorithms and corresponding practices_". Here, **"data"** focuses on actions related to generation, recording, curation, processing, dissemination, sharing and usage, **"algorithms"** focuses on AI, agents, machine learning and robots, and **"practices"** focuses on topics like responsible innovation, programming, hacking and ethics codes.

**Applied Ethics** is the [practical application of moral considerations](https://en.wikipedia.org/wiki/Applied_ethics). It's the process of actively investigating ethics issues in the context of _real-world actions, products and processes_, and taking corrective measures to make sure that these remain aligned with our defined ethical values.

**Ethics Culture** is about [_operationalizing_ applied ethics](https://hbr.org/2019/05/how-to-design-an-ethical-organization) to make sure that our ethical principles and practices are adopted in a consistent and scalable manner across the entire organization. Successful ethics cultures define organization-wide ethical principles, provide meaningful incentives for compliance, and reinforce ethics norms by encouraging and amplifying desired behaviors at every level of the organization.

## Ethics Concepts

In this section we'll discuss concepts like **shared values** (principles) and **ethical challenges** (problems) for data ethics - and explore **case studies** that help you understand these concepts in real-world contexts.

### 1. Ethics Principles

Every data ethics strategy begins by defining _ethical principles_ - the "shared values" that describe acceptable behaviors, and guide compliant actions, in our data & AI projects. You can define these at an individual or team level. However, most large organizations outline these in an _ethical AI_ mission statement or framework that is defined at the corporate level and enforced consistently across all teams.

This lesson will look at the field of _data ethics_ - from core concepts (ethical challenges & societal consequences) to applied ethics (ethical principles, practices and culture). Let's start with the basics: definitions and motivations.

**Example:** Microsoft's [Responsible AI](https://www.microsoft.com/en-us/ai/responsible-ai) mission statement reads: _"We are committed to the advancement of AI driven by ethical principles that put people first"_ - identifying 6 ethical principles in the framework below:

### 1.1 Definitions

**Ethics** [comes from the Greek word "ethikos" and its root "ethos"](https://en.wikipedia.org/wiki/Ethics). It refers to the set of _shared values and moral principles_ that govern our behavior in society and is based on widely-accepted ideas of _right vs. wrong_. Ethics are not laws! They can't be legally enforced but they can influence corporate initiatives and government regulations that help with compliance and governance.

Let's briefly explore these principles. _Transparency_ and _accountability_ are foundational values that other principles build upon - so let's begin there:

**Data Ethics** is [defined as a new branch of ethics](https://royalsocietypublishing.org/doi/full/10.1098/rsta.2016.0360#sec-1) that "studies and evaluates moral problems related to _data, algorithms and corresponding practices_ .. to formulate and support morally good solutions" where:

* `data` = generation, recording, curation, dissemination, sharing and usage

* `algorithms` = AI, machine learning, bots

* `practices` = responsible innovation, ethical hacking, codes of conduct

* [**Accountability**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) makes practitioners _responsible_ for their data & AI operations, and for compliance with these ethical principles.

* [**Transparency**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) ensures that data and AI actions are _understandable_ (interpretable) to users, explaining the what and why behind decisions.

* [**Fairness**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1%3aprimaryr6) focuses on ensuring AI treats _all people_ fairly, addressing any systemic or implicit socio-technical biases in data and systems.

* [**Reliability & Safety**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) ensures that AI behaves _consistently_ with defined values, minimizing potential harms or unintended consequences.

* [**Privacy & Security**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) is about understanding data lineage, and providing _data privacy and related protections_ to users.

* [**Inclusiveness**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) is about designing AI solutions with intention, adapting them to meet a _broad range of human needs_ & capabilities.

**Applied Ethics** is the [_practical application of moral considerations_](https://en.wikipedia.org/wiki/Applied_ethics). It focuses on understanding how ethical issues impact real-world actions, products and processes, by asking moral questions - like _"is this fair?"_ and _"how can this harm individuals or society as a whole?"_ - when working with big data and AI algorithms. Applied ethics practices can then focus on taking corrective measures - like employing checklists (_"did we test data model accuracy with diverse groups, for fairness?"_) - to minimize or prevent any unintended consequences.

> 🚨 Think about what your data ethics mission statement could be. Explore ethical AI frameworks from other organizations - here are examples from [IBM](https://www.ibm.com/cloud/learn/ai-ethics), [Google](https://ai.google/principles) and [Facebook](https://ai.facebook.com/blog/facebooks-five-pillars-of-responsible-ai/). What shared values do they have in common? How do these principles relate to the AI product or industry they operate in?

**Ethics Culture**: Applied ethics focuses on identifying moral questions and adopting ethically-motivated actions with respect to real-world scenarios and projects. Ethics culture is about _operationalizing_ these practices, collaboratively and at scale, to ensure governance at the scale of organizations and industries. [Establishing an ethics culture](https://hbr.org/2019/05/how-to-design-an-ethical-organization) requires identifying and addressing _systemic_ issues (historical or ingrained) and creating norms & incentives that keep members accountable for adherence to ethical principles.

### 2. Ethics Challenges

Once we have ethics principles defined, the next step is to evaluate our data and AI actions to see if they align with those shared values. Think about your actions in two categories: _data collection_ and _algorithm design_.

### 1.2 Motivation

With data collection, actions will likely involve **personal data** or personally identifiable information (PII) for identifiable living individuals. This includes [diverse items of non-personal data](https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en) that _collectively_ identify an individual. Ethical challenges can relate to _data privacy_, _data ownership_, and related topics like _informed consent_ and _intellectual property rights_ for users.

Let's look at some emerging trends in big data and AI:

With algorithm design, actions will involve collecting & curating **datasets**, then using them to train & deploy **data models** that predict outcomes or automate decisions in real-world contexts. Ethical challenges can arise from _dataset bias_, _data quality_ issues, _unfairness_ and _misrepresentation_ in algorithms - including some issues that are systemic in nature.

* [By 2022](https://www.gartner.com/smarterwithgartner/gartner-top-10-trends-in-data-and-analytics-for-2020/) one-in-three large organizations will buy and sell data via online Marketplaces and Exchanges.

* [By 2025](https://www.statista.com/statistics/871513/worldwide-data-created/) we'll be creating and consuming over 180 zettabytes of data.

In both cases, ethics challenges highlight areas where our actions may conflict with our shared values. To detect, mitigate, minimize, or eliminate these concerns, we need to ask moral "yes/no" questions related to our actions, then take corrective actions as needed. Let's take a look at some ethical challenges and the moral questions they raise:

**Data scientists** will have unimaginable levels of access to personal and behavioral data, helping them develop the algorithms to fuel an AI-driven economy. This raises data ethics issues around _protection of data privacy_ with implications for individual rights around personal data collection and usage.

**App developers** will find it easier and cheaper to integrate AI into everyday consumer experiences, thanks to the economies of scale and efficiencies of distribution in centralized exchanges. This raises ethical issues around the [_weaponization of AI_](https://www.youtube.com/watch?v=TQHs8SA1qpk) with implications for societal harms caused by unfairness, misrepresentation and systemic biases.

#### 2.1 Data Ownership

**Democratization and Industrialization of AI** are seen as the two megatrends in Gartner's 2020 [Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/), shown below. The first positions developers to be a major force in driving increased AI adoption, while the second makes responsible AI and governance a priority for industries.

Data collection often involves personal data that can identify the data subjects. [Data ownership](https://permission.io/blog/data-ownership) is about _control_ and [_user rights_](https://permission.io/blog/data-ownership) related to the creation, processing and dissemination of data.

The moral questions we need to ask are:

* Who owns the data? (user or organization)

* What rights do data subjects have? (ex: access, erasure, portability)

* What rights do organizations have? (ex: rectify malicious user reviews)

Data ethics are now **necessary guardrails** ensuring developers ask the right moral questions and adopt the right practices (to uphold ethical values). And they influence the regulations and frameworks defined (for governance) by governments and organizations.

[Informed consent](https://legaldictionary.net/informed-consent/) defines the act of users agreeing to an action (like data collection) with a _full understanding_ of relevant facts including the purpose, potential risks and alternatives.

Questions to explore here are:

* Did the user (data subject) give permission for data capture and usage?

* Did the user understand the purpose for which that data was captured?

* Did the user understand the potential risks from their participation?

## 2. Core Concepts

#### 2.3 Intellectual Property

A data ethics culture requires an understanding of three things: the _shared values_ we embrace as a society, the _moral questions_ we ask (to ensure adherence to those values), and the potential _harms & consequences_ (of non-adherence).

[Intellectual property](https://en.wikipedia.org/wiki/Intellectual_property) refers to intangible creations resulting from human initiative, that may _have economic value_ to individuals or businesses.

### 2.1 Ethical AI Values

Questions to explore here are:

* Did the collected data have economic value to a user or business?

* Does the **user** have intellectual property here?

* Does the **organization** have intellectual property here?

* If these rights exist, how are we protecting them?

Our shared values reflect our ideas of wrong-vs-right when it comes to big data and AI. Different organizations have their own views of what responsible AI and ethical AI principles look like.

#### 2.4 Data Privacy

Here is an example - the [Responsible AI Framework](https://docs.microsoft.com/en-gb/azure/cognitive-services/personalizer/media/ethics-and-responsible-use/ai-values-future-computed.png) from Microsoft defines 6 core ethics principles for all products and processes to follow, when implementing AI solutions:

[Data privacy](https://www.northeastern.edu/graduate/blog/what-is-data-privacy/) or information privacy refers to preservation of user privacy and protection of user identity with respect to personally-identifiable information.

* **Accountability**: ensure AI designers & developers take _responsibility_ for its operation.

* **Transparency**: make AI operations and decisions _understandable_ to users.

* **Fairness**: understand biases and ensure AI _behaves comparably_ across target groups.

* **Reliability & Safety**: make sure AI behaves consistently, and _without malicious intent_.

* **Security & Privacy**: get _informed consent_ for data collection, provide data privacy controls.

* **Inclusiveness**: adapt AI behaviors to _broad range of human needs_ and capabilities.

Questions to explore here are:

* Is users' personal data secured against hacks and leaks?

* Is users' data accessible only to authorized users and contexts?

* Is users' anonymity preserved when data is shared or disseminated?

* Can a user be re-identified from anonymized datasets?
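One way to probe the anonymity questions above is a k-anonymity check: after names are removed, count how many records share each combination of "quasi-identifier" fields. This technique is not named in the lesson; it is offered here as one possible illustration. The records and field names below are invented, and a real assessment would also have to consider what external datasets an attacker could join against.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1985, "gender": "F"},
    {"zip": "02139", "birth_year": 1985, "gender": "F"},
    {"zip": "02139", "birth_year": 1990, "gender": "M"},
    {"zip": "02140", "birth_year": 1985, "gender": "F"},
    {"zip": "02140", "birth_year": 1985, "gender": "F"},
]
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def k_anonymity(rows, keys):
    """Return the smallest group size (k) and the groups that are unique."""
    groups = Counter(tuple(row[key] for key in keys) for row in rows)
    unique = [group for group, size in groups.items() if size == 1]
    return min(groups.values()), unique


k, unique_groups = k_anonymity(records, QUASI_IDENTIFIERS)
print("k =", k)
print("unique combinations:", unique_groups)
```

A result of `k = 1` means at least one person is the only record with their combination of attributes, so linking against another dataset that contains the same attributes plus a name could single them out.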

Note that accountability and transparency are _cross-cutting_ concerns that are foundational to the top 4 values, and can be explored in their contexts. In the next section we'll look at the ethical challenges (moral questions) raised in two core contexts:

#### 2.5 Right To Be Forgotten

* Data Privacy - focused on **personal data** collection & use, with consequences to individuals.

* Fairness - focused on **algorithm** design & use, with consequences to society at large.

The [Right To Be Forgotten](https://en.wikipedia.org/wiki/Right_to_be_forgotten) or [Right to Erasure](https://www.gdpreu.org/right-to-be-forgotten/) provides additional personal data protections to users. Specifically, it gives users the right to request deletion or removal of their personal data from Internet searches and other locations, _under specific circumstances_ - allowing them a fresh start online without past actions being held against them.

### 2.2 Ethics of Personal Data

Questions to explore here are:

* Does the system allow data subjects to request erasure?

* Should the withdrawal of user consent trigger automated erasure?

* Was data collected without consent or by unlawful means?

* Are we compliant with government regulations for data privacy?

[Personal data](https://en.wikipedia.org/wiki/Personal_data) or personally-identifiable information (PII) is _any data that relates to an identified or identifiable living individual_. It can also [extend to diverse pieces of non-personal data](https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en) that collectively can lead to the identification of a specific individual. Examples include: participant data from research studies, social media interactions, mobile & web app data, online commerce transactions and more.

Here are _some_ ethical concepts and moral questions to explore in context:

#### 2.6 Dataset Bias

* **Data Ownership**. Who owns the data - user or organization? How does this impact users' rights?

* **Informed Consent**. Did users give permissions for data capture? Did they understand purpose?

* **Intellectual Property**. Does data have economic value? What are the users' rights & controls?

* **Data Privacy**. Is data secured from hacks/leaks? Is anonymity preserved on data use or sharing?

* **Right to be Forgotten**. Can a user request that their data be deleted or removed to reclaim privacy?

Dataset or [Collection Bias](http://researcharticles.com/index.php/bias-in-data-collection-in-research/) is about selecting a _non-representative_ subset of data for algorithm development, creating potential unfairness in result outcomes for diverse groups. Types of bias include selection or sampling bias, volunteer bias, and instrument bias.

### 2.3 Ethics of Algorithms

Questions to explore here are:

* Did we recruit a representative set of data subjects?

* Did we test our collected or curated dataset for various biases?

* Can we mitigate or remove any discovered biases?
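A first, rough test for the representativeness question above is to compare each group's share in the collected sample with its share in the target population. The group names, reference shares, sample and the 5-point threshold below are all assumptions made up for this sketch.

```python
from collections import Counter

# Hypothetical reference shares for the target population.
population_share = {"urban": 0.6, "suburban": 0.3, "rural": 0.1}

# Hypothetical collected sample (one group label per data subject).
sample = ["urban"] * 80 + ["suburban"] * 18 + ["rural"] * 2


def representation_gaps(sample_groups, reference):
    """Sample share minus population share, per group."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {group: counts[group] / total - share for group, share in reference.items()}


gaps = representation_gaps(sample, population_share)
# Flag groups under-represented by more than 5 percentage points (arbitrary cutoff).
under_represented = [group for group, gap in gaps.items() if gap < -0.05]
print(gaps)
print(under_represented)
```

Groups flagged here are candidates for additional recruitment or reweighting; a formal statistical test would be needed before drawing firm conclusions from small samples.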

Algorithm design begins with collecting & curating datasets relevant to a specific AI problem or domain, then processing & analyzing it to create models that can help predict outcomes or automate decisions in real-world applications. Moral questions can now arise in various contexts, at any one of these stages.

#### 2.7 Data Quality

Here are _some_ ethical concepts and moral questions to explore in context:

[Data Quality](https://lakefs.io/data-quality-testing/) looks at the validity of the curated dataset used to develop our algorithms, checking to see if features and records meet requirements for the level of accuracy and consistency needed for our AI purpose.

* **Dataset Bias** - Is data representative of target audience? Have we checked for different [data biases](https://towardsdatascience.com/survey-d4f168791e57)?

* **Data Quality** - Does dataset and feature selection provide the required [data quality assurance](https://lakefs.io/data-quality-testing/)?

* **Algorithm Fairness** - Does the data model [systematically discriminate](https://towardsdatascience.com/what-is-algorithm-fairness-3182e161cf9f) against some subgroups?

* **Misrepresentation** - Are we [communicating honestly reported data in a deceptive manner?](https://www.sciencedirect.com/topics/computer-science/misrepresentation)

* **Explainable AI** - Are the results of AI [understandable by humans](https://en.wikipedia.org/wiki/Explainable_artificial_intelligence)? White-box (vs. black-box) models.

* **Free Choice** - Did the user exercise free will or did the algorithm nudge them towards a desired outcome?

Questions to explore here are:

* Did we capture valid _features_ for our use case?

* Was data captured _consistently_ across diverse data sources?

* Is the dataset _complete_ for diverse conditions or scenarios?

* Is information captured _accurate_ in reflecting reality?
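The completeness and accuracy questions above can be turned into simple automated checks. The sketch below counts missing and implausible values in one field; the sensor readings, the field name and the plausible range are assumptions chosen for illustration, and the function expects a non-empty list of rows.

```python
# Hypothetical sensor readings; None marks a missing value.
rows = [
    {"sensor": "a", "temp_c": 21.5},
    {"sensor": "b", "temp_c": None},
    {"sensor": "c", "temp_c": 250.0},  # implausible for an office
    {"sensor": "d", "temp_c": 19.0},
]


def quality_report(data, field, low, high):
    """Summarize missing and out-of-range values for one numeric field."""
    values = [row[field] for row in data]
    missing = sum(value is None for value in values)
    out_of_range = sum(
        value is not None and not (low <= value <= high) for value in values
    )
    return {
        "rows": len(values),
        "missing": missing,
        "out_of_range": out_of_range,
        "completeness": 1 - missing / len(values),
    }


report = quality_report(rows, "temp_c", low=-10.0, high=50.0)
print(report)
```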

### 2.3 Case Studies

#### 2.8 Algorithm Fairness

The above are a subset of the core ethical challenges posed for big data and AI. More organizations are defining and adopting _responsible AI_ or _ethical AI_ frameworks that may identify additional shared values and related ethical challenges for specific domains or needs.

[Algorithm Fairness](https://towardsdatascience.com/what-is-algorithm-fairness-3182e161cf9f) checks to see if the algorithm design systematically discriminates against specific subgroups of data subjects leading to [potential harms](https://docs.microsoft.com/en-us/azure/machine-learning/concept-fairness-ml) in _allocation_ (where resources are denied or withheld from that group) and _quality of service_ (where AI is not as accurate for some subgroups as it is for others).

To understand the potential _harms and consequences_ of neglecting or violating these data ethics principles, it helps to explore this in a real-world context. Here are some famous case studies and recent examples to get you started:

Questions to explore here are:

* Did we evaluate model accuracy for diverse subgroups and conditions?

* Did we scrutinize the system for potential harms (e.g., stereotyping)?

* Can we revise data or retrain models to mitigate identified harms?

Explore resources like [AI Fairness checklists](https://query.prod.cms.rt.microsoft.com/cms/api/am/binary/RE4t6dA) to learn more.
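The first question above, evaluating model accuracy for diverse subgroups, can be sketched in a few lines. The group labels and prediction results below are invented; in practice they would come from a held-out test set annotated with the relevant subgroup attribute.

```python
from collections import defaultdict

# Hypothetical (group, true_label, predicted_label) triples from a test set.
results = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 1, 0),
]


def accuracy_by_group(rows):
    """Fraction of correct predictions, computed separately per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in rows:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


accuracy = accuracy_by_group(results)
gap = max(accuracy.values()) - min(accuracy.values())
print(accuracy)
print("accuracy gap:", gap)
```

A large gap between groups is a signal of a possible _quality of service_ harm and a reason to revisit the data or retrain the model; accuracy is only one of several metrics that could be compared this way.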

* `1972`: The [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) is a landmark case study for **informed consent** in data science. African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died; some partners or children were affected by complications. The study lasted 40 years.

* `2007`: The Netflix data prize provided researchers with [_10M anonymized movie rankings from 50K customers_](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) to help improve recommendation algorithms. This became a landmark case study in **de-identification (data privacy)** where researchers were able to correlate the anonymized data with _other datasets_ (e.g., IMDb) that had personally identifiable information - helping them "de-anonymize" users.

* `2013`: The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. This became a case study for **collection bias** where [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to address _equitable access and digital divides_ issues for fairness.

* `2018`: The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. A [2019 Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) seemed to offer less credit to women than men. Both illustrated issues in **algorithmic fairness** and discrimination.

* `2020`: The [Georgia Department of Public Health released COVID-19 charts](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates **data misrepresentation** where honest data is presented dishonestly to support a desired narrative.

* `2020`: Learning app [ABCmouse paid $10M to settle an FTC complaint](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) where parents were trapped into paying for subscriptions they couldn't cancel. This highlights the **illusion of free choice** in algorithmic decision-making, and potential harms from dark patterns that exploit user insights.

* `2021`: Facebook [Data Breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users) exposed data from 530M users. Facebook declined to notify users of the breach - raising issues like **data privacy**, **data security** and **accountability**, including user rights to redress for those affected. (The company had separately agreed to a $5B settlement with the FTC in 2019 over earlier privacy violations.)

#### 2.9 Misrepresentation

Want to explore more case studies on your own? Check out these resources:

[Data Misrepresentation](https://www.sciencedirect.com/topics/computer-science/misrepresentation) is about asking whether we are communicating insights from honestly-reported data in a deceptive manner to support a desired narrative.

Questions to explore here are:

* Are we reporting incomplete or inaccurate data?

* Are we visualizing data in a manner that drives misleading conclusions?

* Are we using selective statistical techniques to manipulate outcomes?

* Are there alternative explanations that may offer a different conclusion?
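The visualization question above can be illustrated with the ordering of a time series. The daily counts below are invented; the sketch shows how sorting the same honest numbers by value instead of by date produces a sequence that looks like a steady decline, and how a simple check can catch a non-chronological axis before plotting.

```python
from datetime import date

# Hypothetical daily case counts.
daily_cases = [
    (date(2020, 5, 1), 120),
    (date(2020, 5, 2), 150),
    (date(2020, 5, 3), 90),
    (date(2020, 5, 4), 160),
    (date(2020, 5, 5), 140),
]

# Misleading: ordering by value makes the bars fall from left to right.
by_value = sorted(daily_cases, key=lambda point: point[1], reverse=True)

# Honest: ordering by date preserves the actual trend.
by_date = sorted(daily_cases, key=lambda point: point[0])


def is_chronological(points):
    """True if the x-axis dates appear in non-decreasing order."""
    dates = [day for day, _count in points]
    return dates == sorted(dates)


print(is_chronological(by_value))
print(is_chronological(by_date))
```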

#### 2.10 Free Choice

The [Illusion of Free Choice](https://www.datasciencecentral.com/profiles/blogs/the-illusion-of-choice) occurs when system "choice architectures" use decision-making algorithms to nudge people towards taking a preferred outcome, while seeming to give them options and control. These [dark patterns](https://www.darkpatterns.org/) can cause social and economic harms to users. Because user decisions impact behavior profiles, these actions potentially drive future choices that can amplify or extend the impact of these harms.

Questions to explore here are:

* Did the user understand the implications of making that choice?

* Was the user aware of alternative choices and the pros & cons of each?

* Can the user reverse an automated or influenced choice later?

### 3. Case Studies

To put these ethical challenges in real-world contexts, it helps to look at case studies that highlight the potential harms and consequences to individuals and society, when such ethics violations are overlooked.

Here are a few examples:

| Ethics Challenge | Case Study |
|--- |--- |
| **Informed Consent** | 1972 - [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) - African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died & partners or children were affected; the study lasted 40 years. |
| **Data Privacy** | 2007 - The [Netflix data prize](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) provided researchers with _10M anonymized movie rankings from 50K customers_ to help improve recommendation algorithms. However, researchers were able to correlate anonymized data with personally-identifiable data in _external datasets_ (e.g., IMDb comments) - effectively "de-anonymizing" some Netflix subscribers.|
| **Collection Bias** | 2013 - The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. However, [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to address _equitable access and digital divides_ issues for fairness. |
| **Algorithmic Fairness** | 2018 - The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. A [2019 Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) seemed to offer less credit to women than men. Both illustrated issues in algorithmic bias leading to socio-economic harms.|
| **Data Misrepresentation** | 2020 - The [Georgia Department of Public Health released COVID-19 charts](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates misrepresentation through visualization tricks. |
| **Illusion of free choice** | 2020 - Learning app [ABCmouse paid $10M to settle an FTC complaint](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) where parents were trapped into paying for subscriptions they couldn't cancel. This illustrates dark patterns in choice architectures, where users were nudged towards potentially harmful choices. |
| **Data Privacy & User Rights** | 2021 - Facebook [Data Breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users) exposed data from 530M users, resulting in a $5B settlement to the FTC. However, the company declined to notify users of the breach, violating user rights around data transparency and access. |

Want to explore more case studies? Check out these resources:

* [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - ethics dilemmas across diverse industries.

* [Data Science Ethics course](https://www.coursera.org/learn/data-science-ethics#syllabus) - landmark case studies in data ethics.

* [Where things have gone wrong](https://deon.drivendata.org/examples/) - deon checklist examples of ethical issues


## 3. Applied Ethics

> 🚨 Think about the case studies you've seen - have you experienced, or been affected by, a similar ethical challenge in your life? Can you think of at least one other case study that illustrates one of the ethical challenges we've discussed in this section?

We've learned about data ethics values, and the ethical challenges (+ moral questions) associated with adherence to these values. But how do we _implement_ these ideas in real-world contexts? Here are some tools & practices that can help.


### 3.1 Have Professional Codes

We've talked about ethics concepts, challenges and case studies in real-world contexts. But how do we get started _applying_ ethical principles and practices in our own projects? And how do we _operationalize_ these practices for better governance? Let's explore some real-world solutions:

Professional codes are _moral guidelines_ for professional behavior, helping employees or members _make decisions that align with organizational principles_. Codes may not be legally enforceable, making them only as good as the willing compliance of members. An organization may inspire adherence by imposing incentives & penalties accordingly.

### 1. Professional Codes

Professional _codes of conduct_ are prescriptive rules and responsibilities that members must follow to remain in good standing with an organization. A professional *code of ethics* is more [_aspirational_](https://keydifferences.com/difference-between-code-of-ethics-and-code-of-conduct.html), defining the shared values and ideas of the organization. The terms are sometimes used interchangeably.

Professional Codes offer one option for organizations to "incentivize" members to support their ethical principles and mission statement. Codes are _moral guidelines_ for professional behavior, helping employees or members make decisions that align with their organization's principles. They are only as good as the voluntary compliance from members; however, many organizations offer additional rewards and penalties to motivate compliance from members.

Examples include:


* [Data Science Association](http://datascienceassn.org/code-of-conduct.html) Code of Conduct (created 2013)

* [ACM Code of Ethics and Professional Conduct](https://www.acm.org/code-of-ethics) (since 1993)

> 🚨 Do you belong to a professional engineering or data science organization? Explore their site to see if they define a professional code of ethics. What does this say about their ethical principles? How are they "incentivizing" members to follow the code?

### 3.2 Ask Moral Questions

Assuming you've already identified your shared values or ethical principles at a team or organization level, the next step is to identify the moral questions relevant to your specific use case and operational workflow.

Here are [6 basic questions about data ethics](https://halpert3.medium.com/six-questions-about-data-science-ethics-252b5ae31fec) that you can build on:

* Is the data you're collecting fair and unbiased?

* Is the data being used ethically and fairly?

* Is user privacy being protected?

* To whom does data belong - the company or the user?

* What effects do the data and algorithms have on society (individual and collective)?

* Is the data manipulated or deceptive?

### 2. Ethics Checklists

For larger team or project scope, you can choose to expand on questions that reflect a specific stage of the workflow. For example here are [22 questions on ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429) that were grouped into _design_, _implementation & management_, _systems & organization_ categories for convenience.

While professional codes define required _ethical behavior_ from practitioners, they [have known limitations](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) in enforcement, particularly in large-scale projects. Instead, many data science experts [advocate for checklists](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) that can **connect principles to practices** in more deterministic and actionable ways.

### 3.3 Adopt Ethics Checklists

Checklists convert questions into "yes/no" tasks that can be operationalized, allowing them to be tracked as part of standard product release workflows.

While professional codes define required _ethical behavior_ from practitioners, they [have known limitations](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) for implementation, particularly in large-scale projects. In [Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md), experts instead advocate for ethics checklists that can **connect principles to practices** in more deterministic and actionable ways.

Examples include:

* [Deon](https://deon.drivendata.org/) - a general-purpose data ethics checklist created from [industry recommendations](https://deon.drivendata.org/#checklist-citations) with a command-line tool for easy integration.

* [Privacy Audit Checklist](https://cyber.harvard.edu/ecommerce/privacyaudit.html) - provides general guidance for information handling practices from legal and social exposure perspectives.

* [AI Fairness Checklist](https://www.microsoft.com/en-us/research/project/ai-fairness-checklist/) - created by AI practitioners to support adoption and integration of fairness checks into AI development cycles.

* [22 questions for ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429) - more open-ended framework, structured for initial exploration of ethics issues in design, implementation, and organizational contexts.

Checklists convert questions into "yes/no" tasks that can be tracked and validated before product release. Tools like [deon](https://deon.drivendata.org/) make this frictionless, creating default checklists aligned to [industry recommendations](https://deon.drivendata.org/#checklist-citations) and enabling users to customize and integrate them into workflows using a command-line tool. Deon also provides [real-world examples](https://deon.drivendata.org/examples/) of ethical challenges to provide context for these decisions.


### 3.4 Track Ethics Compliance

Ethics is about defining shared values and doing the right thing _voluntarily_. **Compliance** is about _following the law_ if and where defined. **Governance** broadly covers all the ways in which organizations operate to enforce ethical principles and comply with established laws.

**Ethics** is about doing the right thing, even if there are no laws to enforce it. **Compliance** is about following the law, when defined and where applicable.

**Governance** is the broader umbrella that covers all the ways in which an organization (company or government) operates to enforce ethical principles & comply with laws.

Today, governance takes two forms within organizations. First, it's about defining **ethical AI** principles and establishing practices to operationalize adoption across all AI-related projects in the organization. Second, it's about complying with all government-mandated **data protection regulations** for regions it operates in.

Companies are creating their own ethics frameworks (e.g., [Microsoft](https://www.microsoft.com/en-us/ai/responsible-ai), [IBM](https://www.ibm.com/cloud/learn/ai-ethics), [Google](https://ai.google/principles), [Facebook](https://ai.facebook.com/blog/facebooks-five-pillars-of-responsible-ai/), [Accenture](https://www.accenture.com/_acnmedia/PDF-149/Accenture-Responsible-AI-Final.pdf#zoom=50)) for governance, while state and national governments tend to focus on regulations that protect the data privacy and rights of their citizens.

Examples of data protection and privacy regulations:

Here are some landmark data privacy regulations to know:

* `1974`, [US Privacy Act](https://www.justice.gov/opcl/privacy-act-1974) - regulates _federal govt._ collection, use and disclosure of personal information.

* `1996`, [US Health Insurance Portability & Accountability Act (HIPAA)](https://www.cdc.gov/phlp/publications/topic/hipaa.html) - protects personal health data.

* `1998`, [US Children's Online Privacy Protection Act (COPPA)](https://www.ftc.gov/enforcement/rules/rulemaking-regulatory-reform-proceedings/childrens-online-privacy-protection-rule) - protects data privacy of children under 13.

* `2018`, [General Data Protection Regulation (GDPR)](https://gdpr-info.eu/) - provides user rights, data protection and privacy.

* `2018`, [California Consumer Privacy Act (CCPA)](https://www.oag.ca.gov/privacy/ccpa) gives consumers more _rights_ over their personal data.

* `2021`, China's [Personal Information Protection Law](https://www.reuters.com/world/china/china-passes-new-personal-data-privacy-law-take-effect-nov-1-2021-08-20/) just passed, creating one of the strongest online data privacy regulations worldwide.

> 🚨 The GDPR (General Data Protection Regulation), defined by the European Union, remains one of the most influential data privacy regulations today. Did you know it also defines [8 user rights](https://www.freeprivacypolicy.com/blog/8-user-rights-gdpr) to protect citizens' digital privacy and personal data? Learn about what these are, and why they matter.

In Aug 2021, China passed the [Personal Information Protection Law](https://www.reuters.com/world/china/china-passes-new-personal-data-privacy-law-take-effect-nov-1-2021-08-20/) (to go into effect Nov 1) which, with its Data Security Law, will create one of the strongest online data privacy regulations in the world.


### 3.5 Establish Ethics Culture

Note that there remains an intangible gap between _compliance_ (doing enough to meet "the letter of the law") and addressing [systemic issues](https://www.coursera.org/learn/data-science-ethics/home/week/4) (like ossification, information asymmetry and distributional unfairness) that can speed up the weaponization of AI.

There remains an intangible gap between compliance ("doing enough to meet the letter of the law") and addressing systemic issues ([like ossification, information asymmetry and distributional unfairness](https://www.coursera.org/learn/data-science-ethics/home/week/4)) that can create self-fulfilling feedback loops and weaponize AI further. This is motivating calls for [formalizing data ethics cultures](https://www.codeforamerica.org/news/formalizing-an-ethical-data-culture/) in organizations, where everyone is empowered to [pull the Andon cord](https://en.wikipedia.org/wiki/Andon_(manufacturing)) to raise ethics concerns early, and for exploring [collaborative approaches to defining this culture](https://towardsdatascience.com/why-ai-ethics-requires-a-culture-driven-approach-26f451afa29f) that build emotional connections and consistent beliefs across organizations and industries.

The latter requires [collaborative approaches to defining ethics cultures](https://towardsdatascience.com/why-ai-ethics-requires-a-culture-driven-approach-26f451afa29f) that build emotional connections and consistent shared values _across organizations_ in the industry. This calls for more [formalized data ethics cultures](https://www.codeforamerica.org/news/formalizing-an-ethical-data-culture/) in organizations - allowing _anyone_ to [pull the Andon cord](https://en.wikipedia.org/wiki/Andon_(manufacturing)) (to raise ethics concerns early in the process) and making _ethical assessments_ (e.g., in hiring) a core criterion for team formation in AI projects.

---


## Review & Self Study

Courses and books help with understanding core ethics concepts and challenges, while case studies and tools help with applied ethics practices in real-world contexts. Here are a few resources to start with.


* [Machine Learning For Beginners](https://github.com/microsoft/ML-For-Beginners/blob/main/1-Introduction/3-fairness/README.md) - lesson on Fairness, from Microsoft.

* [Principles of Responsible AI](https://docs.microsoft.com/en-us/learn/modules/responsible-ai-principles/) - free learning path from Microsoft Learn.

* [Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964) - O'Reilly EBook (M. Loukides, H. Mason et al.)

* [Data Science Ethics](https://www.coursera.org/learn/data-science-ethics#syllabus) - online course from University of Michigan.

* [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - case studies from University of Texas.

While databases offer very efficient ways to store data and query it using query languages, the most flexible way to process data is to write your own program to manipulate it. In many cases a database query will be the more effective approach, but sometimes you need more complex data processing that cannot easily be done using SQL.

Data processing can be programmed in any programming language, but there are certain languages that are higher level with respect to working with data. Data scientists typically prefer one of the following languages:

Here `.T` means the operation of transposing the DataFrame, i.e. swapping rows and columns, and the `rename` operation allows us to rename columns to match the previous example.

Here are a few of the most important operations we can perform on DataFrames:

**Column selection**. We can select individual columns by writing `df['A']` - this operation returns a Series. We can also select a subset of columns into another DataFrame by writing `df[['B','A']]` - this returns another DataFrame.

**Filtering** only certain rows by criteria. For example, to leave only rows with column `A` greater than 5, we can write `df[df['A']>5]`.

> **Note**: Filtering works in the following way. The expression `df['A']>5` returns a boolean series, which indicates whether the expression is `True` or `False` for each element of the original series `df['A']`. When a boolean series is used as an index, it returns the subset of rows in the DataFrame for which the value is `True`. Thus it is not possible to use an arbitrary Python boolean expression; for example, writing `df[df['A']>5 and df['A']<7]` would be wrong. Instead, you should use the special `&` operation on boolean series, writing `df[(df['A']>5) & (df['A']<7)]` (*brackets are important here*).
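The filtering rules in the note can be tried out in a short sketch. It assumes a small DataFrame with the same `A` and `B` values as the table shown later in this section; the variable names `mask` and `between` are illustrative only.

```python
import pandas as pd

# Recreate a small DataFrame (values match the table shown later in this section)
df = pd.DataFrame({
    "A": range(1, 10),
    "B": ["I", "like", "to", "use", "Python", "and", "Pandas", "very", "much"],
})

# A comparison on a column yields a boolean Series, one value per row
mask = df["A"] > 5
print(mask.tolist())

# Combine conditions with & (and), | (or), ~ (not);
# each condition needs its own parentheses
between = df[(df["A"] > 5) & (df["A"] < 7)]
print(between)

# Python's `and` tries to reduce a whole Series to one truth value and fails
try:
    df[df["A"] > 5 and df["A"] < 7]
except ValueError as err:
    print("ValueError:", err)
```

The parentheses matter because `&` binds more tightly than comparison operators in Python, so `df['A']>5 & df['A']<7` would be parsed differently from what was intended.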

**Creating new computable columns**. We can easily create new computable columns for our DataFrame by using intuitive expression like this:

```python

df['DivA'] = df['A']-df['A'].mean()

```

This example calculates divergence of A from its mean value. What actually happens here is we are computing a series, and then assigning this series to the left-hand-side, creating another column. Thus, we cannot use any operations that are not compatible with series, for example, the code below is wrong:

```python

# Wrong code -> df['ADescr'] = "Low" if df['A'] < 5 else "Hi"

df['LenB'] = len(df['B']) # <- Wrong result

```

The latter example, while being syntactically correct, gives us wrong result, because it assigns the length of series `B` to all values in the column, and not the length of individual elements as we intended.

If we need to compute complex expressions like this, we can use `apply` function. The last example can be written as follows:

```python

df['LenB'] = df['B'].apply(lambda x : len(x))

# or

df['LenB'] = df['B'].apply(len)

```
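The same idea applies to the conditional `"Low"`/`"Hi"` expression that was marked as wrong above. As a minimal sketch (the threshold of 5 comes from that example; the second column name `ADescr2` and the use of NumPy are assumptions added here for comparison), it can be computed element by element with `apply`, or in vectorized form with `numpy.where`:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"A": range(1, 10)})

# Option 1: apply a Python function to every element of the series
df["ADescr"] = df["A"].apply(lambda x: "Low" if x < 5 else "Hi")

# Option 2: vectorized equivalent, picks "Low" where the condition holds, "Hi" elsewhere
df["ADescr2"] = np.where(df["A"] < 5, "Low", "Hi")

print(df)
```

Both columns contain the same values; the vectorized form is usually faster on large DataFrames, while `apply` is more flexible for arbitrary Python logic.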

After operations above, we will end up with the following DataFrame:

| | A | B | DivA | LenB |
|---|---|---|---|---|
| 0 | 1 | I | -4.0 | 1 |
| 1 | 2 | like | -3.0 | 4 |
| 2 | 3 | to | -2.0 | 2 |
| 3 | 4 | use | -1.0 | 3 |
| 4 | 5 | Python | 0.0 | 6 |
| 5 | 6 | and | 1.0 | 3 |
| 6 | 7 | Pandas | 2.0 | 6 |
| 7 | 8 | very | 3.0 | 4 |
| 8 | 9 | much | 4.0 | 4 |

**Selecting rows based on numbers** can be done using `iloc` construct. For example, to select first 5 rows from the DataFrame:

```python

df.iloc[:5]

```

**Grouping** is often used to get a result similar to *pivot tables* in Excel. Suppose that we want to compute mean value of column `A` for each given number of `LenB`. Then we can group our DataFrame by `LenB`, and call `mean`:

```python

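# Select the numeric columns first: averaging the text column B
# can raise a TypeError in recent pandas versions
df.groupby(by='LenB')[['A','DivA']].mean()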

```

If we need to compute mean and the number of elements in the group, then we can use more complex `aggregate` function:
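A minimal sketch of such an aggregation, assuming the DataFrame from the table above (recreated here so the snippet runs on its own). The output column names `Mean` and `Count` are illustrative choices:

```python
import pandas as pd

# Recreate the DataFrame from the table above
df = pd.DataFrame({
    "A": range(1, 10),
    "B": ["I", "like", "to", "use", "Python", "and", "Pandas", "very", "much"],
})
df["DivA"] = df["A"] - df["A"].mean()
df["LenB"] = df["B"].apply(len)

# For each value of LenB: the mean of A, and the number of rows in the group
summary = (
    df.groupby(by="LenB")[["A", "DivA"]]
      .aggregate({"A": "mean", "DivA": "count"})
      .rename(columns={"A": "Mean", "DivA": "Count"})
)
print(summary)
```

The dictionary passed to `aggregate` maps each column to the function applied to it within every group, so different columns can be summarized in different ways in one call.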

We have seen how easy it is to construct Series and DataFrames from Python objects. However, data usually comes in the form of a text file, or an Excel table. Luckily, Pandas offers us a simple way to load data from disk. For example, reading CSV file is as simple as this:

```python

df = pd.read_csv('file.csv')

```

We will see more examples of loading data, including fetching it from external web sites, in the "Challenge" section.
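To try `read_csv` without a file on disk, the sketch below feeds it an in-memory text buffer. The CSV content is made up for illustration; `read_csv` accepts a file path, a URL, or any file-like object such as `io.StringIO`.

```python
import io
import pandas as pd

# Hypothetical CSV content standing in for 'file.csv'
csv_text = "A,B\n1,I\n2,like\n3,to\n"

# read_csv infers column names from the first line and column types from the values
df = pd.read_csv(io.StringIO(csv_text))

print(df.dtypes)   # A is parsed as an integer column, B as text
print(df.head())
```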

### Printing and Plotting

A data scientist often has to explore the data, so it is important to be able to visualize it. When a DataFrame is big, we often just want to make sure we are doing everything correctly by printing out the first few rows. This can be done by calling `df.head()`. If you are running it from Jupyter Notebook, it will print out the DataFrame in a nice tabular form.

We have also seen the usage of `plot` function to visualize some columns. While `plot` is very useful for many tasks, and supports many different graph types via `kind=` parameter, you can always use raw `matplotlib` library to plot something more complex. We will cover data visualization in detail in separate course lessons.

This overview covers the most important concepts of Pandas; however, the library is very rich, and there is no limit to what you can do with it! Let's now apply this knowledge to solving a specific problem.

## 🚀 Challenge 1: Analyzing COVID Spread

The first problem we will focus on is modelling the epidemic spread of COVID-19. In order to do that, we will use the data on the number of infected individuals in different countries, provided by the [Center for Systems Science and Engineering](https://systems.jhu.edu/) (CSSE) at [Johns Hopkins University](https://jhu.edu/). The dataset is available in [this GitHub Repository](https://github.com/CSSEGISandData/COVID-19).

Since we want to demonstrate how to deal with data, we invite you to open [`notebook-covidspread.ipynb`](notebook-covidspread.ipynb) and read it from top to bottom. You can also execute cells, and do some challenges that we have set for you along the way.


## Working with Unstructured Data

While data very often comes in tabular form, in some cases we need to deal with less structured data, for example, text or images. In this case, to apply the data processing techniques we have seen above, we need to somehow **extract** structured data. Here are a few examples:

* Extracting keywords from text, and seeing how often those keywords appear

* Using neural networks to extract information about objects on the picture

* Getting information on emotions of people on video camera feed
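The first item in the list above can be sketched in a few lines. The texts and the keyword list are made up for illustration, and the helper `count_keywords` is hypothetical; the point is only that free text turns into a table that Pandas can process like any other structured data.

```python
import re
from collections import Counter

import pandas as pd

# Hypothetical input texts and keywords of interest
texts = [
    "I like to use Python and Pandas very much",
    "Pandas makes data processing in Python easier",
    "Data is everywhere, we just need to capture it",
]
keywords = ["python", "pandas", "data"]

def count_keywords(text, keywords):
    """Return how many times each keyword occurs in the text (case-insensitive)."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(words)
    return {k: counts[k] for k in keywords}

# One row per text, one column per keyword
table = pd.DataFrame([count_keywords(t, keywords) for t in texts])
totals = table.sum()

print(table)
print(totals)
```

This simple word match ignores word forms and multi-word terms; real keyword extraction usually needs more careful text processing.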

## 🚀 Challenge 2: Analyzing COVID Papers

In this challenge, we will continue with the topic of the COVID pandemic, and focus on processing scientific papers on the subject. The [CORD-19 Dataset](https://www.kaggle.com/allen-institute-for-ai/CORD-19-research-challenge) contains more than 7000 (at the time of writing) papers on COVID, available with metadata and abstracts (and for about half of them the full text is also provided).

A full example of analyzing this dataset using the [Text Analytics for Health](https://docs.microsoft.com/azure/cognitive-services/text-analytics/how-tos/text-analytics-for-health/?WT.mc_id=acad-31812-dmitryso) cognitive service is described [in this blog post](https://soshnikov.com/science/analyzing-medical-papers-with-azure-and-text-analytics-for-health/). We will discuss a simplified version of this analysis.

> **NOTE**: We do not provide a copy of the dataset as part of this repository. You may first need to download the [`metadata.csv`](https://www.kaggle.com/allen-institute-for-ai/CORD-19-research-challenge?select=metadata.csv) file from [this dataset on Kaggle](https://www.kaggle.com/allen-institute-for-ai/CORD-19-research-challenge). Registration with Kaggle may be required. You may also download the dataset without registration [from here](https://ai2-semanticscholar-cord-19.s3-us-west-2.amazonaws.com/historical_releases.html), but it will include all full texts in addition to metadata file.

Open [`notebook-papers.ipynb`](notebook-papers.ipynb) and read it from top to bottom. You can also execute cells, and do some challenges that we have left for you at the end.

## Processing Image Data

Recently, very powerful AI models have been developed that allow us to understand images. There are many tasks that can be solved using pre-trained neural networks, or cloud services. Some examples include:

* **Image Classification**, which can help you categorize the image into one of the pre-defined classes. You can easily train your own image classifiers using services such as [Custom Vision](https://azure.microsoft.com/services/cognitive-services/custom-vision-service/?WT.mc_id=acad-31812-dmitryso)

* **Object Detection** to detect different objects in the image. Services such as [computer vision](https://azure.microsoft.com/services/cognitive-services/computer-vision/?WT.mc_id=acad-31812-dmitryso) can detect a number of common objects, and you can train [Custom Vision](https://azure.microsoft.com/services/cognitive-services/custom-vision-service/?WT.mc_id=acad-31812-dmitryso) model to detect some specific objects of interest.

* **Face Detection**, including Age, Gender and Emotion detection. This can be done via [Face API](https://azure.microsoft.com/services/cognitive-services/face/?WT.mc_id=acad-31812-dmitryso).

All those cloud services can be called using [Python SDKs](https://docs.microsoft.com/samples/azure-samples/cognitive-services-python-sdk-samples/cognitive-services-python-sdk-samples/?WT.mc_id=acad-31812-dmitryso), and thus can be easily incorporated into your data exploration workflow.

Here are some examples of exploring data from Image data sources:

* In the blog post [How to Learn Data Science without Coding](https://soshnikov.com/azure/how-to-learn-data-science-without-coding/) we explore Instagram photos, trying to understand what makes people give more likes to a photo. We first extract as much information from pictures as possible using [computer vision](https://azure.microsoft.com/services/cognitive-services/computer-vision/?WT.mc_id=acad-31812-dmitryso), and then use [Azure Machine Learning AutoML](https://docs.microsoft.com/azure/machine-learning/concept-automated-ml/?WT.mc_id=acad-31812-dmitryso) to build interpretable model.

* In [Facial Studies Workshop](https://github.com/CloudAdvocacy/FaceStudies) we use [Face API](https://azure.microsoft.com/services/cognitive-services/face/?WT.mc_id=acad-31812-dmitryso) to extract emotions on people on photographs from events, in order to try to understand what makes people happy.

## Conclusion

Whether you already have structured or unstructured data, using Python you can perform all steps related to data processing and understanding. It is probably the most flexible way of data processing, and that is the reason the majority of data scientists use Python as their primary tool. Learning Python in depth is probably a good idea if you are serious about your data science journey!

## Post-Lecture Quiz


## Review & Self Study

**Books**

* [Wes McKinney. Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython](https://www.amazon.com/gp/product/1491957662)

**Online Resources**

* Official [10 minutes to Pandas](https://pandas.pydata.org/pandas-docs/stable/user_guide/10min.html) tutorial

* [Documentation on Pandas Visualization](https://pandas.pydata.org/pandas-docs/stable/user_guide/visualization.html)

**Learning Python**

* [Learn Python in a Fun Way with Turtle Graphics and Fractals](https://github.com/shwars/pycourse)

* [Take your First Steps with Python](https://docs.microsoft.com/en-us/learn/paths/python-first-steps/?WT.mc_id=acad-31812-dmitryso) Learning Path on [Microsoft Learn](http://learn.microsoft.com/?WT.mc_id=acad-31812-dmitryso)

## Assignment


[Perform more detailed data study for the challenges above](assignment.md)

In this assignment, we will ask you to elaborate on the code we have started developing in our challenges. The assignment consists of two parts:

## COVID-19 Spread Modelling

- [ ] Plot $R_t$ graphs for 5-6 different countries on one plot for comparison, or using several plots side-by-side

- [ ] See how the number of deaths and recoveries correlate with number of infected cases.

- [ ] Find out how long a typical disease lasts by visually correlating infection rate and deaths rate and looking for some anomalies. You may need to look at different countries to find that out.

- [ ] Calculate the fatality rate and how it changes over time. *You may want to take into account the length of the disease in days to shift one time series before doing calculations*

## COVID-19 Papers Analysis

- [ ] Build co-occurrence matrix of different medications, and see which medications often occur together (i.e. mentioned in one abstract). You can modify the code for building co-occurrence matrix for medications and diagnoses.

- [ ] Visualize this matrix using heatmap.

- [ ] As a stretch goal, visualize the co-occurrence of medications using [chord diagram](https://en.wikipedia.org/wiki/Chord_diagram). [This library](https://pypi.org/project/chord/) may help you draw a chord diagram.

- [ ] As another stretch goal, extract dosages of different medications (such as **400mg** in *take 400mg of chloroquine daily*) using regular expressions, and build dataframe that shows different dosages for different medications. **Note**: consider numeric values that are in close textual vicinity of the medicine name.

## Rubric

Exemplary | Adequate | Needs Improvement
--- | --- | ---
All tasks are complete, graphically illustrated and explained, including at least one of two stretch goals | More than 5 tasks are complete, no stretch goals are attempted, or the results are not clear | Less than 5 (but more than 3) tasks are complete, visualizations do not help to demonstrate the point