| ![Defining Data Science](../../sketchnotes/01-Definitions.png) |
|:---:|
| Defining Data Science - _Sketchnote by [@nitya](https://twitter.com/nitya)_ |

[Pre-lecture quiz]()

---

## What is Data?

[Video](https://youtu.be/pqqsm5reGvs)

In our everyday life, we are constantly surrounded by data. The text you are reading now is data, the list of phone numbers of your friends in your smartphone is data, as well as the current time displayed on your watch. As human beings, we naturally operate with data by counting the money we have or writing letters to our friends.

However, data became much more critical with the creation of computers. The primary role of computers is to perform computations, but they need data to operate on. Thus, we need to understand how computers store and process data.

With the emergence of the Internet, the role of computers as data handling devices increased. If you think about it, we now use computers more and more for data processing and communication than for actual computations. When we write an e-mail to a friend or search for some information on the Internet, we are essentially creating, storing, transmitting, and manipulating data.

> Can you remember the last time you used a computer to actually compute something?

## What is Data Science?

In [Wikipedia](https://en.wikipedia.org/wiki/Data_science), **Data Science** is defined as *a scientific field that uses scientific methods to extract knowledge and insights from structured and unstructured data, and apply knowledge and actionable insights from data across a broad range of application domains*.

This definition highlights the following important aspects of data science:

* The main goal of data science is to **extract knowledge** from data, in other words, to **understand** data, find hidden relationships, and build a **model**.

* Data science uses **scientific methods**, such as probability and statistics. In fact, when the term *data science* was first introduced, some people argued that data science is just a new fancy name for statistics. Nowadays it has become evident that the field is much broader.

* The obtained knowledge should be applied to produce **actionable insights**.

* We should be able to operate on both **structured** and **unstructured** data. We will come back to discuss different types of data later in the course.

* **Application domain** is an important concept, and a data scientist often needs at least some degree of expertise in the problem domain.

## Other Related Fields

Since data is a pervasive concept, data science itself is also a broad field, touching many other related disciplines.

<dl>

<dt>Databases</dt>

</dl>

## Types of Data

As we have already mentioned, data is everywhere; we just need to capture it in the right way! It is useful to distinguish between **structured** and **unstructured** data. The former is typically represented in some well-structured form, often as a table or a number of tables, while the latter is just a collection of files. Sometimes we can also talk about **semi-structured** data, which has some sort of structure that may vary greatly.

| Structured | Semi-structured | Unstructured |
|------------|-----------------|--------------|
| List of people with their phone numbers | Wikipedia pages with links | Text of Encyclopaedia Britannica |
| Temperature in all rooms of a building at every minute for the last 20 years | Collection of scientific papers in JSON format with authors, date of publication, and abstract | File share with corporate documents |
| Data for age and gender of all people entering the building | Internet pages | Raw video feed from surveillance camera |
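To make the distinction more concrete, here is a minimal Python sketch that turns a few semi-structured records into structured rows. The records loosely follow the "scientific papers in JSON format" example from the table, but the field names and values are made up for illustration. Every row gets the same fixed columns, and fields that a record lacks become `None`, which is exactly where the varying structure shows up.

```python
import json

# Hypothetical semi-structured records: every paper has a title,
# but authors, publication dates and abstracts appear only in some of them.
raw = """
[
  {"title": "Paper A", "authors": ["Ann", "Bob"], "published": "2020-05-01"},
  {"title": "Paper B", "abstract": "A short abstract."},
  {"title": "Paper C", "authors": ["Cid"], "published": "2021-01-15", "abstract": "Another one."}
]
"""

# The fixed set of columns our structured table will have.
COLUMNS = ["title", "authors", "published", "abstract"]


def to_rows(records, columns):
    """Flatten a list of dicts into fixed-width rows (structured data)."""
    rows = []
    for rec in records:
        row = []
        for col in columns:
            value = rec.get(col)  # missing fields become None
            if isinstance(value, list):
                value = ", ".join(value)  # a list does not fit into a single table cell
            row.append(value)
        rows.append(row)
    return rows


papers = json.loads(raw)
table = to_rows(papers, COLUMNS)
for row in table:
    print(row)
```

Going the other way (from a table back to nested JSON) is just as easy, but information such as "this paper has no abstract" is only explicit once the data has a fixed schema.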

## Where to get Data

There are many possible sources of data, and it would be impossible to list all of them! However, let's mention some of the typical places where you can get data:

* **Structured**
  - **Internet of Things** (IoT), including data from different sensors, such as temperature or pressure sensors, provides a lot of useful data. For example, if an office building is equipped with IoT sensors, we can automatically control heating and lighting in order to minimize costs.
  - **Surveys** that we ask users to complete after purchasing a product or visiting a website.
  - **Analysis of behavior** can, for example, help us understand how deeply a user goes into a site, and what the typical reason for leaving the site is.
* **Unstructured**
  - **Texts** can be a rich source of insights, from an overall **sentiment score** to extracted keywords and even some semantic meaning.
  - **Images** or **Video**. A video from a surveillance camera can be used to estimate traffic on the road, and inform people about potential traffic jams.
  - Web server **Logs** can be used to understand which pages of our site are most visited, and for how long.
* **Semi-structured**
  - **Social Network** graphs can be a great source of data about user personalities and their potential effectiveness in spreading information.
  - When we have a bunch of photographs from a party, we can try to extract **Group Dynamics** data by building a graph of people taking pictures with each other.

By knowing different possible sources of data, you can try to think about different scenarios where data science techniques can be applied to understand the situation better and to improve business processes.
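As an illustration of the web server **Logs** source mentioned above, the following Python sketch counts successful visits per page. It assumes a simplified, made-up log format similar to the Common Log Format; real servers can be configured to write different formats, so the regular expression would need to be adapted. Measuring *how long* visitors stay on a page would additionally require grouping requests into sessions, which this sketch does not attempt.

```python
import re
from collections import Counter

# Hypothetical log lines in a simplified Common Log Format:
# client - - [timestamp] "METHOD path PROTOCOL" status size
LOG_LINES = [
    '10.0.0.1 - - [10/Oct/2021:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '10.0.0.2 - - [10/Oct/2021:13:56:01 +0000] "GET /products.html HTTP/1.1" 200 5120',
    '10.0.0.1 - - [10/Oct/2021:13:57:12 +0000] "GET /products.html HTTP/1.1" 200 5120',
    '10.0.0.3 - - [10/Oct/2021:13:58:40 +0000] "GET /missing.html HTTP/1.1" 404 512',
    '10.0.0.2 - - [10/Oct/2021:13:59:05 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '10.0.0.4 - - [10/Oct/2021:14:00:00 +0000] "GET /products.html HTTP/1.1" 200 5120',
]

# Capture the requested path (group 1) and the HTTP status code (group 2).
REQUEST = re.compile(r'"(?:GET|POST|PUT|DELETE|HEAD) (\S+) [^"]*" (\d{3})')


def page_visits(lines):
    """Count successful (status 200) requests per page."""
    visits = Counter()
    for line in lines:
        match = REQUEST.search(line)
        if match and match.group(2) == "200":
            visits[match.group(1)] += 1
    return visits


visits = page_visits(LOG_LINES)
print(visits.most_common())
```

Note how a few lines of parsing turn unstructured log text into a structured table of (page, count) pairs.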

## What you can do with Data

In Data Science, we focus on the following steps of the data journey:

<dl>

<dt>1) Data Acquisition</dt>

<dd>

The first step is to collect the data. While in many cases it can be a straightforward process, like data coming to a database from a web application, sometimes we need to use special techniques. For example, data from IoT sensors can be overwhelming, and it is good practice to use buffering endpoints such as IoT Hub to collect all the data before further processing.

</dd>

<dt>2) Data Storage</dt>

<dd>

Storing the data can be challenging, especially if we are talking about big data. When deciding how to store data, it makes sense to anticipate the way you will want to query it later on. There are several ways data can be stored:

<ul>

<li>A relational database stores a collection of tables, and uses a special language called SQL to query them. Typically, tables are connected to each other using some schema. In many cases we need to convert the data from its original form to fit the schema.</li>

<li>A <a href="https://en.wikipedia.org/wiki/NoSQL">NoSQL</a> database, such as <a href="https://azure.microsoft.com/services/cosmos-db/?WT.mc_id=acad-31812-dmitryso">CosmosDB</a>, does not enforce a schema on data, and allows storing more complex data, for example, hierarchical JSON documents or graphs. However, NoSQL databases generally do not have the rich querying capabilities of SQL, and typically cannot enforce referential integrity between data.</li>

<li><a href="https://en.wikipedia.org/wiki/Data_lake">Data Lake</a> storage is used for large collections of data in raw form. Data lakes are often used with big data, where the data cannot fit on one machine and has to be stored and processed by a cluster. <a href="https://en.wikipedia.org/wiki/Apache_Parquet">Parquet</a> is a data format that is often used in conjunction with big data.</li>

</ul>

</dd>

<dt>3) Data Processing</dt>

<dd>

This is the most exciting part of the data journey, which involves converting the data from its original form to a form that can be used for visualization/model training. When dealing with unstructured data such as text or images, we may need to use some AI techniques to extract **features** from the data, thus converting it to structured form.

</dd>

<dt>4) Visualization / Human Insights</dt>

<dd>

To understand the data, we often need to visualize it. Having many different visualization techniques in our toolbox, we can find the right view to gain an insight. Often, a data scientist needs to "play with data", visualizing it many times and looking for some relationships. We may also use techniques from statistics to test hypotheses or measure correlation between different pieces of data.

</dd>

<dt>5) Training a Predictive Model</dt>

<dd>

Because the ultimate goal of data science is to be able to make decisions based on data, we may want to use the techniques of <a href="http://github.com/microsoft/ml-for-beginners">Machine Learning</a> to build a predictive model that will be able to solve our problem.

</dd>

</dl>
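The relational option from the Data Storage step can be tried out locally with Python's built-in `sqlite3` module. In this sketch the `rooms` and `readings` tables, their columns, and the values are all hypothetical: readings refer to rooms through a foreign key, an SQL `JOIN` answers a question across both tables, and the database rejects a reading for a room that does not exist. That last check is the kind of referential integrity the NoSQL bullet refers to.

```python
import sqlite3

# In-memory database, so the example leaves no files behind.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked to

# Schema: each temperature reading must refer to an existing room.
conn.executescript("""
CREATE TABLE rooms (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);
CREATE TABLE readings (
    room_id     INTEGER NOT NULL REFERENCES rooms(id),
    minute      INTEGER NOT NULL,
    temperature REAL    NOT NULL
);
""")

conn.executemany("INSERT INTO rooms (id, name) VALUES (?, ?)",
                 [(1, "Lobby"), (2, "Office")])
conn.executemany("INSERT INTO readings VALUES (?, ?, ?)",
                 [(1, 0, 20.5), (1, 1, 21.5), (2, 0, 23.0), (2, 1, 24.0)])

# Average temperature per room, joining the two tables on the key.
query = """
SELECT rooms.name, AVG(readings.temperature)
FROM readings JOIN rooms ON rooms.id = readings.room_id
GROUP BY rooms.name
ORDER BY rooms.name
"""
averages = conn.execute(query).fetchall()
print(averages)

# Referential integrity: a reading for a non-existent room is rejected.
rejected = False
try:
    conn.execute("INSERT INTO readings VALUES (99, 0, 18.0)")
except sqlite3.IntegrityError as err:
    rejected = True
    print("rejected:", err)
```

The schema had to be designed before any data was inserted, which is why the Data Storage step recommends anticipating your queries up front.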

Of course, depending on the actual data, some steps might be missing (e.g., when we already have the data in the database, or when we do not need model training), or some steps might be repeated several times (such as data processing).
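The Data Processing step mentions extracting **features** to turn unstructured data into structured form. One of the simplest ways to do this for text is a bag-of-words representation, where each document becomes a row of word counts over a shared vocabulary. This is a minimal standard-library sketch with made-up documents; practical pipelines would usually add stop-word removal, stemming, or learned embeddings.

```python
import re
from collections import Counter

# Hypothetical unstructured input: a few short customer reviews.
documents = [
    "Great product, great price.",
    "The price was too high.",
    "Great service and fast delivery.",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


# Shared vocabulary: every distinct word, in sorted order, defines one column.
vocabulary = sorted({word for doc in documents for word in tokenize(doc)})


def bag_of_words(text, vocab):
    """Turn one document into a fixed-length vector of word counts."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocab]


features = [bag_of_words(doc, vocabulary) for doc in documents]
print(vocabulary)
for row in features:
    print(row)
```

Each row now has the same columns, so the reviews can be stored in a table or fed into a model like any other structured data.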

## Digitalization and Digital Transformation

## Challenge

In this challenge, we will try to find concepts relevant to the field of Data Science by looking at texts. We will take the Wikipedia article on Data Science, download and process the text, and then build a word cloud like this one:

Visit [`notebook.ipynb`](notebook.ipynb) to read through the code. You can also run the code, and see how it performs all data transformations in real time.

> If you do not know how to run code in Jupyter Notebook, have a look at [this article](https://soshnikov.com/education/how-to-execute-notebooks-from-github/).

## Post-Lecture Quiz

[Post-lecture quiz]()

In this first assignment, we ask you to think about some real-life process or problem in different problem domains, and how you can improve it using the Data Science process. Think about the following:

1. Which data can you collect?

1. How would you collect it?

1. How would you store the data? How large is the data likely to be?

1. Which insights might you be able to get from this data? Which decisions would you be able to take based on the data?

Try to think about 3 different problems/processes and describe each of the points above for each problem domain.

Here are some of the problem domains and problems that can get you started thinking:

1. How can you use data to improve the education process for children in schools?

1. How can you use data to control vaccination during the pandemic?

1. How can you use data to make sure you are being productive at work?

## Instructions

Fill in the following table (substitute your own problem domains for the suggested ones if needed):

| Problem Domain | Problem | Which data to collect | How to store the data | Which insights/decisions we can make |
|----------------|---------|-----------------------|-----------------------|--------------------------------------|
| Education | In university, we typically have low attendance at lectures, and we have the hypothesis that students who attend lectures on average do better in exams. We want to stimulate attendance and test the hypothesis. | We can track attendance through pictures taken by the security camera in class, or by tracking Bluetooth/Wi-Fi addresses of student mobile phones in class. Exam data is already available in the university database. | If we track security camera images, we need to store a few (5-10) photographs during class (unstructured data), and then use AI to identify faces of students (convert data to structured form). | We can compute average attendance data for each student, and see if there is any correlation with exam grades. We will talk more about correlation in the [probability and statistics](../../04-stats-and-probability/README.md) section. In order to stimulate student attendance, we can publish the weekly attendance rating on the school portal, and draw prizes among those with the highest attendance. |
| Vaccination | | | | |
| Productivity | | | | |

> *We provide just one answer as an example, so that you can get an idea of what is expected in this assignment.*
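For the Education example, the last column suggests checking whether attendance correlates with exam grades. Below is a minimal sketch that computes Pearson's correlation coefficient by hand on entirely made-up numbers for five students (Python 3.10 and later also provide `statistics.correlation` for this). Keep in mind that even a coefficient close to 1 would show only an association, not that attending lectures causes better grades.

```python
from math import sqrt
from statistics import mean

# Hypothetical data: share of lectures attended and exam grade per student.
attendance = [0.9, 0.4, 0.75, 0.2, 0.6]
grades = [88, 65, 80, 55, 72]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


print(f"average attendance: {mean(attendance):.2f}")
r = pearson(attendance, grades)
# r is close to +1 when higher attendance goes together with higher grades.
print(f"Pearson correlation: {r:.2f}")
```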

## Rubric

| Exemplary | Adequate | Needs Improvement |
| --- | --- | --- |
| One was able to identify reasonable data sources, ways of storing data and possible decisions/insights for all problem domains | Some of the aspects of the solution are not detailed, data storage is not discussed, at least 2 problem domains are described | Only parts of the data solution are described, only one problem domain is considered. |

| ![Data Science Ethics](../../sketchnotes/02-Ethics.png) |
|:---:|
| Data Science Ethics - _Sketchnote by [@nitya](https://twitter.com/nitya)_ |

[Pre-lecture quiz]()

---

We are all data citizens living in a datafied world.

Market trends tell us that by 2022, 1-in-3 large organizations will buy and sell their data through online [Marketplaces and Exchanges](https://www.gartner.com/smarterwithgartner/gartner-top-10-trends-in-data-and-analytics-for-2020/). As **App Developers**, we'll find it easier and cheaper to integrate data-driven insights and algorithm-driven automation into daily user experiences. But as AI becomes pervasive, we'll also need to understand the potential harms caused by the [weaponization](https://www.youtube.com/watch?v=TQHs8SA1qpk) of such algorithms at scale.

Trends also indicate that we will create and consume over [180 zettabytes](https://www.statista.com/statistics/871513/worldwide-data-created/) of data by 2025. As **Data Scientists**, this gives us unprecedented levels of access to personal data. This means we can build behavioral profiles of users and influence decision-making in ways that create an [illusion of free choice](https://www.datasciencecentral.com/profiles/blogs/the-illusion-of-choice) while potentially nudging users towards outcomes we prefer. It also raises broader questions on data privacy and user protections.

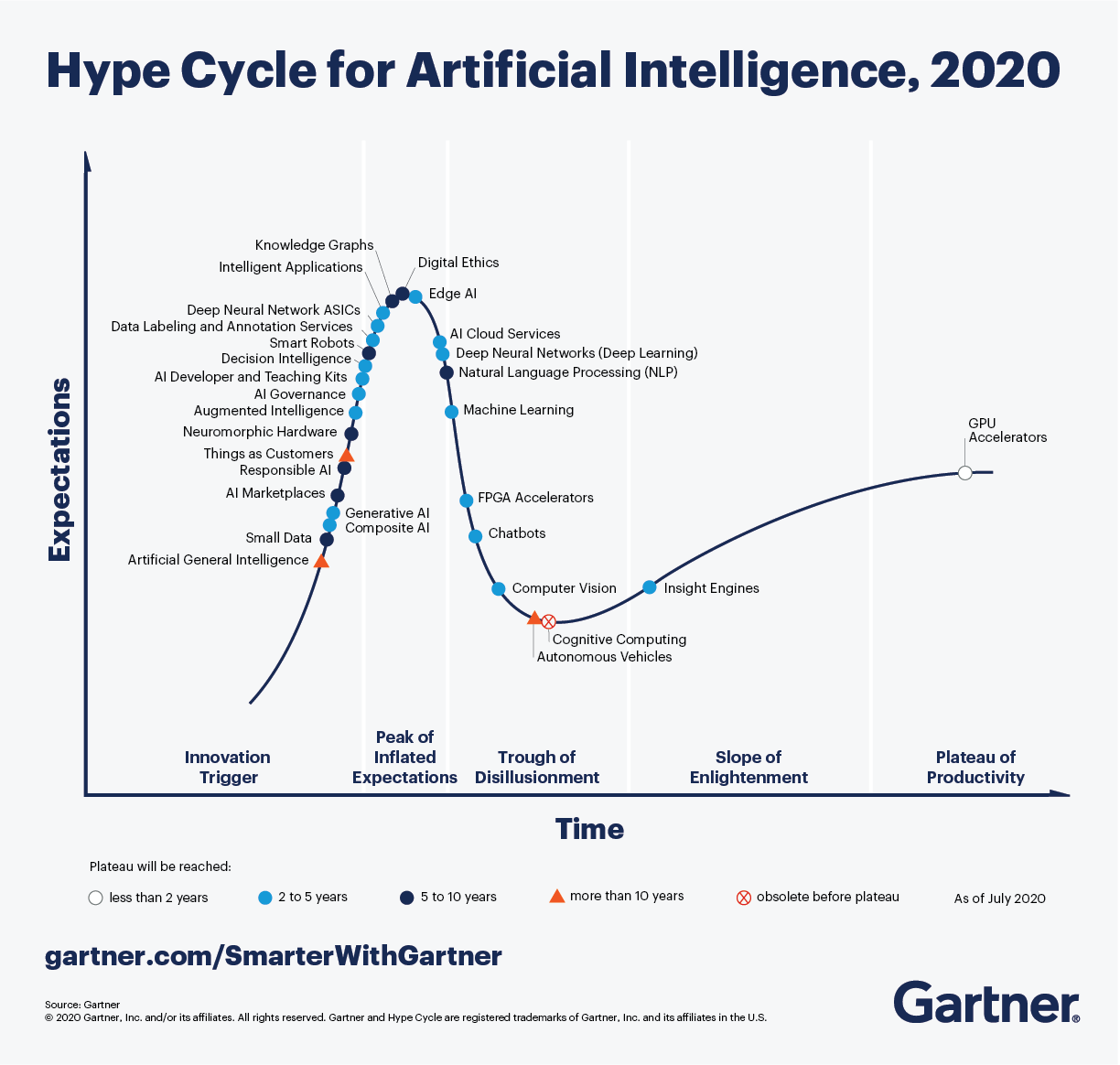

Data ethics are now _necessary guardrails_ for data science and engineering, helping us minimize potential harms and unintended consequences from our data-driven actions. The [Gartner Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/) identifies relevant trends in digital ethics, responsible AI, and AI governance as key drivers for larger megatrends around _democratization_ and _industrialization_ of AI.

In this lesson, we'll explore the fascinating area of data ethics - from core concepts and challenges, to case studies and applied AI concepts like governance - that help establish an ethics culture in teams and organizations that work with data and AI.

Let's start by understanding the basic terminology.

The word "ethics" comes from the [Greek word "ethikos"](https://en.wikipedia.org/wiki/Ethics) (and its root "ethos") meaning _character or moral nature_.

**Ethics** is about the shared values and moral principles that govern our behavior in society. Ethics is based not on laws but on widely accepted norms of what is "right vs. wrong". However, ethical considerations can influence corporate governance initiatives and government regulations that create more incentives for compliance.

**Data Ethics** is a [new branch of ethics](https://royalsocietypublishing.org/doi/full/10.1098/rsta.2016.0360#sec-1) that "studies and evaluates moral problems related to _data, algorithms and corresponding practices_". Here, **"data"** focuses on actions related to generation, recording, curation, processing, dissemination, sharing, and usage; **"algorithms"** focuses on AI, agents, machine learning, and robots; and **"practices"** focuses on topics like responsible innovation, programming, hacking, and ethics codes.

**Applied Ethics** is the [practical application of moral considerations](https://en.wikipedia.org/wiki/Applied_ethics). It's the process of actively investigating ethical issues in the context of _real-world actions, products and processes_, and taking corrective measures to make sure that these remain aligned with our defined ethical values.

**Ethics Culture** is about [_operationalizing_ applied ethics](https://hbr.org/2019/05/how-to-design-an-ethical-organization) to make sure that our ethical principles and practices are adopted in a consistent and scalable manner across the entire organization. Successful ethics cultures define organization-wide ethical principles, provide meaningful incentives for compliance, and reinforce ethics norms by encouraging and amplifying desired behaviors at every level of the organization.

## Ethics Concepts

In this section, we'll discuss concepts like **shared values** (principles) and **ethical challenges** (problems) for data ethics - and explore **case studies** that help you understand these concepts in real-world contexts.

### 1. Ethics Principles

Every data ethics strategy begins by defining _ethical principles_ - the "shared values" that describe acceptable behaviors, and guide compliant actions, in our data & AI projects. You can define these at an individual or team level. However, most large organizations outline these in an _ethical AI_ mission statement or framework that is defined at corporate levels and enforced consistently across all teams.

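**Example:** Microsoft's [Responsible AI](https://www.microsoft.com/en-us/ai/responsible-ai) mission statement reads: _"We are committed to the advancement of AI driven by ethical principles that put people first"_ - identifying 6 ethical principles in the framework below:

![Responsible AI Framework](https://docs.microsoft.com/en-gb/azure/cognitive-services/personalizer/media/ethics-and-responsible-use/ai-values-future-computed.png)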

Let's briefly explore these principles. _Transparency_ and _accountability_ are foundational values that the other principles build upon, so let's begin there:

* [**Accountability**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) makes practitioners _responsible_ for their data & AI operations, and compliance with these ethical principles.

* [**Transparency**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) ensures that data and AI actions are _understandable_ (interpretable) to users, explaining the what and why behind decisions.

* [**Fairness**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1%3aprimaryr6) focuses on ensuring that AI treats _all people_ fairly, addressing any systemic or implicit socio-technical biases in data and systems.

* [**Reliability & Safety**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) ensures that AI behaves _consistently_ with defined values, minimizing potential harms or unintended consequences.

* [**Privacy & Security**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) is about understanding data lineage, and providing _data privacy and related protections_ to users.

* [**Inclusiveness**](https://www.microsoft.com/en-us/ai/responsible-ai?activetab=pivot1:primaryr6) is about designing AI solutions with intention, adapting them to meet a _broad range of human needs_ and capabilities.
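To give a feel for how the **Fairness** principle can be checked in practice, one common starting point is to compare how often a model produces a favorable outcome for each group (a demographic parity check). The predictions and group labels below are hypothetical, and this is only one of several fairness metrics, not a method prescribed by any of the frameworks mentioned here; which metric is appropriate depends on the application.

```python
from collections import defaultdict

# Hypothetical model outputs: (group label, 1 = approved, 0 = rejected).
predictions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]


def selection_rates(pairs):
    """Share of favorable outcomes (1s) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in pairs:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


rates = selection_rates(predictions)
# Gap between the most and least favored group; 0 would mean equal rates.
parity_gap = max(rates.values()) - min(rates.values())
print(rates, f"gap = {parity_gap:.2f}")
```

A large gap does not by itself prove unfairness (the groups may differ in relevant ways), but it flags where to ask the moral questions discussed in this lesson.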

> 🚨 Think about what your data ethics mission statement could be. Explore ethical AI frameworks from other organizations; here are examples from [IBM](https://www.ibm.com/cloud/learn/ai-ethics), [Google](https://ai.google/principles), and [Facebook](https://ai.facebook.com/blog/facebooks-five-pillars-of-responsible-ai/). What shared values do they have in common? How do these principles relate to the AI product or industry they operate in?

### 2. Ethics Challenges

Once we have ethical principles defined, the next step is to evaluate our data and AI actions to see if they align with those shared values. Think about your actions in two categories: _data collection_ and _algorithm design_.

With data collection, actions will likely involve **personal data** or personally identifiable information (PII) for identifiable living individuals. This includes [diverse items of non-personal data](https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en) that _collectively_ identify an individual. Ethical challenges can relate to _data privacy_, _data ownership_, and related topics like _informed consent_ and _intellectual property rights_ for users.

With algorithm design, actions will involve collecting & curating **datasets**, then using them to train & deploy **data models** that predict outcomes or automate decisions in real-world contexts. Ethical challenges can arise from _dataset bias_, _data quality_ issues, _unfairness_, and _misrepresentation_ in algorithms, including some issues that are systemic in nature.

In both cases, ethics challenges highlight areas where our actions may conflict with our shared values. To detect, mitigate, minimize, or eliminate these concerns, we need to ask moral "yes/no" questions related to our actions, then take corrective actions as needed. Let's take a look at some ethical challenges and the moral questions they raise:

#### 2.1 Data Ownership

Data collection often involves personal data that can identify the data subjects. [Data ownership](https://permission.io/blog/data-ownership) is about _control_ and [_user rights_](https://permission.io/blog/data-ownership) related to the creation, processing, and dissemination of data.

The moral questions we need to ask are:

* Who owns the data? (user or organization)

* What rights do data subjects have? (ex: access, erasure, portability)

* What rights do organizations have? (ex: rectify malicious user reviews)

#### 2.2 Informed Consent

[Informed consent](https://legaldictionary.net/informed-consent/) defines the act of users agreeing to an action (like data collection) with a _full understanding_ of relevant facts, including the purpose, potential risks, and alternatives.

Questions to explore here are:

* Did the user (data subject) give permission for data capture and usage?

* Did the user understand the purpose for which that data was captured?

* Did the user understand the potential risks from their participation?

#### 2.3 Intellectual Property

[Intellectual property](https://en.wikipedia.org/wiki/Intellectual_property) refers to intangible creations resulting from human initiative that may _have economic value_ to individuals or businesses.

Questions to explore here are:

* Did the collected data have economic value to a user or business?

* Does the **user** have intellectual property here?

* Does the **organization** have intellectual property here?

* If these rights exist, how are we protecting them?

#### 2.4 Data Privacy

[Data privacy](https://www.northeastern.edu/graduate/blog/what-is-data-privacy/) or information privacy refers to the preservation of user privacy and protection of user identity with respect to personally identifiable information.

Questions to explore here are:

* Is users' (personal) data secured against hacks and leaks?

* Is users' data accessible only to authorized users and contexts?

* Is users' anonymity preserved when data is shared or disseminated?

* Can a user be re-identified from anonymized datasets?
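The last two questions can be probed with a simple check called *k-anonymity*. A dataset is k-anonymous with respect to a set of quasi-identifiers (attributes such as ZIP code, birth year, or gender that are not names but can single people out when combined) if every combination of their values is shared by at least k records. The sketch below computes k for a tiny hypothetical dataset; a k of 1 means at least one person is unique on those attributes and could be re-identified by linking with other data. k-anonymity alone does not guarantee privacy, but it is a useful first test.

```python
from collections import Counter

# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "98052", "birth_year": 1985, "gender": "F", "diagnosis": "flu"},
    {"zip": "98052", "birth_year": 1985, "gender": "F", "diagnosis": "asthma"},
    {"zip": "98052", "birth_year": 1990, "gender": "M", "diagnosis": "flu"},
    {"zip": "98053", "birth_year": 1972, "gender": "F", "diagnosis": "diabetes"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def group_sizes(rows, keys):
    """How many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[key] for key in keys) for row in rows)


def k_anonymity(rows, keys):
    """Size of the smallest group of rows with identical quasi-identifiers."""
    return min(group_sizes(rows, keys).values())


def unique_rows(rows, keys):
    """Rows whose quasi-identifier combination appears only once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[key] for key in keys)] == 1]


k = k_anonymity(records, QUASI_IDENTIFIERS)
at_risk = unique_rows(records, QUASI_IDENTIFIERS)
print(f"k = {k}; {len(at_risk)} record(s) are unique on {QUASI_IDENTIFIERS}")
```

Generalizing values (for example, keeping only a birth decade or the first three digits of a ZIP code) is one common way to raise k before sharing a dataset.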

* **Accountability**: ensure AI designers & developers take _responsibility_ for its operation.

* **Transparency**: make AI operations and decisions _understandable_ to users.

* **Fairness**: understand biases and ensure AI _behaves comparably_ across target groups.

* **Reliability & Safety**: make sure AI behaves consistently, and _without malicious intent_.

* **Security & Privacy**: get _informed consent_ for data collection, provide data privacy controls.

* **Inclusiveness**: adapt AI behaviors to a _broad range of human needs_ and capabilities.
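Among the data privacy questions above is whether a user can be re-identified from an anonymized dataset. One common way to probe this is a k-anonymity check: every combination of quasi-identifier values (attributes such as ZIP code, age band, or gender that are not names but can still single someone out) should be shared by at least k records. The Python sketch below is a minimal illustration; the column names and records are hypothetical, and k-anonymity is only one check, not a complete privacy guarantee.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the smallest group size over all quasi-identifier combinations.

    A dataset is k-anonymous when every combination of quasi-identifier
    values is shared by at least k records. Returns 0 for an empty dataset.
    """
    groups = Counter(
        tuple(record[column] for column in quasi_identifiers)
        for record in records
    )
    return min(groups.values()) if groups else 0


# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "02139", "age_band": "30-39", "gender": "F", "rating": 4},
    {"zip": "02139", "age_band": "30-39", "gender": "F", "rating": 2},
    {"zip": "02139", "age_band": "40-49", "gender": "M", "rating": 5},
]

k = k_anonymity(records, ["zip", "age_band", "gender"])
print(f"k = {k}")
```

Here k is 1 because the third record has a unique combination of attributes, so anyone holding an external dataset with the same attributes could link it back to a person. That is the same kind of linkage described in the Netflix case study later in this lesson.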

#### 2.5 Right To Be Forgotten

Note that accountability and transparency are _cross-cutting_ concerns that underpin the other four values and can be explored in each of their contexts. In the next section we'll look at the ethical challenges (moral questions) raised in two core contexts:

The [Right To Be Forgotten](https://en.wikipedia.org/wiki/Right_to_be_forgotten) or [Right to Erasure](https://www.gdpreu.org/right-to-be-forgotten/) provides additional personal data protection to users. Specifically, it gives users the right to request deletion or removal of personal data from Internet searches and other locations, _under specific circumstances_ - allowing them a fresh start online without past actions being held against them.

* Data Privacy - focused on **personal data** collection & use, with consequences to individuals.

* Fairness - focused on **algorithm** design & use, with consequences to society at large.

Questions to explore here are:

* Does the system allow data subjects to request erasure?

* Should the withdrawal of user consent trigger automated erasure?

* Was data collected without consent or by unlawful means?

* Are we compliant with government regulations for data privacy?
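One possible answer to "Should the withdrawal of user consent trigger automated erasure?" is to wire the two together. The sketch below assumes a hypothetical in-memory store (`UserDataStore` is an illustrative name, not a real library) that refuses data without consent and deletes everything it holds for a user when consent is withdrawn. Real systems also have to reach backups, logs, and third-party copies, and erasure rights apply only under specific circumstances, so treat this as an illustration of the design choice rather than a compliance recipe.

```python
from dataclasses import dataclass, field


@dataclass
class UserDataStore:
    """Hypothetical in-memory store of personal data, keyed by user id."""

    records: dict = field(default_factory=dict)  # user_id -> list of records
    consent: dict = field(default_factory=dict)  # user_id -> True / False

    def add(self, user_id, record):
        # Refuse to store data for users with no consent on file.
        if not self.consent.get(user_id, False):
            raise PermissionError(f"no consent on file for {user_id}")
        self.records.setdefault(user_id, []).append(record)

    def erase(self, user_id):
        """Handle an erasure request; return how many records were deleted."""
        return len(self.records.pop(user_id, []))

    def withdraw_consent(self, user_id):
        """Record the withdrawal, then erase automatically (a design choice)."""
        self.consent[user_id] = False
        return self.erase(user_id)


store = UserDataStore()
store.consent["u1"] = True
store.add("u1", {"page": "/home"})
store.add("u1", {"page": "/cart"})

erased = store.withdraw_consent("u1")
print(erased, store.records)
```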

### 2.2 Ethics of Personal Data

[Personal data](https://en.wikipedia.org/wiki/Personal_data) or personally-identifiable information (PII) is _any data that relates to an identified or identifiable living individual_. It can also [extend to diverse pieces of non-personal data](https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en) that collectively can lead to the identification of a specific individual. Examples include: participant data from research studies, social media interactions, mobile & web app data, online commerce transactions and more.

#### 2.6 Dataset Bias

Here are _some_ ethical concepts and moral questions to explore in context:

Dataset or [Collection Bias](http://researcharticles.com/index.php/bias-in-data-collection-in-research/) is about selecting a _non-representative_ subset of data for algorithm development, creating potential unfairness in outcomes for diverse groups. Types of bias include selection or sampling bias, volunteer bias, and instrument bias.

* **Data Ownership**. Who owns the data - user or organization? How does this impact users' rights?

* **Informed Consent**. Did users give permission for data capture? Did they understand the purpose?

* **Intellectual Property**. Does data have economic value? What are the users' rights & controls?

* **Data Privacy**. Is data secured from hacks/leaks? Is anonymity preserved on data use or sharing?

* **Right to be Forgotten**. Can users request that their data be deleted or removed to reclaim privacy?

Questions to explore here are:

* Did we recruit a representative set of data subjects?

* Did we test our collected or curated dataset for various biases?

* Can we mitigate or remove any discovered biases?
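For "Did we recruit a representative set of data subjects?", one simple test is to compare each group's share of the collected data with its share of the target population. The Python sketch below uses made-up counts loosely inspired by the Street Bump case study later in this lesson; the group names and population shares are hypothetical, and a real analysis would also ask whether a gap is statistically meaningful.

```python
def representation_gaps(sample_counts, population_shares):
    """Compare each group's share of the sample with its population share.

    Returns group -> (sample share - population share). Negative values mean
    the group is under-represented in the collected data.
    """
    total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical pothole reports by neighborhood income level, compared with
# each group's (hypothetical) share of city residents.
reports = {"low_income": 120, "middle_income": 380, "high_income": 500}
residents = {"low_income": 0.35, "middle_income": 0.40, "high_income": 0.25}

gaps = representation_gaps(reports, residents)
for group, gap in gaps.items():
    print(f"{group:>14}: {gap:+.2f}")
```

In this made-up example, low-income neighborhoods supply 12% of reports but 35% of residents, the kind of gap that would make their roadway issues less visible.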

### 2.3 Ethics of Algorithms

#### 2.7 Data Quality

Algorithm design begins with collecting & curating datasets relevant to a specific AI problem or domain, then processing & analyzing them to create models that can help predict outcomes or automate decisions in real-world applications. Moral questions can arise in various contexts, at any one of these stages.

[Data Quality](https://lakefs.io/data-quality-testing/) looks at the validity of the curated dataset used to develop our algorithms, checking to see if features and records meet requirements for the level of accuracy and consistency needed for our AI purpose.

Here are _some_ ethical concepts and moral questions to explore in context:

Questions to explore here are:

* Did we capture valid _features_ for our use case?

* Was data captured _consistently_ across diverse data sources?

* Is the dataset _complete_ for diverse conditions or scenarios?

* Is information captured _accurately_ in reflecting reality?

* **Dataset Bias** - Is data representative of target audience? Have we checked for different [data biases](https://towardsdatascience.com/survey-d4f168791e57)?

* **Data Quality** - Do the dataset and feature selection provide the required [data quality assurance](https://lakefs.io/data-quality-testing/)?

* **Algorithm Fairness** - Does the data model [systematically discriminate](https://towardsdatascience.com/what-is-algorithm-fairness-3182e161cf9f) against some subgroups?

* **Misrepresentation** - Are we [communicating honestly reported data in a deceptive manner?](https://www.sciencedirect.com/topics/computer-science/misrepresentation)

* **Explainable AI** - Are the results of AI [understandable by humans](https://en.wikipedia.org/wiki/Explainable_artificial_intelligence)? White-box (vs. black-box) models.

* **Free Choice** - Did the user exercise free will, or did the algorithm nudge them towards a desired outcome?
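Two of the data quality questions above, whether the dataset is complete and whether information is captured accurately, can be partly automated. The Python sketch below counts missing values per required column and values outside a plausible range. The column names and the -50 to 60 °C range are assumptions for illustration; checking consistency across sources would need further checks (for example, comparing units).

```python
def quality_report(rows, required, valid_ranges):
    """Count simple data quality problems in a list of dict rows.

    - missing: required columns whose value is None or absent
    - out_of_range: values outside an inclusive (low, high) plausible range
    """
    missing = {column: 0 for column in required}
    out_of_range = {column: 0 for column in valid_ranges}
    for row in rows:
        for column in required:
            if row.get(column) is None:
                missing[column] += 1
        for column, (low, high) in valid_ranges.items():
            value = row.get(column)
            if value is not None and not (low <= value <= high):
                out_of_range[column] += 1
    return {"rows": len(rows), "missing": missing, "out_of_range": out_of_range}


# Hypothetical sensor rows.
rows = [
    {"sensor": "A", "temp_c": 21.5},
    {"sensor": "B", "temp_c": None},     # incomplete reading
    {"sensor": None, "temp_c": 250.0},   # unknown source, implausible value
]

report = quality_report(
    rows, required=["sensor", "temp_c"], valid_ranges={"temp_c": (-50, 60)}
)
print(report)
```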

#### 2.8 Algorithm Fairness

### 2.3 Case Studies

[Algorithm Fairness](https://towardsdatascience.com/what-is-algorithm-fairness-3182e161cf9f) checks to see if the algorithm design systematically discriminates against specific subgroups of data subjects leading to [potential harms](https://docs.microsoft.com/en-us/azure/machine-learning/concept-fairness-ml) in _allocation_ (where resources are denied or withheld from that group) and _quality of service_ (where AI is not as accurate for some subgroups as it is for others).

The above are a subset of the core ethical challenges posed for big data and AI. More organizations are defining and adopting _responsible AI_ or _ethical AI_ frameworks that may identify additional shared values and related ethical challenges for specific domains or needs.

Questions to explore here are:

* Did we evaluate model accuracy for diverse subgroups and conditions?

* Did we scrutinize the system for potential harms (e.g., stereotyping)?

* Can we revise data or retrain models to mitigate identified harms?
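The first question above, whether we evaluated model accuracy for diverse subgroups, comes down to computing the same metric separately for each group and comparing the results. Here is a minimal Python sketch with hypothetical labels, predictions, and group names; a large gap between groups signals a possible quality-of-service harm. Libraries such as Fairlearn offer `MetricFrame` for this kind of disaggregated evaluation.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return accuracy for each subgroup to surface quality-of-service gaps."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical labels and predictions from some classifier.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

scores = accuracy_by_group(y_true, y_pred, groups)
gap = max(scores.values()) - min(scores.values())
print(scores, f"gap={gap:.2f}")
```

Overall accuracy here is 5/8, which hides the fact that the model is right for every member of group `a` but only one in four members of group `b`.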

To understand the potential _harms and consequences_ of neglecting or violating these data ethics principles, it helps to explore this in a real-world context. Here are some famous case studies and recent examples to get you started:

Explore resources like [AI Fairness checklists](https://query.prod.cms.rt.microsoft.com/cms/api/am/binary/RE4t6dA) to learn more.

#### 2.9 Misrepresentation

* `1972`: The [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) is a landmark case study for **informed consent** in data science. African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died; some partners or children were affected by complications. The study lasted 40 years.

* `2007`: The Netflix data prize provided researchers with [_100M anonymized movie ratings from roughly 480K customers_](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) to help improve recommendation algorithms. This became a landmark case study in **de-identification (data privacy)**, where researchers were able to correlate the anonymized data with _other datasets_ (e.g., IMDb) that had personally identifiable information, helping them "de-anonymize" users.

* `2013`: The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. This became a case study for **collection bias** where [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to address _equitable access and digital divide_ issues for fairness.

* `2018`: The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. A [2019 Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) seemed to offer less credit to women than men. Both illustrated issues in **algorithmic fairness** and discrimination.

* `2020`: The [Georgia Department of Public Health released COVID-19 charts](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates **data misrepresentation** where honest data is presented dishonestly to support a desired narrative.

* `2020`: Learning app [ABCmouse paid $10M to settle an FTC complaint](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) where parents were trapped into paying for subscriptions they couldn't cancel. This highlights the **illusion of free choice** in algorithmic decision-making, and potential harms from dark patterns that exploit user insights.

* `2021`: A Facebook [Data Breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users) exposed data from 530M users. Facebook, which had agreed to a $5B FTC privacy settlement in 2019, refused to notify users of the breach, raising issues like **data privacy**, **data security**, and **accountability**, including user rights to redress for those affected.

[Data Misrepresentation](https://www.sciencedirect.com/topics/computer-science/misrepresentation) is about asking whether we are communicating insights from honestly reported data in a deceptive manner to support a desired narrative.
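Some misrepresentation checks can be automated. The Georgia COVID-19 chart case above hinged on a non-chronological x-axis, so one guard is to confirm that a time series is in calendar order before plotting it. The Python sketch below uses made-up counts (not the real Georgia figures); `is_chronological` is a hypothetical helper, and sorting bars by value is assumed here as one way a non-chronological axis can arise.

```python
from datetime import date

# Hypothetical daily case counts (illustrative only).
daily_cases = {
    date(2020, 5, 1): 120,
    date(2020, 5, 2): 95,
    date(2020, 5, 3): 140,
    date(2020, 5, 4): 110,
}


def is_chronological(points):
    """True if the (date, value) points are in strictly increasing date order."""
    dates = [day for day, _ in points]
    return all(earlier < later for earlier, later in zip(dates, dates[1:]))


# Misleading: ordering bars by value makes any series look like a steady decline.
by_value = sorted(daily_cases.items(), key=lambda item: item[1], reverse=True)

# Honest: a time-series x-axis keeps the dates in calendar order.
chronological = sorted(daily_cases.items())

for points in (by_value, chronological):
    print([day.isoformat() for day, _ in points], is_chronological(points))
```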

Questions to explore here are:

* Are we reporting incomplete or inaccurate data?

* Are we visualizing data in a manner that drives misleading conclusions?

* Are we using selective statistical techniques to manipulate outcomes?

* Are there alternative explanations that may offer a different conclusion?

#### 2.10 Free Choice

The [Illusion of Free Choice](https://www.datasciencecentral.com/profiles/blogs/the-illusion-of-choice) occurs when system "choice architectures" use decision-making algorithms to nudge people towards a preferred outcome while seeming to give them options and control. These [dark patterns](https://www.darkpatterns.org/) can cause social and economic harm to users. Because user decisions impact behavior profiles, these actions potentially drive future choices that can amplify or extend the impact of these harms.

## 3. Applied Ethics

Questions to explore here are:

* Did the user understand the implications of making that choice?

* Was the user aware of (alternative) choices and the pros & cons of each?

* Can the user reverse an automated or influenced choice later?

We've learned about data ethics values, and the ethical challenges (+ moral questions) associated with adherence to these values. But how do we _implement_ these ideas in real-world contexts? Here are some tools & practices that can help.

### 3. Case Studies

### 3.1 Have Professional Codes

To put these ethical challenges in real-world contexts, it helps to look at case studies that highlight the potential harms and consequences to individuals and society, when such ethics violations are overlooked.

Professional codes are _moral guidelines_ for professional behavior, helping employees or members _make decisions that align with organizational principles_. Codes may not be legally enforceable, making them only as good as the willing compliance of members. An organization may inspire adherence by imposing incentives & penalties accordingly.

Here are a few examples:

Professional _codes of conduct_ are prescriptive rules and responsibilities that members must follow to remain in good standing with an organization. A professional *code of ethics* is more [_aspirational_](https://keydifferences.com/difference-between-code-of-ethics-and-code-of-conduct.html), defining the shared values and ideas of the organization. The terms are sometimes used interchangeably.

| Ethics Challenge | Case Study |
|--- |--- |
| **Informed Consent** | 1972 - [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) - African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about availability of treatment. Many subjects died & partners or children were affected; the study lasted 40 years. |
| **Data Privacy** | 2007 - The [Netflix data prize](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) provided researchers with _100M anonymized movie ratings from roughly 480K customers_ to help improve recommendation algorithms. However, researchers were able to correlate anonymized data with personally-identifiable data in _external datasets_ (e.g., IMDb comments), effectively "de-anonymizing" some Netflix subscribers.|
| **Collection Bias** | 2013 - The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. However, [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to address _equitable access and digital divide_ issues for fairness. |
| **Algorithmic Fairness** | 2018 - The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. A [2019 Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) seemed to offer less credit to women than men. Both illustrated issues in algorithmic bias leading to socio-economic harms.|
| **Data Misrepresentation** | 2020 - The [Georgia Department of Public Health released COVID-19 charts](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates misrepresentation through visualization tricks. |
| **Illusion of free choice** | 2020 - Learning app [ABCmouse paid $10M to settle an FTC complaint](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) where parents were trapped into paying for subscriptions they couldn't cancel. This illustrates dark patterns in choice architectures, where users were nudged towards potentially harmful choices. |
| **Data Privacy & User Rights** | 2021 - A Facebook [Data Breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users) exposed data from 530M users. Facebook, which had agreed to a $5B FTC privacy settlement in 2019, refused to notify users of the breach, violating user rights around data transparency and access. |

Want to explore more case studies? Check out these resources:

* [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - ethics dilemmas across diverse industries.

* [Data Science Ethics course](https://www.coursera.org/learn/data-science-ethics#syllabus) - landmark case studies explored.

* [Where things have gone wrong](https://deon.drivendata.org/examples/) - deon checklist with examples

> 🚨 Think about the case studies you've seen - have you experienced, or been affected by, a similar ethical challenge in your life? Can you think of at least one other case study that illustrates one of the ethical challenges we've discussed in this section?

## Applied Ethics

We've talked about ethics concepts, challenges, and case studies in real-world contexts. But how do we get started _applying_ ethical principles and practices in our projects? And how do we _operationalize_ these practices for better governance? Let's explore some real-world solutions:

### 1. Professional Codes

Professional Codes offer one option for organizations to "incentivize" members to support their ethical principles and mission statement. Codes are _moral guidelines_ for professional behavior, helping employees or members make decisions that align with their organization's principles. They are only as good as the voluntary compliance from members; however, many organizations offer additional rewards and penalties to motivate compliance from members.

Examples include:


* [Data Science Association](http://datascienceassn.org/code-of-conduct.html) Code of Conduct (created 2013)

* [ACM Code of Ethics and Professional Conduct](https://www.acm.org/code-of-ethics) (since 1993)

> 🚨 Do you belong to a professional engineering or data science organization? Explore their site to see if they define a professional code of ethics. What does this say about their ethical principles? How are they "incentivizing" members to follow the code?

### 3.2 Ask Moral Questions

Assuming you've already identified your shared values or ethical principles at a team or organization level, the next step is to identify the moral questions relevant to your specific use case and operational workflow.

Here are [6 basic questions about data ethics](https://halpert3.medium.com/six-questions-about-data-science-ethics-252b5ae31fec) that you can build on:

* Is the data you're collecting fair and unbiased?

* Is the data being used ethically and fairly?

* Is user privacy being protected?

* To whom does data belong - the company or the user?

* What effects do the data and algorithms have on society (individual and collective)?

* Is the data manipulated or deceptive?

For a larger team or project scope, you can expand on questions that reflect a specific stage of the workflow. For example, here are [22 questions on ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429) grouped into _design_, _implementation & management_, and _systems & organization_ categories for convenience.

### 2. Ethics Checklists

While professional codes define required _ethical behavior_ from practitioners, they [have known limitations](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) in enforcement, particularly in large-scale projects. Instead, many data science experts [advocate for checklists](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) that can **connect principles to practices** in more deterministic and actionable ways.

Checklists convert questions into "yes/no" tasks that can be operationalized, allowing them to be tracked as part of standard product release workflows.

### 3.3 Adopt Ethics Checklists

While professional codes define required _ethical behavior_ from practitioners, they [have known limitations](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) for implementation, particularly in large-scale projects. In [Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md), experts instead advocate for ethics checklists that can **connect principles to practices** in more deterministic and actionable ways.

Examples include:

* [Deon](https://deon.drivendata.org/) - a general-purpose data ethics checklist created from [industry recommendations](https://deon.drivendata.org/#checklist-citations) with a command-line tool for easy integration.

* [Privacy Audit Checklist](https://cyber.harvard.edu/ecommerce/privacyaudit.html) - provides general guidance for information handling practices from legal and social exposure perspectives.

* [AI Fairness Checklist](https://www.microsoft.com/en-us/research/project/ai-fairness-checklist/) - created by AI practitioners to support the adoption and integration of fairness checks into AI development cycles.

* [22 questions for ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429) - a more open-ended framework, structured for initial exploration of ethical issues in design, implementation, and organizational contexts.

Checklists convert questions into "yes/no" tasks that can be tracked and validated before product release. Tools like [deon](https://deon.drivendata.org/) make this frictionless, creating default checklists aligned to [industry recommendations](https://deon.drivendata.org/#checklist-citations) and enabling users to customize and integrate them into workflows using a command-line tool. Deon also provides [real-world examples](https://deon.drivendata.org/examples/) of ethical challenges to provide context for these decisions.
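To make the idea of "yes/no" tasks tracked before release concrete, here is a minimal Python sketch of a release gate. It is not deon's API: `ChecklistItem` and `release_blockers` are hypothetical names, and the items paraphrase questions from this lesson. In a real project, the answers might be kept in a checklist file under version control and checked as part of the release workflow.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    section: str
    question: str
    done: bool = False


def release_blockers(checklist):
    """Return the items not yet checked off; an empty list means the gate passes."""
    return [item for item in checklist if not item.done]


# Hypothetical checklist items paraphrasing questions from this lesson.
checklist = [
    ChecklistItem("Data collection", "Did users give informed consent for data capture?", True),
    ChecklistItem("Data storage", "Is personal data secured against hacks and leaks?", True),
    ChecklistItem("Modeling", "Did we evaluate model accuracy for diverse subgroups?"),
]

blockers = release_blockers(checklist)
for item in blockers:
    print(f"BLOCKED [{item.section}] {item.question}")
print("release allowed" if not blockers else "release blocked")
```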


### 3.4 Track Ethics Compliance

Ethics is about defining shared values and doing the right thing _voluntarily_. **Compliance** is about _following the law_ if and where defined. **Governance** broadly covers all the ways in which organizations operate to enforce ethical principles and comply with established laws.


Today, governance takes two forms within organizations. First, it's about defining **ethical AI** principles and establishing practices to operationalize adoption across all AI-related projects in the organization. Second, it's about complying with all government-mandated **data protection regulations** for regions it operates in.

Companies are creating their own ethics frameworks (e.g., [Microsoft](https://www.microsoft.com/en-us/ai/responsible-ai), [IBM](https://www.ibm.com/cloud/learn/ai-ethics), [Google](https://ai.google/principles), [Facebook](https://ai.facebook.com/blog/facebooks-five-pillars-of-responsible-ai/), [Accenture](https://www.accenture.com/_acnmedia/PDF-149/Accenture-Responsible-AI-Final.pdf#zoom=50)) for governance, while state and national governments tend to focus on regulations that protect the data privacy and rights of their citizens.


Here are some landmark data privacy regulations to know:

* `1974`, [US Privacy Act](https://www.justice.gov/opcl/privacy-act-1974) - regulates _federal govt._ collection, use and disclosure of personal information.


* `1996`, [US Health Insurance Portability & Accountability Act (HIPAA)](https://www.cdc.gov/phlp/publications/topic/hipaa.html) - protects personal health data.

* `1998`, [US Children's Online Privacy Protection Act (COPPA)](https://www.ftc.gov/enforcement/rules/rulemaking-regulatory-reform-proceedings/childrens-online-privacy-protection-rule) - protects data privacy of children under 13.

* `2018`, [General Data Protection Regulation (GDPR)](https://gdpr-info.eu/) - provides user rights, data protection and privacy.

* `2018`, [California Consumer Privacy Act (CCPA)](https://www.oag.ca.gov/privacy/ccpa) gives consumers more _rights_ over their personal data.



In Aug 2021, China passed the [Personal Information Protection Law](https://www.reuters.com/world/china/china-passes-new-personal-data-privacy-law-take-effect-nov-1-2021-08-20/) (to go into effect Nov 1) which, with its Data Security Law, will create one of the strongest online data privacy regulations in the world.

> 🚨 The European Union's GDPR (General Data Protection Regulation) remains one of the most influential data privacy regulations today. Did you know it also defines [8 user rights](https://www.freeprivacypolicy.com/blog/8-user-rights-gdpr) to protect citizens' digital privacy and personal data? Learn about what these are, and why they matter.

### 3.5 Establish Ethics Culture



Note that there remains an intangible gap between _compliance_ (doing enough to meet "the letter of the law") and addressing [systemic issues](https://www.coursera.org/learn/data-science-ethics/home/week/4) (like ossification, information asymmetry, and distributional unfairness) that can speed up the weaponization of AI.

The latter requires [collaborative approaches to defining ethics cultures](https://towardsdatascience.com/why-ai-ethics-requires-a-culture-driven-approach-26f451afa29f) that build emotional connections and consistent shared values _across organizations_ in the industry. This calls for more [formalized data ethics cultures](https://www.codeforamerica.org/news/formalizing-an-ethical-data-culture/) in organizations, allowing _anyone_ to [pull the Andon cord](https://en.wikipedia.org/wiki/Andon_(manufacturing)) (to raise ethics concerns early in the process) and making _ethical assessments_ (e.g., in hiring) a core criterion for team formation in AI projects.

Courses and books help with understanding core ethics concepts and challenges, while case studies and tools help with applied ethics practices in real-world contexts. Here are a few resources to start with.

---

# Assignment

* [Machine Learning For Beginners](https://github.com/microsoft/ML-For-Beginners/blob/main/1-Introduction/3-fairness/README.md) - lesson on Fairness, from Microsoft.

* [Principles of Responsible AI](https://docs.microsoft.com/en-us/learn/modules/responsible-ai-principles/) - free learning path from Microsoft Learn.

* [Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964) - O'Reilly EBook (M. Loukides, H. Mason et al.)

* [Data Science Ethics](https://www.coursera.org/learn/data-science-ethics#syllabus) - online course from the University of Michigan.

* [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - case studies from the University of Texas.


| ![Defining Data sketchnote](../../sketchnotes/03-DefiningData.png)|
|:---:|
|Defining Data - _Sketchnote by [@nitya](https://twitter.com/nitya)_ |

Data are facts, information, observations, and measurements that are used to make discoveries and to support informed decisions. A data point is a single unit of data within a dataset, which is a collection of data points. Datasets may come in different formats and structures, usually depending on their source, or where the data came from. For example, a company's monthly earnings might be in a spreadsheet, but hourly heart rate data from a smartwatch may be in [JSON](https://stackoverflow.com/a/383699) format. It's common for data scientists to work with different types of data within a dataset.
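To see how the two formats in the example above differ in practice, here is a small Python sketch using only the standard library: a spreadsheet-style CSV of monthly earnings and a nested JSON document of heart rate readings. Both samples, including the field names, are made up for illustration.

```python
import csv
import io
import json

# Hypothetical spreadsheet export: every row has the same columns.
earnings_csv = """month,earnings
2021-01,12000
2021-02,13500
"""

# Hypothetical smartwatch export: nested objects and lists.
heart_rate_json = """
{"device": "watch-01",
 "readings": [{"time": "08:00", "bpm": 62}, {"time": "09:00", "bpm": 75}]}
"""

# Each CSV row becomes a dict keyed by the header row.
earnings = list(csv.DictReader(io.StringIO(earnings_csv)))

# JSON parses into nested dicts and lists.
heart_rate = json.loads(heart_rate_json)

print(repr(earnings[0]["earnings"]))         # CSV values arrive as strings
print(repr(heart_rate["readings"][1]["bpm"]))  # JSON numbers arrive as ints
```

CSV values need converting before any arithmetic, while JSON keeps numbers as numbers, one small example of why data scientists handle each source differently.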

This lesson focuses on identifying and classifying data by its characteristics and its sources.

> Source: [Mika Baumister](https://unsplash.com/@mbaumi) via [Unsplash](https://unsplash.com/photos/Wpnoqo2plFA)

## How Data is Described


**Raw data** are data that have come from their source in their initial state and have not been analyzed or organized. To make sense of what is happening with a dataset, it needs to be organized into a format that can be understood by humans as well as by the technology they may use to analyze it further. The structure of a dataset describes how it's organized, and can be classified as structured, unstructured, or semi-structured. These types of structure will vary depending on the source, but will ultimately fit into one of these three categories.

### Quantitative Data

Quantitative data are numerical observations within a dataset that can typically be analyzed, measured, and used mathematically. Some examples of quantitative data are a country's population, a person's height, or a company's quarterly earnings. With some additional analysis, quantitative data could be used to discover seasonal trends of the Air Quality Index (AQI) or estimate the probability of rush hour traffic on a typical workday.
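Because quantitative data are numbers, they can be aggregated directly. The minimal Python sketch below uses made-up AQI readings (not real measurements) and computes a monthly average, a first step toward spotting a seasonal trend like the one mentioned above.

```python
from statistics import mean

# Hypothetical daily AQI readings, grouped by month (illustrative values only).
aqi_by_month = {
    "Jan": [42, 55, 48],
    "Jul": [88, 95, 102],
}

# Average each month's readings.
monthly_average = {month: mean(values) for month, values in aqi_by_month.items()}

# The month with the highest average hints at a seasonal pattern.
worst_month = max(monthly_average, key=monthly_average.get)
print(monthly_average, worst_month)
```

Qualitative values, such as a text label like "good" or "unhealthy", could not be averaged this way without first mapping them to numbers.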

A data source is the initial location where the data was generated, or where it "lives", and will vary based on how and when it was collected. Data generated by its user(s) are known as primary data, while secondary data comes from a source that has collected data for general use. For example, observations collected by a group of scientists in a rainforest would be considered primary data, and if they decide to share it with other scientists, it would be considered secondary data to those who use it.

Databases are a common source and rely on a database management system to host and maintain the data, where users use commands called queries to explore the data. Files as data sources can be audio, image, and video files as well as spreadsheets like Excel. Internet sources are a common location for hosting data, where databases as well as files can be found. Application programming interfaces, also known as APIs, allow programmers to create ways to share data with external users through the internet, while the process of web scraping extracts data from a web page. The [lessons in Working with Data](/2-Working-With-Data) focus on how to use various data sources.
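To illustrate what a query looks like, the sketch below uses Python's built-in `sqlite3` module, with an in-memory SQLite database standing in for a database management system. The table, its columns, and the rainforest observations are hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for a database management system.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE observations (site TEXT, species TEXT, n_seen INTEGER)")
connection.executemany(
    "INSERT INTO observations VALUES (?, ?, ?)",
    [("north", "toucan", 4), ("north", "sloth", 1), ("south", "toucan", 7)],
)

# A query: ask the database for the total number seen per species.
rows = connection.execute(
    "SELECT species, SUM(n_seen) FROM observations GROUP BY species ORDER BY species"
).fetchall()
print(rows)
connection.close()
```

The query describes *what* to retrieve (totals per species), and the database engine works out how to compute it.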

## Conclusion

In this lesson we have learned:

- What data is

- How data is described

- How data is classified and categorized

- Where data can be found

## 🚀 Challenge

Kaggle is an excellent source of open datasets. Use the [dataset search tool](https://www.kaggle.com/datasets) to find some datasets of interest and classify 3-5 of them using these criteria:

- Is the data quantitative or qualitative?

- Is the data structured, unstructured, or semi-structured?

This Microsoft Learn unit, titled [Classify your Data](https://docs.microsoft.com/en-us/learn/modules/choose-storage-approach-in-azure/2-classify-data), has a detailed breakdown of structured, semi-structured, and unstructured data.

Follow the prompts in this assignment to identify and classify the data with one of each of the following data types:

**Structure Types**: Structured, Semi-Structured, or Unstructured

**Value Types**: Qualitative or Quantitative

**Source Types**: Primary or Secondary

1. A company has been acquired and now has a parent company. The data scientists have received a spreadsheet of customer phone numbers from the parent company.

Structure Type:

Value Type:

Source Type:

---

2. A smart watch has been collecting heart rate data from its wearer, and the raw data is in JSON format.

Structure Type:

Value Type:

Source Type:

---

3. A workplace survey of employee morale that is stored in a CSV file.

Structure Type:

Value Type:

Source Type:

---

4. Astrophysicists are accessing a database of galaxies that has been collected by a space probe. The data contains the number of planets within each galaxy.

Structure Type:

Value Type:

Source Type:

---

5. A personal finance app uses APIs to connect to a user's financial accounts in order to calculate their net worth. Users can see all of their transactions in rows and columns, in a format that looks similar to a spreadsheet.

Structure Type:

Value Type:

Source Type:

# A Brief Introduction to Statistics and Probability

| ![Statistics and Probability sketchnote](../../sketchnotes/04-Statistics-Probability.png) |
|:---:|
| Statistics and Probability - _Sketchnote by [@nitya](https://twitter.com/nitya)_ |

Statistics and Probability Theory are two closely related areas of Mathematics that are highly relevant to Data Science. It is possible to work with data without deep knowledge of mathematics, but it is still better to know at least some basic concepts. Here we will present a short introduction that will help you get started.

**Probability** is a number between 0 and 1 that expresses how probable an **event** is. It is defined as the number of positive outcomes (those that lead to the event) divided by the total number of outcomes, given that all outcomes are equally probable. For example, when we roll a die, the probability of getting an even number is 3/6 = 0.5.
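The definition above can be checked by enumerating the outcomes directly. This is a minimal sketch in Python; the helper `probability` is a hypothetical name, and it assumes, as the definition does, that every outcome is equally probable.

```python
from fractions import Fraction

def probability(event, outcomes):
    """Classical probability: positive outcomes divided by total outcomes.

    Only valid when all outcomes are equally probable.
    """
    positive = [o for o in outcomes if event(o)]
    return Fraction(len(positive), len(outcomes))

# All equally probable outcomes of rolling a fair six-sided die
die = [1, 2, 3, 4, 5, 6]

p_even = probability(lambda x: x % 2 == 0, die)
print(p_even, float(p_even))  # 1/2 0.5
```

Using `Fraction` keeps the result exact (3/6 is reduced to 1/2) instead of introducing floating-point rounding.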

When we talk about events, we use **random variables**. For example, the random variable that represents the number obtained when rolling a die would take values from 1 to 6. The set of numbers from 1 to 6 is called the **sample space**. We can talk about the probability of a random variable taking a certain value, for example P(X=3)=1/6.
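Probabilities such as P(X=3)=1/6 can also be estimated by simulation: roll a virtual die many times and count how often the value appears. A minimal sketch, assuming a fair die modeled with Python's `random` module (`estimate_probability` is a hypothetical helper); with more rolls, the estimate tends to get closer to 1/6.

```python
import random

def estimate_probability(value, n_rolls=60_000, seed=0):
    """Estimate P(X = value) for a fair die by simulating n_rolls rolls."""
    rng = random.Random(seed)      # seeded so the result is reproducible
    sample_space = range(1, 7)     # possible values of X: 1..6
    hits = sum(1 for _ in range(n_rolls) if rng.choice(sample_space) == value)
    return hits / n_rolls

print(estimate_probability(3))  # close to 1/6 (about 0.167)
```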

The random variable in the previous example is called **discrete**, because it has a countable sample space, i.e. there are separate values that can be enumerated. There are cases when the sample space is a range of real numbers, or the whole set of real numbers. Such variables are called **continuous**. A good example is the time when the bus arrives.

## Probability Distribution

A continuous analog of the uniform distribution is called **continuous uniform**, and it is defined on a finite interval. The probability that the value X falls into an interval of length l is proportional to l, and it reaches 1 when the interval covers the whole range of possible values.
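A small sketch of this property, assuming a hypothetical variable X that is uniform on [a, b] (for example, a bus arrival time somewhere in a 10-minute window): the probability of an interval is the length of its overlap with [a, b] divided by b - a. The Monte Carlo check uses a seeded `random.Random` so the run is reproducible; `uniform_interval_probability` is a hypothetical helper name.

```python
import random

def uniform_interval_probability(lo, hi, a, b):
    """P(lo <= X <= hi) for X uniform on [a, b]: overlap length divided by (b - a)."""
    overlap = max(0.0, min(hi, b) - max(lo, a))
    return overlap / (b - a)

# Hypothetical example: a bus arrives uniformly at random within a 10-minute window
a, b = 0.0, 10.0
print(uniform_interval_probability(2.0, 5.0, a, b))   # 0.3 (interval of length l = 3)
print(uniform_interval_probability(0.0, 10.0, a, b))  # 1.0 (the whole range)

# Monte Carlo check: the fraction of samples landing in [2, 5] should be close to 0.3
rng = random.Random(42)
n = 100_000
hits = sum(1 for _ in range(n) if 2.0 <= rng.uniform(a, b) <= 5.0)
print(hits / n)
```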

Another important distribution is the **normal distribution**, which we will talk about in more detail below.

Here we also compute the **inter-quartile range** IQR = Q3 - Q1, and so-called **outliers**: values that lie outside the boundaries [Q1 - 1.5*IQR, Q3 + 1.5*IQR].
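The outlier rule can be sketched with Python's standard `statistics` module. This is a minimal sketch: `iqr_outliers` is a hypothetical helper, the weights are made-up values (not taken from the MLB dataset), and quartiles are computed with `method="inclusive"`, one of several common conventions, so other tools may give slightly different quartiles on the same data.

```python
import statistics

def iqr_outliers(values):
    """Return (Q1, Q3, IQR, outliers) using the 1.5*IQR rule."""
    # "inclusive" treats the minimum and maximum as the 0th and 100th percentiles
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low or v > high]
    return q1, q3, iqr, outliers

# Hypothetical weights, chosen so that one value is an outlier
weights = [180, 185, 185, 190, 195, 200, 205, 210, 290]
print(iqr_outliers(weights))  # (185.0, 205.0, 20.0, [290])
```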

For a finite distribution that contains a small number of possible values, a good "typical" value is the one that appears most frequently, which is called the **mode**. It is often applied to categorical data, such as colors. Consider a situation where we have two groups of people: some who strongly prefer red, and others who prefer blue. If we code colors by numbers, the mean value for a favorite color would be somewhere in the orange-green spectrum, which does not indicate the actual preference of either group. However, the mode would be either one of the colors, or both colors if the number of people voting for them is equal (in this case we call the sample **multimodal**).
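The color example can be reproduced in a few lines. A minimal sketch, assuming a hypothetical numeric coding of colors along the spectrum (red=0 through blue=4) and a made-up survey with equal numbers of red and blue votes; `statistics.multimode` returns every value that ties for the highest count.

```python
from statistics import mean, multimode

# Hypothetical coding of colors as numbers along the spectrum
color_code = {"red": 0, "orange": 1, "yellow": 2, "green": 3, "blue": 4}
code_to_color = {v: k for k, v in color_code.items()}

# Hypothetical survey: one group strongly prefers red, the other blue
votes = ["red"] * 5 + ["blue"] * 5

avg = mean(color_code[c] for c in votes)
print(code_to_color[round(avg)])  # yellow: the mean lands on a color nobody chose
print(multimode(votes))           # ['red', 'blue']: the sample is multimodal
```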

## Real-world Data

When we analyze data from real life, it often does not consist of random variables as such, in the sense that we do not perform experiments with unknown results. For example, consider a team of baseball players and their body data, such as height, weight and age. Those numbers are not exactly random, but we can still apply the same mathematical concepts. For example, a sequence of people's weights can be considered a sequence of values drawn from some random variable. Below is the sequence of weights of actual baseball players from [Major League Baseball](http://mlb.mlb.com/index.jsp), taken from [this dataset](http://wiki.stat.ucla.edu/socr/index.php/SOCR_Data_MLB_HeightsWeights) (for your convenience, only the first 20 values are shown):

> **Note**: To see the example of working with this dataset, have a look at the [accompanying notebook](notebook.ipynb). There are also a number of challenges throughout this lesson, and you may complete them by adding some code to that notebook. If you are not sure how to operate on data, do not worry - we will come back to working with data using Python at a later time. If you do not know how to run code in Jupyter Notebook, have a look at [this article](https://soshnikov.com/education/how-to-execute-notebooks-from-github/).

Here is the box plot showing mean, median and quartiles for our data:

> When working with real-world data, we assume that all data points are samples drawn from some probability distribution. This assumption allows us to apply machine learning techniques and build working predictive models.

To see what the distribution of our data is, we can plot a graph called a **histogram**. The X-axis contains a number of different weight intervals (so-called **bins**), and the vertical axis shows the number of times a sample of our random variable fell inside a given interval.
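What a histogram counts can be sketched in plain Python without a plotting library. This is a minimal sketch under stated assumptions: the weights are synthetic values drawn from `NormalDist(200, 20)` (hypothetical parameters, not the MLB dataset), the bins are equal-width, and the bars are drawn as rows of `#` characters instead of a real chart.

```python
from statistics import NormalDist

def histogram(values, bins, lo, hi):
    """Count how many values fall into each of `bins` equal-width intervals on [lo, hi]."""
    width = (hi - lo) / bins
    counts = [0] * bins
    for v in values:
        if lo <= v <= hi:                  # values outside [lo, hi] are ignored
            # the right edge `hi` is counted in the last bin
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
    return counts

# Hypothetical synthetic weights (mean 200, std 20), NOT the MLB dataset
weights = NormalDist(mu=200, sigma=20).samples(1000, seed=0)

lo, hi, bins = 140, 260, 6
counts = histogram(weights, bins, lo, hi)
width = (hi - lo) / bins
for i, c in enumerate(counts):
    left = lo + i * width
    print(f"{left:5.0f}-{left + width:5.0f} | {'#' * (c // 10)}")
```

The middle bins collect most of the samples, which is the bell shape discussed below.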

From this histogram you can see that all values are centered around a certain mean weight, and the further we go from that weight, the fewer weights of that value are encountered. That is, it is very improbable that the weight of a baseball player would be very different from the mean weight. The variance of weights shows the extent to which weights are likely to differ from the mean.
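Variance is the average squared deviation from the mean, so two samples with the same mean can have very different variances. A minimal sketch with two made-up samples (hypothetical values, not real weights); `variance` is a hypothetical helper that computes the population variance by hand, and `statistics.pstdev` from the standard library gives its square root, the standard deviation.

```python
from statistics import mean, pstdev

def variance(values):
    """Population variance: the average squared deviation from the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values) / len(values)

# Two hypothetical samples with the same mean (200) but different spread
narrow = [195, 198, 200, 202, 205]
wide = [170, 185, 200, 215, 230]

print(variance(narrow), variance(wide))  # 11.6 450.0
# The standard deviation is the square root of the variance, in the original units
print(pstdev(narrow), pstdev(wide))
```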

> If we take weights of other people, not from the baseball league, the distribution is likely to be different. The shape of the distribution may stay the same, but the mean and variance would change. So, if we train our model on baseball players, it is likely to give wrong results when applied to students of a university, because the underlying distribution is different.

## Normal Distribution

The distribution of weights that we have seen above is very typical, and many measurements from the real world follow the same type of distribution, but with different mean and variance. This distribution is called the **normal distribution**, and it plays a very important role in statistics.

Using the normal distribution is an appropriate way to generate random weights of potential baseball players. Once we know the mean weight `mean` and the standard deviation `std`, we can generate 1000 weight samples in the following way:

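```python
import numpy as np

# `mean` and `std` are the mean and standard deviation of the observed weights,
# computed beforehand; 1000 is the number of samples to draw
samples = np.random.normal(mean, std, 1000)
```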

It goes beyond our short intro to discuss in detail how those confidence intervals are calculated. Some more details can be found [on Wikipedia](https://en.wikipedia.org/wiki/Confidence_interval). In short, we define the distribution of the computed sample mean relative to the true mean of the population, which is called the **Student distribution**.
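A rough sketch of the idea, under a loudly stated simplification: for large samples the Student distribution is very close to the normal distribution, so this example uses the standard library's `NormalDist` quantile in place of the Student quantile. For small samples, a Student quantile (for example from `scipy.stats.t`) should be used instead. The helper `mean_confidence_interval` is a hypothetical name, and the data are synthetic values, not the MLB dataset.

```python
from statistics import NormalDist, fmean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean.

    Uses the normal quantile instead of the Student quantile, which is only
    a good approximation when the sample is large.
    """
    n = len(sample)
    m = fmean(sample)
    se = stdev(sample) / n ** 0.5                    # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)   # about 1.96 for 95%
    return m - z * se, m + z * se

# Hypothetical data: 500 synthetic weights, NOT the MLB dataset
sample = NormalDist(mu=200, sigma=20).samples(500, seed=1)
low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean weight: [{low:.1f}, {high:.1f}]")
```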

> **Interesting fact**: The Student distribution takes its name from the pseudonym "Student", under which mathematician William Sealy Gosset published his paper. He worked in the Guinness brewery, and, according to one of the versions, his employer did not want the general public to know that they were using statistical tests to determine the quality of raw materials.