# Chat project

Mradi huu wa gumzo unaonyesha jinsi ya kujenga Msaidizi wa Gumzo kwa kutumia GitHub Models.

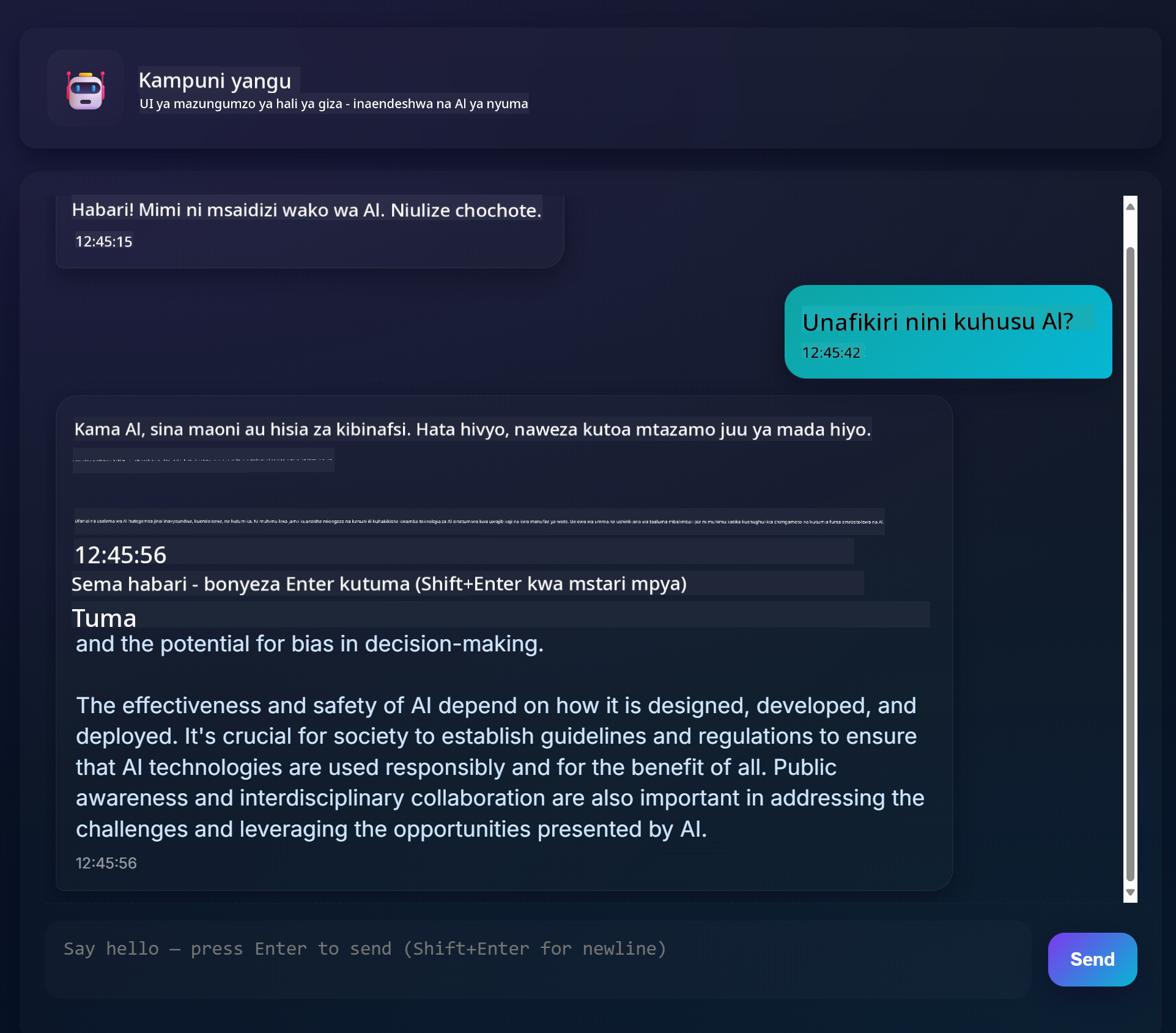

Hivi ndivyo mradi uliokamilika unavyoonekana:

Kwa muktadha, kujenga wasaidizi wa gumzo kwa kutumia AI ya kizazi ni njia nzuri ya kuanza kujifunza kuhusu AI. Kile utakachojifunza ni jinsi ya kuunganisha AI ya kizazi kwenye programu ya wavuti katika somo hili, hebu tuanze.

## Connecting to generative AI

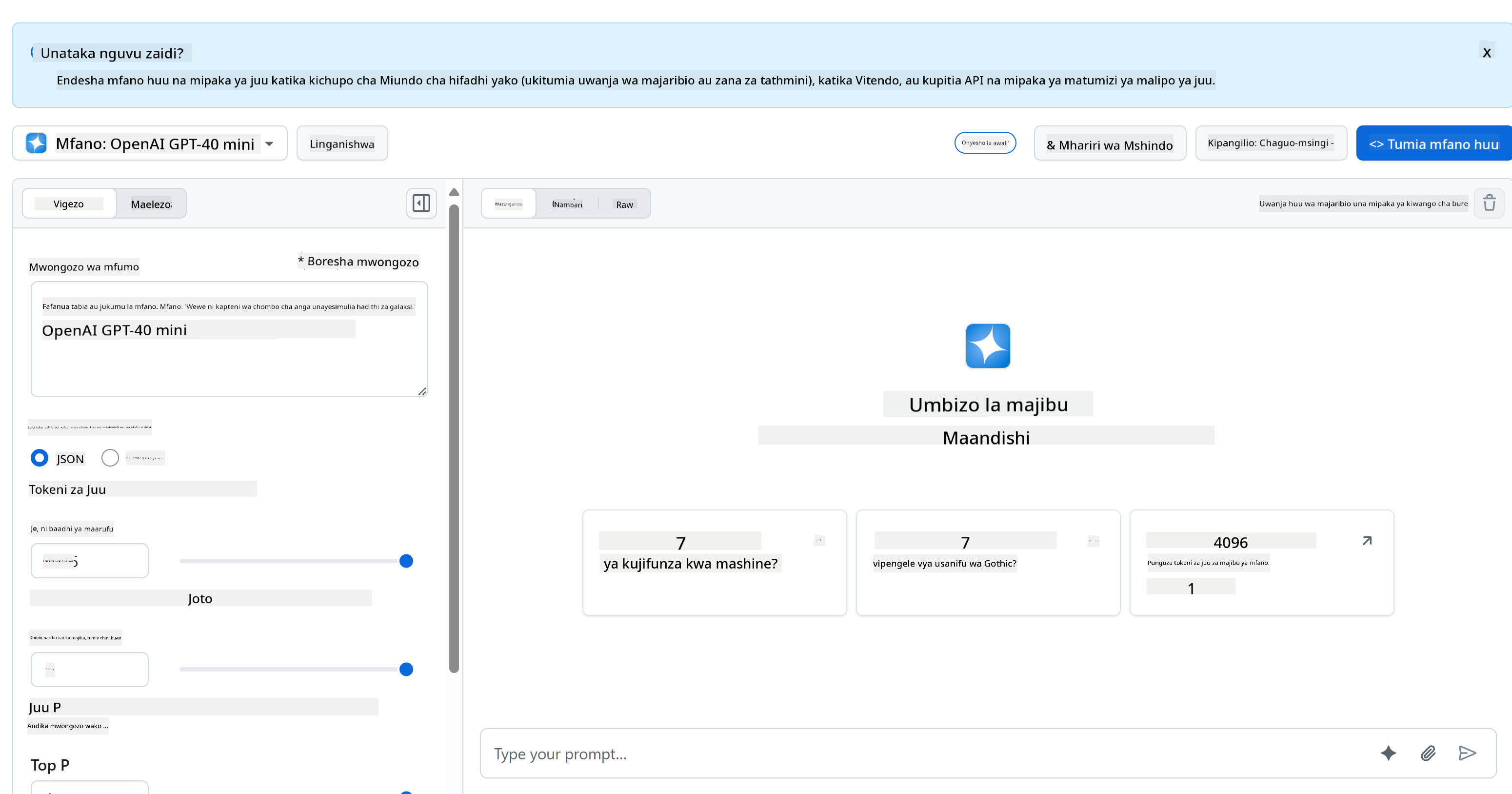

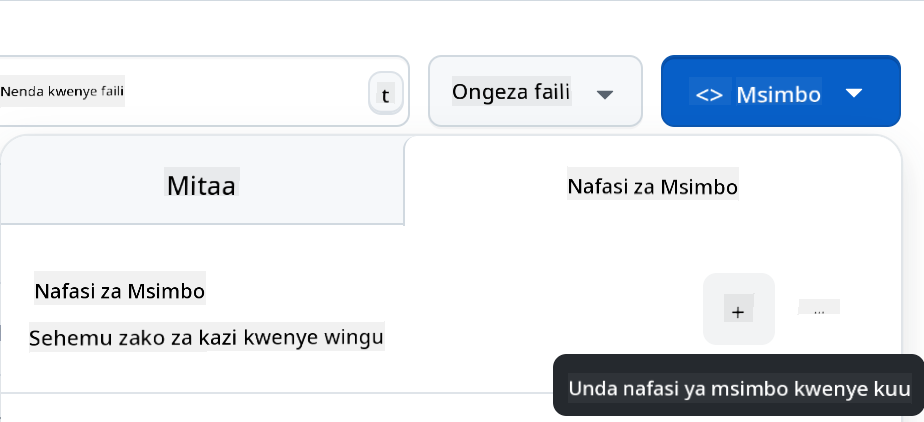

For the backend, we use GitHub Models. It's a service that lets you use AI models for free. Go to its playground and grab the code that matches your chosen backend language. Here's what it looks like in the [GitHub Models Playground](https://github.com/marketplace/models/azure-openai/gpt-4o-mini/playground).

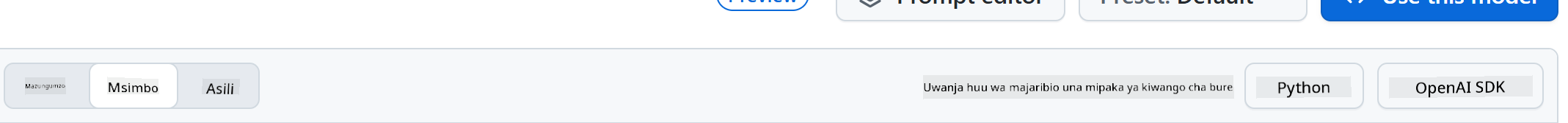

As we said, select the "Code" tab and your preferred runtime.

### Using Python

In this case we select Python, which means we pick this code:

```python
"""Run this model in Python

> pip install openai
"""
import os
from openai import OpenAI

# To authenticate with the model you will need to generate a personal access token (PAT) in your GitHub settings.
# Create your PAT token by following instructions here: https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/managing-your-personal-access-tokens
client = OpenAI(
    base_url="https://models.github.ai/inference",
    api_key=os.environ["GITHUB_TOKEN"],
)

response = client.chat.completions.create(
    messages=[
        {
            "role": "system",
            "content": "",
        },
        {
            "role": "user",
            "content": "What is the capital of France?",
        }
    ],
    model="openai/gpt-4o-mini",
    temperature=1,
    max_tokens=4096,
    top_p=1
)

print(response.choices[0].message.content)
```
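The snippet reads `GITHUB_TOKEN` straight from the environment, so a missing token surfaces as a bare `KeyError`. A minimal sketch of a friendlier check follows. The helper name `require_token` is hypothetical and not part of the playground code.

```python
import os


def require_token(env=os.environ, name="GITHUB_TOKEN"):
    """Return the token stored under `name`, or raise a readable error.

    `require_token` is a hypothetical helper for illustration only.
    """
    token = env.get(name, "").strip()
    if not token:
        raise RuntimeError(
            f"{name} is not set. Create a personal access token in your "
            "GitHub settings and export it before running this script."
        )
    return token


if __name__ == "__main__":
    # Demo with in-memory mappings so no real token is needed.
    print(require_token({"GITHUB_TOKEN": "example-token"}))
    try:
        require_token({})
    except RuntimeError as err:
        print(err)
```

With a helper like this, the client could be created with `api_key=require_token()` instead of indexing `os.environ` directly.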

Let's clean up this code a bit so it's reusable:

```python
# llm.py (reuses the imports and the `client` from the snippet above)
def call_llm(prompt: str, system_message: str):
    response = client.chat.completions.create(
        messages=[
            {
                "role": "system",
                "content": system_message,
            },
            {
                "role": "user",
                "content": prompt,
            }
        ],
        model="openai/gpt-4o-mini",
        temperature=1,
        max_tokens=4096,
        top_p=1
    )

    return response.choices[0].message.content
```

With the `call_llm` function we can now pass in a prompt and a system prompt, and the function returns the model's reply.

### Customize the AI Assistant

If you want to customize the AI assistant, you can specify how you want it to behave by filling in the system prompt like so:

```python
call_llm("Tell me about you", "You're Albert Einstein, you only know of things in the time you were alive")
```
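If you end up with several personalities, one option is to keep the system prompts in a small lookup table. The sketch below assumes a hypothetical `PERSONAS` dictionary and a `system_message_for` helper. Neither is part of the lesson code, and the persona names are examples only.

```python
# Hypothetical persona table; the names and prompts are examples only.
PERSONAS = {
    "einstein": "You're Albert Einstein, you only know of things in the time you were alive",
    "helper": "You are a helpful assistant.",
}


def system_message_for(persona, default="helper"):
    """Look up the system message for a persona, falling back to `default`."""
    return PERSONAS.get(persona.lower(), PERSONAS[default])


if __name__ == "__main__":
    print(system_message_for("Einstein"))
    print(system_message_for("unknown"))
```

The result can be passed as the second argument, for example `call_llm("Tell me about you", system_message_for("einstein"))`.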

## Expose it via a Web API

Great, the AI part is done. Let's see how to integrate it into a Web API. For the Web API we chose Flask, but any web framework should work. Here's the code:

### Using Python

```python
# api.py
from flask import Flask, request, jsonify
from llm import call_llm
from flask_cors import CORS

app = Flask(__name__)
CORS(app)  # allows all origins ("*") by default; restrict to e.g. example.com in production

@app.route("/", methods=["GET"])
def index():
    return "Welcome to this API. Call POST /hello with 'message': 'my message' as JSON payload"

@app.route("/hello", methods=["POST"])
def hello():
    # get message from request body { "message": "do this task for me" }
    data = request.get_json()
    message = data.get("message", "")

    response = call_llm(message, "You are a helpful assistant.")
    return jsonify({
        "response": response
    })

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5000)
```

Here, we create a Flask API and define two routes: the default route "/" and "/hello". The second one is meant to be used by our frontend to pass questions to the backend.

To integrate *llm.py*, here's what we need to do:

- Import the `call_llm` function:

```python
from llm import call_llm
from flask import Flask, request, jsonify
```

- Call it from the "/hello" route:

```python
@app.route("/hello", methods=["POST"])
def hello():
    # get message from request body { "message": "do this task for me" }
    data = request.get_json()
    message = data.get("message", "")

    response = call_llm(message, "You are a helpful assistant.")
    return jsonify({
        "response": response
    })
```

Here we parse the incoming request to retrieve the `message` property from the JSON body. Then we call the LLM like this:

```python
response = call_llm(message, "You are a helpful assistant.")

# return the response as JSON
return jsonify({
    "response": response
})
```
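The route above assumes the body is a JSON object containing a usable `message`. A minimal sketch of validating it first follows. `extract_message` is a hypothetical helper, not part of the lesson's *api.py*, and the error wording is only an example.

```python
def extract_message(data):
    """Return (message, error) from a parsed JSON body.

    Exactly one of the two values is None. `extract_message` is a
    hypothetical helper, not part of the lesson's api.py.
    """
    if not isinstance(data, dict):
        return None, "Request body must be a JSON object"
    message = data.get("message", "")
    if not isinstance(message, str) or not message.strip():
        return None, "'message' must be a non-empty string"
    return message.strip(), None


if __name__ == "__main__":
    print(extract_message({"message": "  hi  "}))
    print(extract_message({}))
    print(extract_message(None))
```

Inside `hello()` you could pass it the result of `request.get_json(silent=True)` and return `jsonify({"error": error}), 400` when an error comes back, so the model is only called with real input.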

Great, now we have what we need.

## Set up CORS

We should point out that we set up CORS, cross-origin resource sharing. Because our backend and frontend will run on different ports, the browser treats them as different origins, so we need to allow the frontend to call the backend.

### Using Python

There's a piece of code in *api.py* that sets this up:

```python
from flask_cors import CORS

app = Flask(__name__)
CORS(app)  # allows all origins ("*") by default; restrict to e.g. example.com in production
```

Right now it is set up to allow "*", meaning all origins. That is not very safe, so we should restrict it once we go to production.
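One way to restrict it is to read the allowed origins from configuration rather than hard-coding them. The sketch below assumes a hypothetical environment variable named `ALLOWED_ORIGINS` holding a comma-separated list. Both the variable name and the helper are illustrative.

```python
import os


def allowed_origins(env=os.environ, name="ALLOWED_ORIGINS"):
    """Parse a comma-separated list of origins from an environment variable.

    `ALLOWED_ORIGINS` is a hypothetical variable name chosen for this sketch.
    Returns an empty list when the variable is unset or blank.
    """
    raw = env.get(name, "")
    return [origin.strip() for origin in raw.split(",") if origin.strip()]


if __name__ == "__main__":
    demo_env = {"ALLOWED_ORIGINS": "http://localhost:3000, https://example.com"}
    print(allowed_origins(demo_env))
    # In api.py the list could be passed to flask-cors instead of relying on "*":
    #     CORS(app, origins=allowed_origins())
```

The `origins` argument of `CORS` accepts a single origin or a list of origins, so the parsed list can be passed as is.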

## Run your project

To run your project, you need to start the backend first and then the frontend.

### Using Python

OK, so we have *llm.py* and *api.py*. How do we get the backend running? There are two things we need to do:

- Install dependencies:

```sh
cd backend
python -m venv venv
source ./venv/bin/activate

pip install openai flask flask-cors
```

- Start the API:

```sh
python api.py
```

If you're in Codespaces, go to Ports in the bottom part of the editor, right-click the port, click "Port Visibility" and select "Public".
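Before building the frontend, it can help to check the API from a script. The sketch below uses only the standard library and assumes the API is reachable at `http://localhost:5000`. The helper names `build_hello_request` and `ask` are hypothetical.

```python
import json
import urllib.request


def build_hello_request(base_url, message):
    """Build a POST request for the /hello route with a JSON body."""
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/hello",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, message, timeout=30):
    """Send the request and return the "response" field of the JSON reply."""
    request = build_hello_request(base_url, message)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return json.loads(reply.read().decode("utf-8"))["response"]


if __name__ == "__main__":
    request = build_hello_request("http://localhost:5000", "What is the capital of France?")
    print(request.get_method(), request.full_url)
    # With the API running, uncomment the next line to actually send it:
    # print(ask("http://localhost:5000", "What is the capital of France?"))
```

This sends the same JSON payload the frontend will send, so a reply here suggests the backend side is working.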

### Work on a frontend

Now that we have an API up and running, let's create a frontend for it. We'll start with a bare minimum frontend and improve it step by step. In a *frontend* folder, create the following:

```text
backend/
frontend/
    index.html
    app.js
    styles.css
```

Let's start with **index.html**:

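```html
<!-- Minimal reconstruction: the ids match the ones app.js looks up -->
<html>
  <head>
    <link rel="stylesheet" href="styles.css">
  </head>
  <body>
    <div id="messages"></div>
    <form id="form">
      <input id="input" type="text" />
      <button type="submit">Send</button>
    </form>
    <script src="app.js"></script>
  </body>
</html>
```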

The above is the bare minimum you need to support a chat window: an area where messages are displayed, an input field where you type a message, and a button that sends your message to the backend. Next, let's look at the JavaScript in *app.js*.

**app.js**

```js
// app.js

(function(){
  // 1. set up elements
  const messages = document.getElementById("messages");
  const form = document.getElementById("form");
  const input = document.getElementById("input");

  const BASE_URL = "change this";
  const API_ENDPOINT = `${BASE_URL}/hello`;

  // 2. create a function that talks to our backend
  async function callApi(text) {
    const response = await fetch(API_ENDPOINT, {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ message: text })
    });
    const json = await response.json();
    return json.response;
  }

  // 3. add a message to the messages area
  function appendMessage(text, role) {
    const el = document.createElement("div");
    el.className = `message ${role}`;
    // textContent keeps user or model text from being interpreted as HTML
    el.textContent = text;
    messages.appendChild(el);
  }

  // 4. listen to submit events
  form.addEventListener("submit", async (e) => {
    e.preventDefault();
    // someone clicked the button in the form

    // get input
    const text = input.value.trim();

    appendMessage(text, "user");

    // reset it
    input.value = '';

    const reply = await callApi(text);

    // add to messages
    appendMessage(reply, "assistant");
  });
})();
```

Let's go through the code section by section:

- 1) Here we get a reference to all the elements we'll refer to later in the code.

- 2) In this section, we create a function that uses the built-in `fetch` method to call our backend.

- 3) `appendMessage` adds both the assistant's responses and what you type as a user.

- 4) Here we listen for the submit event, read the input field, place the user's message in the messages area, call the API, and render the reply in the messages area.

Next, let's look at styling. This is where you can get creative and make it look the way you want, but here are some suggestions:

**styles.css**

```css
.message {
    background: #222;
    box-shadow: 0 0 0 10px orange;
    padding: 10px;
    margin: 5px;
}

.message.user {
    background: blue;
}

.message.assistant {
    background: grey;
}
```

With these three classes, messages are styled differently depending on where they come from: the assistant or you as the user. If you want inspiration, have a look at `solution/frontend/styles.css`.

### Change Base URL

There is one thing we didn't set here, `BASE_URL`. Its value isn't known until your backend has started. To set it:

- If you run the API locally, set it to something like `http://localhost:5000`.

- If you run it in Codespaces, it should look something like "[name]app.github.dev".
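If you'd rather not work out the address by hand, a small script run in the backend's terminal can suggest a value. This is a sketch under an assumption: that inside a Codespace the environment variables `CODESPACE_NAME` and `GITHUB_CODESPACES_PORT_FORWARDING_DOMAIN` are set. Compare the output with the address shown in the Ports tab before relying on it. The helper name `guess_base_url` is hypothetical.

```python
import os


def guess_base_url(env=os.environ, port=5000):
    """Guess the URL the frontend should use as BASE_URL.

    Assumes that inside a Codespace the variables CODESPACE_NAME and
    GITHUB_CODESPACES_PORT_FORWARDING_DOMAIN are set; otherwise falls
    back to localhost. `guess_base_url` is a hypothetical helper.
    """
    name = env.get("CODESPACE_NAME")
    domain = env.get("GITHUB_CODESPACES_PORT_FORWARDING_DOMAIN")
    if name and domain:
        return f"https://{name}-{port}.{domain}"
    return f"http://localhost:{port}"


if __name__ == "__main__":
    print(guess_base_url())
```

Paste the printed value into `BASE_URL` in *app.js*.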

## Assignment

Create your own folder *project* with content like so:

```text
project/
  frontend/
    index.html
    app.js
    styles.css
  backend/
    ...
```

Copy the content from the instructions above, but feel free to customize it to your liking.

## Solution

[Solution](./solution/README.md)

## Bonus

Try changing the personality of the AI assistant.

### For Python

When you call `call_llm` in *api.py*, you can change the second argument to whatever you want, for example:

```python
call_llm(message, "You are Captain Picard")
```

### Frontend

Change the CSS and text to your liking as well by editing *index.html* and *styles.css*.

## Summary

Great, you've learned from scratch how to create a personal assistant using AI. We did so using GitHub Models, a backend in Python, and a frontend in HTML, CSS and JavaScript.

## Set up with Codespaces

- Navigate to: [Web Dev For Beginners repo](https://github.com/microsoft/Web-Dev-For-Beginners)

- Create from the template (make sure you're logged in to GitHub) using the button in the top-right corner.

- Once you're in your repo, create a Codespace.

This should start an environment you can now work with.

---

**Disclaimer**:

This document was translated using the AI translation service [Co-op Translator](https://github.com/Azure/co-op-translator). While we strive for accuracy, automated translations may contain errors or inaccuracies. The original document in its native language should be considered the authoritative source. For critical information, professional human translation is recommended. We are not liable for any misunderstandings or misinterpretations arising from the use of this translation.