diff --git a/README.md b/README.md

index 379550cee..2ade8a69c 100644

--- a/README.md

+++ b/README.md

@@ -1,19 +1,10 @@

([简体中文](./README_cn.md)|English)

+

+

-

-

-------------------------------------------------------------------------------------

-

@@ -28,6 +19,20 @@

+

+

+

**PaddleSpeech** is an open-source toolkit on the [PaddlePaddle](https://github.com/PaddlePaddle/Paddle) platform for a variety of critical tasks in speech and audio, with state-of-the-art and influential models.

@@ -142,47 +147,40 @@ For more synthesized audios, please refer to [PaddleSpeech Text-to-Speech sample

-### ⭐ Examples

-- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech TTS to generate virtual human voice.**

-

-

-

-- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

-

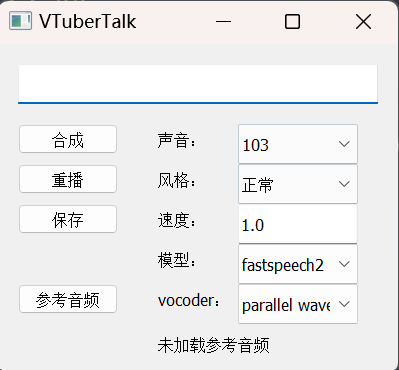

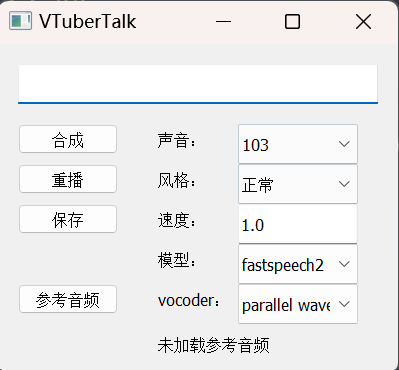

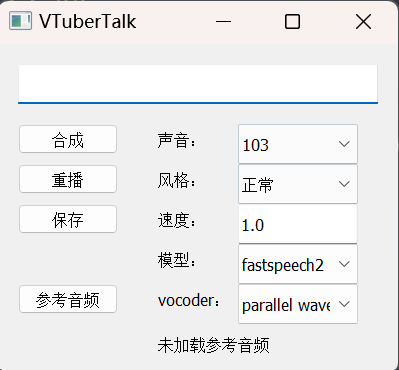

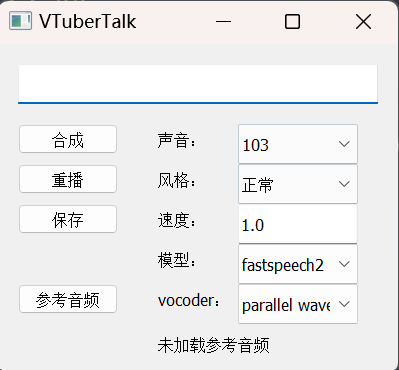

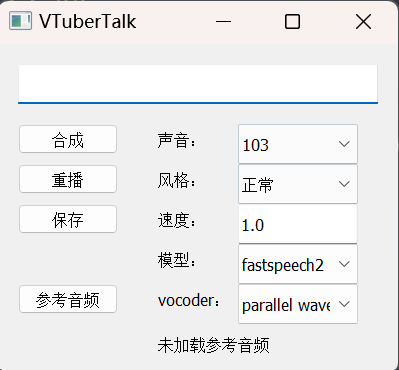

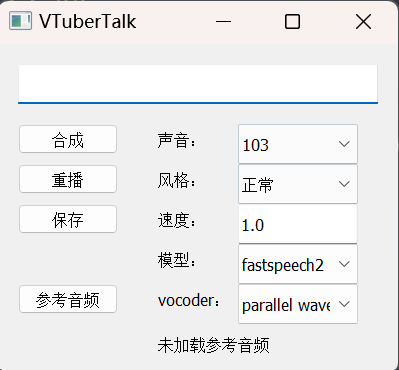

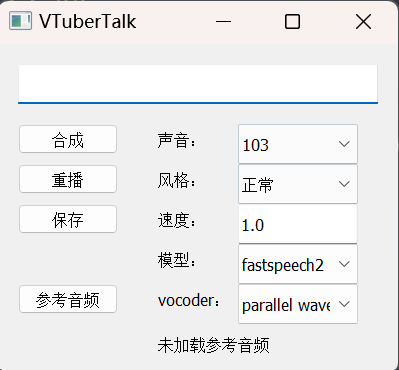

-- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

-

-

-

-

Speech-to-Text Module Type |

Dataset |

Model Type |

- Link |

+ Example |

@@ -371,7 +404,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Text-to-Speech Module Type |

Model Type |

Dataset |

- Link |

+ Example |

@@ -489,7 +522,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task |

Dataset |

Model Type |

- Link |

+ Example |

@@ -514,7 +547,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task |

Dataset |

Model Type |

- Link |

+ Example |

@@ -539,7 +572,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task |

Dataset |

Model Type |

- Link |

+ Example |

@@ -589,6 +622,21 @@ Normally, [Speech SoTA](https://paperswithcode.com/area/speech), [Audio SoTA](ht

The Text-to-Speech module was originally called [Parakeet](https://github.com/PaddlePaddle/Parakeet) and has now been merged into this repository. If you are interested in academic research on this task, please see the [TTS research overview](https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/docs/source/tts#overview). Also, [this document](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/tts/models_introduction.md) is a good guide to the pipeline components.

+

+## ⭐ Examples

+- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech TTS to generate virtual human voice.**

+

+

+

+- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

+

+- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

+

+

+

+

-

-------------------------------------------------------------------------------------

-

-  +

+  +

+

+

+  +

+

+

+

+

+------------------------------------------------------------------------------------

+

+

+

+

+

+

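**PaddleSpeech** is an open-source model library for speech built on [PaddlePaddle](https://github.com/PaddlePaddle/Paddle). It supports development of a variety of critical speech and audio tasks and includes many state-of-the-art and influential deep-learning models. Some typical application examples are shown below:

##### Speech Recognition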

@@ -57,7 +78,6 @@ from https://github.com/18F/open-source-guide/blob/18f-pages/pages/making-readme

我认为跑步最重要的就是给我带来了身体健康。 |

-

@@ -143,47 +163,39 @@ from https://github.com/18F/open-source-guide/blob/18f-pages/pages/making-readme

-### ⭐ Examples

-- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech's text-to-speech module to generate a virtual human's voice.**

-

-

-

-

+

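## Installation

We strongly recommend installing PaddleSpeech on **Linux** with *python* version *3.7* or later.

So far, **Linux** supports four features: audio classification, speech recognition, speech synthesis, and speech translation. Speech translation is not yet supported on **Mac OSX and Windows**. For installation details, see the [installation document](./docs/source/install_cn.md).

+

## Quick Start

After installation, developers can get started quickly from the command line. Change `--input` to test with your own audio or text.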

@@ -232,7 +246,7 @@ paddlespeech tts --input "你好,欢迎使用百度飞桨深度学习框架!

**Batch Processing**

```

echo -e "1 欢迎光临。\n2 谢谢惠顾。" | paddlespeech tts

-```

+```
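
The batch example above feeds one `<id> <text>` pair per line on standard input. As a minimal sketch, assuming the sentences are stored one per line in a file, a small helper can add the ids. `number_lines` and `sentences.txt` are hypothetical names used for illustration; they are not part of PaddleSpeech.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of PaddleSpeech): prefix every non-empty
# input line with a running id, producing the "<id> <text>" lines used by
# the batch example above.
number_lines() {
  awk 'NF { n += 1; print n " " $0 }'
}

# Usage sketch (assumes a file sentences.txt with one sentence per line):
#   number_lines < sentences.txt | paddlespeech tts
```

Blank lines are skipped so that the ids stay consecutive.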

**Shell Pipeline**

ASR + Punc:

@@ -269,6 +283,38 @@ paddlespeech_client cls --server_ip 127.0.0.1 --port 8090 --input input.wav

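For more command-line usage of the server features, see [demos](https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/demos/speech_server).

+

+## Quick Start with Streaming Services

+

+Developers can try the [streaming ASR](./demos/streaming_asr_server/README.md) and [streaming TTS](./demos/streaming_tts_server/README.md) services.

+

+**Start the Streaming ASR Server**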

+

+```

+paddlespeech_server start --config_file ./demos/streaming_asr_server/conf/application.yaml

+```

+

+**Access the Streaming ASR Service**

+

+```

+paddlespeech_client asr_online --server_ip 127.0.0.1 --port 8090 --input input_16k.wav

+```

+

+**Start the Streaming TTS Server**

+

+```

+paddlespeech_server start --config_file ./demos/streaming_tts_server/conf/tts_online_application.yaml

+```

+

+**Access the Streaming TTS Service**

+

+```

+paddlespeech_client tts_online --server_ip 127.0.0.1 --port 8092 --protocol http --input "您好,欢迎使用百度飞桨语音合成服务。" --output output.wav

+```

+

+For more information, see [streaming ASR](./demos/streaming_asr_server/README.md) and [streaming TTS](./demos/streaming_tts_server/README.md).

+

+
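
When the server and client commands above are scripted together, the client can fail if it runs before the server has finished loading its models. The following is a minimal sketch of one way to handle that, assuming bash and assuming the server listens on the port used in the client command (8090 for the ASR example; the actual port is set in the YAML config). `wait_for_port` is a hypothetical helper, not a PaddleSpeech command.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of PaddleSpeech): poll until HOST:PORT accepts
# a TCP connection, giving up after TRIES attempts one second apart.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i=0
  while [ "$i" -lt "$tries" ]; do
    # bash-only: opening /dev/tcp/HOST/PORT succeeds once something is listening.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage sketch, reusing the commands shown above:
#   paddlespeech_server start --config_file ./demos/streaming_asr_server/conf/application.yaml &
#   server_pid=$!
#   wait_for_port 127.0.0.1 8090 120 &&
#     paddlespeech_client asr_online --server_ip 127.0.0.1 --port 8090 --input input_16k.wav
#   kill "$server_pid"
```

Polling the port is only a readiness approximation: a listening socket does not guarantee that every model has finished loading, so a generous retry count is a reasonable default.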

## Model List

PaddleSpeech supports many mainstream models and provides pretrained models; see the [model list](./docs/source/released_model.md) for details.

@@ -282,8 +328,8 @@ PaddleSpeech 的 **语音转文本** 包含语音识别声学模型、语音识

| Speech-to-Text Module Type |

Dataset |

- Model Category |

- Link |

+ Model Type |

+ Script |

@@ -356,9 +402,9 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

| Text-to-Speech Module Type |

- Model Category |

+ Model Type |

Dataset |

- Link |

+ Script |

@@ -474,8 +520,8 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

| Task |

Dataset |

- Model Category |

- Link |

+ Model Type |

+ Script |

@@ -498,10 +544,10 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

- | Task |

- Dataset |

- Model Type |

- Link |

+ 任务 |

+ 数据集 |

+ 模型类型 |

+ 脚本 |

@@ -525,8 +571,8 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

| Task |

Dataset |

- Model Category |

- Link |

+ Model Type |

+ Script |

@@ -582,6 +628,21 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

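The Text-to-Speech module was originally called [Parakeet](https://github.com/PaddlePaddle/Parakeet) and has now been merged into this repository. If you are interested in academic research on this task, see the [TTS research overview](https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/docs/source/tts#overview). In addition, the [model introduction](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/tts/models_introduction.md) is a good guide to the speech synthesis pipeline.

+## ⭐ Examples

+- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech's text-to-speech module to generate a virtual human's voice.**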

+

+

+

+- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

+

+

+- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech's text-to-speech and speech recognition to clone voices from videos.**

+

+

+

+
