Sorry, your browser doesn't support embedded videos.

diff --git a/docs/source/install.md b/docs/source/install.md

index 205d3e600..b78fdd864 100644

--- a/docs/source/install.md

+++ b/docs/source/install.md

@@ -68,7 +68,7 @@ pip install paddlepaddle==2.4.1 -i https://mirror.baidu.com/pypi/simple

# install develop version

pip install paddlepaddle==0.0.0 -f https://www.paddlepaddle.org.cn/whl/linux/cpu-mkl/develop.html

```

-> If you encounter problem with downloading **nltk_data** while using paddlespeech, it maybe due to your poor network, we suggest you download the [nltk_data](https://paddlespeech.bj.bcebos.com/Parakeet/tools/nltk_data.tar.gz) provided by us, and extract it to your `${HOME}`.

+> If you encounter problem with downloading **nltk_data** while using paddlespeech, it maybe due to your poor network, we suggest you download the [nltk_data](https://paddlespeech.cdn.bcebos.com/Parakeet/tools/nltk_data.tar.gz) provided by us, and extract it to your `${HOME}`.

> If you fail to install paddlespeech-ctcdecoders, you only can not use deepspeech2 model inference. For other models, it doesn't matter.

diff --git a/docs/source/install_cn.md b/docs/source/install_cn.md

index ecfb22f59..c47be0500 100644

--- a/docs/source/install_cn.md

+++ b/docs/source/install_cn.md

@@ -65,7 +65,7 @@ pip install paddlepaddle==2.3.1 -i https://mirror.baidu.com/pypi/simple

# 安装 develop 版本

pip install paddlepaddle==0.0.0 -f https://www.paddlepaddle.org.cn/whl/linux/cpu-mkl/develop.html

```

-> 如果您在使用 paddlespeech 的过程中遇到关于下载 **nltk_data** 的问题,可能是您的网络不佳,我们建议您下载我们提供的 [nltk_data](https://paddlespeech.bj.bcebos.com/Parakeet/tools/nltk_data.tar.gz) 并解压缩到您的 `${HOME}` 目录下。

+> 如果您在使用 paddlespeech 的过程中遇到关于下载 **nltk_data** 的问题,可能是您的网络不佳,我们建议您下载我们提供的 [nltk_data](https://paddlespeech.cdn.bcebos.com/Parakeet/tools/nltk_data.tar.gz) 并解压缩到您的 `${HOME}` 目录下。

> 如果出现 paddlespeech-ctcdecoders 无法安装的问题,无须担心,这个只影响 deepspeech2 模型的推理,不影响其他模型的使用。

diff --git a/docs/source/released_model.md b/docs/source/released_model.md

index 87619a558..7c67685d3 100644

--- a/docs/source/released_model.md

+++ b/docs/source/released_model.md

@@ -7,34 +7,34 @@

### Speech Recognition Model

Acoustic Model | Training Data | Token-based | Size | Descriptions | CER | WER | Hours of speech | Example Link | Inference Type | static_model |

:-------------:| :------------:| :-----: | -----: | :-----: |:-----:| :-----: | :-----: | :-----: | :-----: | :-----: |

-[Ds2 Online Wenetspeech ASR0 Model](https://paddlespeech.bj.bcebos.com/s2t/wenetspeech/asr0/asr0_deepspeech2_online_wenetspeech_ckpt_1.0.4.model.tar.gz) | Wenetspeech Dataset | Char-based | 1.2 GB | 2 Conv + 5 LSTM layers | 0.152 (test\_net, w/o LM)

-

+

Sorry, your browser doesn't support embedded videos.

diff --git a/docs/source/streaming_tts_demo_video.rst b/docs/source/streaming_tts_demo_video.rst

index 3ad9ca6cf..b2dcdba2c 100644

--- a/docs/source/streaming_tts_demo_video.rst

+++ b/docs/source/streaming_tts_demo_video.rst

@@ -5,7 +5,7 @@ Streaming TTS Demo Video

-

Sorry, your browser doesn't support embedded videos.

diff --git a/docs/source/tts/README.md b/docs/source/tts/README.md

index 835db08ee..97a426be5 100644

--- a/docs/source/tts/README.md

+++ b/docs/source/tts/README.md

@@ -35,39 +35,39 @@ Check our [website](https://paddlespeech.readthedocs.io/en/latest/tts/demo.html)

### Acoustic Model

#### FastSpeech2/FastPitch

-1. [fastspeech2_nosil_baker_ckpt_0.4.zip](https://paddlespeech.bj.bcebos.com/Parakeet/fastspeech2_nosil_baker_ckpt_0.4.zip)

-2. [fastspeech2_nosil_aishell3_ckpt_0.4.zip](https://paddlespeech.bj.bcebos.com/Parakeet/fastspeech2_nosil_aishell3_ckpt_0.4.zip)

-3. [fastspeech2_nosil_ljspeech_ckpt_0.5.zip](https://paddlespeech.bj.bcebos.com/Parakeet/fastspeech2_nosil_ljspeech_ckpt_0.5.zip)

+1. [fastspeech2_nosil_baker_ckpt_0.4.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/fastspeech2_nosil_baker_ckpt_0.4.zip)

+2. [fastspeech2_nosil_aishell3_ckpt_0.4.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/fastspeech2_nosil_aishell3_ckpt_0.4.zip)

+3. [fastspeech2_nosil_ljspeech_ckpt_0.5.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/fastspeech2_nosil_ljspeech_ckpt_0.5.zip)

#### SpeedySpeech

-1. [speedyspeech_nosil_baker_ckpt_0.5.zip](https://paddlespeech.bj.bcebos.com/Parakeet/speedyspeech_nosil_baker_ckpt_0.5.zip)

+1. [speedyspeech_nosil_baker_ckpt_0.5.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/speedyspeech_nosil_baker_ckpt_0.5.zip)

#### TransformerTTS

-1. [transformer_tts_ljspeech_ckpt_0.4.zip](https://paddlespeech.bj.bcebos.com/Parakeet/transformer_tts_ljspeech_ckpt_0.4.zip)

+1. [transformer_tts_ljspeech_ckpt_0.4.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/transformer_tts_ljspeech_ckpt_0.4.zip)

#### Tacotron2

-1. [tacotron2_ljspeech_ckpt_0.3.zip](https://paddlespeech.bj.bcebos.com/Parakeet/tacotron2_ljspeech_ckpt_0.3.zip)

-2. [tacotron2_ljspeech_ckpt_0.3_alternative.zip](https://paddlespeech.bj.bcebos.com/Parakeet/tacotron2_ljspeech_ckpt_0.3_alternative.zip)

+1. [tacotron2_ljspeech_ckpt_0.3.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/tacotron2_ljspeech_ckpt_0.3.zip)

+2. [tacotron2_ljspeech_ckpt_0.3_alternative.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/tacotron2_ljspeech_ckpt_0.3_alternative.zip)

### Vocoder

#### WaveFlow

-1. [waveflow_ljspeech_ckpt_0.3.zip](https://paddlespeech.bj.bcebos.com/Parakeet/waveflow_ljspeech_ckpt_0.3.zip)

+1. [waveflow_ljspeech_ckpt_0.3.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/waveflow_ljspeech_ckpt_0.3.zip)

#### Parallel WaveGAN

-1. [pwg_baker_ckpt_0.4.zip](https://paddlespeech.bj.bcebos.com/Parakeet/pwg_baker_ckpt_0.4.zip)

-2. [pwg_ljspeech_ckpt_0.5.zip](https://paddlespeech.bj.bcebos.com/Parakeet/pwg_ljspeech_ckpt_0.5.zip)

+1. [pwg_baker_ckpt_0.4.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/pwg_baker_ckpt_0.4.zip)

+2. [pwg_ljspeech_ckpt_0.5.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/pwg_ljspeech_ckpt_0.5.zip)

### Voice Cloning

#### Tacotron2_AISHELL3

-1. [tacotron2_aishell3_ckpt_0.3.zip](https://paddlespeech.bj.bcebos.com/Parakeet/tacotron2_aishell3_ckpt_0.3.zip)

+1. [tacotron2_aishell3_ckpt_0.3.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/tacotron2_aishell3_ckpt_0.3.zip)

#### GE2E

-1. [ge2e_ckpt_0.3.zip](https://paddlespeech.bj.bcebos.com/Parakeet/ge2e_ckpt_0.3.zip)

+1. [ge2e_ckpt_0.3.zip](https://paddlespeech.cdn.bcebos.com/Parakeet/ge2e_ckpt_0.3.zip)

diff --git a/docs/source/tts/advanced_usage.md b/docs/source/tts/advanced_usage.md

index 4dd742b70..9f86689e7 100644

--- a/docs/source/tts/advanced_usage.md

+++ b/docs/source/tts/advanced_usage.md

@@ -303,7 +303,7 @@ The experimental codes in PaddleSpeech TTS are generally organized as follows:

.

├── README.md (help information)

├── conf

-│ └── default.yaml (defalut config)

+│ └── default.yaml (default config)

├── local

│ ├── preprocess.sh (script to call data preprocessing.py)

│ ├── synthesize.sh (script to call synthesis.py)

diff --git a/docs/source/tts/demo.rst b/docs/source/tts/demo.rst

index 1ae687f85..bb866e742 100644

--- a/docs/source/tts/demo.rst

+++ b/docs/source/tts/demo.rst

@@ -44,7 +44,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -52,7 +52,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -63,7 +63,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -72,7 +72,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -83,7 +83,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -91,7 +91,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -102,7 +102,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -110,7 +110,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -121,7 +121,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -129,7 +129,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -155,7 +155,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -163,7 +163,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -174,7 +174,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -182,7 +182,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -193,7 +193,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -201,7 +201,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -212,7 +212,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -220,7 +220,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -232,7 +232,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -240,7 +240,7 @@ Audio samples generated from ground-truth spectrograms with a vocoder.

Your browser does not support the audio element.

@@ -273,7 +273,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -281,7 +281,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -292,7 +292,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -300,7 +300,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -311,7 +311,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -320,7 +320,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -332,7 +332,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -341,7 +341,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -352,7 +352,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -361,7 +361,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -372,7 +372,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -381,7 +381,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -392,7 +392,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -401,7 +401,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -412,7 +412,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -421,7 +421,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -432,7 +432,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -441,7 +441,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -467,7 +467,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -475,7 +475,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -486,7 +486,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -494,7 +494,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -505,7 +505,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -513,7 +513,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -525,7 +525,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -533,7 +533,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -544,7 +544,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -552,7 +552,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -563,7 +563,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -571,7 +571,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -582,7 +582,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -590,7 +590,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -601,7 +601,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -609,7 +609,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -620,7 +620,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -628,7 +628,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -647,7 +647,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -657,7 +657,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -667,7 +667,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -678,7 +678,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -688,7 +688,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -698,7 +698,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -708,7 +708,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -718,7 +718,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -728,7 +728,7 @@ Audio samples generated by a TTS system. Text is first transformed into spectrog

Your browser does not support the audio element.

@@ -758,7 +758,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -766,7 +766,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -776,7 +776,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -784,7 +784,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -794,7 +794,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -802,7 +802,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -812,7 +812,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -820,7 +820,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -830,7 +830,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -838,7 +838,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -848,7 +848,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -856,7 +856,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -866,7 +866,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -874,7 +874,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -884,7 +884,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -892,7 +892,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -902,7 +902,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -910,7 +910,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -920,7 +920,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -928,7 +928,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -938,7 +938,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -946,7 +946,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -956,7 +956,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -964,7 +964,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -974,7 +974,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -982,7 +982,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -992,7 +992,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1000,7 +1000,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1010,7 +1010,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1018,7 +1018,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1028,7 +1028,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1036,7 +1036,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1046,7 +1046,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1054,7 +1054,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1064,7 +1064,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1072,7 +1072,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1082,7 +1082,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1090,7 +1090,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1100,7 +1100,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1108,7 +1108,7 @@ PaddleSpeech also support Multi-Speaker TTS, we provide the audio demos generate

Your browser does not support the audio element.

@@ -1144,7 +1144,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1152,7 +1152,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1160,7 +1160,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1170,7 +1170,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1178,7 +1178,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1186,7 +1186,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1196,7 +1196,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1204,7 +1204,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1212,7 +1212,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1222,7 +1222,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1230,7 +1230,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1238,7 +1238,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1248,7 +1248,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1256,7 +1256,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1264,7 +1264,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1274,7 +1274,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1282,7 +1282,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1290,7 +1290,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1300,7 +1300,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1308,7 +1308,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1316,7 +1316,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1326,7 +1326,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1334,7 +1334,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1342,7 +1342,7 @@ The duration control in FastSpeech2 can control the speed of audios will keep th

Your browser does not support the audio element.

@@ -1376,7 +1376,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1384,7 +1384,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1394,7 +1394,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1402,7 +1402,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1412,7 +1412,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1420,7 +1420,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1430,7 +1430,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1438,7 +1438,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1448,7 +1448,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1456,7 +1456,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1466,7 +1466,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1474,7 +1474,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1484,7 +1484,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1492,7 +1492,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1502,7 +1502,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1510,7 +1510,7 @@ The nomal audios are in the second column of the previous table.

Your browser does not support the audio element.

@@ -1544,7 +1544,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1552,7 +1552,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1563,7 +1563,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1571,7 +1571,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1582,7 +1582,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1590,7 +1590,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1601,7 +1601,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1609,7 +1609,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1620,7 +1620,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1628,7 +1628,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1639,7 +1639,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1647,7 +1647,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1658,7 +1658,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1666,7 +1666,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1677,7 +1677,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1685,7 +1685,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1696,7 +1696,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1704,7 +1704,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1715,7 +1715,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1723,7 +1723,7 @@ We use ``FastSpeech2`` + ``ParallelWaveGAN`` here.

Your browser does not support the audio element.

@@ -1751,7 +1751,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1770,7 +1770,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1778,7 +1778,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1786,7 +1786,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1794,7 +1794,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1805,7 +1805,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1813,7 +1813,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1821,7 +1821,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1829,7 +1829,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1840,7 +1840,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1848,7 +1848,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1856,7 +1856,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

@@ -1864,7 +1864,7 @@ When finetuning for CSMSC, we thought ``Freeze encoder`` > ``Non Frozen`` > ``Fr

Your browser does not support the audio element.

diff --git a/docs/source/tts/demo_2.rst b/docs/source/tts/demo_2.rst

index 06d0d0399..62db2c760 100644

--- a/docs/source/tts/demo_2.rst

+++ b/docs/source/tts/demo_2.rst

@@ -21,7 +21,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -29,7 +29,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -40,7 +40,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -48,7 +48,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -59,7 +59,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -67,7 +67,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -78,7 +78,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -86,7 +86,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -97,7 +97,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -105,7 +105,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -116,7 +116,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -124,7 +124,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -135,7 +135,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -143,7 +143,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -154,7 +154,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -162,7 +162,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -173,7 +173,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -181,7 +181,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -192,7 +192,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -200,7 +200,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -211,7 +211,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -219,7 +219,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -230,7 +230,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -238,7 +238,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -249,7 +249,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -257,7 +257,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -268,7 +268,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

@@ -276,7 +276,7 @@ FastSpeech2 + Parallel WaveGAN in CSMSC

Your browser does not support the audio element.

diff --git a/docs/source/tts/svs_music_score.md b/docs/source/tts/svs_music_score.md

index 9f351c001..8607ed28f 100644

--- a/docs/source/tts/svs_music_score.md

+++ b/docs/source/tts/svs_music_score.md

@@ -3,7 +3,7 @@

# 一、常见基础

## 1.1 简谱和音名(note)

-

上图从左往右的黑键音名分别是:C#/Db,D#/Db,F#/Db,G#/Ab,A#/Bb

@@ -11,20 +11,20 @@

钢琴八度音就是12345671八个音,最后一个音是高1。**遵循:全全半全全全半** 就会得到 1 2 3 4 5 6 7 (高)1 的音

-

## 1.2 十二大调

“#”表示升调

-

“b”表示降调

-

什么大调表示Do(简谱1) 这个音从哪个键开始,例如D大调,则用D这个键来表示 Do这个音。

@@ -39,7 +39,7 @@

Tempo 用于表示速度(Speed of the beat/pulse),一分钟里面有几拍(beats per mimute BPM)

-

whole note --> 4 beats

@@ -54,7 +54,7 @@ sixteenth note --> 1/4 beat

music scores 包含:note,note_dur,is_slur

-

从左上角的谱信息 *bE* 可以得出该谱子是 **降E大调**,可以对应1.2小节十二大调简谱音名对照表根据 简谱获取对应的note

@@ -111,7 +111,7 @@ music scores 包含:note,note_dur,is_slur

1

原始 opencpop 标注的 notes,note_durs,is_slurs,升F大调,起始在小字组(第3组)

-

+

@@ -119,7 +119,7 @@ music scores 包含:note,note_dur,is_slur

2

原始 opencpop 标注的 notes 和 is_slurs,note_durs 改变(从谱子获取)

-

+

@@ -127,7 +127,7 @@ music scores 包含:note,note_dur,is_slur

3

原始 opencpop 标注的 notes 去掉 rest(毛字一拍),is_slurs 和 note_durs 改变(从谱子获取)

-

+

@@ -135,7 +135,7 @@ music scores 包含:note,note_dur,is_slur

4

从谱子获取 notes,note durs,is_slurs,不含 rest(毛字一拍),起始在小字一组(第3组)

-

+

@@ -143,7 +143,7 @@ music scores 包含:note,note_dur,is_slur

5

从谱子获取 notes,note durs,is_slurs,加上 rest (毛字半拍,rest半拍),起始在小字一组(第3组)

-

+

@@ -151,7 +151,7 @@ music scores 包含:note,note_dur,is_slur

6

从谱子获取 notes, is_slurs,包含 rest,note_durs 从原始标注获取,起始在小字一组(第3组)

-

+

@@ -159,7 +159,7 @@ music scores 包含:note,note_dur,is_slur

7

从谱子获取 notes,note durs,is_slurs,不含 rest(毛字一拍),起始在小字一组(第4组)

-

+

diff --git a/docs/source/tts_demo_video.rst b/docs/source/tts_demo_video.rst

index 4f807165d..83890e572 100644

--- a/docs/source/tts_demo_video.rst

+++ b/docs/source/tts_demo_video.rst

@@ -5,7 +5,7 @@ TTS Demo Video

-

Sorry, your browser doesn't support embedded videos.

diff --git a/docs/topic/ctc/ctc_loss_speed_compare.ipynb b/docs/topic/ctc/ctc_loss_speed_compare.ipynb

index eb7a030c7..6d9187f7f 100644

--- a/docs/topic/ctc/ctc_loss_speed_compare.ipynb

+++ b/docs/topic/ctc/ctc_loss_speed_compare.ipynb

@@ -27,7 +27,7 @@

],

"source": [

"!mkdir -p ./test_data\n",

- "!test -f ./test_data/ctc_loss_compare_data.tgz || wget -P ./test_data https://paddlespeech.bj.bcebos.com/datasets/unit_test/asr/ctc_loss_compare_data.tgz\n",

+ "!test -f ./test_data/ctc_loss_compare_data.tgz || wget -P ./test_data https://paddlespeech.cdn.bcebos.com/datasets/unit_test/asr/ctc_loss_compare_data.tgz\n",

"!tar xzvf test_data/ctc_loss_compare_data.tgz -C ./test_data\n"

]

},

diff --git a/docs/topic/gan_vocoder/gan_vocoder.ipynb b/docs/topic/gan_vocoder/gan_vocoder.ipynb

index edb4eeb1d..4f53c32f7 100644

--- a/docs/topic/gan_vocoder/gan_vocoder.ipynb

+++ b/docs/topic/gan_vocoder/gan_vocoder.ipynb

@@ -156,7 +156,7 @@

"\n",

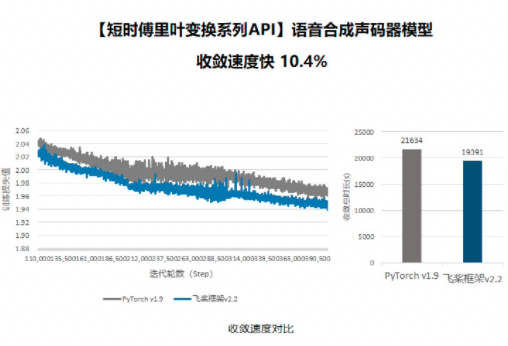

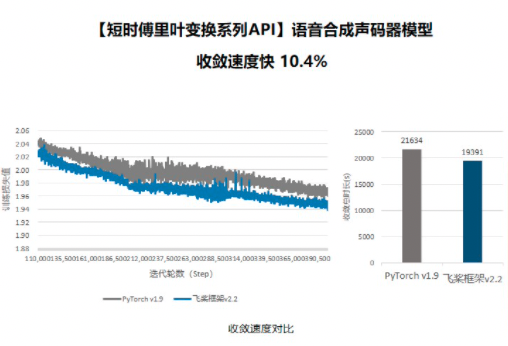

"以 `Parallel WaveGAN` 模型为例,我们复现了基于 `Pytorch` 和基于 `Paddle` 的 `Parallel WaveGAN`,并保持模型结构完全一致,在相同的实验环境下,基于 `Paddle` 的模型收敛速度比基于 `Pytorch` 的模型快 `10.4%`, 而基于 `Conv1D` 的 `stft` 实现的 Paddle 模型的收敛速度和收敛效果和收敛速度差于基于 `Pytorch` 的模型,更明显差于基于 `Paddle` 的模型,所以可以认为 `paddle.signal.stft` 算子大幅度提升了 `Parallel WaveGAN` 模型的效果。\n",

"\n",

- "\n"

+ "\n"

]

}

],

diff --git a/docs/tutorial/asr/tutorial_deepspeech2.ipynb b/docs/tutorial/asr/tutorial_deepspeech2.ipynb

index 34c0090ac..c40ada9fa 100644

--- a/docs/tutorial/asr/tutorial_deepspeech2.ipynb

+++ b/docs/tutorial/asr/tutorial_deepspeech2.ipynb

@@ -23,7 +23,7 @@

"outputs": [],

"source": [

"# 下载demo视频\n",

- "!test -f work/source/subtitle_demo1.mp4 || wget https://paddlespeech.bj.bcebos.com/demos/asr_demos/subtitle_demo1.mp4 -P work/source/"

+ "!test -f work/source/subtitle_demo1.mp4 || wget https://paddlespeech.cdn.bcebos.com/demos/asr_demos/subtitle_demo1.mp4 -P work/source/"

]

},

{

@@ -311,7 +311,7 @@

},

"outputs": [],

"source": [

- "!test -f ds2.model.tar.gz || wget -nc https://paddlespeech.bj.bcebos.com/s2t/aishell/asr0/ds2.model.tar.gz\n",

+ "!test -f ds2.model.tar.gz || wget -nc https://paddlespeech.cdn.bcebos.com/s2t/aishell/asr0/ds2.model.tar.gz\n",

"!tar xzvf ds2.model.tar.gz"

]

},

@@ -329,7 +329,7 @@

"!mkdir -p data/lm\n",

"!test -f ./data/lm/zh_giga.no_cna_cmn.prune01244.klm || wget -nc https://deepspeech.bj.bcebos.com/zh_lm/zh_giga.no_cna_cmn.prune01244.klm -P data/lm\n",

"# 获取用于预测的音频文件\n",

- "!test -f ./data/demo_01_03.wav || wget -nc https://paddlespeech.bj.bcebos.com/datasets/single_wav/zh/demo_01_03.wav -P ./data/"

+ "!test -f ./data/demo_01_03.wav || wget -nc https://paddlespeech.cdn.bcebos.com/datasets/single_wav/zh/demo_01_03.wav -P ./data/"

]

},

{

diff --git a/docs/tutorial/asr/tutorial_transformer.ipynb b/docs/tutorial/asr/tutorial_transformer.ipynb

index 77aed4bf8..bcfb57d53 100644

--- a/docs/tutorial/asr/tutorial_transformer.ipynb

+++ b/docs/tutorial/asr/tutorial_transformer.ipynb

@@ -22,7 +22,7 @@

"outputs": [],

"source": [

"# 下载demo视频\n",

- "!test -f work/source/subtitle_demo1.mp4 || wget -c https://paddlespeech.bj.bcebos.com/demos/asr_demos/subtitle_demo1.mp4 -P work/source/"

+ "!test -f work/source/subtitle_demo1.mp4 || wget -c https://paddlespeech.cdn.bcebos.com/demos/asr_demos/subtitle_demo1.mp4 -P work/source/"

]

},

{

@@ -181,11 +181,11 @@

"outputs": [],

"source": [

"# 获取模型\n",

- "!test -f transformer.model.tar.gz || wget -nc https://paddlespeech.bj.bcebos.com/s2t/aishell/asr1/transformer.model.tar.gz\n",

+ "!test -f transformer.model.tar.gz || wget -nc https://paddlespeech.cdn.bcebos.com/s2t/aishell/asr1/transformer.model.tar.gz\n",

"!tar xzvf transformer.model.tar.gz\n",

"\n",

"# 获取用于预测的音频文件\n",

- "!test -f ./data/demo_01_03.wav || wget -nc https://paddlespeech.bj.bcebos.com/datasets/single_wav/zh/demo_01_03.wav -P ./data/"

+ "!test -f ./data/demo_01_03.wav || wget -nc https://paddlespeech.cdn.bcebos.com/datasets/single_wav/zh/demo_01_03.wav -P ./data/"

]

},

{

diff --git a/docs/tutorial/cls/cls_tutorial.ipynb b/docs/tutorial/cls/cls_tutorial.ipynb

index 3cee64991..c976a2c63 100644

--- a/docs/tutorial/cls/cls_tutorial.ipynb

+++ b/docs/tutorial/cls/cls_tutorial.ipynb

@@ -35,7 +35,7 @@

"source": [

"%%HTML\n",

"\n",

- " \n",

+ " \n",

" 图片来源:https://ww2.mathworks.cn/help/audio/ref/mfcc.html \n",

"\n",

"` and

+ :ref:`"soundfile" backend with the new interface`.

+

+ :ivar int sample_rate: Sample rate

+ :ivar int num_frames: The number of frames

+ :ivar int num_channels: The number of channels

+ :ivar int bits_per_sample: The number of bits per sample. This is 0 for lossy formats,

+ or when it cannot be accurately inferred.

+ :ivar str encoding: Audio encoding

+ The values encoding can take are one of the following:

+

+ * ``PCM_S``: Signed integer linear PCM

+ * ``PCM_U``: Unsigned integer linear PCM

+ * ``PCM_F``: Floating point linear PCM

+ * ``FLAC``: Flac, Free Lossless Audio Codec

+ * ``ULAW``: Mu-law

+ * ``ALAW``: A-law

+ * ``MP3`` : MP3, MPEG-1 Audio Layer III

+ * ``VORBIS``: OGG Vorbis

+ * ``AMR_WB``: Adaptive Multi-Rate

+ * ``AMR_NB``: Adaptive Multi-Rate Wideband

+ * ``OPUS``: Opus

+ * ``HTK``: Single channel 16-bit PCM

+ * ``UNKNOWN`` : None of above

+ """

+

+ def __init__(

+ self,

+ sample_rate: int,

+ num_frames: int,

+ num_channels: int,

+ bits_per_sample: int,

+ encoding: str, ):

+ self.sample_rate = sample_rate

+ self.num_frames = num_frames

+ self.num_channels = num_channels

+ self.bits_per_sample = bits_per_sample

+ self.encoding = encoding

+

+ def __str__(self):

+ return (f"AudioMetaData("

+ f"sample_rate={self.sample_rate}, "

+ f"num_frames={self.num_frames}, "

+ f"num_channels={self.num_channels}, "

+ f"bits_per_sample={self.bits_per_sample}, "

+ f"encoding={self.encoding}"

+ f")")

diff --git a/paddlespeech/audio/backends/soundfile_backend.py b/paddlespeech/audio/backends/soundfile_backend.py

new file mode 100644

index 000000000..7611fd297

--- /dev/null

+++ b/paddlespeech/audio/backends/soundfile_backend.py

@@ -0,0 +1,677 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import os

+import warnings

+from typing import Optional

+from typing import Tuple

+

+import numpy as np

+import paddle

+import resampy

+import soundfile

+from scipy.io import wavfile

+

+from ..utils import depth_convert

+from ..utils import ParameterError

+from .common import AudioInfo

+

+__all__ = [

+ 'resample',

+ 'to_mono',

+ 'normalize',

+ 'save',

+ 'soundfile_save',

+ 'load',

+ 'soundfile_load',

+ 'info',

+]

+NORMALMIZE_TYPES = ['linear', 'gaussian']

+MERGE_TYPES = ['ch0', 'ch1', 'random', 'average']

+RESAMPLE_MODES = ['kaiser_best', 'kaiser_fast']

+EPS = 1e-8

+

+

+def resample(y: np.ndarray,

+ src_sr: int,

+ target_sr: int,

+ mode: str='kaiser_fast') -> np.ndarray:

+ """Audio resampling.

+

+ Args:

+ y (np.ndarray): Input waveform array in 1D or 2D.

+ src_sr (int): Source sample rate.

+ target_sr (int): Target sample rate.

+ mode (str, optional): The resampling filter to use. Defaults to 'kaiser_fast'.

+

+ Returns:

+ np.ndarray: `y` resampled to `target_sr`

+ """

+

+ if mode == 'kaiser_best':

+ warnings.warn(

+ f'Using resampy in kaiser_best to {src_sr}=>{target_sr}. This function is pretty slow, \

+ we recommend the mode kaiser_fast in large scale audio training')

+

+ if not isinstance(y, np.ndarray):

+ raise ParameterError(

+ 'Only support numpy np.ndarray, but received y in {type(y)}')

+

+ if mode not in RESAMPLE_MODES:

+ raise ParameterError(f'resample mode must in {RESAMPLE_MODES}')

+

+ return resampy.resample(y, src_sr, target_sr, filter=mode)

+

+

+def to_mono(y: np.ndarray, merge_type: str='average') -> np.ndarray:

+ """Convert sterior audio to mono.

+

+ Args:

+ y (np.ndarray): Input waveform array in 1D or 2D.

+ merge_type (str, optional): Merge type to generate mono waveform. Defaults to 'average'.

+

+ Returns:

+ np.ndarray: `y` with mono channel.

+ """

+

+ if merge_type not in MERGE_TYPES:

+ raise ParameterError(

+ f'Unsupported merge type {merge_type}, available types are {MERGE_TYPES}'

+ )

+ if y.ndim > 2:

+ raise ParameterError(

+ f'Unsupported audio array, y.ndim > 2, the shape is {y.shape}')

+ if y.ndim == 1: # nothing to merge

+ return y

+

+ if merge_type == 'ch0':

+ return y[0]

+ if merge_type == 'ch1':

+ return y[1]

+ if merge_type == 'random':

+ return y[np.random.randint(0, 2)]

+

+ # need to do averaging according to dtype

+

+ if y.dtype == 'float32':

+ y_out = (y[0] + y[1]) * 0.5

+ elif y.dtype == 'int16':

+ y_out = y.astype('int32')

+ y_out = (y_out[0] + y_out[1]) // 2

+ y_out = np.clip(y_out, np.iinfo(y.dtype).min,

+ np.iinfo(y.dtype).max).astype(y.dtype)

+

+ elif y.dtype == 'int8':

+ y_out = y.astype('int16')

+ y_out = (y_out[0] + y_out[1]) // 2

+ y_out = np.clip(y_out, np.iinfo(y.dtype).min,

+ np.iinfo(y.dtype).max).astype(y.dtype)

+ else:

+ raise ParameterError(f'Unsupported dtype: {y.dtype}')

+ return y_out

+

+

+def soundfile_load_(file: os.PathLike,

+ offset: Optional[float]=None,

+ dtype: str='int16',

+ duration: Optional[int]=None) -> Tuple[np.ndarray, int]:

+ """Load audio using soundfile library. This function load audio file using libsndfile.

+

+ Args:

+ file (os.PathLike): File of waveform.

+ offset (Optional[float], optional): Offset to the start of waveform. Defaults to None.

+ dtype (str, optional): Data type of waveform. Defaults to 'int16'.

+ duration (Optional[int], optional): Duration of waveform to read. Defaults to None.

+

+ Returns:

+ Tuple[np.ndarray, int]: Waveform in ndarray and its samplerate.

+ """

+ with soundfile.SoundFile(file) as sf_desc:

+ sr_native = sf_desc.samplerate

+ if offset:

+ sf_desc.seek(int(offset * sr_native))

+ if duration is not None:

+ frame_duration = int(duration * sr_native)

+ else:

+ frame_duration = -1

+ y = sf_desc.read(frames=frame_duration, dtype=dtype, always_2d=False).T

+

+ return y, sf_desc.samplerate

+

+

+def normalize(y: np.ndarray, norm_type: str='linear',

+ mul_factor: float=1.0) -> np.ndarray:

+ """Normalize an input audio with additional multiplier.

+

+ Args:

+ y (np.ndarray): Input waveform array in 1D or 2D.

+ norm_type (str, optional): Type of normalization. Defaults to 'linear'.

+ mul_factor (float, optional): Scaling factor. Defaults to 1.0.

+

+ Returns:

+ np.ndarray: `y` after normalization.

+ """

+

+ if norm_type == 'linear':

+ amax = np.max(np.abs(y))

+ factor = 1.0 / (amax + EPS)

+ y = y * factor * mul_factor

+ elif norm_type == 'gaussian':

+ amean = np.mean(y)

+ astd = np.std(y)

+ astd = max(astd, EPS)

+ y = mul_factor * (y - amean) / astd

+ else:

+ raise NotImplementedError(f'norm_type should be in {NORMALMIZE_TYPES}')

+

+ return y

+

+

+def soundfile_save(y: np.ndarray, sr: int, file: os.PathLike) -> None:

+ """Save audio file to disk. This function saves audio to disk using scipy.io.wavfile, with additional step to convert input waveform to int16.

+

+ Args:

+ y (np.ndarray): Input waveform array in 1D or 2D.

+ sr (int): Sample rate.

+ file (os.PathLike): Path of audio file to save.

+ """

+ if not file.endswith('.wav'):

+ raise ParameterError(

+ f'only .wav file supported, but dst file name is: {file}')

+

+ if sr <= 0:

+ raise ParameterError(

+ f'Sample rate should be larger than 0, received sr = {sr}')

+

+ if y.dtype not in ['int16', 'int8']:

+ warnings.warn(

+ f'input data type is {y.dtype}, will convert data to int16 format before saving'

+ )

+ y_out = depth_convert(y, 'int16')

+ else:

+ y_out = y

+

+ wavfile.write(file, sr, y_out)

+

+

+def soundfile_load(

+ file: os.PathLike,

+ sr: Optional[int]=None,

+ mono: bool=True,

+ merge_type: str='average', # ch0,ch1,random,average

+ normal: bool=True,

+ norm_type: str='linear',

+ norm_mul_factor: float=1.0,

+ offset: float=0.0,

+ duration: Optional[int]=None,

+ dtype: str='float32',

+ resample_mode: str='kaiser_fast') -> Tuple[np.ndarray, int]:

+ """Load audio file from disk. This function loads audio from disk using using audio backend.

+

+ Args:

+ file (os.PathLike): Path of audio file to load.

+ sr (Optional[int], optional): Sample rate of loaded waveform. Defaults to None.

+ mono (bool, optional): Return waveform with mono channel. Defaults to True.

+ merge_type (str, optional): Merge type of multi-channels waveform. Defaults to 'average'.

+ normal (bool, optional): Waveform normalization. Defaults to True.

+ norm_type (str, optional): Type of normalization. Defaults to 'linear'.

+ norm_mul_factor (float, optional): Scaling factor. Defaults to 1.0.

+ offset (float, optional): Offset to the start of waveform. Defaults to 0.0.

+ duration (Optional[int], optional): Duration of waveform to read. Defaults to None.

+ dtype (str, optional): Data type of waveform. Defaults to 'float32'.

+ resample_mode (str, optional): The resampling filter to use. Defaults to 'kaiser_fast'.

+

+ Returns:

+ Tuple[np.ndarray, int]: Waveform in ndarray and its samplerate.

+ """

+

+ y, r = soundfile_load_(file, offset=offset, dtype=dtype, duration=duration)

+

+ if not ((y.ndim == 1 and len(y) > 0) or (y.ndim == 2 and len(y[0]) > 0)):

+ raise ParameterError(f'audio file {file} looks empty')

+

+ if mono:

+ y = to_mono(y, merge_type)

+

+ if sr is not None and sr != r:

+ y = resample(y, r, sr, mode=resample_mode)

+ r = sr

+

+ if normal:

+ y = normalize(y, norm_type, norm_mul_factor)

+ elif dtype in ['int8', 'int16']:

+ # still need to do normalization, before depth conversion

+ y = normalize(y, 'linear', 1.0)

+

+ y = depth_convert(y, dtype)

+ return y, r

+

+

+#The code below is taken from: https://github.com/pytorch/audio/blob/main/torchaudio/backend/soundfile_backend.py, with some modifications.

+

+

+def _get_subtype_for_wav(dtype: paddle.dtype,

+ encoding: str,

+ bits_per_sample: int):

+ if not encoding:

+ if not bits_per_sample:

+ subtype = {

+ paddle.uint8: "PCM_U8",

+ paddle.int16: "PCM_16",

+ paddle.int32: "PCM_32",

+ paddle.float32: "FLOAT",

+ paddle.float64: "DOUBLE",

+ }.get(dtype)

+ if not subtype:

+ raise ValueError(f"Unsupported dtype for wav: {dtype}")

+ return subtype

+ if bits_per_sample == 8:

+ return "PCM_U8"

+ return f"PCM_{bits_per_sample}"

+ if encoding == "PCM_S":

+ if not bits_per_sample:

+ return "PCM_32"

+ if bits_per_sample == 8:

+ raise ValueError("wav does not support 8-bit signed PCM encoding.")

+ return f"PCM_{bits_per_sample}"

+ if encoding == "PCM_U":

+ if bits_per_sample in (None, 8):

+ return "PCM_U8"

+ raise ValueError("wav only supports 8-bit unsigned PCM encoding.")

+ if encoding == "PCM_F":

+ if bits_per_sample in (None, 32):

+ return "FLOAT"

+ if bits_per_sample == 64:

+ return "DOUBLE"

+ raise ValueError("wav only supports 32/64-bit float PCM encoding.")

+ if encoding == "ULAW":

+ if bits_per_sample in (None, 8):

+ return "ULAW"

+ raise ValueError("wav only supports 8-bit mu-law encoding.")

+ if encoding == "ALAW":

+ if bits_per_sample in (None, 8):

+ return "ALAW"

+ raise ValueError("wav only supports 8-bit a-law encoding.")

+ raise ValueError(f"wav does not support {encoding}.")

+

+

+def _get_subtype_for_sphere(encoding: str, bits_per_sample: int):

+ if encoding in (None, "PCM_S"):

+ return f"PCM_{bits_per_sample}" if bits_per_sample else "PCM_32"

+ if encoding in ("PCM_U", "PCM_F"):

+ raise ValueError(f"sph does not support {encoding} encoding.")

+ if encoding == "ULAW":

+ if bits_per_sample in (None, 8):

+ return "ULAW"

+ raise ValueError("sph only supports 8-bit for mu-law encoding.")

+ if encoding == "ALAW":

+ return "ALAW"

+ raise ValueError(f"sph does not support {encoding}.")

+

+

+def _get_subtype(dtype: paddle.dtype,

+ format: str,

+ encoding: str,

+ bits_per_sample: int):

+ if format == "wav":

+ return _get_subtype_for_wav(dtype, encoding, bits_per_sample)

+ if format == "flac":

+ if encoding:

+ raise ValueError("flac does not support encoding.")

+ if not bits_per_sample:

+ return "PCM_16"

+ if bits_per_sample > 24:

+ raise ValueError("flac does not support bits_per_sample > 24.")

+ return "PCM_S8" if bits_per_sample == 8 else f"PCM_{bits_per_sample}"

+ if format in ("ogg", "vorbis"):

+ if encoding or bits_per_sample:

+ raise ValueError(

+ "ogg/vorbis does not support encoding/bits_per_sample.")

+ return "VORBIS"

+ if format == "sph":

+ return _get_subtype_for_sphere(encoding, bits_per_sample)

+ if format in ("nis", "nist"):

+ return "PCM_16"

+ raise ValueError(f"Unsupported format: {format}")

+

+

+def save(

+ filepath: str,

+ src: paddle.Tensor,

+ sample_rate: int,

+ channels_first: bool=True,

+ compression: Optional[float]=None,

+ format: Optional[str]=None,

+ encoding: Optional[str]=None,

+ bits_per_sample: Optional[int]=None, ):

+ """Save audio data to file.

+

+ Note:

+ The formats this function can handle depend on the soundfile installation.

+ This function is tested on the following formats;

+

+ * WAV

+

+ * 32-bit floating-point

+ * 32-bit signed integer

+ * 16-bit signed integer

+ * 8-bit unsigned integer

+

+ * FLAC

+ * OGG/VORBIS

+ * SPHERE

+

+ Note:

+ ``filepath`` argument is intentionally annotated as ``str`` only, even though it accepts

+ ``pathlib.Path`` object as well. This is for the consistency with ``"sox_io"`` backend,

+

+ Args:

+ filepath (str or pathlib.Path): Path to audio file.

+ src (paddle.Tensor): Audio data to save. must be 2D tensor.

+ sample_rate (int): sampling rate

+ channels_first (bool, optional): If ``True``, the given tensor is interpreted as `[channel, time]`,

+ otherwise `[time, channel]`.

+ compression (float of None, optional): Not used.

+ It is here only for interface compatibility reason with "sox_io" backend.

+ format (str or None, optional): Override the audio format.

+ When ``filepath`` argument is path-like object, audio format is

+ inferred from file extension. If the file extension is missing or

+ different, you can specify the correct format with this argument.

+

+ When ``filepath`` argument is file-like object,

+ this argument is required.

+

+ Valid values are ``"wav"``, ``"ogg"``, ``"vorbis"``,

+ ``"flac"`` and ``"sph"``.

+ encoding (str or None, optional): Changes the encoding for supported formats.

+ This argument is effective only for supported formats, such as

+ ``"wav"``, ``""flac"`` and ``"sph"``. Valid values are:

+

+ - ``"PCM_S"`` (signed integer Linear PCM)

+ - ``"PCM_U"`` (unsigned integer Linear PCM)

+ - ``"PCM_F"`` (floating point PCM)

+ - ``"ULAW"`` (mu-law)

+ - ``"ALAW"`` (a-law)

+

+ bits_per_sample (int or None, optional): Changes the bit depth for the

+ supported formats.

+ When ``format`` is one of ``"wav"``, ``"flac"`` or ``"sph"``,

+ you can change the bit depth.

+ Valid values are ``8``, ``16``, ``24``, ``32`` and ``64``.

+

+ Supported formats/encodings/bit depth/compression are:

+

+ ``"wav"``

+ - 32-bit floating-point PCM

+ - 32-bit signed integer PCM

+ - 24-bit signed integer PCM

+ - 16-bit signed integer PCM

+ - 8-bit unsigned integer PCM

+ - 8-bit mu-law

+ - 8-bit a-law

+

+ Note:

+ Default encoding/bit depth is determined by the dtype of

+ the input Tensor.

+

+ ``"flac"``

+ - 8-bit

+ - 16-bit (default)

+ - 24-bit

+

+ ``"ogg"``, ``"vorbis"``

+ - Doesn't accept changing configuration.

+

+ ``"sph"``

+ - 8-bit signed integer PCM

+ - 16-bit signed integer PCM

+ - 24-bit signed integer PCM

+ - 32-bit signed integer PCM (default)

+ - 8-bit mu-law

+ - 8-bit a-law

+ - 16-bit a-law

+ - 24-bit a-law

+ - 32-bit a-law

+

+ """

+ if src.ndim != 2:

+ raise ValueError(f"Expected 2D Tensor, got {src.ndim}D.")

+ if compression is not None:

+ warnings.warn(

+ '`save` function of "soundfile" backend does not support "compression" parameter. '

+ "The argument is silently ignored.")

+ if hasattr(filepath, "write"):

+ if format is None:

+ raise RuntimeError(

+ "`format` is required when saving to file object.")

+ ext = format.lower()

+ else:

+ ext = str(filepath).split(".")[-1].lower()

+

+ if bits_per_sample not in (None, 8, 16, 24, 32, 64):

+ raise ValueError("Invalid bits_per_sample.")

+ if bits_per_sample == 24:

+ warnings.warn(

+ "Saving audio with 24 bits per sample might warp samples near -1. "

+ "Using 16 bits per sample might be able to avoid this.")

+ subtype = _get_subtype(src.dtype, ext, encoding, bits_per_sample)

+

+ # sph is a extension used in TED-LIUM but soundfile does not recognize it as NIST format,

+ # so we extend the extensions manually here

+ if ext in ["nis", "nist", "sph"] and format is None:

+ format = "NIST"

+

+ if channels_first:

+ src = src.t()

+

+ soundfile.write(

+ file=filepath,

+ data=src,

+ samplerate=sample_rate,

+ subtype=subtype,

+ format=format)

+

+

+_SUBTYPE2DTYPE = {

+ "PCM_S8": "int8",

+ "PCM_U8": "uint8",

+ "PCM_16": "int16",

+ "PCM_32": "int32",

+ "FLOAT": "float32",

+ "DOUBLE": "float64",

+}

+

+

+def load(

+ filepath: str,

+ frame_offset: int=0,

+ num_frames: int=-1,

+ normalize: bool=True,

+ channels_first: bool=True,

+ format: Optional[str]=None, ) -> Tuple[paddle.Tensor, int]:

+ """Load audio data from file.

+

+ Note:

+ The formats this function can handle depend on the soundfile installation.

+ This function is tested on the following formats;

+

+ * WAV

+

+ * 32-bit floating-point

+ * 32-bit signed integer

+ * 16-bit signed integer

+ * 8-bit unsigned integer

+

+ * FLAC

+ * OGG/VORBIS

+ * SPHERE

+

+ By default (``normalize=True``, ``channels_first=True``), this function returns Tensor with

+ ``float32`` dtype and the shape of `[channel, time]`.

+ The samples are normalized to fit in the range of ``[-1.0, 1.0]``.

+

+ When the input format is WAV with integer type, such as 32-bit signed integer, 16-bit

+ signed integer and 8-bit unsigned integer (24-bit signed integer is not supported),

+ by providing ``normalize=False``, this function can return integer Tensor, where the samples

+ are expressed within the whole range of the corresponding dtype, that is, ``int32`` tensor

+ for 32-bit signed PCM, ``int16`` for 16-bit signed PCM and ``uint8`` for 8-bit unsigned PCM.

+

+ ``normalize`` parameter has no effect on 32-bit floating-point WAV and other formats, such as

+ ``flac`` and ``mp3``.

+ For these formats, this function always returns ``float32`` Tensor with values normalized to

+ ``[-1.0, 1.0]``.

+

+ Note:

+ ``filepath`` argument is intentionally annotated as ``str`` only, even though it accepts

+ ``pathlib.Path`` object as well. This is for the consistency with ``"sox_io"`` backend.

+

+ Args:

+ filepath (path-like object or file-like object):

+ Source of audio data.

+ frame_offset (int, optional):

+ Number of frames to skip before start reading data.

+ num_frames (int, optional):

+ Maximum number of frames to read. ``-1`` reads all the remaining samples,

+ starting from ``frame_offset``.

+ This function may return the less number of frames if there is not enough

+ frames in the given file.

+ normalize (bool, optional):

+ When ``True``, this function always return ``float32``, and sample values are

+ normalized to ``[-1.0, 1.0]``.

+ If input file is integer WAV, giving ``False`` will change the resulting Tensor type to

+ integer type.

+ This argument has no effect for formats other than integer WAV type.

+ channels_first (bool, optional):

+ When True, the returned Tensor has dimension `[channel, time]`.

+ Otherwise, the returned Tensor's dimension is `[time, channel]`.

+ format (str or None, optional):

+ Not used. PySoundFile does not accept format hint.

+

+ Returns:

+ (paddle.Tensor, int): Resulting Tensor and sample rate.

+ If the input file has integer wav format and normalization is off, then it has

+ integer type, else ``float32`` type. If ``channels_first=True``, it has

+ `[channel, time]` else `[time, channel]`.

+ """

+ with soundfile.SoundFile(filepath, "r") as file_:

+ if file_.format != "WAV" or normalize:

+ dtype = "float32"

+ elif file_.subtype not in _SUBTYPE2DTYPE:

+ raise ValueError(f"Unsupported subtype: {file_.subtype}")

+ else:

+ dtype = _SUBTYPE2DTYPE[file_.subtype]

+

+ frames = file_._prepare_read(frame_offset, None, num_frames)

+ waveform = file_.read(frames, dtype, always_2d=True)

+ sample_rate = file_.samplerate

+

+ waveform = paddle.to_tensor(waveform)

+ if channels_first:

+ waveform = paddle.transpose(waveform, perm=[1, 0])

+ return waveform, sample_rate

+

+

+# Mapping from soundfile subtype to number of bits per sample.

+# This is mostly heuristical and the value is set to 0 when it is irrelevant

+# (lossy formats) or when it can't be inferred.

+# For ADPCM (and G72X) subtypes, it's hard to infer the bit depth because it's not part of the standard:

+# According to https://en.wikipedia.org/wiki/Adaptive_differential_pulse-code_modulation#In_telephony,

+# the default seems to be 8 bits but it can be compressed further to 4 bits.

+# The dict is inspired from

+# https://github.com/bastibe/python-soundfile/blob/744efb4b01abc72498a96b09115b42a4cabd85e4/soundfile.py#L66-L94

+_SUBTYPE_TO_BITS_PER_SAMPLE = {

+ "PCM_S8": 8, # Signed 8 bit data

+ "PCM_16": 16, # Signed 16 bit data

+ "PCM_24": 24, # Signed 24 bit data

+ "PCM_32": 32, # Signed 32 bit data

+ "PCM_U8": 8, # Unsigned 8 bit data (WAV and RAW only)

+ "FLOAT": 32, # 32 bit float data

+ "DOUBLE": 64, # 64 bit float data

+ "ULAW": 8, # U-Law encoded. See https://en.wikipedia.org/wiki/G.711#Types

+ "ALAW": 8, # A-Law encoded. See https://en.wikipedia.org/wiki/G.711#Types

+ "IMA_ADPCM": 0, # IMA ADPCM.

+ "MS_ADPCM": 0, # Microsoft ADPCM.

+ "GSM610":

+ 0, # GSM 6.10 encoding. (Wikipedia says 1.625 bit depth?? https://en.wikipedia.org/wiki/Full_Rate)

+ "VOX_ADPCM": 0, # OKI / Dialogix ADPCM

+ "G721_32": 0, # 32kbs G721 ADPCM encoding.

+ "G723_24": 0, # 24kbs G723 ADPCM encoding.

+ "G723_40": 0, # 40kbs G723 ADPCM encoding.

+ "DWVW_12": 12, # 12 bit Delta Width Variable Word encoding.

+ "DWVW_16": 16, # 16 bit Delta Width Variable Word encoding.

+ "DWVW_24": 24, # 24 bit Delta Width Variable Word encoding.

+ "DWVW_N": 0, # N bit Delta Width Variable Word encoding.

+ "DPCM_8": 8, # 8 bit differential PCM (XI only)

+ "DPCM_16": 16, # 16 bit differential PCM (XI only)

+ "VORBIS": 0, # Xiph Vorbis encoding. (lossy)

+ "ALAC_16": 16, # Apple Lossless Audio Codec (16 bit).

+ "ALAC_20": 20, # Apple Lossless Audio Codec (20 bit).

+ "ALAC_24": 24, # Apple Lossless Audio Codec (24 bit).

+ "ALAC_32": 32, # Apple Lossless Audio Codec (32 bit).

+}

+

+

+def _get_bit_depth(subtype):

+ if subtype not in _SUBTYPE_TO_BITS_PER_SAMPLE:

+ warnings.warn(

+ f"The {subtype} subtype is unknown to PaddleAudio. As a result, the bits_per_sample "

+ "attribute will be set to 0. If you are seeing this warning, please "

+ "report by opening an issue on github (after checking for existing/closed ones). "

+ "You may otherwise ignore this warning.")

+ return _SUBTYPE_TO_BITS_PER_SAMPLE.get(subtype, 0)

+

+

+_SUBTYPE_TO_ENCODING = {

+ "PCM_S8": "PCM_S",

+ "PCM_16": "PCM_S",

+ "PCM_24": "PCM_S",

+ "PCM_32": "PCM_S",

+ "PCM_U8": "PCM_U",

+ "FLOAT": "PCM_F",

+ "DOUBLE": "PCM_F",

+ "ULAW": "ULAW",

+ "ALAW": "ALAW",

+ "VORBIS": "VORBIS",

+}

+

+

+def _get_encoding(format: str, subtype: str):

+ if format == "FLAC":

+ return "FLAC"

+ return _SUBTYPE_TO_ENCODING.get(subtype, "UNKNOWN")

+

+

+def info(filepath: str, format: Optional[str]=None) -> AudioInfo:

+ """Get signal information of an audio file.

+

+ Note:

+ ``filepath`` argument is intentionally annotated as ``str`` only, even though it accepts

+ ``pathlib.Path`` object as well. This is for the consistency with ``"sox_io"`` backend,

+

+ Args:

+ filepath (path-like object or file-like object):

+ Source of audio data.

+ format (str or None, optional):

+ Not used. PySoundFile does not accept format hint.

+

+ Returns:

+ AudioInfo: meta data of the given audio.

+

+ """

+ sinfo = soundfile.info(filepath)

+ return AudioInfo(

+ sinfo.samplerate,

+ sinfo.frames,

+ sinfo.channels,

+ bits_per_sample=_get_bit_depth(sinfo.subtype),

+ encoding=_get_encoding(sinfo.format, sinfo.subtype), )

diff --git a/paddlespeech/audio/compliance/__init__.py b/paddlespeech/audio/compliance/__init__.py

new file mode 100644

index 000000000..c08f9ab11

--- /dev/null

+++ b/paddlespeech/audio/compliance/__init__.py

@@ -0,0 +1,15 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+from . import kaldi

+from . import librosa

diff --git a/paddlespeech/audio/compliance/kaldi.py b/paddlespeech/audio/compliance/kaldi.py

new file mode 100644

index 000000000..a94ec4053

--- /dev/null

+++ b/paddlespeech/audio/compliance/kaldi.py

@@ -0,0 +1,643 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+# Modified from torchaudio(https://github.com/pytorch/audio)

+import math

+from typing import Tuple

+

+import paddle

+from paddle import Tensor

+

+from ..functional import create_dct

+from ..functional.window import get_window

+

+__all__ = [

+ 'spectrogram',

+ 'fbank',

+ 'mfcc',

+]

+

+# window types

+HANNING = 'hann'

+HAMMING = 'hamming'

+POVEY = 'povey'

+RECTANGULAR = 'rect'

+BLACKMAN = 'blackman'

+

+

+def _get_epsilon(dtype):

+ return paddle.to_tensor(1e-07, dtype=dtype)

+

+

+def _next_power_of_2(x: int) -> int:

+ return 1 if x == 0 else 2**(x - 1).bit_length()

+

+

+def _get_strided(waveform: Tensor,

+ window_size: int,

+ window_shift: int,

+ snip_edges: bool) -> Tensor:

+ assert waveform.dim() == 1

+ num_samples = waveform.shape[0]

+

+ if snip_edges:

+ if num_samples < window_size:

+ return paddle.empty((0, 0), dtype=waveform.dtype)

+ else:

+ m = 1 + (num_samples - window_size) // window_shift

+ else:

+ reversed_waveform = paddle.flip(waveform, [0])

+ m = (num_samples + (window_shift // 2)) // window_shift

+ pad = window_size // 2 - window_shift // 2

+ pad_right = reversed_waveform

+ if pad > 0:

+ pad_left = reversed_waveform[-pad:]

+ waveform = paddle.concat((pad_left, waveform, pad_right), axis=0)

+ else:

+ waveform = paddle.concat((waveform[-pad:], pad_right), axis=0)

+

+ return paddle.signal.frame(waveform, window_size, window_shift)[:, :m].T

+

+

+def _feature_window_function(

+ window_type: str,

+ window_size: int,

+ blackman_coeff: float,

+ dtype: int, ) -> Tensor:

+ if window_type == "hann":

+ return get_window('hann', window_size, fftbins=False, dtype=dtype)

+ elif window_type == "hamming":

+ return get_window('hamming', window_size, fftbins=False, dtype=dtype)

+ elif window_type == "povey":

+ return get_window(

+ 'hann', window_size, fftbins=False, dtype=dtype).pow(0.85)

+ elif window_type == "rect":

+ return paddle.ones([window_size], dtype=dtype)

+ elif window_type == "blackman":

+ a = 2 * math.pi / (window_size - 1)

+ window_function = paddle.arange(window_size, dtype=dtype)

+ return (blackman_coeff - 0.5 * paddle.cos(a * window_function) +

+ (0.5 - blackman_coeff) * paddle.cos(2 * a * window_function)

+ ).astype(dtype)

+ else:

+ raise Exception('Invalid window type ' + window_type)

+

+

+def _get_log_energy(strided_input: Tensor, epsilon: Tensor,

+ energy_floor: float) -> Tensor:

+ log_energy = paddle.maximum(strided_input.pow(2).sum(1), epsilon).log()

+ if energy_floor == 0.0:

+ return log_energy

+ return paddle.maximum(

+ log_energy,

+ paddle.to_tensor(math.log(energy_floor), dtype=strided_input.dtype))

+

+