diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index f72b44ac6..44bbd5cad 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -26,12 +26,12 @@ repos:

- --no-sort-keys

- --autofix

- id: check-merge-conflict

- - id: flake8

- aergs:

- - --ignore=E501,E228,E226,E261,E266,E128,E402,W503

- - --builtins=G,request

- - --jobs=1

- exclude: (?=runtime/engine/kaldi|audio/paddleaudio/src|third_party).*(\.cpp|\.cc|\.h\.hpp|\.py)$

+ # - id: flake8

+ # args:

+ # - --ignore=E501,E228,E226,E261,E266,E128,E402,W503

+ # - --builtins=G,request

+ # - --jobs=1

+ # exclude: (?=runtime/engine/kaldi|audio/paddleaudio/src|third_party).*(\.cpp|\.cc|\.h\.hpp|\.py)$

- repo : https://github.com/Lucas-C/pre-commit-hooks

rev: v1.0.1

diff --git a/README.md b/README.md

index c6e9fc209..19ec61cb0 100644

--- a/README.md

+++ b/README.md

@@ -227,13 +227,13 @@ Via the easy-to-use, efficient, flexible and scalable implementation, our vision

## Installation

-We strongly recommend our users to install PaddleSpeech in **Linux** with *python>=3.7* and *paddlepaddle>=2.4.1*.

+We strongly recommend that users install PaddleSpeech on **Linux** with *python>=3.8* and *paddlepaddle<=2.5.1*. PaddleSpeech has not yet been adapted to some newer Paddle releases, so only versions 2.5.1 and earlier are currently supported.

### **Dependency Introduction**

+ gcc >= 4.8.5

-+ paddlepaddle >= 2.4.1

-+ python >= 3.7

++ paddlepaddle <= 2.5.1

++ python >= 3.8

+ OS support: Linux(recommend), Windows, Mac OSX

PaddleSpeech depends on paddlepaddle. For installation, please refer to the official website of [paddlepaddle](https://www.paddlepaddle.org.cn/en) and choose according to your own machine. Here is an example of the cpu version.

@@ -893,10 +893,6 @@ The Text-to-Speech module is originally called [Parakeet](https://github.com/Pad

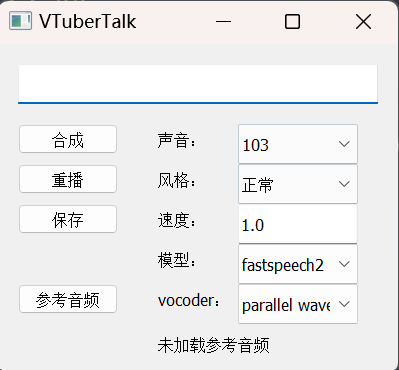

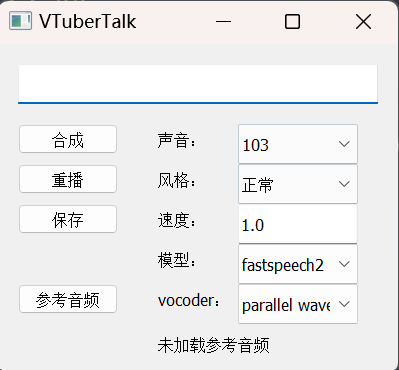

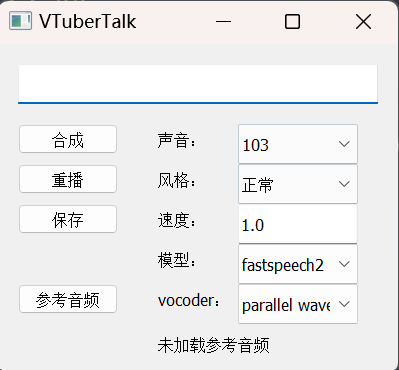

- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

-

-

-

-

-  +

+

@@ -237,12 +237,12 @@

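## Installation

-We strongly recommend that users install PaddleSpeech on **Linux** with *python* 3.7 or later.

+We strongly recommend that users install PaddleSpeech on **Linux** with *python* 3.8 or later. Some newer Paddle releases have not yet been adapted in PaddleSpeech, so only versions 2.5.1 and earlier can currently be used.

### Dependencies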

+ gcc >= 4.8.5

-+ paddlepaddle >= 2.4.1

-+ python >= 3.7

++ paddlepaddle <= 2.5.1

++ python >= 3.8

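+ linux (recommended), mac, windows

PaddleSpeech depends on paddlepaddle. For installation, please refer to the [paddlepaddle official website](https://www.paddlepaddle.org.cn/) and choose according to your own machine. A cpu example is given here; other versions can be installed according to your own machine.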
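The updated requirements (python>=3.8, paddlepaddle<=2.5.1) are easy to violate silently when a newer Paddle wheel is already installed. A minimal pre-install check, sketched under the assumption that version strings look like `X.Y.Z` with optional suffixes such as `.post117`; it uses a simplified parser instead of the `packaging` library, and `check_requirements` is a hypothetical helper, not part of PaddleSpeech:

```python
import re


def parse_version(text: str) -> tuple:
    """Turn '2.5.1' or '2.5.1.post117' into (2, 5, 1); non-numeric trailing parts are dropped."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at the first purely non-numeric component, e.g. 'post117'
        parts.append(int(match.group()))
    return tuple(parts)


def check_requirements(python_version: str, paddle_version: str) -> list:
    """Return a list of problems; an empty list means both constraints hold."""
    problems = []
    if parse_version(python_version)[:2] < (3, 8):
        problems.append(f"python {python_version} < 3.8")
    if parse_version(paddle_version) > (2, 5, 1):
        problems.append(f"paddlepaddle {paddle_version} > 2.5.1")
    return problems
```

In an environment where Paddle is installed, one could call `check_requirements(platform.python_version(), paddle.__version__)`; an empty list means the installation matches the recommended versions.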

diff --git a/audio/setup.py b/audio/setup.py

index 0fe6e5995..f7d459446 100644

--- a/audio/setup.py

+++ b/audio/setup.py

@@ -38,8 +38,10 @@ VERSION = '1.2.0'

COMMITID = 'none'

base = [

+ # paddleaudio aligns with librosa==0.8.1, which needs numpy==1.23.x

+ "librosa==0.8.1",

+ "numpy==1.23.5",

"kaldiio",

- "librosa>=0.10.0",

"pathos",

"pybind11",

"parameterized",

diff --git a/demos/speech_web/speech_server/src/ge2e_clone.py b/demos/speech_web/speech_server/src/ge2e_clone.py

index 83c2b3f35..0711a40af 100644

--- a/demos/speech_web/speech_server/src/ge2e_clone.py

+++ b/demos/speech_web/speech_server/src/ge2e_clone.py

@@ -38,23 +38,9 @@ class VoiceCloneGE2E():

output_dir = os.path.dirname(out_wav)

ngpu = get_ngpu()

- cmd = f"""

- python3 {self.BIN_DIR}/voice_cloning.py \

- --am={self.am} \

- --am_config={self.am_config} \

- --am_ckpt={self.am_ckpt} \

- --am_stat={self.am_stat} \

- --voc={self.voc} \

- --voc_config={self.voc_config} \

- --voc_ckpt={self.voc_ckpt} \

- --voc_stat={self.voc_stat} \

- --ge2e_params_path={self.ge2e_params_path} \

- --text="{text}" \

- --input-dir={ref_audio_dir} \

- --output-dir={output_dir} \

- --phones-dict={self.phones_dict} \

- --ngpu={ngpu}

- """

+ cmd = f"""python {self.BIN_DIR}/voice_cloning.py --am={self.am} --am_config={self.am_config} --am_ckpt={self.am_ckpt} --am_stat={self.am_stat} --voc={self.voc} --voc_config={self.voc_config} --voc_ckpt={self.voc_ckpt} --voc_stat={self.voc_stat} --ge2e_params_path={self.ge2e_params_path} --text="{text}" --input-dir={ref_audio_dir} --output-dir={output_dir} --phones-dict={self.phones_dict} --ngpu={ngpu}"""

+

+ print(cmd)

output_name = os.path.join(output_dir, full_file_name)

return run_cmd(cmd, output_name=output_name)
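The new single-line `cmd` interpolates `text` straight into a shell string, so a double quote inside the input text would break the command. A minimal sketch of a safer way to build the same command, assuming the string is eventually executed by a shell (the signature of `run_cmd` is not shown in this diff): collect the arguments in a list and quote them with `shlex.join` (Python 3.8+). `build_clone_cmd` and the example values are hypothetical.

```python
import shlex


def build_clone_cmd(bin_dir: str, options: dict, text: str) -> str:
    """Build the voice_cloning.py command line with every argument shell-quoted.

    `options` maps flag names without the leading '--' (e.g. "am", "input-dir")
    to their values; `text` is passed last as --text.
    """
    argv = ["python", f"{bin_dir}/voice_cloning.py"]
    for flag, value in options.items():
        argv.append(f"--{flag}={value}")
    # shlex.join quotes only the arguments that need it, so spaces and quotes in text survive
    argv.append(f"--text={text}")
    return shlex.join(argv)
```

Inside `VoiceCloneGE2E` this would be called roughly as `build_clone_cmd(self.BIN_DIR, {"am": self.am, ..., "ngpu": ngpu}, text)`, keeping the flag set identical to the original command.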

diff --git a/docs/source/install.md b/docs/source/install.md

index a4dae3640..3607d7185 100644

--- a/docs/source/install.md

+++ b/docs/source/install.md

@@ -95,7 +95,7 @@ bash

```

Then you can create a conda virtual environment using the following command:

```bash

-conda create -y -p tools/venv python=3.7

+conda create -y -p tools/venv python=3.8

```

Activate the conda virtual environment:

```bash

@@ -181,7 +181,7 @@ $HOME/miniconda3/bin/conda init

# use the "bash" command to make the conda environment works

bash

# create a conda virtual environment

-conda create -y -p tools/venv python=3.7

+conda create -y -p tools/venv python=3.8

# Activate the conda virtual environment:

conda activate tools/venv

# Install the conda packages

diff --git a/docs/source/install_cn.md b/docs/source/install_cn.md

index 7f05cdfe4..01ae21fe7 100644

--- a/docs/source/install_cn.md

+++ b/docs/source/install_cn.md

@@ -91,7 +91,7 @@ bash

```

Then you can create a conda virtual environment:

```bash

-conda create -y -p tools/venv python=3.7

+conda create -y -p tools/venv python=3.8

```

Activate the conda virtual environment:

```bash

@@ -173,7 +173,7 @@ $HOME/miniconda3/bin/conda init

# activate conda

bash

# create a Conda virtual environment

-conda create -y -p tools/venv python=3.7

+conda create -y -p tools/venv python=3.8

# Activate the Conda virtual environment:

conda activate tools/venv

# Install the Conda packages

diff --git a/docs/topic/package_release/python_package_release.md b/docs/topic/package_release/python_package_release.md

index cb1029e7b..c735e0bd8 100644

--- a/docs/topic/package_release/python_package_release.md

+++ b/docs/topic/package_release/python_package_release.md

@@ -165,8 +165,7 @@ docker run -it xxxxxx

Set up python:

```bash

-export PATH="/opt/python/cp37-cp37m/bin/:$PATH"

-#export PATH="/opt/python/cp38-cp38/bin/:$PATH"

+export PATH="/opt/python/cp38-cp38/bin/:$PATH"

#export PATH="/opt/python/cp39-cp39/bin/:$PATH"

```

diff --git a/examples/aishell/asr1/RESULTS.md b/examples/aishell/asr1/RESULTS.md

index 643d0e224..be771ba59 100644

--- a/examples/aishell/asr1/RESULTS.md

+++ b/examples/aishell/asr1/RESULTS.md

@@ -1,14 +1,31 @@

# Aishell

-## Conformer

-paddle version: 2.2.2

-paddlespeech version: 1.0.1

-| Model | Params | Config | Augmentation| Test set | Decode method | Loss | CER |

-| --- | --- | --- | --- | --- | --- | --- | --- |

-| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | attention | - | 0.0522 |

-| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | ctc_greedy_search | - | 0.0481 |

-| conformer | 47.07M | conf/conformer.yaml | spec_aug| test | ctc_prefix_beam_search | - | 0.0480 |

-| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | attention_rescoring | - | 0.0460 |

+## RoFormer Streaming

+paddle version: 2.5.0

+paddlespeech version: 1.5.0

+

+Tesla V100-SXM2-32GB: 1 node, 4 cards

+Global BatchSize: 32 * 4

+Training Done: 1 day, 12:56:39.639646

+### `decoding.decoding_chunk_size=16`

+

+> chunk_size=16, ((16 - 1) * 4 + 7) * 10ms = (16 * 4 + 3) * 10ms = 670ms

+

+| Model | Params | Config | Augmentation| Test set | Decode method | Chunk Size & Left Chunks | Loss | CER |

+| --- | --- | --- | --- | --- | --- | --- | --- | --- |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | attention | 16, -1 | - | 5.63 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | ctc_greedy_search | 16, -1 | - | 6.13 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | ctc_prefix_beam_search | 16, -1 | - | 6.13 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | attention_rescoring | 16, -1 | - | 5.44 |

+

+### `decoding.decoding_chunk_size=-1`

+

+| Model | Params | Config | Augmentation| Test set | Decode method | Chunk Size & Left Chunks | Loss | CER |

+| --- | --- | --- | --- | --- | --- | --- | --- | --- |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | attention | -1, -1 | - | 5.39 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | ctc_greedy_search | -1, -1 | - | 5.51 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | ctc_prefix_beam_search | -1, -1 | - | 5.51 |

+| roformer | 44.80M | conf/chunk_roformer.yaml | spec_aug | test | attention_rescoring | -1, -1 | - | 4.99 |

## Conformer Streaming

@@ -24,6 +41,17 @@ Need set `decoding.decoding_chunk_size=16` when decoding.

| conformer | 47.06M | conf/chunk_conformer.yaml | spec_aug | test | attention_rescoring | 16, -1 | - | 0.051968 |

+## Conformer

+paddle version: 2.2.2

+paddlespeech version: 1.0.1

+| Model | Params | Config | Augmentation| Test set | Decode method | Loss | CER |

+| --- | --- | --- | --- | --- | --- | --- | --- |

+| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | attention | - | 0.0522 |

+| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | ctc_greedy_search | - | 0.0481 |

+| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | ctc_prefix_beam_search | - | 0.0480 |

+| conformer | 47.07M | conf/conformer.yaml | spec_aug | test | attention_rescoring | - | 0.0460 |

+

+

## Transformer

| Model | Params | Config | Augmentation| Test set | Decode method | Loss | CER |
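The 670 ms figure in the RoFormer results follows from the chunk size, the encoder's subsampling rate, and the frame shift. A small sketch of that arithmetic, assuming the values implied by the note: conv2d subsampling with rate 4 and a 7-frame receptive field, plus `stride_ms: 10.0` from `conf/chunk_roformer.yaml`. The function name is hypothetical.

```python
def chunk_latency_ms(chunk_size: int, subsampling: int = 4, context: int = 7,
                     stride_ms: float = 10.0) -> float:
    """Input span in ms covered by one decoding chunk of `chunk_size` encoder frames.

    Implements ((chunk_size - 1) * subsampling + context) * stride_ms, the formula
    quoted in RESULTS.md. Defaults assume conv2d subsampling and a 10 ms frame shift.
    """
    if chunk_size < 1:
        # decoding_chunk_size=-1 means full-utterance (non-streaming) decoding
        raise ValueError("chunk_size must be >= 1")
    frames = (chunk_size - 1) * subsampling + context
    return frames * stride_ms
```

For example, `chunk_latency_ms(16)` gives 670.0, matching the note; halving the chunk to 8 would cut the span to 350.0 ms at some cost in CER.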

diff --git a/examples/aishell/asr1/conf/chunk_roformer.yaml b/examples/aishell/asr1/conf/chunk_roformer.yaml

new file mode 100644

index 000000000..a4051a021

--- /dev/null

+++ b/examples/aishell/asr1/conf/chunk_roformer.yaml

@@ -0,0 +1,98 @@

+############################################

+# Network Architecture #

+############################################

+cmvn_file:

+cmvn_file_type: "json"

+# encoder related

+encoder: conformer

+encoder_conf:

+ output_size: 256 # dimension of attention

+ attention_heads: 4

+ linear_units: 2048 # the number of units of position-wise feed forward

+ num_blocks: 12 # the number of encoder blocks

+ dropout_rate: 0.1 # sublayer output dropout

+ positional_dropout_rate: 0.1

+ attention_dropout_rate: 0.0

+ input_layer: conv2d # encoder input type, you can choose conv2d, conv2d6 and conv2d8

+ normalize_before: True

+ cnn_module_kernel: 15

+ use_cnn_module: True

+ activation_type: 'swish'

+ pos_enc_layer_type: 'rope_pos' # abs_pos, rel_pos, rope_pos

+ selfattention_layer_type: 'rel_selfattn' # unused

+ causal: true

+ use_dynamic_chunk: true

+ cnn_module_norm: 'layer_norm' # using nn.LayerNorm makes model converge faster

+ use_dynamic_left_chunk: false

+# decoder related

+decoder: transformer # transformer, bitransformer

+decoder_conf:

+ attention_heads: 4

+ linear_units: 2048

+ num_blocks: 6

+ r_num_blocks: 0 # only for bitransformer

+ dropout_rate: 0.1 # sublayer output dropout

+ positional_dropout_rate: 0.1

+ self_attention_dropout_rate: 0.0

+ src_attention_dropout_rate: 0.0

+# hybrid CTC/attention

+model_conf:

+ ctc_weight: 0.3

+ lsm_weight: 0.1 # label smoothing option

+ reverse_weight: 0.0 # only for bitransformer

+ length_normalized_loss: false

+ init_type: 'kaiming_uniform' # !Warning: needed for convergence

+

+###########################################

+# Data #

+###########################################

+

+train_manifest: data/manifest.train

+dev_manifest: data/manifest.dev

+test_manifest: data/manifest.test

+

+

+###########################################

+# Dataloader #

+###########################################

+

+vocab_filepath: data/lang_char/vocab.txt

+spm_model_prefix: ''

+unit_type: 'char'

+preprocess_config: conf/preprocess.yaml

+feat_dim: 80

+stride_ms: 10.0

+window_ms: 25.0

+sortagrad: 0 # Feed samples from shortest to longest ; -1: enabled for all epochs, 0: disabled, other: enabled for 'other' epochs

+batch_size: 32

+maxlen_in: 512 # if input length > maxlen-in, batchsize is automatically reduced

+maxlen_out: 150 # if output length > maxlen-out, batchsize is automatically reduced

+minibatches: 0 # for debug

+batch_count: auto

+batch_bins: 0

+batch_frames_in: 0

+batch_frames_out: 0

+batch_frames_inout: 0

+num_workers: 2

+subsampling_factor: 1

+num_encs: 1

+

+###########################################

+# Training #

+###########################################

+n_epoch: 240

+accum_grad: 1

+global_grad_clip: 5.0

+dist_sampler: True

+optim: adam

+optim_conf:

+ lr: 0.001

+ weight_decay: 1.0e-6

+scheduler: warmuplr

+scheduler_conf:

+ warmup_steps: 25000

+ lr_decay: 1.0

+log_interval: 100

+checkpoint:

+ kbest_n: 50

+ latest_n: 5
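With `scheduler: warmuplr`, `lr: 0.001` and `warmup_steps: 25000`, the learning rate ramps up over the first 25k steps and then decays. A minimal sketch of the Noam-style formula used by WeNet-style `WarmupLR` schedulers, assuming PaddleSpeech's `warmuplr` follows the same rule; the `lr_decay` key is not modeled here.

```python
def warmup_lr(step: int, base_lr: float = 0.001, warmup_steps: int = 25000) -> float:
    """Noam-style warmup: linear ramp until warmup_steps, then decay proportional to step**-0.5.

    The peak, reached at step == warmup_steps, equals base_lr.
    """
    step = max(step, 1)  # guard against 0 ** -0.5
    return base_lr * warmup_steps ** 0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
```

Under this assumption the learning rate is 0.0005 both halfway through warmup (step 12500) and at step 100000, four times past the peak.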

diff --git a/examples/aishell/asr1/conf/chunk_roformer_bidecoder.yaml b/examples/aishell/asr1/conf/chunk_roformer_bidecoder.yaml

new file mode 100644

index 000000000..aa3a0aca7

--- /dev/null

+++ b/examples/aishell/asr1/conf/chunk_roformer_bidecoder.yaml

@@ -0,0 +1,98 @@

+############################################

+# Network Architecture #

+############################################

+cmvn_file:

+cmvn_file_type: "json"

+# encoder related

+encoder: conformer

+encoder_conf:

+ output_size: 256 # dimension of attention

+ attention_heads: 4

+ linear_units: 2048 # the number of units of position-wise feed forward

+ num_blocks: 12 # the number of encoder blocks

+ dropout_rate: 0.1 # sublayer output dropout

+ positional_dropout_rate: 0.1

+ attention_dropout_rate: 0.0

+ input_layer: conv2d # encoder input type, you can choose conv2d, conv2d6 and conv2d8

+ normalize_before: True

+ cnn_module_kernel: 15

+ use_cnn_module: True

+ activation_type: 'swish'

+ pos_enc_layer_type: 'rope_pos' # abs_pos, rel_pos, rope_pos

+ selfattention_layer_type: 'rel_selfattn' # unused

+ causal: true

+ use_dynamic_chunk: true

+ cnn_module_norm: 'layer_norm' # using nn.LayerNorm makes model converge faster

+ use_dynamic_left_chunk: false

+# decoder related

+decoder: bitransformer # transformer, bitransformer

+decoder_conf:

+ attention_heads: 4

+ linear_units: 2048

+ num_blocks: 3

+ r_num_blocks: 3 # only for bitransformer

+ dropout_rate: 0.1 # sublayer output dropout

+ positional_dropout_rate: 0.1

+ self_attention_dropout_rate: 0.0

+ src_attention_dropout_rate: 0.0

+# hybrid CTC/attention

+model_conf:

+ ctc_weight: 0.3

+ lsm_weight: 0.1 # label smoothing option

+ reverse_weight: 0.3 # only for bitransformer

+ length_normalized_loss: false

+ init_type: 'kaiming_uniform' # !Warning: needed for convergence

+

+###########################################

+# Data #

+###########################################

+

+train_manifest: data/manifest.train

+dev_manifest: data/manifest.dev

+test_manifest: data/manifest.test

+

+

+###########################################

+# Dataloader #

+###########################################

+

+vocab_filepath: data/lang_char/vocab.txt

+spm_model_prefix: ''

+unit_type: 'char'

+preprocess_config: conf/preprocess.yaml

+feat_dim: 80

+stride_ms: 10.0

+window_ms: 25.0

+sortagrad: 0 # Feed samples from shortest to longest ; -1: enabled for all epochs, 0: disabled, other: enabled for 'other' epochs

+batch_size: 32

+maxlen_in: 512 # if input length > maxlen-in, batchsize is automatically reduced

+maxlen_out: 150 # if output length > maxlen-out, batchsize is automatically reduced

+minibatches: 0 # for debug

+batch_count: auto

+batch_bins: 0

+batch_frames_in: 0

+batch_frames_out: 0

+batch_frames_inout: 0

+num_workers: 2

+subsampling_factor: 1

+num_encs: 1

+

+###########################################

+# Training #

+###########################################

+n_epoch: 240

+accum_grad: 1

+global_grad_clip: 5.0

+dist_sampler: True

+optim: adam

+optim_conf:

+ lr: 0.001

+ weight_decay: 1.0e-6

+scheduler: warmuplr

+scheduler_conf:

+ warmup_steps: 25000

+ lr_decay: 1.0

+log_interval: 100

+checkpoint:

+ kbest_n: 50

+ latest_n: 5
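The bidecoder config differs from `chunk_roformer.yaml` mainly in its decoder: 3 left-to-right plus 3 right-to-left blocks (`decoder: bitransformer`) and `reverse_weight: 0.3`. A sketch of how the two loss weights are commonly combined in WeNet-style hybrid CTC/attention models, assuming PaddleSpeech's U2 model uses the same formula; the loss values in the test are placeholders, not measured results.

```python
def hybrid_loss(loss_ctc: float, loss_att_l2r: float, loss_att_r2l: float,
                ctc_weight: float = 0.3, reverse_weight: float = 0.3) -> float:
    """Combine the CTC loss with a (bidirectional) attention-decoder loss.

    reverse_weight mixes the right-to-left decoder loss into the attention loss;
    ctc_weight then interpolates between CTC and attention.
    """
    loss_att = (1.0 - reverse_weight) * loss_att_l2r + reverse_weight * loss_att_r2l
    return ctc_weight * loss_ctc + (1.0 - ctc_weight) * loss_att
```

With `reverse_weight: 0.0`, as in `chunk_roformer.yaml`, the right-to-left term drops out and the formula reduces to the plain CTC/attention interpolation.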

diff --git a/examples/csmsc/tts2/local/inference_xpu.sh b/examples/csmsc/tts2/local/inference_xpu.sh

new file mode 100644

index 000000000..5d8d92054

--- /dev/null

+++ b/examples/csmsc/tts2/local/inference_xpu.sh

@@ -0,0 +1,46 @@

+#!/bin/bash

+

+train_output_path=$1

+

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=speedyspeech_csmsc \

+ --voc=pwgan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --device xpu

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=speedyspeech_csmsc \

+ --voc=mb_melgan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --device xpu

+fi

+

+# hifigan

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=speedyspeech_csmsc \

+ --voc=hifigan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --device xpu

+fi

diff --git a/examples/csmsc/tts2/local/synthesize_e2e_xpu.sh b/examples/csmsc/tts2/local/synthesize_e2e_xpu.sh

new file mode 100644

index 000000000..0285f42cd

--- /dev/null

+++ b/examples/csmsc/tts2/local/synthesize_e2e_xpu.sh

@@ -0,0 +1,122 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+ckpt_name=$3

+

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=pwgan_csmsc \

+ --voc_config=pwg_baker_ckpt_0.4/pwg_default.yaml \

+ --voc_ckpt=pwg_baker_ckpt_0.4/pwg_snapshot_iter_400000.pdz \

+ --voc_stat=pwg_baker_ckpt_0.4/pwg_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=mb_melgan_csmsc \

+ --voc_config=mb_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=mb_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1000000.pdz \

+ --voc_stat=mb_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# the pretrained models haven't been released yet

+# style melgan

+# style melgan's Dygraph-to-Static-Graph conversion is not ready yet

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=style_melgan_csmsc \

+ --voc_config=style_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=style_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1500000.pdz \

+ --voc_stat=style_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+ # --inference_dir=${train_output_path}/inference

+fi

+

+# hifigan

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=hifigan_csmsc \

+ --voc_config=hifigan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=hifigan_csmsc_ckpt_0.1.1/snapshot_iter_2500000.pdz \

+ --voc_stat=hifigan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# wavernn

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ echo "in wavernn syn_e2e"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=wavernn_csmsc \

+ --voc_config=wavernn_csmsc_ckpt_0.2.0/default.yaml \

+ --voc_ckpt=wavernn_csmsc_ckpt_0.2.0/snapshot_iter_400000.pdz \

+ --voc_stat=wavernn_csmsc_ckpt_0.2.0/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi
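The five stages of `synthesize_e2e_xpu.sh` differ only in the vocoder and its checkpoint files. A sketch that rebuilds the `--voc*` arguments from a table, with names and paths copied from the script; `VOCODERS` and `voc_args` are hypothetical helpers, not part of PaddleSpeech.

```python
# stage -> (vocoder name, checkpoint dir, config file, checkpoint file, stats file)
VOCODERS = {
    0: ("pwgan_csmsc", "pwg_baker_ckpt_0.4", "pwg_default.yaml",
        "pwg_snapshot_iter_400000.pdz", "pwg_stats.npy"),
    1: ("mb_melgan_csmsc", "mb_melgan_csmsc_ckpt_0.1.1", "default.yaml",
        "snapshot_iter_1000000.pdz", "feats_stats.npy"),
    2: ("style_melgan_csmsc", "style_melgan_csmsc_ckpt_0.1.1", "default.yaml",
        "snapshot_iter_1500000.pdz", "feats_stats.npy"),
    3: ("hifigan_csmsc", "hifigan_csmsc_ckpt_0.1.1", "default.yaml",
        "snapshot_iter_2500000.pdz", "feats_stats.npy"),
    4: ("wavernn_csmsc", "wavernn_csmsc_ckpt_0.2.0", "default.yaml",
        "snapshot_iter_400000.pdz", "feats_stats.npy"),
}


def voc_args(stage: int) -> list:
    """Return the --voc, --voc_config, --voc_ckpt and --voc_stat flags for one stage."""
    name, ckpt_dir, config, ckpt, stats = VOCODERS[stage]
    return [
        f"--voc={name}",
        f"--voc_config={ckpt_dir}/{config}",
        f"--voc_ckpt={ckpt_dir}/{ckpt}",
        f"--voc_stat={ckpt_dir}/{stats}",
    ]
```

One difference the table does not capture: stage 2 (style melgan) omits `--inference_dir`, because its dynamic-to-static conversion is not ready.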

diff --git a/examples/csmsc/tts2/local/synthesize_xpu.sh b/examples/csmsc/tts2/local/synthesize_xpu.sh

new file mode 100644

index 000000000..801789c26

--- /dev/null

+++ b/examples/csmsc/tts2/local/synthesize_xpu.sh

@@ -0,0 +1,110 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+ckpt_name=$3

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=pwgan_csmsc \

+ --voc_config=pwg_baker_ckpt_0.4/pwg_default.yaml \

+ --voc_ckpt=pwg_baker_ckpt_0.4/pwg_snapshot_iter_400000.pdz \

+ --voc_stat=pwg_baker_ckpt_0.4/pwg_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=mb_melgan_csmsc \

+ --voc_config=mb_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=mb_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1000000.pdz \

+ --voc_stat=mb_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# style melgan

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=style_melgan_csmsc \

+ --voc_config=style_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=style_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1500000.pdz \

+ --voc_stat=style_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# hifigan

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ echo "in hifigan syn"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=hifigan_csmsc \

+ --voc_config=hifigan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=hifigan_csmsc_ckpt_0.1.1/snapshot_iter_2500000.pdz \

+ --voc_stat=hifigan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --tones_dict=dump/tone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# wavernn

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ echo "in wavernn syn"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=speedyspeech_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/feats_stats.npy \

+ --voc=wavernn_csmsc \

+ --voc_config=wavernn_csmsc_ckpt_0.2.0/default.yaml \

+ --voc_ckpt=wavernn_csmsc_ckpt_0.2.0/snapshot_iter_400000.pdz \

+ --voc_stat=wavernn_csmsc_ckpt_0.2.0/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --tones_dict=dump/tone_id_map.txt \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

diff --git a/examples/csmsc/tts2/local/train_xpu.sh b/examples/csmsc/tts2/local/train_xpu.sh

new file mode 100644

index 000000000..0c07c27fc

--- /dev/null

+++ b/examples/csmsc/tts2/local/train_xpu.sh

@@ -0,0 +1,15 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+

+python ${BIN_DIR}/train.py \

+ --train-metadata=dump/train/norm/metadata.jsonl \

+ --dev-metadata=dump/dev/norm/metadata.jsonl \

+ --config=${config_path} \

+ --output-dir=${train_output_path} \

+ --ngpu=0 \

+ --nxpu=1 \

+ --phones-dict=dump/phone_id_map.txt \

+ --tones-dict=dump/tone_id_map.txt \

+ --use-relative-path=True

diff --git a/examples/csmsc/tts2/run_xpu.sh b/examples/csmsc/tts2/run_xpu.sh

new file mode 100644

index 000000000..4b867961f

--- /dev/null

+++ b/examples/csmsc/tts2/run_xpu.sh

@@ -0,0 +1,42 @@

+#!/bin/bash

+

+set -e

+source path.sh

+

+xpus=0,1

+stage=0

+stop_stage=100

+

+conf_path=conf/default.yaml

+train_output_path=exp/default

+ckpt_name=snapshot_iter_76.pdz

+

+# with the following command, you can choose the stage range you want to run

+# such as `./run_xpu.sh --stage 0 --stop-stage 0`

+# this cannot be combined with positional arguments such as `$1`, `$2`, ...

+source ${MAIN_ROOT}/utils/parse_options.sh || exit 1

+

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ # prepare data

+ ./local/preprocess.sh ${conf_path} || exit -1

+fi

+

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ # train the model; all checkpoints are saved under the `train_output_path/checkpoints/` dir

+ FLAGS_selected_xpus=${xpus} ./local/train_xpu.sh ${conf_path} ${train_output_path} || exit -1

+fi

+

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ # synthesize, vocoder is pwgan by default

+ FLAGS_selected_xpus=${xpus} ./local/synthesize_xpu.sh ${conf_path} ${train_output_path} ${ckpt_name} || exit -1

+fi

+

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ # synthesize_e2e, vocoder is pwgan by default

+ FLAGS_selected_xpus=${xpus} ./local/synthesize_e2e_xpu.sh ${conf_path} ${train_output_path} ${ckpt_name} || exit -1

+fi

+

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ # inference with static model

+ FLAGS_selected_xpus=${xpus} ./local/inference_xpu.sh ${train_output_path} || exit -1

+fi
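Each block in `run_xpu.sh` runs only when `stage <= N <= stop_stage`, so `./run_xpu.sh --stage 1 --stop-stage 2` trains and then runs `synthesize_xpu.sh`. A small Python sketch of that selection rule; the stage descriptions are copied from the script and `selected_stages` is a hypothetical name.

```python
STAGES = {
    0: "preprocess (local/preprocess.sh)",
    1: "train (local/train_xpu.sh)",
    2: "synthesize (local/synthesize_xpu.sh)",
    3: "synthesize_e2e (local/synthesize_e2e_xpu.sh)",
    4: "inference (local/inference_xpu.sh)",
}


def selected_stages(stage: int, stop_stage: int) -> list:
    """Mirror `[ ${stage} -le N ] && [ ${stop_stage} -ge N ]` for every stage N."""
    return [n for n in sorted(STAGES) if stage <= n <= stop_stage]
```

With the script defaults (`stage=0`, `stop_stage=100`) every stage runs; an inverted range such as `--stage 3 --stop-stage 2` selects nothing.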

diff --git a/examples/csmsc/tts3/local/inference_xpu.sh b/examples/csmsc/tts3/local/inference_xpu.sh

new file mode 100644

index 000000000..541dc6262

--- /dev/null

+++ b/examples/csmsc/tts3/local/inference_xpu.sh

@@ -0,0 +1,55 @@

+#!/bin/bash

+

+train_output_path=$1

+

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=fastspeech2_csmsc \

+ --voc=pwgan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --device xpu

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=fastspeech2_csmsc \

+ --voc=mb_melgan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --device xpu

+fi

+

+# hifigan

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=fastspeech2_csmsc \

+ --voc=hifigan_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --device xpu

+fi

+

+# wavernn

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ python3 ${BIN_DIR}/../inference.py \

+ --inference_dir=${train_output_path}/inference \

+ --am=fastspeech2_csmsc \

+ --voc=wavernn_csmsc \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/pd_infer_out \

+ --phones_dict=dump/phone_id_map.txt \

+ --device xpu

+fi

\ No newline at end of file

diff --git a/examples/csmsc/tts3/local/synthesize_e2e_xpu.sh b/examples/csmsc/tts3/local/synthesize_e2e_xpu.sh

new file mode 100644

index 000000000..bb58a37c8

--- /dev/null

+++ b/examples/csmsc/tts3/local/synthesize_e2e_xpu.sh

@@ -0,0 +1,119 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+ckpt_name=$3

+

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=pwgan_csmsc \

+ --voc_config=pwg_baker_ckpt_0.4/pwg_default.yaml \

+ --voc_ckpt=pwg_baker_ckpt_0.4/pwg_snapshot_iter_400000.pdz \

+ --voc_stat=pwg_baker_ckpt_0.4/pwg_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=mb_melgan_csmsc \

+ --voc_config=mb_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=mb_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1000000.pdz \

+ --voc_stat=mb_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# the pretrained models haven't been released yet

+# style melgan

+# style melgan's dygraph-to-static conversion is not ready yet

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=style_melgan_csmsc \

+ --voc_config=style_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=style_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1500000.pdz \

+ --voc_stat=style_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+ # --inference_dir=${train_output_path}/inference

+fi

+

+# hifigan

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ echo "in hifigan syn_e2e"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=hifigan_csmsc \

+ --voc_config=hifigan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=hifigan_csmsc_ckpt_0.1.1/snapshot_iter_2500000.pdz \

+ --voc_stat=hifigan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+

+# wavernn

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ echo "in wavernn syn_e2e"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize_e2e.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=wavernn_csmsc \

+ --voc_config=wavernn_csmsc_ckpt_0.2.0/default.yaml \

+ --voc_ckpt=wavernn_csmsc_ckpt_0.2.0/snapshot_iter_400000.pdz \

+ --voc_stat=wavernn_csmsc_ckpt_0.2.0/feats_stats.npy \

+ --lang=zh \

+ --text=${BIN_DIR}/../../assets/sentences.txt \

+ --output_dir=${train_output_path}/test_e2e \

+ --phones_dict=dump/phone_id_map.txt \

+ --inference_dir=${train_output_path}/inference \

+ --ngpu=0 \

+ --nxpu=1

+fi

diff --git a/examples/csmsc/tts3/local/synthesize_xpu.sh b/examples/csmsc/tts3/local/synthesize_xpu.sh

new file mode 100644

index 000000000..fac8677a7

--- /dev/null

+++ b/examples/csmsc/tts3/local/synthesize_xpu.sh

@@ -0,0 +1,105 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+ckpt_name=$3

+stage=0

+stop_stage=0

+

+# pwgan

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=pwgan_csmsc \

+ --voc_config=pwg_baker_ckpt_0.4/pwg_default.yaml \

+ --voc_ckpt=pwg_baker_ckpt_0.4/pwg_snapshot_iter_400000.pdz \

+ --voc_stat=pwg_baker_ckpt_0.4/pwg_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# for more GAN Vocoders

+# multi band melgan

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=mb_melgan_csmsc \

+ --voc_config=mb_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=mb_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1000000.pdz \

+ --voc_stat=mb_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# style melgan

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=style_melgan_csmsc \

+ --voc_config=style_melgan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=style_melgan_csmsc_ckpt_0.1.1/snapshot_iter_1500000.pdz \

+ --voc_stat=style_melgan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# hifigan

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ echo "in hifigan syn"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=hifigan_csmsc \

+ --voc_config=hifigan_csmsc_ckpt_0.1.1/default.yaml \

+ --voc_ckpt=hifigan_csmsc_ckpt_0.1.1/snapshot_iter_2500000.pdz \

+ --voc_stat=hifigan_csmsc_ckpt_0.1.1/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

+

+# wavernn

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ echo "in wavernn syn"

+ FLAGS_allocator_strategy=naive_best_fit \

+ python3 ${BIN_DIR}/../synthesize.py \

+ --am=fastspeech2_csmsc \

+ --am_config=${config_path} \

+ --am_ckpt=${train_output_path}/checkpoints/${ckpt_name} \

+ --am_stat=dump/train/speech_stats.npy \

+ --voc=wavernn_csmsc \

+ --voc_config=wavernn_csmsc_ckpt_0.2.0/default.yaml \

+ --voc_ckpt=wavernn_csmsc_ckpt_0.2.0/snapshot_iter_400000.pdz \

+ --voc_stat=wavernn_csmsc_ckpt_0.2.0/feats_stats.npy \

+ --test_metadata=dump/test/norm/metadata.jsonl \

+ --output_dir=${train_output_path}/test \

+ --phones_dict=dump/phone_id_map.txt \

+ --ngpu=0 \

+ --nxpu=1

+fi

diff --git a/examples/csmsc/tts3/local/train_xpu.sh b/examples/csmsc/tts3/local/train_xpu.sh

new file mode 100644

index 000000000..a7d889888

--- /dev/null

+++ b/examples/csmsc/tts3/local/train_xpu.sh

@@ -0,0 +1,13 @@

+#!/bin/bash

+

+config_path=$1

+train_output_path=$2

+

+python3 ${BIN_DIR}/train.py \

+ --train-metadata=dump/train/norm/metadata.jsonl \

+ --dev-metadata=dump/dev/norm/metadata.jsonl \

+ --config=${config_path} \

+ --output-dir=${train_output_path} \

+ --ngpu=0 \

+ --nxpu=1 \

+ --phones-dict=dump/phone_id_map.txt

\ No newline at end of file

diff --git a/examples/csmsc/tts3/run_xpu.sh b/examples/csmsc/tts3/run_xpu.sh

new file mode 100644

index 000000000..4922d6b4b

--- /dev/null

+++ b/examples/csmsc/tts3/run_xpu.sh

@@ -0,0 +1,42 @@

+#!/bin/bash

+

+set -e

+source path.sh

+

+xpus=0,1

+stage=0

+stop_stage=100

+

+conf_path=conf/default.yaml

+train_output_path=exp/default

+ckpt_name=snapshot_iter_153.pdz

+

+# with the following command, you can choose the stage range you want to run

+# e.g. `./run_xpu.sh --stage 0 --stop-stage 0`

+# this cannot be combined with positional arguments `$1`, `$2`, ...

+source ${MAIN_ROOT}/utils/parse_options.sh || exit 1

+

+if [ ${stage} -le 0 ] && [ ${stop_stage} -ge 0 ]; then

+ # prepare data

+ ./local/preprocess.sh ${conf_path} || exit -1

+fi

+

+if [ ${stage} -le 1 ] && [ ${stop_stage} -ge 1 ]; then

+ # train model, all `ckpt` under `train_output_path/checkpoints/` dir

+ FLAGS_selected_xpus=${xpus} ./local/train_xpu.sh ${conf_path} ${train_output_path} || exit -1

+fi

+

+if [ ${stage} -le 2 ] && [ ${stop_stage} -ge 2 ]; then

+ # synthesize, vocoder is pwgan by default

+ FLAGS_selected_xpus=${xpus} ./local/synthesize_xpu.sh ${conf_path} ${train_output_path} ${ckpt_name} || exit -1

+fi

+

+if [ ${stage} -le 3 ] && [ ${stop_stage} -ge 3 ]; then

+ # synthesize_e2e, vocoder is pwgan by default

+ FLAGS_selected_xpus=${xpus} ./local/synthesize_e2e_xpu.sh ${conf_path} ${train_output_path} ${ckpt_name} || exit -1

+fi

+

+if [ ${stage} -le 4 ] && [ ${stop_stage} -ge 4 ]; then

+ # inference with static model, vocoder is pwgan by default

+ FLAGS_selected_xpus=${xpus} ./local/inference_xpu.sh ${train_output_path} || exit -1

+fi
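Every script in this PR gates its stages with `if [ ${stage} -le N ] && [ ${stop_stage} -ge N ]`, so stage N runs only when `stage <= N <= stop_stage`. The Python sketch below mirrors that test for the five stages of `run_xpu.sh`; `selected_stages` is a hypothetical helper, not part of PaddleSpeech. Note that `local/synthesize_xpu.sh` and `local/synthesize_e2e_xpu.sh` hard-code `stage=0` and `stop_stage=0`, so by default only their PWGAN vocoder branch runs.

```python
def selected_stages(stage: int, stop_stage: int, stages: list) -> list:
    """Return the stage ids a run_xpu.sh-style script would execute.

    Mirrors `if [ ${stage} -le N ] && [ ${stop_stage} -ge N ]`.
    """
    return [n for n in stages if stage <= n and stop_stage >= n]


# Stage ids and the scripts they call, as laid out in run_xpu.sh.
STAGES = {
    0: "local/preprocess.sh",
    1: "local/train_xpu.sh",
    2: "local/synthesize_xpu.sh",
    3: "local/synthesize_e2e_xpu.sh",
    4: "local/inference_xpu.sh",
}

if __name__ == "__main__":
    # Equivalent of `./run_xpu.sh --stage 2 --stop-stage 3`
    for n in selected_stages(2, 3, sorted(STAGES)):
        print(n, STAGES[n])
```

With the script's defaults (`stage=0`, `stop_stage=100`) every stage from 0 to 4 runs.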

diff --git a/paddlespeech/dataset/s2t/avg_model.py b/paddlespeech/dataset/s2t/avg_model.py

index c5753b726..5bd5cb1f0 100755

--- a/paddlespeech/dataset/s2t/avg_model.py

+++ b/paddlespeech/dataset/s2t/avg_model.py

@@ -20,30 +20,6 @@ import numpy as np

import paddle

-def define_argparse():

- parser = argparse.ArgumentParser(description='average model')

- parser.add_argument('--dst_model', required=True, help='averaged model')

- parser.add_argument(

- '--ckpt_dir', required=True, help='ckpt model dir for average')

- parser.add_argument(

- '--val_best', action="store_true", help='averaged model')

- parser.add_argument(

- '--num', default=5, type=int, help='nums for averaged model')

- parser.add_argument(

- '--min_epoch',

- default=0,

- type=int,

- help='min epoch used for averaging model')

- parser.add_argument(

- '--max_epoch',

- default=65536, # Big enough

- type=int,

- help='max epoch used for averaging model')

-

- args = parser.parse_args()

- return args

-

-

def average_checkpoints(dst_model="",

ckpt_dir="",

val_best=True,

@@ -85,7 +61,7 @@ def average_checkpoints(dst_model="",

print(path_list)

avg = None

- num = args.num

+ num = num

assert num == len(path_list)

for path in path_list:

print(f'Processing {path}')

@@ -100,14 +76,14 @@ def average_checkpoints(dst_model="",

if avg[k] is not None:

avg[k] /= num

- paddle.save(avg, args.dst_model)

- print(f'Saving to {args.dst_model}')

+ paddle.save(avg, dst_model)

+ print(f'Saving to {dst_model}')

- meta_path = os.path.splitext(args.dst_model)[0] + '.avg.json'

+ meta_path = os.path.splitext(dst_model)[0] + '.avg.json'

with open(meta_path, 'w') as f:

data = json.dumps({

- "mode": 'val_best' if args.val_best else 'latest',

- "avg_ckpt": args.dst_model,

+ "mode": 'val_best' if val_best else 'latest',

+ "avg_ckpt": dst_model,

"val_loss_mean": avg_val_score,

"ckpts": path_list,

"epochs": selected_epochs.tolist(),

@@ -116,9 +92,40 @@ def average_checkpoints(dst_model="",

f.write(data + "\n")

+def define_argparse():

+ parser = argparse.ArgumentParser(description='average model')

+ parser.add_argument('--dst_model', required=True, help='averaged model')

+ parser.add_argument(

+ '--ckpt_dir', required=True, help='ckpt model dir for average')

+ parser.add_argument(

+ '--val_best', action="store_true", help='averaged model')

+ parser.add_argument(

+ '--num', default=5, type=int, help='nums for averaged model')

+ parser.add_argument(

+ '--min_epoch',

+ default=0,

+ type=int,

+ help='min epoch used for averaging model')

+ parser.add_argument(

+ '--max_epoch',

+ default=65536, # Big enough

+ type=int,

+ help='max epoch used for averaging model')

+

+ args = parser.parse_args()

+ print(args)

+ return args

+

+

def main():

args = define_argparse()

- average_checkpoints(args)

+ average_checkpoints(

+ dst_model=args.dst_model,

+ ckpt_dir=args.ckpt_dir,

+ val_best=args.val_best,

+ num=args.num,

+ min_epoch=args.min_epoch,

+ max_epoch=args.max_epoch)

if __name__ == '__main__':
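With this refactor, `average_checkpoints` receives `dst_model`, `ckpt_dir`, `val_best`, `num`, `min_epoch` and `max_epoch` as keyword arguments instead of reading attributes of an `args` object, so it can also be called from Python. Its core is an element-wise mean: sum each parameter over the selected checkpoints, then divide by `num`. A minimal pure-Python sketch of that step, using plain dicts of float lists as hypothetical stand-ins for the Paddle state dicts:

```python
from typing import Dict, List

State = Dict[str, List[float]]


def average_states(states: List[State]) -> State:
    """Element-wise mean over parameter dicts (stand-ins for checkpoint state dicts)."""
    num = len(states)
    assert num > 0, "need at least one checkpoint"
    avg: State = {}
    for state in states:
        for name, values in state.items():
            if name not in avg:
                # first checkpoint: copy so the inputs are not mutated
                avg[name] = list(values)
            else:
                avg[name] = [a + b for a, b in zip(avg[name], values)]
    # divide the running sums by the number of checkpoints
    return {name: [v / num for v in values] for name, values in avg.items()}


if __name__ == "__main__":
    ckpts = [{"w": [1.0, 2.0], "b": [0.5]}, {"w": [3.0, 4.0], "b": [1.5]}]
    print(average_states(ckpts))
```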

diff --git a/paddlespeech/s2t/exps/deepspeech2/model.py b/paddlespeech/s2t/exps/deepspeech2/model.py

index 7ab8cf853..d007a9e39 100644

--- a/paddlespeech/s2t/exps/deepspeech2/model.py

+++ b/paddlespeech/s2t/exps/deepspeech2/model.py

@@ -27,7 +27,6 @@ from paddlespeech.audio.text.text_featurizer import TextFeaturizer

from paddlespeech.s2t.io.dataloader import BatchDataLoader

from paddlespeech.s2t.models.ds2 import DeepSpeech2InferModel

from paddlespeech.s2t.models.ds2 import DeepSpeech2Model

-from paddlespeech.s2t.training.gradclip import ClipGradByGlobalNormWithLog

from paddlespeech.s2t.training.reporter import report

from paddlespeech.s2t.training.timer import Timer

from paddlespeech.s2t.training.trainer import Trainer

@@ -148,7 +147,7 @@ class DeepSpeech2Trainer(Trainer):

if not self.train:

return

- grad_clip = ClipGradByGlobalNormWithLog(config.global_grad_clip)

+ grad_clip = paddle.nn.ClipGradByGlobalNorm(config.global_grad_clip)

lr_scheduler = paddle.optimizer.lr.ExponentialDecay(

learning_rate=config.lr, gamma=config.lr_decay, verbose=True)

optimizer = paddle.optimizer.Adam(
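The trainer now uses Paddle's built-in `paddle.nn.ClipGradByGlobalNorm` directly; the removed `ClipGradByGlobalNormWithLog` subclass mainly added debug logging of per-parameter gradient norms. Global-norm clipping computes one L2 norm over all gradients and rescales every gradient by `clip_norm / max(global_norm, clip_norm)`, so gradients are left unchanged when the norm is already below the threshold. A pure-Python sketch of that rule (the function name is illustrative):

```python
import math
from typing import List


def clip_by_global_norm(grads: List[List[float]], clip_norm: float) -> List[List[float]]:
    """Rescale all gradients by clip_norm / max(global_norm, clip_norm)."""
    # one L2 norm over every element of every gradient
    global_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    scale = clip_norm / max(global_norm, clip_norm)
    return [[g * scale for g in grad] for grad in grads]


if __name__ == "__main__":
    # global norm is 5.0, so every gradient is scaled by 1.0 / 5.0
    print(clip_by_global_norm([[3.0], [4.0]], clip_norm=1.0))
```

Because a single scale factor is shared by all parameters, the direction of the overall update is preserved.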

diff --git a/paddlespeech/s2t/models/u2/u2.py b/paddlespeech/s2t/models/u2/u2.py

index f716fa3b5..2e1c14ac1 100644

--- a/paddlespeech/s2t/models/u2/u2.py

+++ b/paddlespeech/s2t/models/u2/u2.py

@@ -145,7 +145,6 @@ class U2BaseModel(ASRInterface, nn.Layer):

text_lengths)

ctc_time = time.time() - start

#logger.debug(f"ctc time: {ctc_time}")

-

if loss_ctc is None:

loss = loss_att

elif loss_att is None:

@@ -916,6 +915,8 @@ class U2Model(U2DecodeModel):

decoder_type = configs.get('decoder', 'transformer')

logger.debug(f"U2 Decoder type: {decoder_type}")

if decoder_type == 'transformer':

+ configs['model_conf'].pop('reverse_weight', None)

+ configs['decoder_conf'].pop('r_num_blocks', None)

decoder = TransformerDecoder(vocab_size,

encoder.output_size(),

**configs['decoder_conf'])

diff --git a/paddlespeech/s2t/models/wav2vec2/wav2vec2_ASR.py b/paddlespeech/s2t/models/wav2vec2/wav2vec2_ASR.py

index 59a67a1e5..a3744d340 100755

--- a/paddlespeech/s2t/models/wav2vec2/wav2vec2_ASR.py

+++ b/paddlespeech/s2t/models/wav2vec2/wav2vec2_ASR.py

@@ -188,7 +188,7 @@ class Wav2vec2ASR(nn.Layer):

x_lens = x.shape[1]

ctc_probs = self.ctc.log_softmax(x) # (B, maxlen, vocab_size)

topk_prob, topk_index = ctc_probs.topk(1, axis=2) # (B, maxlen, 1)

- topk_index = topk_index.view(batch_size, x_lens) # (B, maxlen)

+ topk_index = topk_index.view([batch_size, x_lens]) # (B, maxlen)

hyps = [hyp.tolist() for hyp in topk_index]

hyps = [remove_duplicates_and_blank(hyp) for hyp in hyps]
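Paddle's `view` expects the target shape as a single list, which is why the call is now `view([batch_size, x_lens])`. The surrounding code is greedy (best-path) CTC decoding: take the top-1 token per frame, collapse consecutive repeats, then drop blanks. A pure-Python sketch for a single utterance, assuming the blank id is 0:

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]],
                      blank_id: int = 0) -> List[int]:
    """Best-path CTC decoding for one utterance of shape (T, vocab_size).

    blank_id=0 is an assumption of this sketch.
    """
    # top-1 token per frame, like ctc_probs.topk(1, axis=2) for one batch item
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    hyp: List[int] = []
    prev = None
    for token in best:
        # collapse consecutive repeats, then drop blanks
        if token != prev and token != blank_id:
            hyp.append(token)
        prev = token
    return hyp


if __name__ == "__main__":
    path = [1, 1, 0, 1, 2, 2, 0]
    scores = [[0.9 if v == t else 0.05 for v in range(3)] for t in path]
    print(ctc_greedy_decode(scores))  # [1, 1, 2]
```

The blank frame between the two runs of token 1 is what lets the repeated label survive the collapse.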

diff --git a/paddlespeech/s2t/modules/attention.py b/paddlespeech/s2t/modules/attention.py

index 14336c03d..10ab3eaea 100644

--- a/paddlespeech/s2t/modules/attention.py

+++ b/paddlespeech/s2t/modules/attention.py

@@ -15,6 +15,7 @@

# Modified from wenet(https://github.com/wenet-e2e/wenet)

"""Multi-Head Attention layer definition."""

import math

+from typing import List

from typing import Tuple

import paddle

@@ -26,7 +27,10 @@ from paddlespeech.s2t.utils.log import Log

logger = Log(__name__).getlog()

-__all__ = ["MultiHeadedAttention", "RelPositionMultiHeadedAttention"]

+__all__ = [

+ "MultiHeadedAttention", "RelPositionMultiHeadedAttention",

+ "RoPERelPositionMultiHeadedAttention"

+]

# Relative Positional Encodings

# https://www.jianshu.com/p/c0608efcc26f

@@ -165,6 +169,7 @@ class MultiHeadedAttention(nn.Layer):

and `head * d_k == size`

"""

+ # (B,T,D) -> (B,H,T,D/H)

q, k, v = self.forward_qkv(query, key, value)

# when export onnx model, for 1st chunk, we feed

@@ -373,3 +378,139 @@ class RelPositionMultiHeadedAttention(MultiHeadedAttention):

self.d_k) # (batch, head, time1, time2)

return self.forward_attention(v, scores, mask), new_cache

+

+

+class RoPERelPositionMultiHeadedAttention(MultiHeadedAttention):

+ """Multi-Head Attention layer with RoPE relative position encoding."""

+

+ def __init__(self,

+ n_head,

+ n_feat,

+ dropout_rate,

+ adaptive_scale=False,

+ init_weights=False):

+ """Construct a RoPERelPositionMultiHeadedAttention object.

+ Paper: https://arxiv.org/abs/2104.09864

+ Args:

+ n_head (int): The number of heads.

+ n_feat (int): The number of features.

+ dropout_rate (float): Dropout rate.

+ """

+ super().__init__(n_head, n_feat, dropout_rate)

+

+ def align(self, tensor: paddle.Tensor, axes: List[int], ndim=None):

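+ """Realign a tensor (a batched version of expand_dims).

+ axes: axis i of the input tensor is aligned to axis axes[i] of the new tensor;

+ ndim: number of dimensions of the new tensor.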

+ """

+ assert len(axes) == tensor.dim()

+ assert ndim or min(axes) >= 0

+

+ ndim = ndim or max(axes) + 1

+

+ # a[0, None, 1] = a[0, np.newaxis, 1]

+ indices = [None] * ndim

+ for i in axes:

+ # slice nothing, a[0, slice(None), 1] = a[0, :, 1]

+ indices[i] = slice(None)

+

+ return tensor[indices]

+

+ def apply_rotary_position_embeddings(self, sinusoidal, *tensors):

+ """Apply RoPE to the given tensors,

+ where sinusoidal.shape=[B, T, D], tensors is a list of tensors, and

+ tensor.shape=[B, T, ..., D], or (B,H,T,D/H)

+ """

+ assert len(tensors) > 0, 'at least one input tensor'

+ assert all(

+ [tensor.shape == tensors[0].shape

+ for tensor in tensors[1:]]), 'all tensors must have the same shape'

+

+ # (B,H,T,D)

+ ndim = tensors[0].dim()

+ _, H, T, D = tensors[0].shape

+

+ # sinusoidal shape same with tensors[0]

+ # [B,T,D] -> [B,T,H,D/H] -> (B,H,T,D/H)

+ # sinusoidal = self.align(sinusoidal, [0, 1, -1], ndim)

+ sinusoidal = sinusoidal.reshape((1, T, H, D)).transpose([0, 2, 1, 3])

+

+ # http://man.hubwiz.com/docset/TensorFlow.docset/Contents/Resources/Documents/api_docs/python/tf/keras/backend/repeat_elements.html

+ # like np.repeat, x (s1, s2, s3), axis 1, (s1, s2*rep, s3)

+ # [b,T, ..., d/2] -> [b,T, ..., d]

+ cos_pos = paddle.repeat_interleave(sinusoidal[..., 1::2], 2, axis=-1)

+ sin_pos = paddle.repeat_interleave(sinusoidal[..., 0::2], 2, axis=-1)

+ outputs = []

+ for tensor in tensors:

+ # x2 = [-x2, x1, -x4, x3, ..., -x_d, x_{d-1}]

+ tensor2 = paddle.stack([-tensor[..., 1::2], tensor[..., ::2]], ndim)

+ tensor2 = paddle.reshape(tensor2, paddle.shape(tensor))

+

+ # Eq. (34): out = x * cos_pos + x2 * sin_pos

+ outputs.append(tensor * cos_pos + tensor2 * sin_pos)

+ return outputs[0] if len(outputs) == 1 else outputs

+

+ def forward(self,

+ query: paddle.Tensor,

+ key: paddle.Tensor,

+ value: paddle.Tensor,

+ mask: paddle.Tensor=paddle.ones([0, 0, 0], dtype=paddle.bool),

+ pos_emb: paddle.Tensor=paddle.empty([0]),

+ cache: paddle.Tensor=paddle.zeros([0, 0, 0, 0])

+ ) -> Tuple[paddle.Tensor, paddle.Tensor]:

+ """Compute 'Scaled Dot Product Attention' with rotary positional encoding (RoPE).

+ Ref: https://github.com/facebookresearch/llama/blob/main/llama/model.py

+ Args:

+ query (paddle.Tensor): Query tensor (#batch, time1, size).

+ key (paddle.Tensor): Key tensor (#batch, time2, size).

+ value (paddle.Tensor): Value tensor (#batch, time2, size).

+ mask (paddle.Tensor): Mask tensor (#batch, 1, time2) or

+ (#batch, time1, time2), (0, 0, 0) means fake mask.

+ pos_emb (paddle.Tensor): Positional embedding tensor

+ (#batch, time2, size).

+ cache (paddle.Tensor): Cache tensor (1, head, cache_t, d_k * 2),

+ where `cache_t == chunk_size * num_decoding_left_chunks`

+ and `head * d_k == size`

+ Returns:

+ paddle.Tensor: Output tensor (#batch, time1, d_model).

+ paddle.Tensor: Cache tensor (1, head, cache_t + time1, d_k * 2)

+ where `cache_t == chunk_size * num_decoding_left_chunks`

+ and `head * d_k == size`

+ """

+ q, k, v = self.forward_qkv(query, key, value)

+ # q = q.transpose([0, 2, 1, 3]) # (batch, time1, head, d_k)

+

+ # f{q,k}(x_m, m) = R^d_{\theta, m} W_{q,k} x_m, m is position index

+ # q_t is always chunk_size

+ q_t = q.shape[2]

+ q = self.apply_rotary_position_embeddings(pos_emb[:, -q_t:, :], q)

+ # k grows during streaming decoding, but cached keys were already rotated, so only the new keys are rotated here.

+ k = self.apply_rotary_position_embeddings(pos_emb[:, -q_t:, :], k)

+

+ # when export onnx model, for 1st chunk, we feed

+ # cache(1, head, 0, d_k * 2) (16/-1, -1/-1, 16/0 mode)

+ # or cache(1, head, real_cache_t, d_k * 2) (16/4 mode).

+ # In all modes, `if cache.size(0) > 0` will always be `True`

+ # and we will always do splitting and

+ # concatenation (this will simplify onnx export). Note that

+ # it's OK to concat & split zero-shaped tensors(see code below).

+ # when export jit model, for 1st chunk, we always feed

+ # cache(0, 0, 0, 0) since jit supports dynamic if-branch.

+ # >>> a = torch.ones((1, 2, 0, 4))

+ # >>> b = torch.ones((1, 2, 3, 4))

+ # >>> c = torch.cat((a, b), dim=2)

+ # >>> torch.equal(b, c) # True

+ # >>> d = torch.split(a, 2, dim=-1)

+ # >>> torch.equal(d[0], d[1]) # True

+ if cache.shape[0] > 0:

+ # last dim `d_k * 2` for (key, val)

+ key_cache, value_cache = paddle.split(cache, 2, axis=-1)

+ k = paddle.concat([key_cache, k], axis=2)

+ v = paddle.concat([value_cache, v], axis=2)

+ # We do cache slicing in encoder.forward_chunk, since it's

+ # non-trivial to calculate `next_cache_start` here.

+ new_cache = paddle.concat((k, v), axis=-1)

+

+ # dot(q, k)

+ scores = paddle.matmul(q, k, transpose_y=True) / math.sqrt(self.d_k)

+ return self.forward_attention(v, scores, mask), new_cache
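`apply_rotary_position_embeddings` rotates each consecutive pair of features by a position-dependent angle: it builds `x2 = [-x2, x1, -x4, x3, ...]` and returns `x * cos_pos + x2 * sin_pos`, where the sinusoid table stores sin at even and cos at odd indices. The pure-Python sketch below reproduces that rule for a single head vector and checks the property that motivates RoPE: the dot product of a rotated query and key depends only on their relative offset. It assumes `base=10000` and one set of frequencies per head; in the diff, the `d_model`-wide table is reshaped to `(1, T, H, d_k)`, so each head actually receives a different slice of frequencies. The helper names are illustrative.

```python
import math
from typing import List


def sinusoid(pos: float, dim: int, base: float = 10000.0) -> List[float]:
    """Interleaved table: even index = sin(pos * theta_i), odd index = cos(pos * theta_i)."""
    out: List[float] = []
    for i in range(dim // 2):
        angle = pos * base ** (-2 * i / dim)
        out += [math.sin(angle), math.cos(angle)]
    return out


def apply_rope(x: List[float], pos: float, base: float = 10000.0) -> List[float]:
    dim = len(x)
    assert dim % 2 == 0, "feature size must be even"
    table = sinusoid(pos, dim, base)
    # repeat_interleave(table[1::2], 2) and repeat_interleave(table[0::2], 2)
    cos_pos = [c for c in table[1::2] for _ in range(2)]
    sin_pos = [s for s in table[0::2] for _ in range(2)]
    # x2 = [-x2, x1, -x4, x3, ...] (1-indexed, as in the diff's comment)
    x2: List[float] = []
    for j in range(0, dim, 2):
        x2 += [-x[j + 1], x[j]]
    return [a * c + b * s for a, b, c, s in zip(x, x2, cos_pos, sin_pos)]


def dot(a: List[float], b: List[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


if __name__ == "__main__":
    q = [0.1, 0.2, 0.3, 0.4]
    k = [0.5, -0.1, 0.2, 0.3]
    # same relative offset (4) at different absolute positions
    print(dot(apply_rope(q, 3), apply_rope(k, 7)))
    print(dot(apply_rope(q, 10), apply_rope(k, 14)))
```

Each pair `(x1, x2)` becomes `(x1*cos - x2*sin, x2*cos + x1*sin)`, a plain 2-D rotation, so vector norms are preserved and `q_m . k_n` reduces to a rotation by `(n - m) * theta_i`.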

diff --git a/paddlespeech/s2t/modules/embedding.py b/paddlespeech/s2t/modules/embedding.py

index f41a7b5d4..1e9f01018 100644

--- a/paddlespeech/s2t/modules/embedding.py

+++ b/paddlespeech/s2t/modules/embedding.py

@@ -85,18 +85,21 @@ class PositionalEncoding(nn.Layer, PositionalEncodingInterface):

reverse (bool, optional): Not used. Defaults to False.

"""

nn.Layer.__init__(self)

- self.d_model = d_model

+ self.d_model = paddle.to_tensor(d_model)

self.max_len = max_len

self.xscale = paddle.to_tensor(math.sqrt(self.d_model))

self.dropout = nn.Dropout(p=dropout_rate)

+ self.base = paddle.to_tensor(10000.0)

self.pe = paddle.zeros([1, self.max_len, self.d_model]) #[B=1,T,D]

position = paddle.arange(

0, self.max_len, dtype=paddle.float32).unsqueeze(1) #[T, 1]

+ # base^{-2(i-1)/d}, i \in (1,2,...,d/2)

div_term = paddle.exp(

- paddle.arange(0, self.d_model, 2, dtype=paddle.float32) *

- -(math.log(10000.0) / self.d_model))

+ -paddle.arange(0, self.d_model, 2, dtype=paddle.float32) *

+ (paddle.log(self.base) / self.d_model))

+ # [B,T,D]

self.pe[:, :, 0::2] = paddle.sin(position * div_term)

self.pe[:, :, 1::2] = paddle.cos(position * div_term)

@@ -161,6 +164,98 @@ class RelPositionalEncoding(PositionalEncoding):

assert offset + x.shape[

1] < self.max_len, "offset: {} + x.shape[1]: {} is larger than the max_len: {}".format(

offset, x.shape[1], self.max_len)

+

x = x * self.xscale

pos_emb = self.pe[:, offset:offset + x.shape[1]]

return self.dropout(x), self.dropout(pos_emb)

+

+

+# RotaryRelPositionalEncoding is the same as RelPositionalEncoding

+class ScaledRotaryRelPositionalEncoding(RelPositionalEncoding):

+ """Scaled Rotary Relative positional encoding module.

+ Position interpolation: https://arxiv.org/pdf/2306.15595v2.pdf

+ """

+

+ def __init__(self,

+ d_model: int,

+ dropout_rate: float,

+ max_len: int=5000,

+ scale=1):

+ """

+ Args:

+ d_model (int): Embedding dimension.

+ dropout_rate (float): Dropout rate.

+ max_len (int, optional): [Maximum input length.]. Defaults to 5000.

+ scale (int): Interpolate positions so that the maximum input length becomes `scale * max_len`.

+ """

+ super().__init__(d_model, dropout_rate, max_len, reverse=True)

+ self.pscale = paddle.to_tensor(scale)

+ self.max_len = max_len * scale

+

+ def sinusoidal_embeddings(self,

+ pos: paddle.Tensor,

+ dim: paddle.Tensor,

+ base=10000) -> paddle.Tensor:

+ """Compute the dim-dimensional sinusoidal encoding at positions pos."""

+ assert dim % 2 == 0

+ # (d/2,)

+ indices = paddle.arange(0, dim // 2, dtype=pos.dtype)

+ indices = paddle.pow(paddle.cast(base, pos.dtype), -2 * indices / dim)

+ # pos (1, T), indices (d/2,) -> (1, T, d/2)

+ embeddings = paddle.einsum('...,d->...d', pos, indices)

+ # (1, T, d/2, 2)

+ embeddings = paddle.stack(

+ [paddle.sin(embeddings), paddle.cos(embeddings)], axis=-1)

+ # (1, T, d)

+ embeddings = paddle.flatten(embeddings, start_axis=-2, stop_axis=-1)

+ return embeddings

+

+ def forward(self, x: paddle.Tensor,

+ offset: int=0) -> Tuple[paddle.Tensor, paddle.Tensor]:

+ """Compute positional encoding.

+ Args:

+ x (paddle.Tensor): Input tensor (batch, time, `*`).

+ Returns:

+ paddle.Tensor: Encoded tensor (batch, time, `*`).

+ paddle.Tensor: Positional embedding tensor (1, time, `*`).

+ """

+ x = x * self.xscale

+

+ B, T, D = x.shape

+ assert D == self.d_model

+

+ # position interpolation

+ start = 0

+ end = T * self.pscale

+ assert end <= self.max_len

+ position = paddle.arange(start, end, dtype=x.dtype).unsqueeze(0)

+ position *= 1.0 / self.pscale

+ pe = self.sinusoidal_embeddings(position, self.d_model, base=self.base)

+

+ pos_emb = pe[:, offset:offset + x.shape[1]]

+ return self.dropout(x), self.dropout(pos_emb)

+

+ def position_encoding(self, offset: int, size: int) -> paddle.Tensor:

+ """ For getting encoding in a streaming fashion

+ Attention!!!!!

+ in the non-streaming case, dropout is applied only once over the whole

+ utterance, but in a streaming scenario this function is called several

+ times with increasing input size, so dropout will

+ be applied several times.

+ Args:

+ offset (int): start offset

+ size (int): required size of position encoding

+ Returns:

+ paddle.Tensor: Corresponding position encoding, #[1, T, D].

+ """

+ # position interpolation

+ start = offset

+ end = (offset + size) * self.pscale

+ assert end <= self.max_len

+ position = paddle.arange(

+ start, end, dtype=paddle.get_default_dtype()).unsqueeze(0)

+ position *= 1.0 / self.pscale

+

+ pe = self.sinusoidal_embeddings(position, self.d_model, base=self.base)

+

+ return self.dropout(pe)
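Both `PositionalEncoding` (after this change) and `ScaledRotaryRelPositionalEncoding.sinusoidal_embeddings` use the frequencies theta_i = base^(-2i/d), since `exp(-2i * log(base) / d)` equals `base ** (-2i / d)`. The table puts sin at even and cos at odd feature indices, which is what stacking `[sin, cos]` on a new last axis and flattening produces. Position interpolation then divides position indices by `scale`, so `scale` times as many frames span the same angle range. A pure-Python sketch under those assumptions, where `interpolated_positions` follows the arithmetic of the `forward` method (`arange(0, T * scale) / scale`, sliced to `[offset:offset + T]`); the helper names are illustrative:

```python
import math
from typing import List


def sinusoidal_embeddings(positions: List[float], dim: int,
                          base: float = 10000.0) -> List[List[float]]:
    """One row per position: [sin(p*t_0), cos(p*t_0), sin(p*t_1), cos(p*t_1), ...]."""
    assert dim % 2 == 0
    # theta_i = base^(-2i/dim) == exp(-2i * log(base) / dim)
    thetas = [base ** (-2 * i / dim) for i in range(dim // 2)]
    rows = []
    for p in positions:
        row: List[float] = []
        for t in thetas:
            row += [math.sin(p * t), math.cos(p * t)]
        rows.append(row)
    return rows


def interpolated_positions(length: int, scale: int, offset: int = 0) -> List[float]:
    """arange(0, length * scale) / scale, then slice [offset:offset + length]."""
    scaled = [p / scale for p in range(length * scale)]
    return scaled[offset:offset + length]


if __name__ == "__main__":
    pos = interpolated_positions(length=8, scale=2)
    print(pos)  # 8 frames squeezed into the range [0, 4)
    print(len(sinusoidal_embeddings(pos, dim=4)[0]))
```

With `scale=1` the positions are the plain integers `0..T-1`, matching the un-scaled `RelPositionalEncoding` table.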

diff --git a/paddlespeech/s2t/modules/encoder.py b/paddlespeech/s2t/modules/encoder.py

index d90d69d77..27d7ffbd7 100644

--- a/paddlespeech/s2t/modules/encoder.py

+++ b/paddlespeech/s2t/modules/encoder.py

@@ -28,6 +28,7 @@ from paddlespeech.s2t.modules.align import LayerNorm

from paddlespeech.s2t.modules.align import Linear

from paddlespeech.s2t.modules.attention import MultiHeadedAttention

from paddlespeech.s2t.modules.attention import RelPositionMultiHeadedAttention

+from paddlespeech.s2t.modules.attention import RoPERelPositionMultiHeadedAttention

from paddlespeech.s2t.modules.conformer_convolution import ConvolutionModule

from paddlespeech.s2t.modules.embedding import NoPositionalEncoding

from paddlespeech.s2t.modules.embedding import PositionalEncoding

@@ -115,6 +116,8 @@ class BaseEncoder(nn.Layer):

pos_enc_class = PositionalEncoding

elif pos_enc_layer_type == "rel_pos":

pos_enc_class = RelPositionalEncoding

+ elif pos_enc_layer_type == "rope_pos":

+ pos_enc_class = RelPositionalEncoding

elif pos_enc_layer_type == "no_pos":

pos_enc_class = NoPositionalEncoding

else:

@@ -230,14 +233,14 @@ class BaseEncoder(nn.Layer):

xs = self.global_cmvn(xs)

# before embed, xs=(B, T, D1), pos_emb=(B=1, T, D)

- xs, pos_emb, _ = self.embed(xs, tmp_masks, offset=offset)

+ xs, _, _ = self.embed(xs, tmp_masks, offset=offset)

# after embed, xs=(B=1, chunk_size, hidden-dim)

elayers, _, cache_t1, _ = att_cache.shape

chunk_size = xs.shape[1]

attention_key_size = cache_t1 + chunk_size

- # only used when using `RelPositionMultiHeadedAttention`

+ # only used when using `RelPositionMultiHeadedAttention` and `RoPERelPositionMultiHeadedAttention`

pos_emb = self.embed.position_encoding(

offset=offset - cache_t1, size=attention_key_size)

@@ -474,21 +477,35 @@ class ConformerEncoder(BaseEncoder):

activation = get_activation(activation_type)

# self-attention module definition

- encoder_selfattn_layer = RelPositionMultiHeadedAttention

- encoder_selfattn_layer_args = (attention_heads, output_size,

- attention_dropout_rate)

+ encoder_dim = output_size

+ if pos_enc_layer_type == "abs_pos":

+ encoder_selfattn_layer = MultiHeadedAttention

+ encoder_selfattn_layer_args = (attention_heads, encoder_dim,

+ attention_dropout_rate)

+ elif pos_enc_layer_type == "rel_pos":

+ encoder_selfattn_layer = RelPositionMultiHeadedAttention

+ encoder_selfattn_layer_args = (attention_heads, encoder_dim,

+ attention_dropout_rate)

+ elif pos_enc_layer_type == "rope_pos":

+ encoder_selfattn_layer = RoPERelPositionMultiHeadedAttention

+ encoder_selfattn_layer_args = (attention_heads, encoder_dim,

+ attention_dropout_rate)

+ else:

+ raise ValueError(

+ f"pos_enc_layer_type {pos_enc_layer_type} not supported.")

+

# feed-forward module definition

positionwise_layer = PositionwiseFeedForward

- positionwise_layer_args = (output_size, linear_units, dropout_rate,

+ positionwise_layer_args = (encoder_dim, linear_units, dropout_rate,

activation)

# convolution module definition

convolution_layer = ConvolutionModule

- convolution_layer_args = (output_size, cnn_module_kernel, activation,

+ convolution_layer_args = (encoder_dim, cnn_module_kernel, activation,

cnn_module_norm, causal)

self.encoders = nn.LayerList([

ConformerEncoderLayer(

- size=output_size,

+ size=encoder_dim,

self_attn=encoder_selfattn_layer(*encoder_selfattn_layer_args),

feed_forward=positionwise_layer(*positionwise_layer_args),

feed_forward_macaron=positionwise_layer(

@@ -580,15 +597,23 @@ class SqueezeformerEncoder(nn.Layer):

activation = get_activation(activation_type)

# self-attention module definition

- if pos_enc_layer_type != "rel_pos":

+ if pos_enc_layer_type == "abs_pos":

encoder_selfattn_layer = MultiHeadedAttention

encoder_selfattn_layer_args = (attention_heads, output_size,

attention_dropout_rate)

- else:

+ elif pos_enc_layer_type == "rel_pos":

encoder_selfattn_layer = RelPositionMultiHeadedAttention

encoder_selfattn_layer_args = (attention_heads, encoder_dim,

attention_dropout_rate,

adaptive_scale, init_weights)

+ elif pos_enc_layer_type == "rope_pos":

+ encoder_selfattn_layer = RoPERelPositionMultiHeadedAttention

+ encoder_selfattn_layer_args = (attention_heads, encoder_dim,

+ attention_dropout_rate,

+ adaptive_scale, init_weights)

+ else:

+ raise ValueError(

+ f"pos_enc_layer_type {pos_enc_layer_type} not supported.")

# feed-forward module definition

positionwise_layer = PositionwiseFeedForward
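After this change `ConformerEncoder` (and `SqueezeformerEncoder`) choose the self-attention class from `pos_enc_layer_type` explicitly and raise `ValueError` for anything else, while `BaseEncoder` maps `rope_pos` to the existing `RelPositionalEncoding` table. The sketch below restates the Conformer mapping from this diff with class names as strings, so it runs without PaddleSpeech. The `abs_pos` label for `PositionalEncoding` in `BaseEncoder` is assumed, because that branch condition lies outside the hunk. As written, `no_pos` is accepted by `BaseEncoder` but rejected by the new `ConformerEncoder` branch.

```python
from typing import Tuple

# Class names as strings, taken from the encoder.py hunks.
POS_ENC_CLASS = {
    "abs_pos": "PositionalEncoding",  # assumed label: this branch condition is not shown
    "rel_pos": "RelPositionalEncoding",
    "rope_pos": "RelPositionalEncoding",  # RoPE reuses the relative sinusoidal table
    "no_pos": "NoPositionalEncoding",
}

CONFORMER_SELF_ATTN = {
    "abs_pos": "MultiHeadedAttention",
    "rel_pos": "RelPositionMultiHeadedAttention",
    "rope_pos": "RoPERelPositionMultiHeadedAttention",
}


def resolve_conformer(pos_enc_layer_type: str) -> Tuple[str, str]:
    """Return (positional encoding, self-attention) class names for a Conformer."""
    if pos_enc_layer_type not in CONFORMER_SELF_ATTN:
        raise ValueError(
            f"pos_enc_layer_type {pos_enc_layer_type} not supported.")
    return POS_ENC_CLASS[pos_enc_layer_type], CONFORMER_SELF_ATTN[pos_enc_layer_type]


if __name__ == "__main__":
    print(resolve_conformer("rope_pos"))
```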

diff --git a/paddlespeech/s2t/modules/encoder_layer.py b/paddlespeech/s2t/modules/encoder_layer.py

index ecba95e85..0499e742b 100644

--- a/paddlespeech/s2t/modules/encoder_layer.py

+++ b/paddlespeech/s2t/modules/encoder_layer.py

@@ -48,7 +48,7 @@ class TransformerEncoderLayer(nn.Layer):

Args:

size (int): Input dimension.

self_attn (nn.Layer): Self-attention module instance.

- `MultiHeadedAttention` or `RelPositionMultiHeadedAttention`

+ `MultiHeadedAttention`, `RelPositionMultiHeadedAttention` or `RoPERelPositionMultiHeadedAttention`

instance can be used as the argument.

feed_forward (nn.Layer): Feed-forward module instance.

`PositionwiseFeedForward`, instance can be used as the argument.

@@ -147,7 +147,7 @@ class ConformerEncoderLayer(nn.Layer):

Args:

size (int): Input dimension.

self_attn (nn.Layer): Self-attention module instance.

- `MultiHeadedAttention` or `RelPositionMultiHeadedAttention`

+ `MultiHeadedAttention`, `RelPositionMultiHeadedAttention` or `RoPERelPositionMultiHeadedAttention`

instance can be used as the argument.

feed_forward (nn.Layer): Feed-forward module instance.

`PositionwiseFeedForward` instance can be used as the argument.

@@ -298,7 +298,7 @@ class SqueezeformerEncoderLayer(nn.Layer):

Args:

size (int): Input dimension.

self_attn (paddle.nn.Layer): Self-attention module instance.

- `MultiHeadedAttention` or `RelPositionMultiHeadedAttention`

+ `MultiHeadedAttention`, `RelPositionMultiHeadedAttention` or `RoPERelPositionMultiHeadedAttention`

instance can be used as the argument.

feed_forward1 (paddle.nn.Layer): Feed-forward module instance.

`PositionwiseFeedForward` instance can be used as the argument.

diff --git a/paddlespeech/s2t/training/gradclip.py b/paddlespeech/s2t/training/gradclip.py

deleted file mode 100644

index 06587c749..000000000

--- a/paddlespeech/s2t/training/gradclip.py

+++ /dev/null

@@ -1,86 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-import paddle

-from paddle.fluid import core

-from paddle.fluid import layers

-from paddle.fluid.dygraph import base as imperative_base

-

-from paddlespeech.s2t.utils.log import Log

-

-__all__ = ["ClipGradByGlobalNormWithLog"]

-

-logger = Log(__name__).getlog()

-

-

-class ClipGradByGlobalNormWithLog(paddle.nn.ClipGradByGlobalNorm):

- def __init__(self, clip_norm):

- super().__init__(clip_norm)

-

- def __repr__(self):

- return f"{self.__class__.__name__}(global_clip_norm={self.clip_norm})"

-

- @imperative_base.no_grad

- def _dygraph_clip(self, params_grads):

- params_and_grads = []

- sum_square_list = []

- for i, (p, g) in enumerate(params_grads):

- if g is None:

- continue

- if getattr(p, 'need_clip', True) is False:

- continue

- merge_grad = g

- if g.type == core.VarDesc.VarType.SELECTED_ROWS:

- merge_grad = layers.merge_selected_rows(g)

- merge_grad = layers.get_tensor_from_selected_rows(merge_grad)

- square = paddle.square(merge_grad)

- sum_square = paddle.sum(square)

- sum_square_list.append(sum_square)

-

- # debug log, not dump all since slow down train process

- if i < 10:

- logger.debug(

- f"Grad Before Clip: {p.name}: {float(sum_square.sqrt()) }")

-

- # all parameters have been filterd out

- if len(sum_square_list) == 0:

- return params_grads

-

- global_norm_var = paddle.concat(sum_square_list)

- global_norm_var = paddle.sum(global_norm_var)

- global_norm_var = paddle.sqrt(global_norm_var)

-

- # debug log

- logger.debug(f"Grad Global Norm: {float(global_norm_var)}!!!!")

-

- max_global_norm = paddle.full(

- shape=[1], dtype=global_norm_var.dtype, fill_value=self.clip_norm)

- clip_var = paddle.divide(

- x=max_global_norm,

- y=paddle.maximum(x=global_norm_var, y=max_global_norm))

- for i, (p, g) in enumerate(params_grads):

- if g is None:

- continue

- if getattr(p, 'need_clip', True) is False:

- params_and_grads.append((p, g))

- continue

- new_grad = paddle.multiply(x=g, y=clip_var)

- params_and_grads.append((p, new_grad))

-

- # debug log, not dump all since slow down train process

- if i < 10:

- logger.debug(

- f"Grad After Clip: {p.name}: {float(new_grad.square().sum().sqrt())}"

- )

-

- return params_and_grads
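For reference, the deleted `ClipGradByGlobalNormWithLog._dygraph_clip` performs global-norm clipping, and `paddle.nn.ClipGradByGlobalNorm` is used for the same purpose after this change, without the debug logging. The plain-Python sketch below mirrors the deleted method's logic. It is a minimal illustration under stated assumptions, not Paddle's internal code:

- Gradients are flat lists of floats.
- Each parameter is a hypothetical `(name, need_clip)` tuple.
- The `SELECTED_ROWS` merge step is omitted.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical stand-ins: a parameter is (name, need_clip); a gradient is a
# flat list of floats, or None when the parameter received no gradient.
Param = Tuple[str, bool]
Grad = Optional[List[float]]


def clip_by_global_norm(params_grads: Sequence[Tuple[Param, Grad]],
                        clip_norm: float) -> List[Tuple[Param, Grad]]:
    """Scale clippable grads so their joint L2 norm is at most clip_norm (> 0)."""
    # Pass 1: squared L2 norm of every gradient that takes part in clipping.
    sum_squares = [sum(v * v for v in g) for (p, g) in params_grads
                   if g is not None and p[1]]
    if not sum_squares:
        # All parameters were filtered out: return the input unchanged.
        return list(params_grads)
    global_norm = math.sqrt(sum(sum_squares))
    # scale == 1.0 when global_norm <= clip_norm, else clip_norm / global_norm.
    scale = clip_norm / max(global_norm, clip_norm)
    # Pass 2: rescale clippable grads, pass need_clip=False grads through
    # untouched, and drop entries whose gradient is None.
    out: List[Tuple[Param, Grad]] = []
    for p, g in params_grads:
        if g is None:
            continue
        if not p[1]:
            out.append((p, g))
            continue
        out.append((p, [v * scale for v in g]))
    return out
```

The single shared `scale` preserves the direction of the full gradient vector. Per-tensor clipping would not, because it rescales each tensor independently.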

diff --git a/paddlespeech/s2t/training/optimizer/__init__.py b/paddlespeech/s2t/training/optimizer/__init__.py

index aafdc5b6a..90281e1ed 100644

--- a/paddlespeech/s2t/training/optimizer/__init__.py

+++ b/paddlespeech/s2t/training/optimizer/__init__.py

@@ -19,7 +19,7 @@ from typing import Text

import paddle

from paddle.optimizer import Optimizer

from paddle.regularizer import L2Decay

-from paddlespeech.s2t.training.gradclip import ClipGradByGlobalNormWithLog

+

from paddlespeech.s2t.utils.dynamic_import import dynamic_import

from paddlespeech.s2t.utils.dynamic_import import instance_class

from paddlespeech.s2t.utils.log import Log

@@ -100,10 +100,9 @@ class OptimizerFactory():

assert "parameters" in args, "parameters not in args."

assert "learning_rate" in args, "learning_rate not in args."

- grad_clip = ClipGradByGlobalNormWithLog(

+ grad_clip = paddle.nn.ClipGradByGlobalNorm(

args['grad_clip']) if "grad_clip" in args else None

- weight_decay = L2Decay(

- args['weight_decay']) if "weight_decay" in args else None

+ weight_decay = args.get("weight_decay", None)

if weight_decay:

logger.info(f'')

if grad_clip:
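After this change, `weight_decay` reaches the optimizer as a raw float rather than an `L2Decay` instance. As we understand Paddle's optimizer documentation, a float there is treated as the L2 regularization coefficient for optimizers such as `Momentum` or `Adam`, whereas `AdamW` treats it as a decoupled decay rate. The sketch below shows what an L2 coefficient does to one plain SGD step. It assumes vanilla SGD, and `sgd_step_l2` is a hypothetical helper, not a PaddleSpeech or Paddle API.

```python
from typing import List


def sgd_step_l2(params: List[float], grads: List[float], lr: float,
                weight_decay: float = 0.0) -> List[float]:
    """One SGD step with L2 regularization (hypothetical helper).

    L2 regularization adds 0.5 * weight_decay * ||w||^2 to the loss, which adds
    weight_decay * w to each gradient before the update w <- w - lr * g.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(params, grads)]


# With a zero gradient, the weight still shrinks toward 0 by lr * weight_decay * w.
print(sgd_step_l2([1.0], [0.0], lr=0.1, weight_decay=0.5))
```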

diff --git a/paddlespeech/s2t/training/optimizer/adadelta.py b/paddlespeech/s2t/training/optimizer/adadelta.py

index 900b697c5..7c3950a90 100644

--- a/paddlespeech/s2t/training/optimizer/adadelta.py

+++ b/paddlespeech/s2t/training/optimizer/adadelta.py

@@ -12,7 +12,7 @@

# See the License for the specific language governing permissions and

# limitations under the License.

import paddle

-from paddle.fluid import framework

+from paddle import framework

from paddle.optimizer import Optimizer

__all__ = []

diff --git a/paddlespeech/server/engine/tts/online/onnx/tts_engine.py b/paddlespeech/server/engine/tts/online/onnx/tts_engine.py

index 0995a55da..9dd31a08b 100644

--- a/paddlespeech/server/engine/tts/online/onnx/tts_engine.py

+++ b/paddlespeech/server/engine/tts/online/onnx/tts_engine.py

@@ -28,7 +28,7 @@ from paddlespeech.server.utils.audio_process import float2pcm

from paddlespeech.server.utils.onnx_infer import get_sess

from paddlespeech.server.utils.util import denorm

from paddlespeech.server.utils.util import get_chunks

-from paddlespeech.t2s.frontend import English

+from paddlespeech.t2s.frontend.en_frontend import English

from paddlespeech.t2s.frontend.zh_frontend import Frontend

__all__ = ['TTSEngine', 'PaddleTTSConnectionHandler']

diff --git a/paddlespeech/server/engine/tts/online/python/tts_engine.py b/paddlespeech/server/engine/tts/online/python/tts_engine.py

index a46b84bd9..0cfb20354 100644

--- a/paddlespeech/server/engine/tts/online/python/tts_engine.py

+++ b/paddlespeech/server/engine/tts/online/python/tts_engine.py

@@ -29,7 +29,7 @@ from paddlespeech.server.engine.base_engine import BaseEngine