+------------------------------------------------------------------------------------

**PaddleSpeech** is an open-source toolkit on [PaddlePaddle](https://github.com/PaddlePaddle/Paddle) platform for a variety of critical tasks in speech and audio, with the state-of-art and influential models.

@@ -142,53 +143,35 @@ For more synthesized audios, please refer to [PaddleSpeech Text-to-Speech sample

-### ⭐ Examples

-- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech TTS to generate virtual human voice.**

-

-

-

-- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

-

-- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

-

-

-

-

-

-### 🔥 Hot Activities

-

-- 2021.12.21~12.24

-

- 4 Days Live Courses: Depth interpretation of PaddleSpeech!

-

- **Courses videos and related materials: https://aistudio.baidu.com/aistudio/education/group/info/25130**

### Features

Via the easy-to-use, efficient, flexible and scalable implementation, our vision is to empower both industrial application and academic research, including training, inference & testing modules, and deployment process. To be more specific, this toolkit features at:

-- 📦 **Ease of Use**: low barriers to install, and [CLI](#quick-start) is available to quick-start your journey.

+- 📦 **Ease of Use**: low barriers to install, [CLI](#quick-start), [Server](#quick-start-server), and [Streaming Server](#quick-start-streaming-server) is available to quick-start your journey.

- 🏆 **Align to the State-of-the-Art**: we provide high-speed and ultra-lightweight models, and also cutting-edge technology.

+- 🏆 **Streaming ASR and TTS System**: we provide production ready streaming asr and streaming tts system.

- 💯 **Rule-based Chinese frontend**: our frontend contains Text Normalization and Grapheme-to-Phoneme (G2P, including Polyphone and Tone Sandhi). Moreover, we use self-defined linguistic rules to adapt Chinese context.

-- **Varieties of Functions that Vitalize both Industrial and Academia**:

- - 🛎️ *Implementation of critical audio tasks*: this toolkit contains audio functions like Audio Classification, Speech Translation, Automatic Speech Recognition, Text-to-Speech Synthesis, etc.

+- 📦 **Varieties of Functions that Vitalize both Industrial and Academia**:

+ - 🛎️ *Implementation of critical audio tasks*: this toolkit contains audio functions like Automatic Speech Recognition, Text-to-Speech Synthesis, Speaker Verfication, KeyWord Spotting, Audio Classification, and Speech Translation, etc.

- 🔬 *Integration of mainstream models and datasets*: the toolkit implements modules that participate in the whole pipeline of the speech tasks, and uses mainstream datasets like LibriSpeech, LJSpeech, AIShell, CSMSC, etc. See also [model list](#model-list) for more details.

- 🧩 *Cascaded models application*: as an extension of the typical traditional audio tasks, we combine the workflows of the aforementioned tasks with other fields like Natural language processing (NLP) and Computer Vision (CV).

### Recent Update

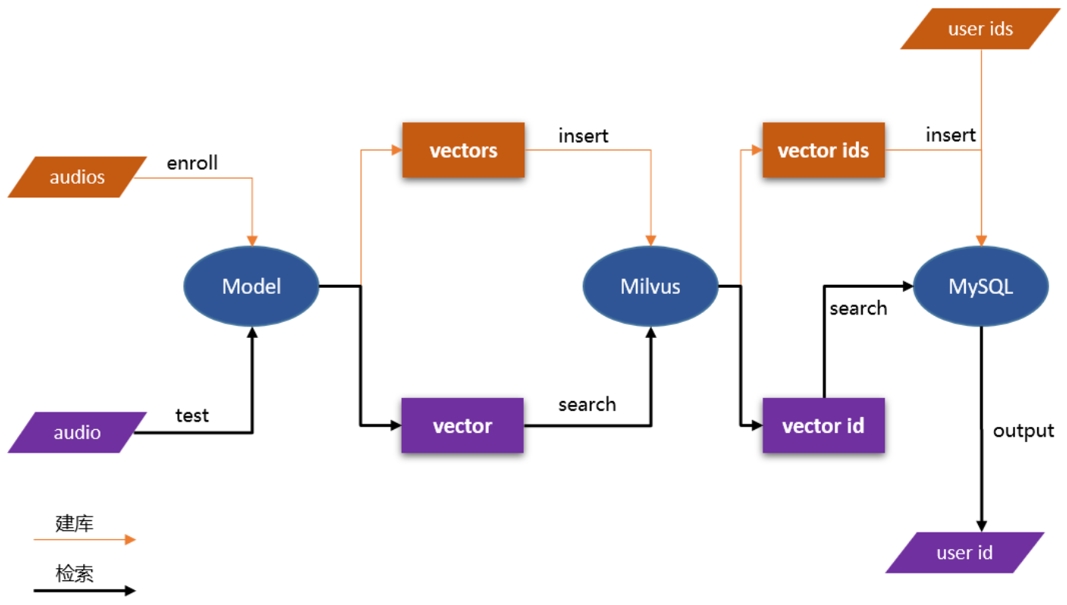

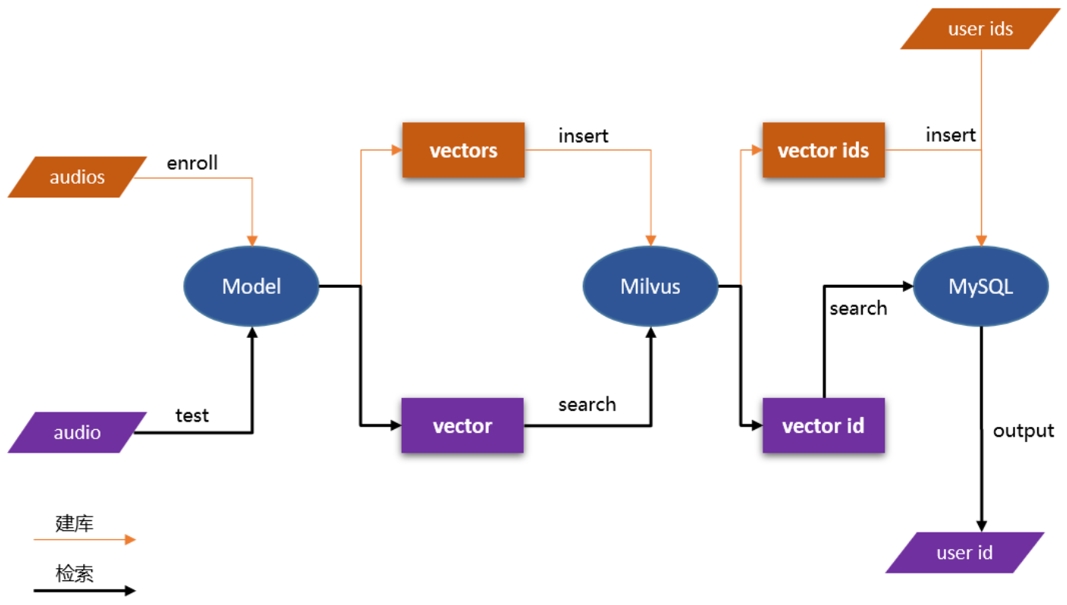

+- 👑 2022.05.13: Release [PP-ASR](./docs/source/asr/PPASR.md)、[PP-TTS](./docs/source/tts/PPTTS.md)、[PP-VPR](docs/source/vpr/PPVPR.md)

+- 👏🏻 2022.05.06: `Streaming ASR` with `Punctuation Restoration` and `Token Timestamp`.

+- 👏🏻 2022.05.06: `Server` is available for `Speaker Verification`, and `Punctuation Restoration`.

+- 👏🏻 2022.04.28: `Streaming Server` is available for `Automatic Speech Recognition` and `Text-to-Speech`.

+- 👏🏻 2022.03.28: `Server` is available for `Audio Classification`, `Automatic Speech Recognition` and `Text-to-Speech`.

+- 👏🏻 2022.03.28: `CLI` is available for `Speaker Verification`.

+- 🤗 2021.12.14: [ASR](https://huggingface.co/spaces/KPatrick/PaddleSpeechASR) and [TTS](https://huggingface.co/spaces/KPatrick/PaddleSpeechTTS) Demos on Hugging Face Spaces are available!

+- 👏🏻 2021.12.10: `CLI` is available for `Audio Classification`, `Automatic Speech Recognition`, `Speech Translation (English to Chinese)` and `Text-to-Speech`.

-

-- 👏🏻 2022.03.28: PaddleSpeech Server is available for Audio Classification, Automatic Speech Recognition and Text-to-Speech.

-- 👏🏻 2022.03.28: PaddleSpeech CLI is available for Speaker Verification.

-- 🤗 2021.12.14: Our PaddleSpeech [ASR](https://huggingface.co/spaces/KPatrick/PaddleSpeechASR) and [TTS](https://huggingface.co/spaces/KPatrick/PaddleSpeechTTS) Demos on Hugging Face Spaces are available!

-- 👏🏻 2021.12.10: PaddleSpeech CLI is available for Audio Classification, Automatic Speech Recognition, Speech Translation (English to Chinese) and Text-to-Speech.

### Community

-- Scan the QR code below with your Wechat (reply【语音】after your friend's application is approved), you can access to official technical exchange group. Look forward to your participation.

+- Scan the QR code below with your Wechat, you can access to official technical exchange group and get the bonus ( more than 20GB learning materials, such as papers, codes and videos ) and the live link of the lessons. Look forward to your participation.

-

+

## Installation

@@ -196,6 +179,7 @@ Via the easy-to-use, efficient, flexible and scalable implementation, our vision

We strongly recommend our users to install PaddleSpeech in **Linux** with *python>=3.7*.

Up to now, **Linux** supports CLI for the all our tasks, **Mac OSX** and **Windows** only supports PaddleSpeech CLI for Audio Classification, Speech-to-Text and Text-to-Speech. To install `PaddleSpeech`, please see [installation](./docs/source/install.md).

+

## Quick Start

@@ -238,7 +222,7 @@ paddlespeech tts --input "你好,欢迎使用飞桨深度学习框架!" --ou

**Batch Process**

```

echo -e "1 欢迎光临。\n2 谢谢惠顾。" | paddlespeech tts

-```

+```

**Shell Pipeline**

- ASR + Punctuation Restoration

@@ -257,16 +241,19 @@ If you want to try more functions like training and tuning, please have a look a

Developers can have a try of our speech server with [PaddleSpeech Server Command Line](./paddlespeech/server/README.md).

**Start server**

+

```shell

paddlespeech_server start --config_file ./paddlespeech/server/conf/application.yaml

```

**Access Speech Recognition Services**

+

```shell

paddlespeech_client asr --server_ip 127.0.0.1 --port 8090 --input input_16k.wav

```

**Access Text to Speech Services**

+

```shell

paddlespeech_client tts --server_ip 127.0.0.1 --port 8090 --input "您好,欢迎使用百度飞桨语音合成服务。" --output output.wav

```

@@ -280,6 +267,37 @@ paddlespeech_client cls --server_ip 127.0.0.1 --port 8090 --input input.wav

For more information about server command lines, please see: [speech server demos](https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/demos/speech_server)

+

+## Quick Start Streaming Server

+

+Developers can have a try of [streaming asr](./demos/streaming_asr_server/README.md) and [streaming tts](./demos/streaming_tts_server/README.md) server.

+

+**Start Streaming Speech Recognition Server**

+

+```

+paddlespeech_server start --config_file ./demos/streaming_asr_server/conf/application.yaml

+```

+

+**Access Streaming Speech Recognition Services**

+

+```

+paddlespeech_client asr_online --server_ip 127.0.0.1 --port 8090 --input input_16k.wav

+```

+

+**Start Streaming Text to Speech Server**

+

+```

+paddlespeech_server start --config_file ./demos/streaming_tts_server/conf/tts_online_application.yaml

+```

+

+**Access Streaming Text to Speech Services**

+

+```

+paddlespeech_client tts_online --server_ip 127.0.0.1 --port 8092 --protocol http --input "您好,欢迎使用百度飞桨语音合成服务。" --output output.wav

+```

+

+For more information please see: [streaming asr](./demos/streaming_asr_server/README.md) and [streaming tts](./demos/streaming_tts_server/README.md)

+

## Model List

@@ -296,7 +314,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Speech-to-Text Module Type

Dataset

Model Type

-

Link

+

Example

@@ -371,7 +389,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Text-to-Speech Module Type

Model Type

Dataset

-

Link

+

Example

@@ -489,7 +507,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task

Dataset

Model Type

-

Link

+

Example

@@ -514,7 +532,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task

Dataset

Model Type

-

Link

+

Example

@@ -539,7 +557,7 @@ PaddleSpeech supports a series of most popular models. They are summarized in [r

Task

Dataset

Model Type

-

Link

+

Example

@@ -589,6 +607,21 @@ Normally, [Speech SoTA](https://paperswithcode.com/area/speech), [Audio SoTA](ht

The Text-to-Speech module is originally called [Parakeet](https://github.com/PaddlePaddle/Parakeet), and now merged with this repository. If you are interested in academic research about this task, please see [TTS research overview](https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/docs/source/tts#overview). Also, [this document](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/tts/models_introduction.md) is a good guideline for the pipeline components.

+

+## ⭐ Examples

+- **[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo): Use PaddleSpeech TTS to generate virtual human voice.**

+

+

+

+- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

+

+- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

+

+

+

+

+

+

## Citation

To cite PaddleSpeech for research, please use the following format.

@@ -655,7 +688,6 @@ You are warmly welcome to submit questions in [discussions](https://github.com/P

## Acknowledgement

-

- Many thanks to [yeyupiaoling](https://github.com/yeyupiaoling)/[PPASR](https://github.com/yeyupiaoling/PPASR)/[PaddlePaddle-DeepSpeech](https://github.com/yeyupiaoling/PaddlePaddle-DeepSpeech)/[VoiceprintRecognition-PaddlePaddle](https://github.com/yeyupiaoling/VoiceprintRecognition-PaddlePaddle)/[AudioClassification-PaddlePaddle](https://github.com/yeyupiaoling/AudioClassification-PaddlePaddle) for years of attention, constructive advice and great help.

- Many thanks to [mymagicpower](https://github.com/mymagicpower) for the Java implementation of ASR upon [short](https://github.com/mymagicpower/AIAS/tree/main/3_audio_sdks/asr_sdk) and [long](https://github.com/mymagicpower/AIAS/tree/main/3_audio_sdks/asr_long_audio_sdk) audio files.

- Many thanks to [JiehangXie](https://github.com/JiehangXie)/[PaddleBoBo](https://github.com/JiehangXie/PaddleBoBo) for developing Virtual Uploader(VUP)/Virtual YouTuber(VTuber) with PaddleSpeech TTS function.

diff --git a/README_cn.md b/README_cn.md

index 228d5d783..c751b061d 100644

--- a/README_cn.md

+++ b/README_cn.md

@@ -2,34 +2,36 @@

@@ -658,6 +691,7 @@ PaddleSpeech 的 **语音合成** 主要包含三个模块:文本前端、声

- 非常感谢 [jerryuhoo](https://github.com/jerryuhoo)/[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk) 基于 PaddleSpeech 的 TTS GUI 界面和基于 ASR 制作数据集的相关代码。

+

此外,PaddleSpeech 依赖于许多开源存储库。有关更多信息,请参阅 [references](./docs/source/reference.md)。

## License

diff --git a/audio/.gitignore b/audio/.gitignore

deleted file mode 100644

index 1c930053d..000000000

--- a/audio/.gitignore

+++ /dev/null

@@ -1,2 +0,0 @@

-.eggs

-*.wav

diff --git a/audio/CHANGELOG.md b/audio/CHANGELOG.md

deleted file mode 100644

index 925d77696..000000000

--- a/audio/CHANGELOG.md

+++ /dev/null

@@ -1,9 +0,0 @@

-# Changelog

-

-Date: 2022-3-15, Author: Xiaojie Chen.

- - kaldi and librosa mfcc, fbank, spectrogram.

- - unit test and benchmark.

-

-Date: 2022-2-25, Author: Hui Zhang.

- - Refactor architecture.

- - dtw distance and mcd style dtw.

diff --git a/audio/README.md b/audio/README.md

deleted file mode 100644

index 697c01739..000000000

--- a/audio/README.md

+++ /dev/null

@@ -1,7 +0,0 @@

-# PaddleAudio

-

-PaddleAudio is an audio library for PaddlePaddle.

-

-## Install

-

-`pip install .`

diff --git a/audio/docs/Makefile b/audio/docs/Makefile

deleted file mode 100644

index 69fe55ecf..000000000

--- a/audio/docs/Makefile

+++ /dev/null

@@ -1,19 +0,0 @@

-# Minimal makefile for Sphinx documentation

-#

-

-# You can set these variables from the command line.

-SPHINXOPTS =

-SPHINXBUILD = sphinx-build

-SOURCEDIR = source

-BUILDDIR = build

-

-# Put it first so that "make" without argument is like "make help".

-help:

- @$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

-

-.PHONY: help Makefile

-

-# Catch-all target: route all unknown targets to Sphinx using the new

-# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

-%: Makefile

- @$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

\ No newline at end of file

diff --git a/audio/docs/README.md b/audio/docs/README.md

deleted file mode 100644

index 20626f52b..000000000

--- a/audio/docs/README.md

+++ /dev/null

@@ -1,24 +0,0 @@

-# Build docs for PaddleAudio

-

-Execute the following steps in **current directory**.

-

-## 1. Install

-

-`pip install Sphinx sphinx_rtd_theme`

-

-

-## 2. Generate API docs

-

-Generate API docs from doc string.

-

-`sphinx-apidoc -fMeT -o source ../paddleaudio ../paddleaudio/utils --templatedir source/_templates`

-

-

-## 3. Build

-

-`sphinx-build source _html`

-

-

-## 4. Preview

-

-Open `_html/index.html` for page preview.

diff --git a/audio/docs/images/paddle.png b/audio/docs/images/paddle.png

deleted file mode 100644

index bc1135abf..000000000

Binary files a/audio/docs/images/paddle.png and /dev/null differ

diff --git a/audio/docs/make.bat b/audio/docs/make.bat

deleted file mode 100644

index 543c6b13b..000000000

--- a/audio/docs/make.bat

+++ /dev/null

@@ -1,35 +0,0 @@

-@ECHO OFF

-

-pushd %~dp0

-

-REM Command file for Sphinx documentation

-

-if "%SPHINXBUILD%" == "" (

- set SPHINXBUILD=sphinx-build

-)

-set SOURCEDIR=source

-set BUILDDIR=build

-

-if "%1" == "" goto help

-

-%SPHINXBUILD% >NUL 2>NUL

-if errorlevel 9009 (

- echo.

- echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

- echo.installed, then set the SPHINXBUILD environment variable to point

- echo.to the full path of the 'sphinx-build' executable. Alternatively you

- echo.may add the Sphinx directory to PATH.

- echo.

- echo.If you don't have Sphinx installed, grab it from

- echo.http://sphinx-doc.org/

- exit /b 1

-)

-

-%SPHINXBUILD% -M %1 %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

-goto end

-

-:help

-%SPHINXBUILD% -M help %SOURCEDIR% %BUILDDIR% %SPHINXOPTS%

-

-:end

-popd

diff --git a/audio/paddleaudio/metric/dtw.py b/audio/paddleaudio/metric/dtw.py

deleted file mode 100644

index 662e4506d..000000000

--- a/audio/paddleaudio/metric/dtw.py

+++ /dev/null

@@ -1,44 +0,0 @@

-# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-import numpy as np

-from dtaidistance import dtw_ndim

-

-__all__ = [

- 'dtw_distance',

-]

-

-

-def dtw_distance(xs: np.ndarray, ys: np.ndarray) -> float:

- """Dynamic Time Warping.

- This function keeps a compact matrix, not the full warping paths matrix.

- Uses dynamic programming to compute:

-

- Examples:

- .. code-block:: python

-

- wps[i, j] = (s1[i]-s2[j])**2 + min(

- wps[i-1, j ] + penalty, // vertical / insertion / expansion

- wps[i , j-1] + penalty, // horizontal / deletion / compression

- wps[i-1, j-1]) // diagonal / match

-

- dtw = sqrt(wps[-1, -1])

-

- Args:

- xs (np.ndarray): ref sequence, [T,D]

- ys (np.ndarray): hyp sequence, [T,D]

-

- Returns:

- float: dtw distance

- """

- return dtw_ndim.distance(xs, ys)

diff --git a/audio/paddleaudio/utils/env.py b/audio/paddleaudio/utils/env.py

deleted file mode 100644

index a2d14b89e..000000000

--- a/audio/paddleaudio/utils/env.py

+++ /dev/null

@@ -1,60 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License"

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-'''

-This module is used to store environmental variables in PaddleAudio.

-PPAUDIO_HOME --> the root directory for storing PaddleAudio related data. Default to ~/.paddleaudio. Users can change the

-├ default value through the PPAUDIO_HOME environment variable.

-├─ MODEL_HOME --> Store model files.

-└─ DATA_HOME --> Store automatically downloaded datasets.

-'''

-import os

-

-__all__ = [

- 'USER_HOME',

- 'PPAUDIO_HOME',

- 'MODEL_HOME',

- 'DATA_HOME',

-]

-

-

-def _get_user_home():

- return os.path.expanduser('~')

-

-

-def _get_ppaudio_home():

- if 'PPAUDIO_HOME' in os.environ:

- home_path = os.environ['PPAUDIO_HOME']

- if os.path.exists(home_path):

- if os.path.isdir(home_path):

- return home_path

- else:

- raise RuntimeError(

- 'The environment variable PPAUDIO_HOME {} is not a directory.'.

- format(home_path))

- else:

- return home_path

- return os.path.join(_get_user_home(), '.paddleaudio')

-

-

-def _get_sub_home(directory):

- home = os.path.join(_get_ppaudio_home(), directory)

- if not os.path.exists(home):

- os.makedirs(home)

- return home

-

-

-USER_HOME = _get_user_home()

-PPAUDIO_HOME = _get_ppaudio_home()

-MODEL_HOME = _get_sub_home('models')

-DATA_HOME = _get_sub_home('datasets')

diff --git a/audio/setup.py b/audio/setup.py

deleted file mode 100644

index bf6c4d163..000000000

--- a/audio/setup.py

+++ /dev/null

@@ -1,150 +0,0 @@

-# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

-#

-# Licensed under the Apache License, Version 2.0 (the "License");

-# you may not use this file except in compliance with the License.

-# You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-import glob

-import os

-import subprocess

-

-import pybind11

-import setuptools

-from setuptools import Extension

-from setuptools.command.build_ext import build_ext

-from setuptools.command.test import test

-

-# set the version here

-VERSION = '1.0.0a'

-

-

-# Inspired by the example at https://pytest.org/latest/goodpractises.html

-class TestCommand(test):

- def finalize_options(self):

- test.finalize_options(self)

- self.test_args = []

- self.test_suite = True

-

- def run(self):

- self.run_benchmark()

- super(TestCommand, self).run()

-

- def run_tests(self):

- # Run nose ensuring that argv simulates running nosetests directly

- import nose

- nose.run_exit(argv=['nosetests', '-w', 'tests'])

-

- def run_benchmark(self):

- for benchmark_item in glob.glob('tests/benchmark/*py'):

- os.system(f'pytest {benchmark_item}')

-

-

-class ExtBuildCommand(build_ext):

- def run(self):

- try:

- subprocess.check_output(["cmake", "--version"])

- except OSError:

- raise RuntimeError("CMake is not available.") from None

- super().run()

-

- def build_extension(self, ext):

- extdir = os.path.abspath(

- os.path.dirname(self.get_ext_fullpath(ext.name)))

- cfg = "Debug" if self.debug else "Release"

- cmake_args = [

- f"-DCMAKE_BUILD_TYPE={cfg}",

- f"-Dpybind11_DIR={pybind11.get_cmake_dir()}",

- f"-DCMAKE_INSTALL_PREFIX={extdir}",

- "-DCMAKE_VERBOSE_MAKEFILE=ON",

- "-DBUILD_SOX:BOOL=ON",

- ]

- build_args = ["--target", "install"]

-

- # Set CMAKE_BUILD_PARALLEL_LEVEL to control the parallel build level

- # across all generators.

- if "CMAKE_BUILD_PARALLEL_LEVEL" not in os.environ:

- if hasattr(self, "parallel") and self.parallel:

- build_args += ["-j{}".format(self.parallel)]

-

- if not os.path.exists(self.build_temp):

- os.makedirs(self.build_temp)

-

- subprocess.check_call(

- ["cmake", os.path.abspath(os.path.dirname(__file__))] + cmake_args,

- cwd=self.build_temp)

- subprocess.check_call(

- ["cmake", "--build", "."] + build_args, cwd=self.build_temp)

-

- def get_ext_filename(self, fullname):

- ext_filename = super().get_ext_filename(fullname)

- ext_filename_parts = ext_filename.split(".")

- without_abi = ext_filename_parts[:-2] + ext_filename_parts[-1:]

- ext_filename = ".".join(without_abi)

- return ext_filename

-

-

-def write_version_py(filename='paddleaudio/__init__.py'):

- with open(filename, "a") as f:

- f.write(f"__version__ = '{VERSION}'")

-

-

-def remove_version_py(filename='paddleaudio/__init__.py'):

- with open(filename, "r") as f:

- lines = f.readlines()

- with open(filename, "w") as f:

- for line in lines:

- if "__version__" not in line:

- f.write(line)

-

-

-def get_ext_modules():

- modules = [

- Extension(name="paddleaudio._paddleaudio", sources=[]),

- ]

-

- return modules

-

-

-remove_version_py()

-write_version_py()

-

-setuptools.setup(

- name="paddleaudio",

- version=VERSION,

- author="",

- author_email="",

- description="PaddleAudio, in development",

- long_description="",

- long_description_content_type="text/markdown",

- url="",

- packages=setuptools.find_packages(include=['paddleaudio*']),

- classifiers=[

- "Programming Language :: Python :: 3",

- "License :: OSI Approved :: MIT License",

- "Operating System :: OS Independent",

- ],

- python_requires='>=3.6',

- install_requires=[

- 'numpy >= 1.15.0', 'scipy >= 1.0.0', 'resampy >= 0.2.2',

- 'soundfile >= 0.9.0', 'colorlog', 'dtaidistance == 2.3.1', 'pathos'

- ],

- extras_require={

- 'test': [

- 'nose', 'librosa==0.8.1', 'soundfile==0.10.3.post1',

- 'torchaudio==0.10.2', 'pytest-benchmark'

- ],

- },

- ext_modules=get_ext_modules(),

- cmdclass={

- "build_ext": ExtBuildCommand,

- 'test': TestCommand,

- }, )

-

-remove_version_py()

diff --git a/audio/tests/.gitkeep b/audio/tests/.gitkeep

deleted file mode 100644

index e69de29bb..000000000

diff --git a/demos/README.md b/demos/README.md

index 8abd67249..2a306df6b 100644

--- a/demos/README.md

+++ b/demos/README.md

@@ -2,14 +2,14 @@

([简体中文](./README_cn.md)|English)

-The directory containes many speech applications in multi scenarios.

+This directory contains many speech applications in multiple scenarios.

* audio searching - mass audio similarity retrieval

* audio tagging - multi-label tagging of an audio file

-* automatic_video_subtitiles - generate subtitles from a video

+* automatic_video_subtitles - generate subtitles from a video

* metaverse - 2D AR with TTS

* punctuation_restoration - restore punctuation from raw text

-* speech recogintion - recognize text of an audio file

+* speech recognition - recognize text of an audio file

* speech server - Server for Speech Task, e.g. ASR,TTS,CLS

* streaming asr server - receive audio stream from websocket, and recognize to transcript.

* speech translation - end to end speech translation

diff --git a/demos/audio_content_search/README.md b/demos/audio_content_search/README.md

new file mode 100644

index 000000000..4428bf389

--- /dev/null

+++ b/demos/audio_content_search/README.md

@@ -0,0 +1,79 @@

+([简体中文](./README_cn.md)|English)

+# ACS (Audio Content Search)

+

+## Introduction

+ACS, or Audio Content Search, refers to the problem of getting the key word time stamp from automatically transcribe spoken language (speech-to-text).

+

+This demo is an implementation of obtaining the keyword timestamp in the text from a given audio file. It can be done by a single command or a few lines in python using `PaddleSpeech`.

+Now, the search word in demo is:

+```

+我

+康

+```

+## Usage

+### 1. Installation

+see [installation](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/install.md).

+

+You can choose one way from meduim and hard to install paddlespeech.

+

+The dependency refers to the requirements.txt, and install the dependency as follows:

+

+```

+pip install -r requriement.txt

+```

+

+### 2. Prepare Input File

+The input of this demo should be a WAV file(`.wav`), and the sample rate must be the same as the model.

+

+Here are sample files for this demo that can be downloaded:

+```bash

+wget -c https://paddlespeech.bj.bcebos.com/PaddleAudio/zh.wav

+```

+

+### 3. Usage

+- Command Line(Recommended)

+ ```bash

+ # Chinese

+ paddlespeech_client acs --server_ip 127.0.0.1 --port 8090 --input ./zh.wav

+ ```

+

+ Usage:

+ ```bash

+ paddlespeech asr --help

+ ```

+ Arguments:

+ - `input`(required): Audio file to recognize.

+ - `server_ip`: the server ip.

+ - `port`: the server port.

+ - `lang`: the language type of the model. Default: `zh`.

+ - `sample_rate`: Sample rate of the model. Default: `16000`.

+ - `audio_format`: The audio format.

+

+ Output:

+ ```bash

+ [2022-05-15 15:00:58,185] [ INFO] - acs http client start

+ [2022-05-15 15:00:58,185] [ INFO] - endpoint: http://127.0.0.1:8490/paddlespeech/asr/search

+ [2022-05-15 15:01:03,220] [ INFO] - acs http client finished

+ [2022-05-15 15:01:03,221] [ INFO] - ACS result: {'transcription': '我认为跑步最重要的就是给我带来了身体健康', 'acs': [{'w': '我', 'bg': 0, 'ed': 1.6800000000000002}, {'w': '我', 'bg': 2.1, 'ed': 4.28}, {'w': '康', 'bg': 3.2, 'ed': 4.92}]}

+ [2022-05-15 15:01:03,221] [ INFO] - Response time 5.036084 s.

+ ```

+

+- Python API

+ ```python

+ from paddlespeech.server.bin.paddlespeech_client import ACSClientExecutor

+

+ acs_executor = ACSClientExecutor()

+ res = acs_executor(

+ input='./zh.wav',

+ server_ip="127.0.0.1",

+ port=8490,)

+ print(res)

+ ```

+

+ Output:

+ ```bash

+ [2022-05-15 15:08:13,955] [ INFO] - acs http client start

+ [2022-05-15 15:08:13,956] [ INFO] - endpoint: http://127.0.0.1:8490/paddlespeech/asr/search

+ [2022-05-15 15:08:19,026] [ INFO] - acs http client finished

+ {'transcription': '我认为跑步最重要的就是给我带来了身体健康', 'acs': [{'w': '我', 'bg': 0, 'ed': 1.6800000000000002}, {'w': '我', 'bg': 2.1, 'ed': 4.28}, {'w': '康', 'bg': 3.2, 'ed': 4.92}]}

+ ```

diff --git a/demos/audio_content_search/README_cn.md b/demos/audio_content_search/README_cn.md

new file mode 100644

index 000000000..6f51c4cf2

--- /dev/null

+++ b/demos/audio_content_search/README_cn.md

@@ -0,0 +1,78 @@

+(简体中文|[English](./README.md))

+

+# 语音内容搜索

+## 介绍

+语音内容搜索是一项用计算机程序获取转录语音内容关键词时间戳的技术。

+

+这个 demo 是一个从给定音频文件获取其文本中关键词时间戳的实现,它可以通过使用 `PaddleSpeech` 的单个命令或 python 中的几行代码来实现。

+

+当前示例中检索词是

+```

+我

+康

+```

+## 使用方法

+### 1. 安装

+请看[安装文档](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/install_cn.md)。

+

+你可以从 medium,hard 三中方式中选择一种方式安装。

+依赖参见 requirements.txt, 安装依赖

+

+```

+pip install -r requriement.txt

+```

+

+### 2. 准备输入

+这个 demo 的输入应该是一个 WAV 文件(`.wav`),并且采样率必须与模型的采样率相同。

+

+可以下载此 demo 的示例音频:

+```bash

+wget -c https://paddlespeech.bj.bcebos.com/PaddleAudio/zh.wav

+```

+### 3. 使用方法

+- 命令行 (推荐使用)

+ ```bash

+ # 中文

+ paddlespeech_client acs --server_ip 127.0.0.1 --port 8090 --input ./zh.wav

+ ```

+

+ 使用方法:

+ ```bash

+ paddlespeech acs --help

+ ```

+ 参数:

+ - `input`(必须输入):用于识别的音频文件。

+ - `server_ip`: 服务的ip。

+ - `port`:服务的端口。

+ - `lang`:模型语言,默认值:`zh`。

+ - `sample_rate`:音频采样率,默认值:`16000`。

+ - `audio_format`: 音频的格式。

+

+ 输出:

+ ```bash

+ [2022-05-15 15:00:58,185] [ INFO] - acs http client start

+ [2022-05-15 15:00:58,185] [ INFO] - endpoint: http://127.0.0.1:8490/paddlespeech/asr/search

+ [2022-05-15 15:01:03,220] [ INFO] - acs http client finished

+ [2022-05-15 15:01:03,221] [ INFO] - ACS result: {'transcription': '我认为跑步最重要的就是给我带来了身体健康', 'acs': [{'w': '我', 'bg': 0, 'ed': 1.6800000000000002}, {'w': '我', 'bg': 2.1, 'ed': 4.28}, {'w': '康', 'bg': 3.2, 'ed': 4.92}]}

+ [2022-05-15 15:01:03,221] [ INFO] - Response time 5.036084 s.

+ ```

+

+- Python API

+ ```python

+ from paddlespeech.server.bin.paddlespeech_client import ACSClientExecutor

+

+ acs_executor = ACSClientExecutor()

+ res = acs_executor(

+ input='./zh.wav',

+ server_ip="127.0.0.1",

+ port=8490,)

+ print(res)

+ ```

+

+ 输出:

+ ```bash

+ [2022-05-15 15:08:13,955] [ INFO] - acs http client start

+ [2022-05-15 15:08:13,956] [ INFO] - endpoint: http://127.0.0.1:8490/paddlespeech/asr/search

+ [2022-05-15 15:08:19,026] [ INFO] - acs http client finished

+ {'transcription': '我认为跑步最重要的就是给我带来了身体健康', 'acs': [{'w': '我', 'bg': 0, 'ed': 1.6800000000000002}, {'w': '我', 'bg': 2.1, 'ed': 4.28}, {'w': '康', 'bg': 3.2, 'ed': 4.92}]}

+ ```

diff --git a/demos/audio_content_search/acs_clinet.py b/demos/audio_content_search/acs_clinet.py

new file mode 100644

index 000000000..11f99aca7

--- /dev/null

+++ b/demos/audio_content_search/acs_clinet.py

@@ -0,0 +1,49 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import argparse

+

+from paddlespeech.cli.log import logger

+from paddlespeech.server.utils.audio_handler import ASRHttpHandler

+

+

+def main(args):

+ logger.info("asr http client start")

+ audio_format = "wav"

+ sample_rate = 16000

+ lang = "zh"

+ handler = ASRHttpHandler(

+ server_ip=args.server_ip, port=args.port, endpoint=args.endpoint)

+ res = handler.run(args.wavfile, audio_format, sample_rate, lang)

+ # res = res['result']

+ logger.info(f"the final result: {res}")

+

+

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser(description="audio content search client")

+ parser.add_argument(

+ '--server_ip', type=str, default='127.0.0.1', help='server ip')

+ parser.add_argument('--port', type=int, default=8090, help='server port')

+ parser.add_argument(

+ "--wavfile",

+ action="store",

+ help="wav file path ",

+ default="./16_audio.wav")

+ parser.add_argument(

+ '--endpoint',

+ type=str,

+ default='/paddlespeech/asr/search',

+ help='server endpoint')

+ args = parser.parse_args()

+

+ main(args)

diff --git a/demos/audio_content_search/conf/acs_application.yaml b/demos/audio_content_search/conf/acs_application.yaml

new file mode 100644

index 000000000..dbddd06fb

--- /dev/null

+++ b/demos/audio_content_search/conf/acs_application.yaml

@@ -0,0 +1,35 @@

+#################################################################################

+# SERVER SETTING #

+#################################################################################

+host: 0.0.0.0

+port: 8490

+

+# The task format in the engin_list is: _

+# task choices = ['acs_python']

+# protocol = ['http'] (only one can be selected).

+# http only support offline engine type.

+protocol: 'http'

+engine_list: ['acs_python']

+

+

+#################################################################################

+# ENGINE CONFIG #

+#################################################################################

+

+################################### ACS #########################################

+################### acs task: engine_type: python ###############################

+acs_python:

+ task: acs

+ asr_protocol: 'websocket' # 'websocket'

+ offset: 1.0 # second

+ asr_server_ip: 127.0.0.1

+ asr_server_port: 8390

+ lang: 'zh'

+ word_list: "./conf/words.txt"

+ sample_rate: 16000

+ device: 'cpu' # set 'gpu:id' or 'cpu'

+ ping_timeout: 100 # seconds

+

+

+

+

diff --git a/demos/audio_content_search/conf/words.txt b/demos/audio_content_search/conf/words.txt

new file mode 100644

index 000000000..25510eb42

--- /dev/null

+++ b/demos/audio_content_search/conf/words.txt

@@ -0,0 +1,2 @@

+我

+康

\ No newline at end of file

diff --git a/demos/audio_content_search/conf/ws_conformer_application.yaml b/demos/audio_content_search/conf/ws_conformer_application.yaml

new file mode 100644

index 000000000..97201382f

--- /dev/null

+++ b/demos/audio_content_search/conf/ws_conformer_application.yaml

@@ -0,0 +1,43 @@

+#################################################################################

+# SERVER SETTING #

+#################################################################################

+host: 0.0.0.0

+port: 8390

+

+# The task format in the engin_list is: _

+# task choices = ['asr_online']

+# protocol = ['websocket'] (only one can be selected).

+# websocket only support online engine type.

+protocol: 'websocket'

+engine_list: ['asr_online']

+

+

+#################################################################################

+# ENGINE CONFIG #

+#################################################################################

+

+################################### ASR #########################################

+################### speech task: asr; engine_type: online #######################

+asr_online:

+ model_type: 'conformer_online_multicn'

+ am_model: # the pdmodel file of am static model [optional]

+ am_params: # the pdiparams file of am static model [optional]

+ lang: 'zh'

+ sample_rate: 16000

+ cfg_path:

+ decode_method: 'attention_rescoring'

+ force_yes: True

+ device: 'cpu' # cpu or gpu:id

+ am_predictor_conf:

+ device: # set 'gpu:id' or 'cpu'

+ switch_ir_optim: True

+ glog_info: False # True -> print glog

+ summary: True # False -> do not show predictor config

+

+ chunk_buffer_conf:

+ window_n: 7 # frame

+ shift_n: 4 # frame

+ window_ms: 25 # ms

+ shift_ms: 10 # ms

+ sample_rate: 16000

+ sample_width: 2

diff --git a/paddlespeech/server/conf/ws_application.yaml b/demos/audio_content_search/conf/ws_conformer_wenetspeech_application.yaml

similarity index 85%

rename from paddlespeech/server/conf/ws_application.yaml

rename to demos/audio_content_search/conf/ws_conformer_wenetspeech_application.yaml

index dee8d78ba..c23680bd5 100644

--- a/paddlespeech/server/conf/ws_application.yaml

+++ b/demos/audio_content_search/conf/ws_conformer_wenetspeech_application.yaml

@@ -4,11 +4,11 @@

# SERVER SETTING #

#################################################################################

host: 0.0.0.0

-port: 8090

+port: 8390

# The task format in the engin_list is: _

-# task choices = ['asr_online', 'tts_online']

-# protocol = ['websocket', 'http'] (only one can be selected).

+# task choices = ['asr_online']

+# protocol = ['websocket'] (only one can be selected).

# websocket only support online engine type.

protocol: 'websocket'

engine_list: ['asr_online']

@@ -21,7 +21,7 @@ engine_list: ['asr_online']

################################### ASR #########################################

################### speech task: asr; engine_type: online #######################

asr_online:

- model_type: 'deepspeech2online_aishell'

+ model_type: 'conformer_online_wenetspeech'

am_model: # the pdmodel file of am static model [optional]

am_params: # the pdiparams file of am static model [optional]

lang: 'zh'

@@ -29,7 +29,8 @@ asr_online:

cfg_path:

decode_method:

force_yes: True

-

+ device: 'cpu' # cpu or gpu:id

+ decode_method: "attention_rescoring"

am_predictor_conf:

device: # set 'gpu:id' or 'cpu'

switch_ir_optim: True

@@ -37,11 +38,9 @@ asr_online:

summary: True # False -> do not show predictor config

chunk_buffer_conf:

- frame_duration_ms: 80

- shift_ms: 40

- sample_rate: 16000

- sample_width: 2

window_n: 7 # frame

shift_n: 4 # frame

- window_ms: 20 # ms

+ window_ms: 25 # ms

shift_ms: 10 # ms

+ sample_rate: 16000

+ sample_width: 2

diff --git a/demos/audio_content_search/requirements.txt b/demos/audio_content_search/requirements.txt

new file mode 100644

index 000000000..4126a4868

--- /dev/null

+++ b/demos/audio_content_search/requirements.txt

@@ -0,0 +1 @@

+websocket-client

\ No newline at end of file

diff --git a/demos/audio_content_search/run.sh b/demos/audio_content_search/run.sh

new file mode 100755

index 000000000..e322a37c5

--- /dev/null

+++ b/demos/audio_content_search/run.sh

@@ -0,0 +1,7 @@

+export CUDA_VISIBLE_DEVICE=0,1,2,3

+# we need the streaming asr server

+nohup python3 streaming_asr_server.py --config_file conf/ws_conformer_application.yaml > streaming_asr.log 2>&1 &

+

+# start the acs server

+nohup paddlespeech_server start --config_file conf/acs_application.yaml > acs.log 2>&1 &

+

diff --git a/demos/audio_content_search/streaming_asr_server.py b/demos/audio_content_search/streaming_asr_server.py

new file mode 100644

index 000000000..011b009aa

--- /dev/null

+++ b/demos/audio_content_search/streaming_asr_server.py

@@ -0,0 +1,38 @@

+# Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import argparse

+

+from paddlespeech.cli.log import logger

+from paddlespeech.server.bin.paddlespeech_server import ServerExecutor

+if __name__ == "__main__":

+ parser = argparse.ArgumentParser(

+ prog='paddlespeech_server.start', add_help=True)

+ parser.add_argument(

+ "--config_file",

+ action="store",

+ help="yaml file of the app",

+ default=None,

+ required=True)

+

+ parser.add_argument(

+ "--log_file",

+ action="store",

+ help="log file",

+ default="./log/paddlespeech.log")

+ logger.info("start to parse the args")

+ args = parser.parse_args()

+

+ logger.info("start to launch the streaming asr server")

+ streaming_asr_server = ServerExecutor()

+ streaming_asr_server(config_file=args.config_file, log_file=args.log_file)

diff --git a/demos/audio_searching/README.md b/demos/audio_searching/README.md

index e829d991a..db38d14ed 100644

--- a/demos/audio_searching/README.md

+++ b/demos/audio_searching/README.md

@@ -89,7 +89,7 @@ Then to start the system server, and it provides HTTP backend services.

Then start the server with Fastapi.

```bash

- export PYTHONPATH=$PYTHONPATH:./src:../../paddleaudio

+ export PYTHONPATH=$PYTHONPATH:./src

python src/audio_search.py

```

diff --git a/demos/audio_searching/README_cn.md b/demos/audio_searching/README_cn.md

index c13742af7..6d38b91f5 100644

--- a/demos/audio_searching/README_cn.md

+++ b/demos/audio_searching/README_cn.md

@@ -91,7 +91,7 @@ ffce340b3790 minio/minio:RELEASE.2020-12-03T00-03-10Z "/usr/bin/docker-ent…"

启动用 Fastapi 构建的服务

```bash

- export PYTHONPATH=$PYTHONPATH:./src:../../paddleaudio

+ export PYTHONPATH=$PYTHONPATH:./src

python src/audio_search.py

```

diff --git a/demos/audio_searching/src/encode.py b/demos/audio_searching/src/encode.py

index c89a11c1f..f6bcb00ad 100644

--- a/demos/audio_searching/src/encode.py

+++ b/demos/audio_searching/src/encode.py

@@ -14,7 +14,7 @@

import numpy as np

from logs import LOGGER

-from paddlespeech.cli import VectorExecutor

+from paddlespeech.cli.vector import VectorExecutor

vector_executor = VectorExecutor()

diff --git a/demos/audio_tagging/README.md b/demos/audio_tagging/README.md

index 9d4af0be6..fc4a334ea 100644

--- a/demos/audio_tagging/README.md

+++ b/demos/audio_tagging/README.md

@@ -57,7 +57,7 @@ wget -c https://paddlespeech.bj.bcebos.com/PaddleAudio/cat.wav https://paddlespe

- Python API

```python

import paddle

- from paddlespeech.cli import CLSExecutor

+ from paddlespeech.cli.cls import CLSExecutor

cls_executor = CLSExecutor()

result = cls_executor(

diff --git a/demos/audio_tagging/README_cn.md b/demos/audio_tagging/README_cn.md

index 79f87bf8c..36b5d8aaf 100644

--- a/demos/audio_tagging/README_cn.md

+++ b/demos/audio_tagging/README_cn.md

@@ -57,7 +57,7 @@ wget -c https://paddlespeech.bj.bcebos.com/PaddleAudio/cat.wav https://paddlespe

- Python API

```python

import paddle

- from paddlespeech.cli import CLSExecutor

+ from paddlespeech.cli.cls import CLSExecutor

cls_executor = CLSExecutor()

result = cls_executor(

diff --git a/demos/automatic_video_subtitiles/README.md b/demos/automatic_video_subtitiles/README.md

index db6da40db..b815425ec 100644

--- a/demos/automatic_video_subtitiles/README.md

+++ b/demos/automatic_video_subtitiles/README.md

@@ -28,7 +28,8 @@ ffmpeg -i subtitle_demo1.mp4 -ac 1 -ar 16000 -vn input.wav

- Python API

```python

import paddle

- from paddlespeech.cli import ASRExecutor, TextExecutor

+ from paddlespeech.cli.asr import ASRExecutor

+ from paddlespeech.cli.text import TextExecutor

asr_executor = ASRExecutor()

text_executor = TextExecutor()

diff --git a/demos/automatic_video_subtitiles/README_cn.md b/demos/automatic_video_subtitiles/README_cn.md

index fc7b2cf6a..990ff6dbd 100644

--- a/demos/automatic_video_subtitiles/README_cn.md

+++ b/demos/automatic_video_subtitiles/README_cn.md

@@ -23,7 +23,8 @@ ffmpeg -i subtitle_demo1.mp4 -ac 1 -ar 16000 -vn input.wav

- Python API

```python

import paddle

- from paddlespeech.cli import ASRExecutor, TextExecutor

+ from paddlespeech.cli.asr import ASRExecutor

+ from paddlespeech.cli.text import TextExecutor

asr_executor = ASRExecutor()

text_executor = TextExecutor()

diff --git a/demos/automatic_video_subtitiles/recognize.py b/demos/automatic_video_subtitiles/recognize.py

index 72e3c3a85..304599d19 100644

--- a/demos/automatic_video_subtitiles/recognize.py

+++ b/demos/automatic_video_subtitiles/recognize.py

@@ -16,8 +16,8 @@ import os

import paddle

-from paddlespeech.cli import ASRExecutor

-from paddlespeech.cli import TextExecutor

+from paddlespeech.cli.asr import ASRExecutor

+from paddlespeech.cli.text import TextExecutor

# yapf: disable

parser = argparse.ArgumentParser(__doc__)

diff --git a/demos/custom_streaming_asr/README.md b/demos/custom_streaming_asr/README.md

new file mode 100644

index 000000000..da86e90ab

--- /dev/null

+++ b/demos/custom_streaming_asr/README.md

@@ -0,0 +1,68 @@

+([简体中文](./README_cn.md)|English)

+

+# Customized Auto Speech Recognition

+

+## introduction

+

+In some cases, we need to recognize the specific rare words with high accuracy. eg: address recognition in navigation apps. customized ASR can slove those issues.

+

+this demo is customized for expense account, which need to recognize rare address.

+

+the scripts are in https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/speechx/examples/custom_asr

+

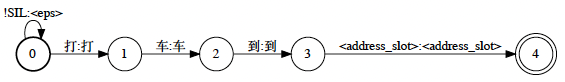

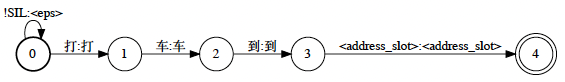

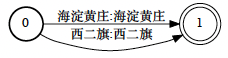

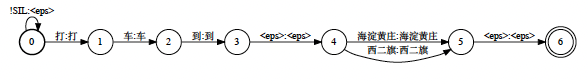

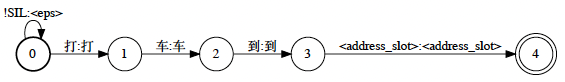

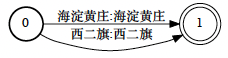

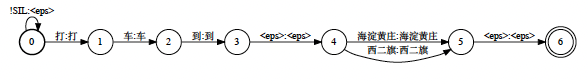

+* G with slot: 打车到 "address_slot"。

+

+

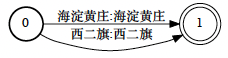

+* this is address slot wfst, you can add the address which want to recognize.

+

+

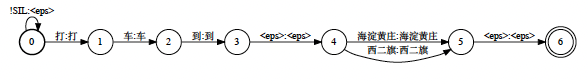

+* after replace operation, G = fstreplace(G_with_slot, address_slot), we will get the customized graph.

+

+

+## Usage

+### 1. Installation

+install paddle:2.2.2 docker.

+```

+sudo docker pull registry.baidubce.com/paddlepaddle/paddle:2.2.2

+

+sudo docker run --privileged --net=host --ipc=host -it --rm -v $PWD:/paddle --name=paddle_demo_docker registry.baidubce.com/paddlepaddle/paddle:2.2.2 /bin/bash

+```

+

+### 2. demo

+* run websocket_server.sh. This script will download resources and libs, and launch the service.

+```

+cd /paddle

+bash websocket_server.sh

+```

+this script run in two steps:

+1. download the resources.tar.gz, those direcotries will be found in resource directory.

+model: acustic model

+graph: the decoder graph (TLG.fst)

+lib: some libs

+bin: binary

+data: audio and wav.scp

+

+2. websocket_server_main launch the service.

+some params:

+port: the service port

+graph_path: the decoder graph path

+model_path: acustic model path

+please refer other params in those files:

+PaddleSpeech/speechx/speechx/decoder/param.h

+PaddleSpeech/speechx/examples/ds2_ol/websocket/websocket_server_main.cc

+

+* In other terminal, run script websocket_client.sh, the client will send data and get the results.

+```

+bash websocket_client.sh

+```

+websocket_client_main will launch the client, the wav_scp is the wav set, port is the server service port.

+

+* result:

+In the log of client, you will see the message below:

+```

+0513 10:58:13.827821 41768 recognizer_test_main.cc:56] wav len (sample): 70208

+I0513 10:58:13.884493 41768 feature_cache.h:52] set finished

+I0513 10:58:24.247171 41768 paddle_nnet.h:76] Tensor neml: 10240

+I0513 10:58:24.247249 41768 paddle_nnet.h:76] Tensor neml: 10240

+LOG ([5.5.544~2-f21d7]:main():decoder/recognizer_test_main.cc:90) the result of case_10 is 五月十二日二十二点三十六分加班打车回家四十一元

+```

diff --git a/demos/custom_streaming_asr/README_cn.md b/demos/custom_streaming_asr/README_cn.md

new file mode 100644

index 000000000..f9981a6ae

--- /dev/null

+++ b/demos/custom_streaming_asr/README_cn.md

@@ -0,0 +1,65 @@

+(简体中文|[English](./README.md))

+

+# 定制化语音识别演示

+## 介绍

+在一些场景中,识别系统需要高精度的识别一些稀有词,例如导航软件中地名识别。而通过定制化识别可以满足这一需求。

+

+这个 demo 是打车报销单的场景识别,需要识别一些稀有的地名,可以通过如下操作实现。

+

+相关脚本:https://github.com/PaddlePaddle/PaddleSpeech/tree/develop/speechx/examples/custom_asr

+

+* G with slot: 打车到 "address_slot"。

+

+

+* 这是 address slot wfst, 可以添加一些需要识别的地名.

+

+

+* 通过 replace 操作, G = fstreplace(G_with_slot, address_slot), 最终可以得到定制化的解码图。

+

+

+## 使用方法

+### 1. 配置环境

+安装paddle:2.2.2 docker镜像。

+```

+sudo docker pull registry.baidubce.com/paddlepaddle/paddle:2.2.2

+

+sudo docker run --privileged --net=host --ipc=host -it --rm -v $PWD:/paddle --name=paddle_demo_docker registry.baidubce.com/paddlepaddle/paddle:2.2.2 /bin/bash

+```

+

+### 2. 演示

+* 运行如下命令,完成相关资源和库的下载和服务启动。

+```

+cd /paddle

+bash websocket_server.sh

+```

+上面脚本完成了如下两个功能:

+1. 完成 resource.tar.gz 下载,解压后,会在 resource 中发现如下目录:

+model: 声学模型

+graph: 解码构图

+lib: 相关库

+bin: 运行程序

+data: 语音数据

+

+2. 通过 websocket_server_main 来启动服务。

+这里简单的介绍几个参数:

+port 是服务端口,

+graph_path 用来指定解码图文件,

+其他参数说明可参见代码:

+PaddleSpeech/speechx/speechx/decoder/param.h

+PaddleSpeech/speechx/examples/ds2_ol/websocket/websocket_server_main.cc

+

+* 在另一个终端中, 通过 client 发送数据,得到结果。运行如下命令:

+```

+bash websocket_client.sh

+```

+通过 websocket_client_main 来启动 client 服务,其中 wav_scp 是发送的语音句子集合,port 为服务端口。

+

+* 结果:

+client 的 log 中可以看到如下类似的结果

+```

+0513 10:58:13.827821 41768 recognizer_test_main.cc:56] wav len (sample): 70208

+I0513 10:58:13.884493 41768 feature_cache.h:52] set finished

+I0513 10:58:24.247171 41768 paddle_nnet.h:76] Tensor neml: 10240

+I0513 10:58:24.247249 41768 paddle_nnet.h:76] Tensor neml: 10240

+LOG ([5.5.544~2-f21d7]:main():decoder/recognizer_test_main.cc:90) the result of case_10 is 五月十二日二十二点三十六分加班打车回家四十一元

+```

diff --git a/demos/custom_streaming_asr/path.sh b/demos/custom_streaming_asr/path.sh

new file mode 100644

index 000000000..47462324d

--- /dev/null

+++ b/demos/custom_streaming_asr/path.sh

@@ -0,0 +1,2 @@

+export LD_LIBRARY_PATH=$PWD/resource/lib

+export PATH=$PATH:$PWD/resource/bin

diff --git a/demos/custom_streaming_asr/setup_docker.sh b/demos/custom_streaming_asr/setup_docker.sh

new file mode 100644

index 000000000..329a75db0

--- /dev/null

+++ b/demos/custom_streaming_asr/setup_docker.sh

@@ -0,0 +1 @@

+sudo nvidia-docker run --privileged --net=host --ipc=host -it --rm -v $PWD:/paddle --name=paddle_demo_docker registry.baidubce.com/paddlepaddle/paddle:2.2.2 /bin/bash

diff --git a/demos/custom_streaming_asr/websocket_client.sh b/demos/custom_streaming_asr/websocket_client.sh

new file mode 100755

index 000000000..ede076caf

--- /dev/null

+++ b/demos/custom_streaming_asr/websocket_client.sh

@@ -0,0 +1,18 @@

+#!/bin/bash

+set +x

+set -e

+

+. path.sh

+# input

+data=$PWD/data

+

+# output

+wav_scp=wav.scp

+

+export GLOG_logtostderr=1

+

+# websocket client

+websocket_client_main \

+ --wav_rspecifier=scp:$data/$wav_scp \

+ --streaming_chunk=0.36 \

+ --port=8881

diff --git a/demos/custom_streaming_asr/websocket_server.sh b/demos/custom_streaming_asr/websocket_server.sh

new file mode 100755

index 000000000..041c345be

--- /dev/null

+++ b/demos/custom_streaming_asr/websocket_server.sh

@@ -0,0 +1,33 @@

+#!/bin/bash

+set +x

+set -e

+

+export GLOG_logtostderr=1

+

+. path.sh

+#test websocket server

+

+model_dir=./resource/model

+graph_dir=./resource/graph

+cmvn=./data/cmvn.ark

+

+

+#paddle_asr_online/resource.tar.gz

+if [ ! -f $cmvn ]; then

+ wget -c https://paddlespeech.bj.bcebos.com/s2t/paddle_asr_online/resource.tar.gz

+ tar xzfv resource.tar.gz

+ ln -s ./resource/data .

+fi

+

+websocket_server_main \

+ --cmvn_file=$cmvn \

+ --streaming_chunk=0.1 \

+ --use_fbank=true \

+ --model_path=$model_dir/avg_10.jit.pdmodel \

+ --param_path=$model_dir/avg_10.jit.pdiparams \

+ --model_cache_shapes="5-1-2048,5-1-2048" \

+ --model_output_names=softmax_0.tmp_0,tmp_5,concat_0.tmp_0,concat_1.tmp_0 \

+ --word_symbol_table=$graph_dir/words.txt \

+ --graph_path=$graph_dir/TLG.fst --max_active=7500 \

+ --port=8881 \

+ --acoustic_scale=12

diff --git a/demos/punctuation_restoration/README.md b/demos/punctuation_restoration/README.md

index 518d437dc..458ab92f9 100644

--- a/demos/punctuation_restoration/README.md

+++ b/demos/punctuation_restoration/README.md

@@ -42,7 +42,7 @@ The input of this demo should be a text of the specific language that can be pas

- Python API

```python

import paddle

- from paddlespeech.cli import TextExecutor

+ from paddlespeech.cli.text import TextExecutor

text_executor = TextExecutor()

result = text_executor(

diff --git a/demos/punctuation_restoration/README_cn.md b/demos/punctuation_restoration/README_cn.md

index 9d4be8bf0..f25acdadb 100644

--- a/demos/punctuation_restoration/README_cn.md

+++ b/demos/punctuation_restoration/README_cn.md

@@ -44,7 +44,7 @@

- Python API

```python

import paddle

- from paddlespeech.cli import TextExecutor

+ from paddlespeech.cli.text import TextExecutor

text_executor = TextExecutor()

result = text_executor(

diff --git a/demos/speaker_verification/README.md b/demos/speaker_verification/README.md

index b79f3f7a1..900b5ae40 100644

--- a/demos/speaker_verification/README.md

+++ b/demos/speaker_verification/README.md

@@ -14,7 +14,7 @@ see [installation](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/doc

You can choose one way from easy, meduim and hard to install paddlespeech.

### 2. Prepare Input File

-The input of this demo should be a WAV file(`.wav`), and the sample rate must be the same as the model.

+The input of this cli demo should be a WAV file(`.wav`), and the sample rate must be the same as the model.

Here are sample files for this demo that can be downloaded:

```bash

@@ -53,51 +53,50 @@ wget -c https://paddlespeech.bj.bcebos.com/vector/audio/85236145389.wav

Output:

```bash

- demo [ 1.4217498 5.626253 -5.342073 1.1773866 3.308055

- 1.756596 5.167894 10.80636 -3.8226728 -5.6141334

- 2.623845 -0.8072968 1.9635103 -7.3128724 0.01103897

- -9.723131 0.6619743 -6.976803 10.213478 7.494748

- 2.9105635 3.8949256 3.7999806 7.1061673 16.905321

- -7.1493764 8.733103 3.4230042 -4.831653 -11.403367

- 11.232214 7.1274667 -4.2828417 2.452362 -5.130748

- -18.177666 -2.6116815 -11.000337 -6.7314315 1.6564683

- 0.7618269 1.1253023 -2.083836 4.725744 -8.782597

- -3.539873 3.814236 5.1420674 2.162061 4.096431

- -6.4162116 12.747448 1.9429878 -15.152943 6.417416

- 16.097002 -9.716668 -1.9920526 -3.3649497 -1.871939

- 11.567354 3.69788 11.258265 7.442363 9.183411

- 4.5281515 -1.2417862 4.3959084 6.6727695 5.8898783

- 7.627124 -0.66919386 -11.889693 -9.208865 -7.4274073

- -3.7776625 6.917234 -9.848748 -2.0944717 -5.135116

- 0.49563864 9.317534 -5.9141874 -1.8098574 -0.11738578

- -7.169265 -1.0578263 -5.7216787 -5.1173844 16.137651

- -4.473626 7.6624317 -0.55381083 9.631587 -6.4704556

- -8.548508 4.3716145 -0.79702514 4.478997 -2.9758704

- 3.272176 2.8382776 5.134597 -9.190781 -0.5657382

- -4.8745747 2.3165567 -5.984303 -2.1798875 0.35541576

- -0.31784213 9.493548 2.1144536 4.358092 -12.089823

- 8.451689 -7.925461 4.6242585 4.4289427 18.692003

- -2.6204622 -5.149185 -0.35821092 8.488551 4.981496

- -9.32683 -2.2544234 6.6417594 1.2119585 10.977129

- 16.555033 3.3238444 9.551863 -1.6676947 -0.79539716

- -8.605674 -0.47356385 2.6741948 -5.359179 -2.6673796

- 0.66607 15.443222 4.740594 -3.4725387 11.592567

- -2.054497 1.7361217 -8.265324 -9.30447 5.4068313

- -1.5180256 -7.746615 -6.089606 0.07112726 -0.34904733

- -8.649895 -9.998958 -2.564841 -0.53999114 2.601808

- -0.31927416 -1.8815292 -2.07215 -3.4105783 -8.2998085

- 1.483641 -15.365992 -8.288208 3.8847756 -3.4876456

- 7.3629923 0.4657332 3.132599 12.438889 -1.8337058

- 4.532936 2.7264361 10.145339 -6.521951 2.897153

- -3.3925855 5.079156 7.759716 4.677565 5.8457737

- 2.402413 7.7071047 3.9711342 -6.390043 6.1268735

- -3.7760346 -11.118123 ]

+ demo [ -1.3251206 7.8606825 -4.620626 0.3000721 2.2648535

+ -1.1931441 3.0647137 7.673595 -6.0044727 -12.02426

+ -1.9496069 3.1269536 1.618838 -7.6383104 -1.2299773

+ -12.338331 2.1373026 -5.3957124 9.717328 5.6752305

+ 3.7805123 3.0597172 3.429692 8.97601 13.174125

+ -0.53132284 8.9424715 4.46511 -4.4262476 -9.726503

+ 8.399328 7.2239175 -7.435854 2.9441683 -4.3430395

+ -13.886965 -1.6346735 -10.9027405 -5.311245 3.8007221

+ 3.8976038 -2.1230774 -2.3521194 4.151031 -7.4048667

+ 0.13911647 2.4626107 4.9664545 0.9897574 5.4839754

+ -3.3574002 10.1340065 -0.6120171 -10.403095 4.6007543

+ 16.00935 -7.7836914 -4.1945305 -6.9368606 1.1789556

+ 11.490801 4.2380238 9.550931 8.375046 7.5089145

+ -0.65707296 -0.30051577 2.8406055 3.0828028 0.730817

+ 6.148354 0.13766119 -13.424735 -7.7461405 -2.3227983

+ -8.305252 2.9879124 -10.995229 0.15211068 -2.3820348

+ -1.7984174 8.495629 -5.8522367 -3.755498 0.6989711

+ -5.2702994 -2.6188622 -1.8828466 -4.64665 14.078544

+ -0.5495333 10.579158 -3.2160501 9.349004 -4.381078

+ -11.675817 -2.8630207 4.5721755 2.246612 -4.574342

+ 1.8610188 2.3767874 5.6257877 -9.784078 0.64967257

+ -1.4579505 0.4263264 -4.9211264 -2.454784 3.4869802

+ -0.42654222 8.341269 1.356552 7.0966883 -13.102829

+ 8.016734 -7.1159344 1.8699781 0.208721 14.699384

+ -1.025278 -2.6107233 -2.5082312 8.427193 6.9138527

+ -6.2912464 0.6157366 2.489688 -3.4668267 9.921763

+ 11.200815 -0.1966403 7.4916005 -0.62312716 -0.25848144

+ -9.947997 -0.9611041 1.1649219 -2.1907122 -1.5028487

+ -0.51926106 15.165954 2.4649463 -0.9980445 7.4416637

+ -2.0768049 3.5896823 -7.3055434 -7.5620847 4.323335

+ 0.0804418 -6.56401 -2.3148053 -1.7642345 -2.4708817

+ -7.675618 -9.548878 -1.0177554 0.16986446 2.5877135

+ -1.8752296 -0.36614323 -6.0493784 -2.3965611 -5.9453387

+ 0.9424033 -13.155974 -7.457801 0.14658108 -3.742797

+ 5.8414927 -1.2872906 5.5694313 12.57059 1.0939219

+ 2.2142086 1.9181576 6.9914207 -5.888139 3.1409824

+ -2.003628 2.4434285 9.973139 5.03668 2.0051203

+ 2.8615603 5.860224 2.9176188 -1.6311141 2.0292206

+ -4.070415 -6.831437 ]

```

- Python API

```python

- import paddle

- from paddlespeech.cli import VectorExecutor

+ from paddlespeech.cli.vector import VectorExecutor

vector_executor = VectorExecutor()

audio_emb = vector_executor(

@@ -128,88 +127,88 @@ wget -c https://paddlespeech.bj.bcebos.com/vector/audio/85236145389.wav

```bash

# Vector Result:

Audio embedding Result:

- [ 1.4217498 5.626253 -5.342073 1.1773866 3.308055

- 1.756596 5.167894 10.80636 -3.8226728 -5.6141334

- 2.623845 -0.8072968 1.9635103 -7.3128724 0.01103897

- -9.723131 0.6619743 -6.976803 10.213478 7.494748

- 2.9105635 3.8949256 3.7999806 7.1061673 16.905321

- -7.1493764 8.733103 3.4230042 -4.831653 -11.403367

- 11.232214 7.1274667 -4.2828417 2.452362 -5.130748

- -18.177666 -2.6116815 -11.000337 -6.7314315 1.6564683

- 0.7618269 1.1253023 -2.083836 4.725744 -8.782597

- -3.539873 3.814236 5.1420674 2.162061 4.096431

- -6.4162116 12.747448 1.9429878 -15.152943 6.417416

- 16.097002 -9.716668 -1.9920526 -3.3649497 -1.871939

- 11.567354 3.69788 11.258265 7.442363 9.183411

- 4.5281515 -1.2417862 4.3959084 6.6727695 5.8898783

- 7.627124 -0.66919386 -11.889693 -9.208865 -7.4274073

- -3.7776625 6.917234 -9.848748 -2.0944717 -5.135116

- 0.49563864 9.317534 -5.9141874 -1.8098574 -0.11738578

- -7.169265 -1.0578263 -5.7216787 -5.1173844 16.137651

- -4.473626 7.6624317 -0.55381083 9.631587 -6.4704556

- -8.548508 4.3716145 -0.79702514 4.478997 -2.9758704

- 3.272176 2.8382776 5.134597 -9.190781 -0.5657382

- -4.8745747 2.3165567 -5.984303 -2.1798875 0.35541576

- -0.31784213 9.493548 2.1144536 4.358092 -12.089823

- 8.451689 -7.925461 4.6242585 4.4289427 18.692003

- -2.6204622 -5.149185 -0.35821092 8.488551 4.981496

- -9.32683 -2.2544234 6.6417594 1.2119585 10.977129

- 16.555033 3.3238444 9.551863 -1.6676947 -0.79539716

- -8.605674 -0.47356385 2.6741948 -5.359179 -2.6673796

- 0.66607 15.443222 4.740594 -3.4725387 11.592567

- -2.054497 1.7361217 -8.265324 -9.30447 5.4068313

- -1.5180256 -7.746615 -6.089606 0.07112726 -0.34904733

- -8.649895 -9.998958 -2.564841 -0.53999114 2.601808

- -0.31927416 -1.8815292 -2.07215 -3.4105783 -8.2998085

- 1.483641 -15.365992 -8.288208 3.8847756 -3.4876456

- 7.3629923 0.4657332 3.132599 12.438889 -1.8337058

- 4.532936 2.7264361 10.145339 -6.521951 2.897153

- -3.3925855 5.079156 7.759716 4.677565 5.8457737

- 2.402413 7.7071047 3.9711342 -6.390043 6.1268735

- -3.7760346 -11.118123 ]

+ [ -1.3251206 7.8606825 -4.620626 0.3000721 2.2648535

+ -1.1931441 3.0647137 7.673595 -6.0044727 -12.02426

+ -1.9496069 3.1269536 1.618838 -7.6383104 -1.2299773

+ -12.338331 2.1373026 -5.3957124 9.717328 5.6752305

+ 3.7805123 3.0597172 3.429692 8.97601 13.174125

+ -0.53132284 8.9424715 4.46511 -4.4262476 -9.726503

+ 8.399328 7.2239175 -7.435854 2.9441683 -4.3430395

+ -13.886965 -1.6346735 -10.9027405 -5.311245 3.8007221

+ 3.8976038 -2.1230774 -2.3521194 4.151031 -7.4048667

+ 0.13911647 2.4626107 4.9664545 0.9897574 5.4839754

+ -3.3574002 10.1340065 -0.6120171 -10.403095 4.6007543

+ 16.00935 -7.7836914 -4.1945305 -6.9368606 1.1789556

+ 11.490801 4.2380238 9.550931 8.375046 7.5089145

+ -0.65707296 -0.30051577 2.8406055 3.0828028 0.730817

+ 6.148354 0.13766119 -13.424735 -7.7461405 -2.3227983

+ -8.305252 2.9879124 -10.995229 0.15211068 -2.3820348

+ -1.7984174 8.495629 -5.8522367 -3.755498 0.6989711

+ -5.2702994 -2.6188622 -1.8828466 -4.64665 14.078544

+ -0.5495333 10.579158 -3.2160501 9.349004 -4.381078

+ -11.675817 -2.8630207 4.5721755 2.246612 -4.574342

+ 1.8610188 2.3767874 5.6257877 -9.784078 0.64967257

+ -1.4579505 0.4263264 -4.9211264 -2.454784 3.4869802

+ -0.42654222 8.341269 1.356552 7.0966883 -13.102829

+ 8.016734 -7.1159344 1.8699781 0.208721 14.699384

+ -1.025278 -2.6107233 -2.5082312 8.427193 6.9138527

+ -6.2912464 0.6157366 2.489688 -3.4668267 9.921763

+ 11.200815 -0.1966403 7.4916005 -0.62312716 -0.25848144

+ -9.947997 -0.9611041 1.1649219 -2.1907122 -1.5028487

+ -0.51926106 15.165954 2.4649463 -0.9980445 7.4416637

+ -2.0768049 3.5896823 -7.3055434 -7.5620847 4.323335

+ 0.0804418 -6.56401 -2.3148053 -1.7642345 -2.4708817

+ -7.675618 -9.548878 -1.0177554 0.16986446 2.5877135

+ -1.8752296 -0.36614323 -6.0493784 -2.3965611 -5.9453387

+ 0.9424033 -13.155974 -7.457801 0.14658108 -3.742797

+ 5.8414927 -1.2872906 5.5694313 12.57059 1.0939219

+ 2.2142086 1.9181576 6.9914207 -5.888139 3.1409824

+ -2.003628 2.4434285 9.973139 5.03668 2.0051203

+ 2.8615603 5.860224 2.9176188 -1.6311141 2.0292206

+ -4.070415 -6.831437 ]

# get the test embedding

Test embedding Result:

- [ -1.902964 2.0690894 -8.034194 3.5472693 0.18089125

- 6.9085927 1.4097427 -1.9487704 -10.021278 -0.20755845

- -8.04332 4.344489 2.3200977 -14.306299 5.184692

- -11.55602 -3.8497238 0.6444722 1.2833948 2.6766639

- 0.5878921 0.7946299 1.7207596 2.5791872 14.998469

- -1.3385371 15.031221 -0.8006958 1.99287 -9.52007

- 2.435466 4.003221 -4.33817 -4.898601 -5.304714

- -18.033886 10.790787 -12.784645 -5.641755 2.9761686

- -10.566622 1.4839455 6.152458 -5.7195854 2.8603241

- 6.112133 8.489869 5.5958056 1.2836679 -1.2293907

- 0.89927405 7.0288725 -2.854029 -0.9782962 5.8255906

- 14.905906 -5.025907 0.7866458 -4.2444224 -16.354029

- 10.521315 0.9604709 -3.3257897 7.144871 -13.592733

- -8.568869 -1.7953678 0.26313916 10.916714 -6.9374123

- 1.857403 -6.2746415 2.8154466 -7.2338667 -2.293357

- -0.05452765 5.4287076 5.0849075 -6.690375 -1.6183422

- 3.654291 0.94352573 -9.200294 -5.4749465 -3.5235846

- 1.3420814 4.240421 -2.772944 -2.8451524 16.311104

- 4.2969875 -1.762936 -12.5758915 8.595198 -0.8835239

- -1.5708797 1.568961 1.1413603 3.5032008 -0.45251232

- -6.786333 16.89443 5.3366146 -8.789056 0.6355629

- 3.2579517 -3.328322 7.5969577 0.66025066 -6.550468

- -9.148656 2.020372 -0.4615173 1.1965656 -3.8764873

- 11.6562195 -6.0750933 12.182899 3.2218833 0.81969476

- 5.570001 -3.8459578 -7.205299 7.9262037 -7.6611166

- -5.249467 -2.2671914 7.2658715 -13.298164 4.821147

- -2.7263982 11.691089 -3.8918593 -2.838112 -1.0336838

- -3.8034165 2.8536487 -5.60398 -1.1972581 1.3455094

- -3.4903061 2.2408795 5.5010734 -3.970756 11.99696

- -7.8858757 0.43160373 -5.5059714 4.3426995 16.322706

- 11.635366 0.72157705 -9.245714 -3.91465 -4.449838

- -1.5716927 7.713747 -2.2430465 -6.198303 -13.481864

- 2.8156567 -5.7812386 5.1456156 2.7289324 -14.505571

- 13.270688 3.448231 -7.0659585 4.5886116 -4.466099

- -0.296428 -11.463529 -2.6076477 14.110243 -6.9725137

- -1.9962958 2.7119343 19.391657 0.01961198 14.607133

- -1.6695905 -4.391516 1.3131028 -6.670972 -5.888604

- 12.0612335 5.9285784 3.3715196 1.492534 10.723728

- -0.95514804 -12.085431 ]

+ [ 2.5247195 5.119042 -4.335273 4.4583654 5.047907

+ 3.5059214 1.6159848 0.49364898 -11.6899185 -3.1014526

+ -5.6589785 -0.42684984 2.674276 -11.937654 6.2248464

+ -10.776924 -5.694543 1.112041 1.5709964 1.0961034

+ 1.3976512 2.324352 1.339981 5.279319 13.734659

+ -2.5753925 13.651442 -2.2357535 5.1575427 -3.251567

+ 1.4023279 6.1191974 -6.0845175 -1.3646189 -2.6789894

+ -15.220778 9.779349 -9.411551 -6.388947 6.8313975

+ -9.245996 0.31196198 2.5509644 -4.413065 6.1649427

+ 6.793837 2.6328635 8.620976 3.4832475 0.52491665

+ 2.9115407 5.8392377 0.6702376 -3.2726715 2.6694255

+ 16.91701 -5.5811176 0.23362345 -4.5573606 -11.801059

+ 14.728292 -0.5198082 -3.999922 7.0927105 -7.0459595

+ -5.4389 -0.46420583 -5.1085467 10.376568 -8.889225

+ -0.37705845 -1.659806 2.6731026 -7.1909504 1.4608804

+ -2.163136 -0.17949677 4.0241547 0.11319201 0.601279

+ 2.039692 3.1910992 -11.649526 -8.121584 -4.8707457

+ 0.3851982 1.4231744 -2.3321972 0.99332285 14.121717

+ 5.899413 0.7384519 -17.760096 10.555021 4.1366534

+ -0.3391071 -0.20792882 3.208204 0.8847948 -8.721497

+ -6.432868 13.006379 4.8956 -9.155822 -1.9441519

+ 5.7815638 -2.066733 10.425042 -0.8802383 -2.4314315

+ -9.869258 0.35095334 -5.3549943 2.1076174 -8.290468

+ 8.4433365 -4.689333 9.334139 -2.172678 -3.0250976

+ 8.394216 -3.2110903 -7.93868 2.3960824 -2.3213403

+ -1.4963245 -3.476059 4.132903 -10.893354 4.362673

+ -0.45456508 10.258634 -1.1655927 -6.7799754 0.22885278

+ -4.399287 2.333433 -4.84745 -4.2752337 -1.3577863

+ -1.0685898 9.505196 7.3062205 0.08708266 12.927811

+ -9.57974 1.3936648 -1.9444873 5.776769 15.251903

+ 10.6118355 -1.4903594 -9.535318 -3.6553776 -1.6699586

+ -0.5933151 7.600357 -4.8815503 -8.698617 -15.855757

+ 0.25632986 -7.2235737 0.9506656 0.7128582 -9.051738

+ 8.74869 -1.6426028 -6.5762258 2.506905 -6.7431564

+ 5.129912 -12.189555 -3.6435068 12.068113 -6.0059533

+ -2.3535995 2.9014351 22.3082 -1.5563312 13.193291

+ 2.7583609 -7.468798 1.3407065 -4.599617 -6.2345777

+ 10.7689295 7.137627 5.099476 0.3473359 9.647881

+ -2.0484571 -5.8549366 ]

# get the score between enroll and test

- Eembeddings Score: 0.4292638301849365

+ Eembeddings Score: 0.45332613587379456

```

### 4.Pretrained Models

diff --git a/demos/speaker_verification/README_cn.md b/demos/speaker_verification/README_cn.md

index db382f298..f6afa86ac 100644

--- a/demos/speaker_verification/README_cn.md

+++ b/demos/speaker_verification/README_cn.md

@@ -4,16 +4,16 @@

## 介绍

声纹识别是一项用计算机程序自动提取说话人特征的技术。

-这个 demo 是一个从给定音频文件提取说话人特征,它可以通过使用 `PaddleSpeech` 的单个命令或 python 中的几行代码来实现。

+这个 demo 是从一个给定音频文件中提取说话人特征,它可以通过使用 `PaddleSpeech` 的单个命令或 python 中的几行代码来实现。

## 使用方法

### 1. 安装

请看[安装文档](https://github.com/PaddlePaddle/PaddleSpeech/blob/develop/docs/source/install_cn.md)。

-你可以从 easy,medium,hard 三中方式中选择一种方式安装。

+你可以从easy medium,hard 三种方式中选择一种方式安装。

### 2. 准备输入

-这个 demo 的输入应该是一个 WAV 文件(`.wav`),并且采样率必须与模型的采样率相同。

+声纹cli demo 的输入应该是一个 WAV 文件(`.wav`),并且采样率必须与模型的采样率相同。

可以下载此 demo 的示例音频:

```bash

@@ -51,51 +51,51 @@ wget -c https://paddlespeech.bj.bcebos.com/vector/audio/85236145389.wav

输出:

```bash

- demo [ 1.4217498 5.626253 -5.342073 1.1773866 3.308055

- 1.756596 5.167894 10.80636 -3.8226728 -5.6141334

- 2.623845 -0.8072968 1.9635103 -7.3128724 0.01103897

- -9.723131 0.6619743 -6.976803 10.213478 7.494748

- 2.9105635 3.8949256 3.7999806 7.1061673 16.905321

- -7.1493764 8.733103 3.4230042 -4.831653 -11.403367

- 11.232214 7.1274667 -4.2828417 2.452362 -5.130748

- -18.177666 -2.6116815 -11.000337 -6.7314315 1.6564683

- 0.7618269 1.1253023 -2.083836 4.725744 -8.782597

- -3.539873 3.814236 5.1420674 2.162061 4.096431

- -6.4162116 12.747448 1.9429878 -15.152943 6.417416

- 16.097002 -9.716668 -1.9920526 -3.3649497 -1.871939

- 11.567354 3.69788 11.258265 7.442363 9.183411

- 4.5281515 -1.2417862 4.3959084 6.6727695 5.8898783

- 7.627124 -0.66919386 -11.889693 -9.208865 -7.4274073

- -3.7776625 6.917234 -9.848748 -2.0944717 -5.135116

- 0.49563864 9.317534 -5.9141874 -1.8098574 -0.11738578

- -7.169265 -1.0578263 -5.7216787 -5.1173844 16.137651

- -4.473626 7.6624317 -0.55381083 9.631587 -6.4704556

- -8.548508 4.3716145 -0.79702514 4.478997 -2.9758704

- 3.272176 2.8382776 5.134597 -9.190781 -0.5657382

- -4.8745747 2.3165567 -5.984303 -2.1798875 0.35541576

- -0.31784213 9.493548 2.1144536 4.358092 -12.089823

- 8.451689 -7.925461 4.6242585 4.4289427 18.692003

- -2.6204622 -5.149185 -0.35821092 8.488551 4.981496

- -9.32683 -2.2544234 6.6417594 1.2119585 10.977129

- 16.555033 3.3238444 9.551863 -1.6676947 -0.79539716

- -8.605674 -0.47356385 2.6741948 -5.359179 -2.6673796

- 0.66607 15.443222 4.740594 -3.4725387 11.592567

- -2.054497 1.7361217 -8.265324 -9.30447 5.4068313

- -1.5180256 -7.746615 -6.089606 0.07112726 -0.34904733

- -8.649895 -9.998958 -2.564841 -0.53999114 2.601808

- -0.31927416 -1.8815292 -2.07215 -3.4105783 -8.2998085

- 1.483641 -15.365992 -8.288208 3.8847756 -3.4876456

- 7.3629923 0.4657332 3.132599 12.438889 -1.8337058

- 4.532936 2.7264361 10.145339 -6.521951 2.897153

- -3.3925855 5.079156 7.759716 4.677565 5.8457737

- 2.402413 7.7071047 3.9711342 -6.390043 6.1268735

- -3.7760346 -11.118123 ]

+ [ -1.3251206 7.8606825 -4.620626 0.3000721 2.2648535

+ -1.1931441 3.0647137 7.673595 -6.0044727 -12.02426

+ -1.9496069 3.1269536 1.618838 -7.6383104 -1.2299773

+ -12.338331 2.1373026 -5.3957124 9.717328 5.6752305

+ 3.7805123 3.0597172 3.429692 8.97601 13.174125

+ -0.53132284 8.9424715 4.46511 -4.4262476 -9.726503

+ 8.399328 7.2239175 -7.435854 2.9441683 -4.3430395

+ -13.886965 -1.6346735 -10.9027405 -5.311245 3.8007221

+ 3.8976038 -2.1230774 -2.3521194 4.151031 -7.4048667

+ 0.13911647 2.4626107 4.9664545 0.9897574 5.4839754

+ -3.3574002 10.1340065 -0.6120171 -10.403095 4.6007543

+ 16.00935 -7.7836914 -4.1945305 -6.9368606 1.1789556

+ 11.490801 4.2380238 9.550931 8.375046 7.5089145

+ -0.65707296 -0.30051577 2.8406055 3.0828028 0.730817

+ 6.148354 0.13766119 -13.424735 -7.7461405 -2.3227983

+ -8.305252 2.9879124 -10.995229 0.15211068 -2.3820348

+ -1.7984174 8.495629 -5.8522367 -3.755498 0.6989711

+ -5.2702994 -2.6188622 -1.8828466 -4.64665 14.078544

+ -0.5495333 10.579158 -3.2160501 9.349004 -4.381078

+ -11.675817 -2.8630207 4.5721755 2.246612 -4.574342

+ 1.8610188 2.3767874 5.6257877 -9.784078 0.64967257

+ -1.4579505 0.4263264 -4.9211264 -2.454784 3.4869802

+ -0.42654222 8.341269 1.356552 7.0966883 -13.102829

+ 8.016734 -7.1159344 1.8699781 0.208721 14.699384

+ -1.025278 -2.6107233 -2.5082312 8.427193 6.9138527

+ -6.2912464 0.6157366 2.489688 -3.4668267 9.921763

+ 11.200815 -0.1966403 7.4916005 -0.62312716 -0.25848144

+ -9.947997 -0.9611041 1.1649219 -2.1907122 -1.5028487

+ -0.51926106 15.165954 2.4649463 -0.9980445 7.4416637

+ -2.0768049 3.5896823 -7.3055434 -7.5620847 4.323335

+ 0.0804418 -6.56401 -2.3148053 -1.7642345 -2.4708817

+ -7.675618 -9.548878 -1.0177554 0.16986446 2.5877135

+ -1.8752296 -0.36614323 -6.0493784 -2.3965611 -5.9453387

+ 0.9424033 -13.155974 -7.457801 0.14658108 -3.742797

+ 5.8414927 -1.2872906 5.5694313 12.57059 1.0939219

+ 2.2142086 1.9181576 6.9914207 -5.888139 3.1409824

+ -2.003628 2.4434285 9.973139 5.03668 2.0051203

+ 2.8615603 5.860224 2.9176188 -1.6311141 2.0292206

+ -4.070415 -6.831437 ]

```

- Python API

```python

import paddle

- from paddlespeech.cli import VectorExecutor

+ from paddlespeech.cli.vector import VectorExecutor

vector_executor = VectorExecutor()

audio_emb = vector_executor(