
Get started with Python and Scikit-Learn for Regression models

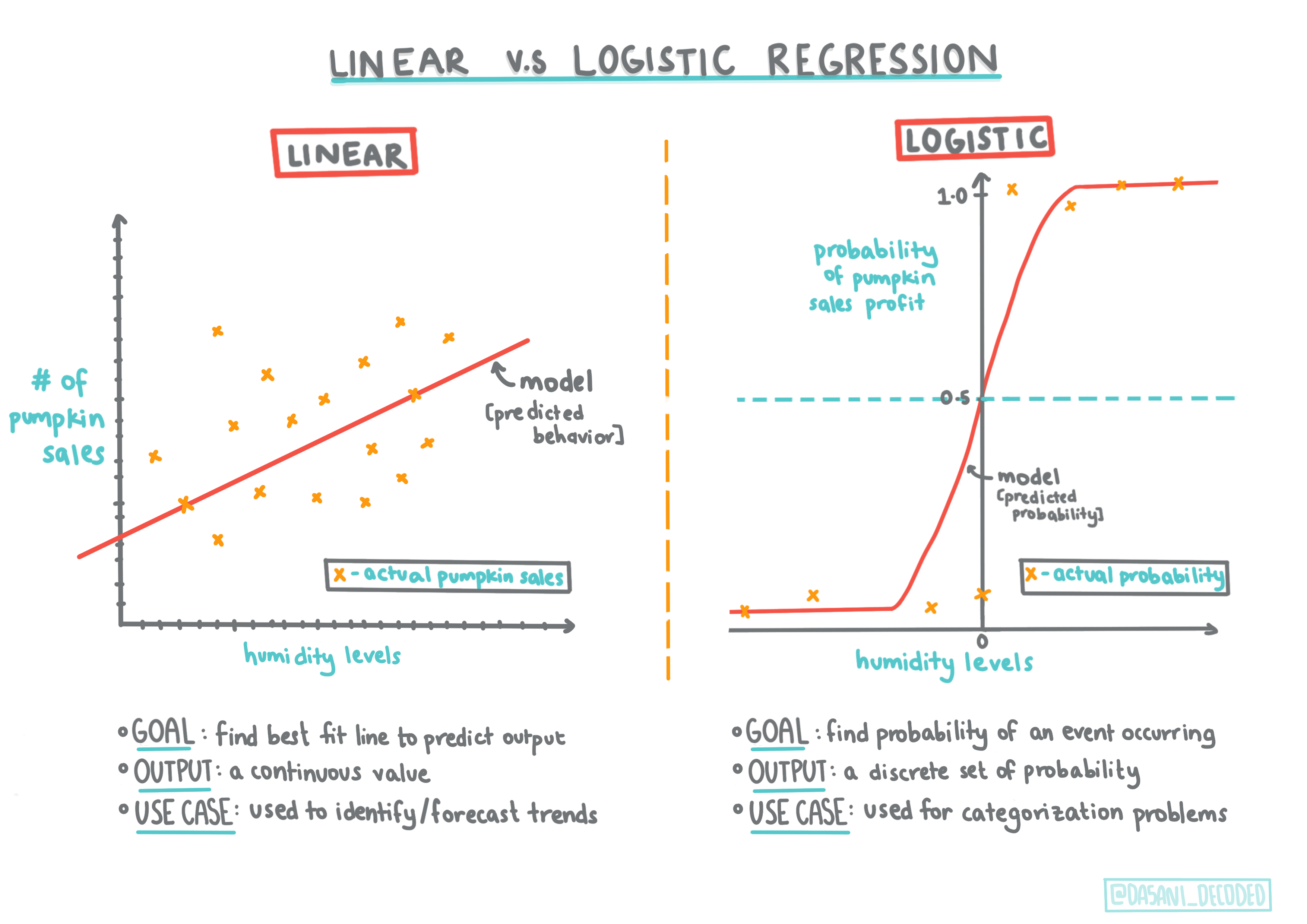

Infographic by Dasani Madipalli

Pre-lecture quiz

Introduction

The lessons in this section cover types of Regression in the context of machine learning. Regression models can help determine the relationship between variables. This type of model can predict values such as length, temperature, or age, thus uncovering relationships between variables as it analyzes data points.

In this series of lessons, you'll discover the difference between Linear and Logistic Regression, and when you should use one or the other.

But before you do anything, make sure you have the right tools in place!

In this lesson, you will learn:

- How to configure your computer for local machine learning tasks

- How to work with Jupyter notebooks

- How to install and get started with Scikit-Learn

- How to build a Linear Regression model in a hands-on exercise

Installations and Configurations

Click this image to watch a video on using Python within VS Code.

- Ensure that Python is installed on your computer. You will use Python for many data science and machine learning tasks. Many computer systems already include a Python installation. There are useful Python Coding Packs available as well to ease the setup for some users. Some usages of Python, however, require one version of the software, whereas others require a different version. For this reason, it's useful to work within a virtual environment.

- Make sure you have Visual Studio Code installed on your computer. Follow these instructions for the basic installation. You are going to use Python in Visual Studio Code in this course, so you might want to brush up on how to configure VS Code for Python development.

- Get comfortable with Python by working through this collection of Learn modules.

- Install Scikit-Learn by following these instructions. Since you need to ensure that you use Python 3, it's recommended that you use a virtual environment. Note, if you are installing this library on an M1 Mac, there are special instructions on the page linked above.
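Once the installation steps above are done, a quick sanity check can confirm that the active environment is the one you expect. The snippet below is a minimal sketch, not part of the original lesson: it only prints the interpreter and Scikit-Learn versions and assumes both are installed in the currently selected environment.

```python
import sys

import sklearn

# Show which interpreter and which Scikit-Learn build this environment uses.
python_version = sys.version.split()[0]
sklearn_version = sklearn.__version__

print("Python:", python_version)
print("scikit-learn:", sklearn_version)

# The lesson expects Python 3.
assert sys.version_info.major >= 3, "Python 3 is required for this lesson"
```

If the import fails, the library is most likely installed in a different environment than the one your editor or notebook kernel has selected.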

Your ML Authoring Environment

You are going to use notebooks to develop your Python code and create machine learning models. This type of file is a common tool for data scientists, and it can be identified by its suffix or extension, .ipynb.

Notebooks are an interactive environment that allows the developer to both write code and add notes and documentation around it, which is quite helpful for experimental or research-oriented projects.

Working with A Notebook

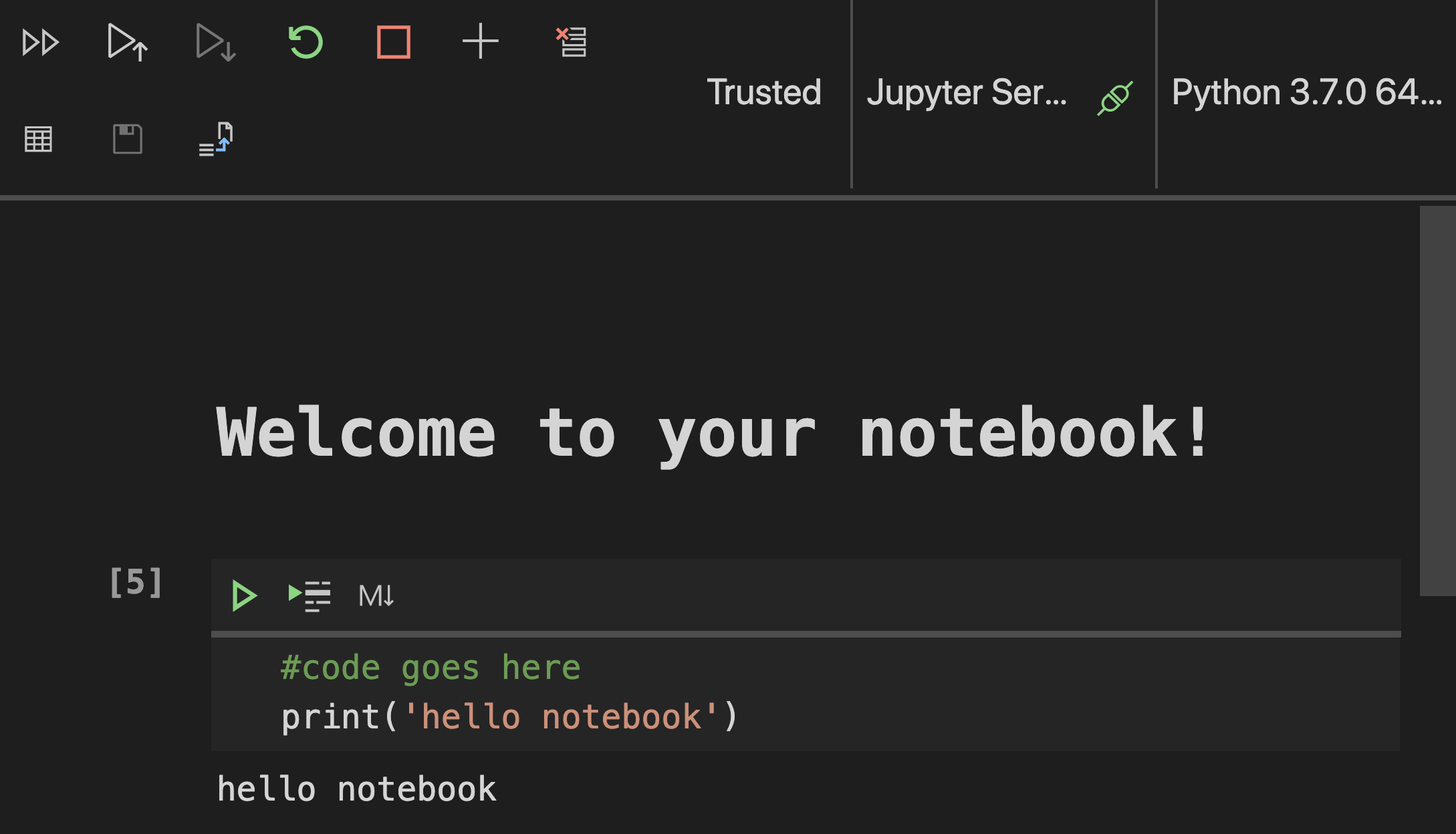

In this folder, you will find the file notebook.ipynb. If you open it in VS Code, assuming VS Code is properly configured, a Jupyter server will start with a Python 3 kernel. You will find areas of the notebook that can be 'run' by pressing the arrows next to code blocks, and other areas that contain text.

In your notebook, add a comment. To do this, click the 'md' icon and add a bit of markdown, like # Welcome to your notebook.

Next, add some Python code: Type print('hello notebook') and click the arrow to run the code. You should see the printed statement, 'hello notebook'.

You can interleave your code with comments to self-document the notebook.

✅ Think for a minute how different a web developer's working environment is versus that of a data scientist.

Up and Running with Scikit-Learn

Now that Python is set up in your local environment and you are comfortable with Jupyter notebooks, let's get equally comfortable with Scikit-Learn (pronounce it sci as in science). Scikit-Learn provides an extensive API to help you perform ML tasks.

According to their website, "Scikit-learn is an open source machine learning library that supports supervised and unsupervised learning. It also provides various tools for model fitting, data preprocessing, model selection and evaluation, and many other utilities."

Let's unpack some of this jargon:

🎓 A machine learning model is a mathematical model that generates predictions given data to which it has not been exposed. It builds these predictions by analyzing data and extrapolating patterns.

🎓 Supervised Learning works by mapping an input to an output based on example pairs. It uses labeled training data to build a function to make predictions. Download a printable Zine about Supervised Learning. Regression, which is covered in this group of lessons, is a type of supervised learning.

🎓 Unsupervised Learning works with unlabeled data: instead of learning from example input-output pairs, it looks for structure in the data itself, such as groupings. Download a printable Zine about Unsupervised Learning.

🎓 Model Fitting in the context of machine learning refers to how accurately the model's underlying function captures the data, including data with which it is not familiar. Underfitting and overfitting are common problems that degrade the quality of the model, as the model fits either not well enough or too well. This causes the model to make predictions either too loosely aligned or too closely aligned with its training data. An overfit model predicts training data too well because it has learned the data's details and noise too well. An underfit model is not accurate, as it can neither accurately analyze its training data nor data it has not yet 'seen'.
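To make underfitting and overfitting more concrete, here is a small sketch that is not part of the original lesson. It assumes synthetic data (a noisy sine curve) and uses NumPy polynomial fits of increasing degree as stand-in models; the degrees, sample sizes, and noise level are arbitrary illustrative choices.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)


def noisy_sine(n):
    """Draw n points from a sine curve with added Gaussian noise (synthetic data)."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y


def mse(model, x, y):
    """Mean squared error of a fitted polynomial on the given points."""
    return float(np.mean((model(x) - y) ** 2))


x_train, y_train = noisy_sine(20)    # small training set
x_test, y_test = noisy_sine(200)     # separate data the model never sees

results = {}
for degree in (1, 3, 9):
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {degree}: train MSE={results[degree][0]:.4f}, "
          f"test MSE={results[degree][1]:.4f}")
```

The training error can only go down as the degree grows, because a higher-degree polynomial can always reproduce a lower-degree one. The error on unseen data is the number to watch: a straight line is usually too simple for a sine curve (underfitting), while a very flexible curve tends to chase the noise in the 20 training points (overfitting).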

TODO: Infographic to show underfitting/overfitting like this https://miro.medium.com/max/1525/1*TzMis7bbuaU1OE2q64hnbg.png

🎓 Data Preprocessing is the process whereby data scientists clean and convert data for use in the machine learning lifecycle.

🎓 Model Selection and Evaluation is the process whereby data scientists evaluate the performance of a model, or any other relevant metric, by feeding it unseen data, and then select the most appropriate model for the task at hand.

🎓 Feature Variable A feature is a measurable property of your data. In many datasets it is expressed as a column heading like 'date', 'size', or 'color'.

🎓 Training and Testing datasets Throughout this curriculum, you will divide up a dataset into at least two parts, one large group of data for 'training' and a smaller part for 'testing'. Sometimes you'll also find a 'validation' set. A training set is the group of examples you use to train a model. A validation set is a smaller independent group of examples that you use to tune the model's hyperparameters, or architecture, to improve the model. A test dataset is another independent group of data, often gathered from the original data, that you use to confirm the performance of the built model.
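The three-way division described above can be sketched with two calls to Scikit-Learn's `train_test_split`. This is an illustrative example rather than part of the lesson's notebook; the 60/20/20 proportions and the `random_state` value are assumptions chosen for the demonstration.

```python
from sklearn import datasets, model_selection

X, y = datasets.load_diabetes(return_X_y=True)

# First hold out 20% of the data as the final test set.
X_rest, X_test, y_rest, y_test = model_selection.train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Then carve a validation set out of what remains (25% of 80% = 20% overall).
X_train, X_val, y_train, y_val = model_selection.train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=0
)

print("train:", len(X_train), "validation:", len(X_val), "test:", len(X_test))
```

The training portion stays the largest; the validation portion is used while tuning, and the test portion is left untouched until the end.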

🎓 Feature Selection and Feature Extraction How do you know which variable to choose when building a model? You'll probably go through a process of feature selection or feature extraction to choose the right variables for the most performant model. They're not the same thing, however: "Feature extraction creates new features from functions of the original features, whereas feature selection returns a subset of the features." source
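The difference between the two approaches can be seen in a short sketch. It is not taken from the lesson: it assumes `SelectKBest` with the `f_regression` score as one possible selection method and `PCA` as one possible extraction method, applied to the built-in diabetes data, with three output features as an arbitrary choice.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_regression

X, y = datasets.load_diabetes(return_X_y=True)

# Feature selection: keep 3 of the original 10 columns, unchanged.
selector = SelectKBest(score_func=f_regression, k=3)
X_selected = selector.fit_transform(X, y)
kept_columns = selector.get_support(indices=True)
print("selected column indices:", kept_columns)
print("selection returns original columns:",
      np.array_equal(X_selected, X[:, kept_columns]))

# Feature extraction: build 3 new columns as combinations of all 10.
pca = PCA(n_components=3)
X_extracted = pca.fit_transform(X)
print("extracted shape:", X_extracted.shape)
```

Both results have three columns, but the selected columns are a subset of the original data, while the extracted columns are new values computed from all of the original features.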

In this course, you will use Scikit-Learn and other tools to build machine learning models to perform what we call 'traditional machine learning' tasks. We have deliberately avoided neural networks and deep learning, as they are better covered in our forthcoming 'AI for Beginners' curriculum.

Scikit-Learn makes it straightforward to build models and evaluate them for use. It is primarily focused on using numeric data and contains several ready-made datasets for use as learning tools. It also includes pre-built models for students to try. Let's explore the process of loading prepackaged data and using a built-in estimator to train a first ML model with Scikit-Learn.

Your First Scikit-Learn Notebook

This tutorial was inspired by the Linear Regression example on Scikit-Learn's web site.

In the notebook.ipynb file associated with this lesson, clear out all the cells by pressing the 'trash can' icon.

In this section, you will work with a small dataset about diabetes that is built into Scikit-Learn for learning purposes. Imagine that you wanted to test a treatment for diabetic patients. Machine Learning models might help you determine which patients would respond better to the treatment, based on combinations of variables. Even a very basic Regression model, when visualized, might show information about variables that would help you organize your theoretical clinical trials.

✅ There are many types of Regression methods, and which one you pick depends on the answer you're looking for. If you want to predict the probable height for a person of a given age, you'd use Linear Regression, as you're seeking a numeric value. If you're interested in discovering whether a type of recipe should be considered vegan or not, you're looking for a category assignment so you would use Logistic Regression. You'll learn more about Logistic Regression later. Think a bit about some questions you can ask of data, and which of these methods would be more appropriate.

Let's get started on this task.

- Import some libraries to help with your tasks. First, import `matplotlib`, a useful graphing tool. We will use it to create a line plot. Also import `numpy`, a useful library for handling numeric data in Python. Load up `datasets` and the `linear_model` from the Scikit-Learn library. Load `model_selection` for splitting data into training and test sets.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn import datasets, linear_model, model_selection
```

- Print out a bit of the built-in diabetes dataset. It includes 442 samples of data around diabetes, with 10 feature variables, some of which include:

  - age: age in years
  - bmi: body mass index
  - bp: average blood pressure
  - s1 tc: total serum cholesterol
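One way to look at the dataset before modeling is to load it as a whole object and print a few of its parts. This is a small sketch that assumes you want the feature names and a handful of target values; the lesson's own code loads only X and y.

```python
from sklearn import datasets

# Load the full dataset object rather than just the (X, y) pair.
diabetes = datasets.load_diabetes()

print(diabetes.feature_names)   # names of the 10 feature columns
print(diabetes.data.shape)      # (number of samples, number of features)
print(diabetes.target[:5])      # first few values of the regression target
```

The `DESCR` attribute of the same object holds the dataset's full description, which is a useful place to check what each column means.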

✅ This dataset includes the concept of 'sex' as a feature variable important to research around diabetes. Many medical datasets include this type of binary classification. Think a bit about how categorizations such as this might exclude certain parts of a population from treatments.

Now, load up the X and y data.

🎓 Remember, this is supervised learning, and we need a named 'y' target.

- In a new cell, load the diabetes dataset as data and target (X and y, loaded as a tuple). X will be a data matrix, and y will be the regression target. Add some print commands to show the shape of the data matrix and its first element:

🎓 A tuple is an ordered, immutable sequence of elements.

✅ Think a bit about the relationship between the data and the regression target. Linear regression predicts relationships between feature X and target variable y. Can you find the target for the diabetes dataset in the documentation? What is this dataset demonstrating, given that target?

```python
X, y = datasets.load_diabetes(return_X_y=True)
print(X.shape)
print(X[0])
```

You can see that this data has 442 items shaped in arrays of 10 elements:

```output
(442, 10)
[ 0.03807591  0.05068012  0.06169621  0.02187235 -0.0442235  -0.03482076
 -0.04340085 -0.00259226  0.01990842 -0.01764613]
```

- Next, select a portion of this dataset to plot: pick out the column at index 2 and keep it as a two-dimensional array by using numpy's `newaxis` index object. We are going to use Linear Regression to generate a line between values in this data, according to a pattern it determines.

```python
X = X[:, np.newaxis, 2]
```

✅ At any time, print out the data to check its shape
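The effect of the indexing expression is easiest to see by comparing shapes. The sketch below is an illustration added for clarity; the variable names `flat` and `column` are hypothetical and do not appear in the lesson's notebook.

```python
import numpy as np
from sklearn import datasets

X, y = datasets.load_diabetes(return_X_y=True)

flat = X[:, 2]                 # plain column selection gives a 1-D array
column = X[:, np.newaxis, 2]   # newaxis keeps a second dimension of size 1

print(flat.shape)
print(column.shape)

# An equivalent way to keep the column two-dimensional is list indexing.
print(np.array_equal(column, X[:, [2]]))
```

Scikit-Learn estimators expect the feature matrix to be two-dimensional (samples by features), which is why the extra axis is kept even when only one feature is used.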

- Now that you have data ready to be plotted, you can see if a machine can help determine a linear relationship between the values in this dataset. To do this, you need to split both the data (X) and the target (y) into training and test sets. Scikit-Learn has a straightforward way to do this; you choose what fraction of the data to hold out for testing, here 33%.

```python
X_train, X_test, y_train, y_test = model_selection.train_test_split(X, y, test_size=0.33)
```
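Because the split shuffles the data randomly, each run of the cell above produces different training and test sets. If you want repeatable results, one option is to pass a fixed seed; the sketch below assumes the value 42, which is an arbitrary choice and not something the lesson prescribes.

```python
import numpy as np
from sklearn import datasets, model_selection

X, y = datasets.load_diabetes(return_X_y=True)
X = X[:, np.newaxis, 2]

# The same random_state yields the same shuffle, so both splits are identical.
split_a = model_selection.train_test_split(X, y, test_size=0.33, random_state=42)
split_b = model_selection.train_test_split(X, y, test_size=0.33, random_state=42)

X_train, X_test, y_train, y_test = split_a
print(X_train.shape, X_test.shape)
print(np.array_equal(split_a[1], split_b[1]))
```

A fixed seed is handy while learning, since plots and numbers stay the same between runs.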

- Now you are ready to train your model! Load up the Linear Regression model and train it with your X and y training sets:

✅ model.fit is a command you'll see in many ML libraries such as TensorFlow

```python
model = linear_model.LinearRegression()
model.fit(X_train, y_train)
```
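After fitting, the model stores the line it found as a slope and an intercept. The following sketch repeats the earlier steps so that it runs on its own, with a fixed `random_state` added as an assumption for repeatability, and checks that the model's predictions are just that straight line.

```python
import numpy as np
from sklearn import datasets, linear_model, model_selection

X, y = datasets.load_diabetes(return_X_y=True)
X = X[:, np.newaxis, 2]
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=0.33, random_state=0
)

model = linear_model.LinearRegression()
model.fit(X_train, y_train)

# With one feature, the fitted model is y = slope * x + intercept.
slope = model.coef_[0]
intercept = model.intercept_
print("slope:", slope)
print("intercept:", intercept)

# Recompute the predictions by hand and compare with model.predict.
manual = slope * X_test[:, 0] + intercept
print(np.allclose(manual, model.predict(X_test)))
```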

- Then, create a prediction using test data. This will be used to draw the regression line through the data points.

```python
y_pred = model.predict(X_test)
```

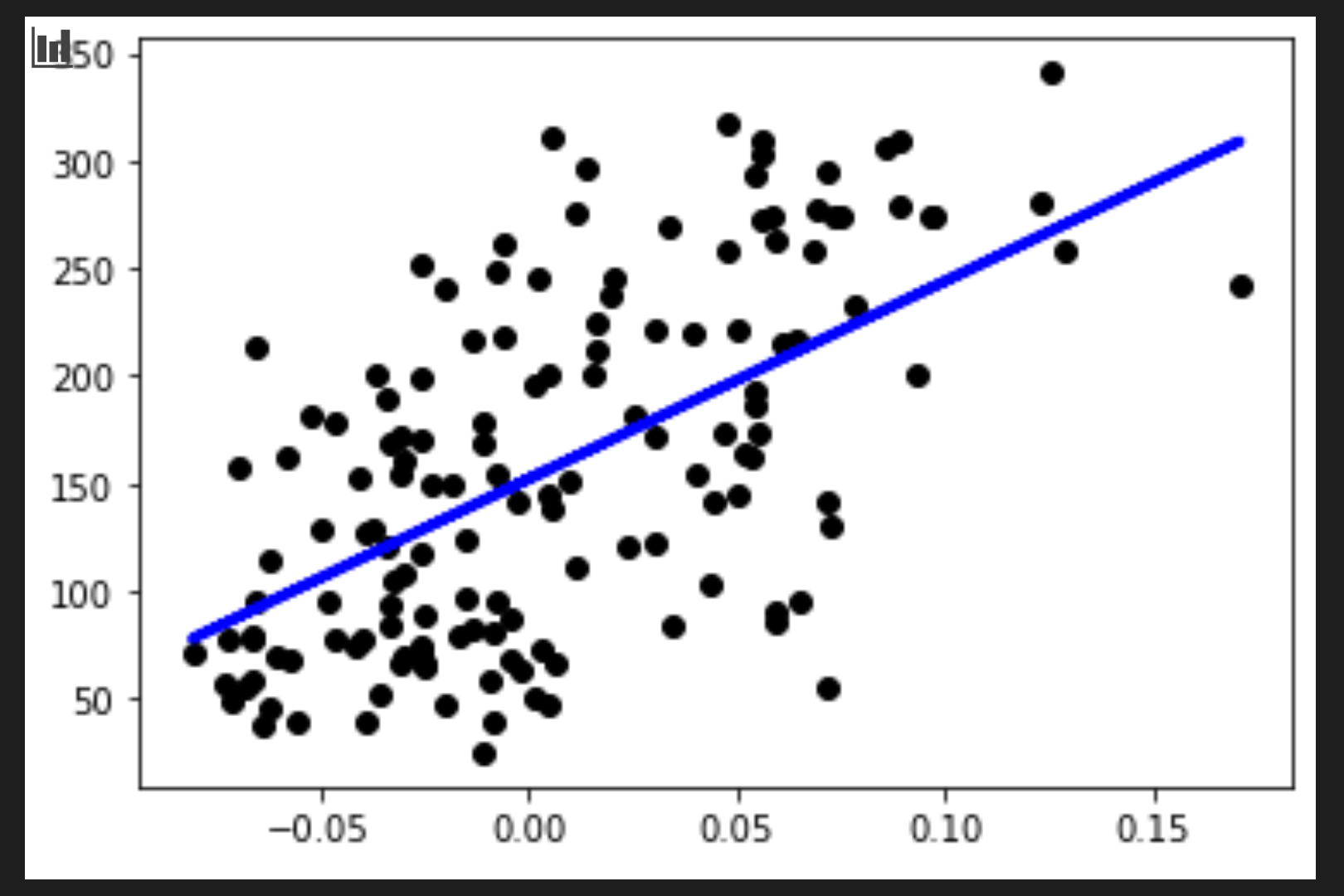

- Now it's time to show the data in a plot. Matplotlib is a very useful tool for this task. Create a scatterplot of all the X and y test data, and use the prediction to draw the line that best fits the data points.

```python
plt.scatter(X_test, y_test, color='black')
plt.plot(X_test, y_pred, color='blue', linewidth=3)
plt.show()
```

✅ Think a bit about what's going on here. A straight line is running through many small dots of data, but what is it doing exactly? Can you see how you should be able to use this line to predict where a new, unseen data point should fit in relationship to the plot's y axis? Try to put into words the practical use of this model.
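One practical use of the line is to estimate the target for a value the model has never seen, and to measure how far the line is from the held-out data. The sketch below is an illustration, not part of the lesson: the input value 0.05 is a hypothetical reading on the dataset's already scaled feature, and the two metrics shown are just common choices for regression.

```python
import numpy as np
from sklearn import datasets, linear_model, model_selection
from sklearn.metrics import mean_squared_error, r2_score

X, y = datasets.load_diabetes(return_X_y=True)
X = X[:, np.newaxis, 2]
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=0.33, random_state=0
)

model = linear_model.LinearRegression()
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# How well does the line describe data it was not trained on?
mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)
print("mean squared error:", mse)
print("R^2 score:", r2)

# Predict the target for one new, hypothetical feature value.
new_value = np.array([[0.05]])   # must be 2-D: one sample, one feature
new_prediction = model.predict(new_value)
print("prediction for new value:", new_prediction[0])
```

The prediction is simply the height of the line at the new x position, which is what the plot shows visually.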

Congratulations, you just built your first Linear Regression model, created a prediction with it, and displayed it in a plot!

🚀Challenge

Plot a different variable from this dataset. Hint: edit this line: X = X[:, np.newaxis, 2]. Given this dataset's target, what are you able to discover about the progression of diabetes as a disease?

Post-lecture quiz

Review & Self Study

In this tutorial, you worked with simple linear regression, which uses a single feature, rather than multiple linear regression, which uses several. Read a little about the differences between these methods, or take a look at this video.

Read more about the concept of Regression and think about what kinds of questions can be answered by this technique. Take this tutorial to deepen your understanding.

Assignment: A different dataset