
Data Ethics

Pre-Lecture Quiz 🎯

Pre-lecture quiz

Sketchnote 🖼

A Visual Guide to Data Ethics by Nitya Narasimhan / (@sketchthedocs)

1. Introduction

This lesson will look at the field of data ethics - from core concepts (ethical challenges & societal consequences) to applied ethics (ethical principles, practices and culture). Let's start with the basics: definitions and motivations.

1.1 Definitions

Ethics comes from the Greek word "ethikos" and its root "ethos". It refers to the set of shared values and moral principles that govern our behavior in society and is based on widely-accepted ideas of right vs. wrong. Ethics are not laws! They can't be legally enforced but they can influence corporate initiatives and government regulations that help with compliance and governance.

Data Ethics is defined as a new branch of ethics that "studies and evaluates moral problems related to data, algorithms and corresponding practices ... to formulate and support morally good solutions", where:

- data = generation, recording, curation, dissemination, sharing and usage

- algorithms = AI, machine learning, bots

- practices = responsible innovation, ethical hacking, codes of conduct

Applied Ethics is the practical application of moral considerations. It focuses on understanding how ethical issues impact real-world actions, products and processes by asking moral questions, like "is this fair?" and "how can this harm individuals or society as a whole?", when working with big data and AI algorithms. Applied ethics practices can then focus on taking corrective measures, like employing checklists ("did we test data model accuracy with diverse groups, for fairness?"), to minimize or prevent any unintended consequences.

Ethics Culture: Applied ethics focuses on identifying moral questions and adopting ethically-motivated actions with respect to real-world scenarios and projects. Ethics culture is about operationalizing these practices, collaboratively and at scale, to ensure governance across organizations and industries. Establishing an ethics culture requires identifying and addressing systemic issues (historical or ingrained) and creating norms & incentives that keep members accountable for adherence to ethical principles.

1.2 Motivation

Let's look at some emerging trends in big data and AI:

- By 2022 one-in-three large organizations will buy and sell data via online Marketplaces and Exchanges.

- By 2025 we'll be creating and consuming over 180 zettabytes of data.

Data scientists will have unimaginable levels of access to personal and behavioral data, helping them develop the algorithms to fuel an AI-driven economy. This raises data ethics issues around protection of data privacy with implications for individual rights around personal data collection and usage.

App developers will find it easier and cheaper to integrate AI into everyday consumer experiences, thanks to the economies of scale and efficiencies of distribution in centralized exchanges. This raises ethical issues around the weaponization of AI, with implications for societal harms caused by unfairness, misrepresentation and systemic biases.

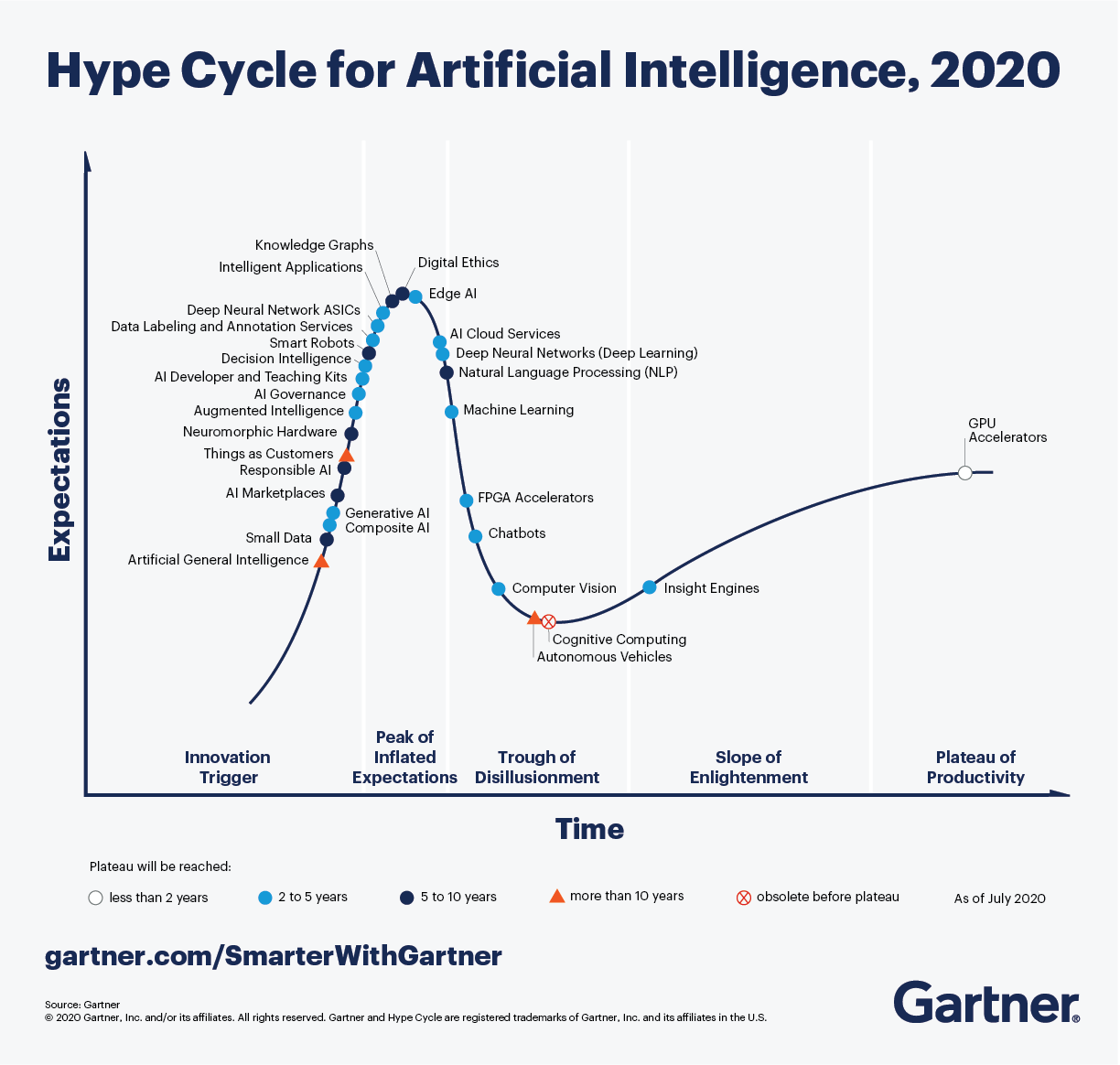

Democratization and Industrialization of AI are seen as the two megatrends in Gartner's 2020 Hype Cycle for AI, shown below. The first positions developers to be a major force in driving increased AI adoption, while the second makes responsible AI and governance a priority for industries.

Data ethics are now necessary guardrails ensuring developers ask the right moral questions and adopt the right practices (to uphold ethical values). And they influence the regulations and frameworks defined (for governance) by governments and organizations.

2. Core Concepts

A data ethics culture requires an understanding of three things: the shared values we embrace as a society, the moral questions we ask (to ensure adherence to those values), and the potential harms & consequences (of non-adherence).

2.1 Ethical AI Values

Our shared values reflect our ideas of wrong-vs-right when it comes to big data and AI. Different organizations have their own views of what responsible AI and ethical AI principles look like.

Here is an example - the Responsible AI Framework from Microsoft defines 6 core ethics principles for all products and processes to follow, when implementing AI solutions:

- Accountability: ensure AI designers & developers take responsibility for its operation.

- Transparency: make AI operations and decisions understandable to users.

- Fairness: understand biases and ensure AI behaves comparably across target groups.

- Reliability & Safety: make sure AI behaves consistently, and without malicious intent.

- Security & Privacy: get informed consent for data collection, provide data privacy controls.

- Inclusiveness: adapt AI behaviors to a broad range of human needs and capabilities.

Note that accountability and transparency are cross-cutting concerns that are foundational to the other 4 values, and can be explored in their contexts. In the next section we'll look at the ethical challenges (moral questions) raised in two core contexts:

- Data Privacy - focused on personal data collection & use, with consequences to individuals.

- Fairness - focused on algorithm design & use, with consequences to society at large.

2.2 Ethics of Personal Data

Personal data or personally-identifiable information (PII) is any data that relates to an identified or identifiable living individual. It can also extend to diverse pieces of non-personal data that collectively can lead to the identification of a specific individual. Examples include: participant data from research studies, social media interactions, mobile & web app data, online commerce transactions and more.
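The point that individually harmless attributes can combine to identify a person can be checked with k-anonymity: group records by their quasi-identifiers (attributes like ZIP code, birth year and gender) and find the smallest group. The sketch below is a minimal illustration under stated assumptions: the records, field names and helper functions are hypothetical, and k-anonymity is one common measure among several, not a technique this lesson prescribes.

```python
from collections import Counter

# Hypothetical records: no names or IDs, only attributes that look "non-personal".
records = [
    {"zip": "98052", "birth_year": 1985, "gender": "F", "movie": "A"},
    {"zip": "98052", "birth_year": 1985, "gender": "F", "movie": "B"},
    {"zip": "98052", "birth_year": 1990, "gender": "M", "movie": "A"},
    {"zip": "10001", "birth_year": 1972, "gender": "F", "movie": "C"},
]

# Attributes that are harmless alone but may identify someone in combination.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def group_sizes(rows, keys):
    """Count how many rows share each combination of the given keys."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys):
    """Smallest group size; k == 1 means at least one row is unique on these keys."""
    return min(group_sizes(rows, keys).values())


def unique_records(rows, keys):
    """Rows whose quasi-identifier combination appears only once (re-identification risk)."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


print(k_anonymity(records, QUASI_IDENTIFIERS))          # 1
print(len(unique_records(records, QUASI_IDENTIFIERS)))  # 2
```

A result of k = 1 means someone holding another dataset with the same attributes could link a "de-identified" row back to a person, which is the same kind of linkage that de-anonymization attacks rely on.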

Here are some ethical concepts and moral questions to explore in context:

- Data Ownership. Who owns the data - user or organization? How does this impact users' rights?

- Informed Consent. Did users give permission for data capture? Did they understand the purpose?

- Intellectual Property. Does data have economic value? What are the users' rights & controls?

- Data Privacy. Is data secured from hacks/leaks? Is anonymity preserved on data use or sharing?

- Right to be Forgotten. Can users request that their data be deleted or removed to reclaim privacy?
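Two of these questions, informed consent and the right to be forgotten, translate fairly directly into data-handling rules: record what the user agreed to, refuse uses outside that agreement, and support deletion on request. The following is a minimal in-memory sketch, assuming hypothetical class and method names (`ConsentAwareStore`, `collect`, `use`, `forget`); it is not a reference implementation of any regulation.

```python
from dataclasses import dataclass, field


@dataclass
class UserRecord:
    user_id: str
    data: dict
    # Purposes the user explicitly agreed to when the data was collected.
    consented_purposes: set = field(default_factory=set)


class ConsentAwareStore:
    """Hypothetical in-memory store illustrating consent checks and erasure."""

    def __init__(self):
        self._records = {}

    def collect(self, user_id, data, purposes):
        # Informed consent: keep the data together with the purposes agreed to.
        self._records[user_id] = UserRecord(user_id, dict(data), set(purposes))

    def use(self, user_id, purpose):
        # Refuse any use the user did not consent to.
        record = self._records.get(user_id)
        if record is None:
            raise KeyError(f"no data held for {user_id}")
        if purpose not in record.consented_purposes:
            raise PermissionError(f"{user_id} did not consent to {purpose!r}")
        return dict(record.data)

    def forget(self, user_id):
        # Right to be forgotten: delete the record; True if something was removed.
        return self._records.pop(user_id, None) is not None


store = ConsentAwareStore()
store.collect("u1", {"email": "u1@example.com"}, purposes={"billing"})
print(store.use("u1", "billing"))  # {'email': 'u1@example.com'}
try:
    store.use("u1", "marketing")
except PermissionError as err:
    print(err)  # u1 did not consent to 'marketing'
print(store.forget("u1"))  # True
```

In a real system, erasure would also have to reach copies in backups, logs, analytics pipelines and data shared with third parties, which a single in-memory dictionary does not model.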

2.3 Ethics of Algorithms

Algorithm design begins with collecting & curating datasets relevant to a specific AI problem or domain, then processing & analyzing them to create models that can help predict outcomes or automate decisions in real-world applications. Moral questions can arise in various contexts, at any one of these stages.

Here are some ethical concepts and moral questions to explore in context:

- Dataset Bias - Is data representative of target audience? Have we checked for different data biases?

- Data Quality - Does dataset and feature selection provide the required data quality assurance?

- Algorithm Fairness - Does the data model systematically discriminate against some subgroups?

- Misrepresentation - Are we communicating honestly reported data in a deceptive manner?

- Explainable AI - Are the results of AI understandable by humans? White-box (vs. black-box) models.

- Free Choice - Did the user exercise free will, or did the algorithm nudge them towards a desired outcome?
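Questions about dataset bias and algorithm fairness can be partly answered by disaggregated evaluation: computing a metric separately for each group instead of only overall, the kind of per-group comparison the MIT Gender Shades study made for gender classifiers. This minimal sketch uses made-up labels, predictions and group names, and the function names are hypothetical; accuracy is only one of many fairness-relevant metrics.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so performance gaps between subgroups are visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_gap(scores):
    """Difference between the best- and worst-served groups."""
    return max(scores.values()) - min(scores.values())


# Hypothetical data for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
scores = accuracy_by_group(y_true, y_pred, groups)
print(overall)          # 0.625
print(scores)           # {'A': 1.0, 'B': 0.25}
print(max_gap(scores))  # 0.75
```

The overall accuracy of 0.625 hides the fact that the model is right for every member of group A but only one in four members of group B, which is exactly the gap a single aggregate number can conceal.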

2.4 Case Studies

The above are a subset of the core ethical challenges posed for big data and AI. More organizations are defining and adopting responsible AI or ethical AI frameworks that may identify additional shared values and related ethical challenges for specific domains or needs.

To understand the potential harms and consequences of neglecting or violating these data ethics principles, it helps to explore this in a real-world context. Here are some famous case studies and recent examples to get you started:

- 1972: The Tuskegee Syphilis Study is a landmark case study for informed consent in data science. African American men who participated in the study were promised free medical care but were deceived by researchers who failed to inform subjects of their diagnosis or about the availability of treatment. Many subjects died; some partners or children were affected by complications. The study lasted 40 years.

- 2007: The Netflix data prize provided researchers with over 100M anonymized movie ratings from about 480K customers to help improve recommendation algorithms. This became a landmark case study in de-identification (data privacy), where researchers were able to correlate the anonymized data with other datasets (e.g., IMDb) that had personally identifiable information, helping them "de-anonymize" users.

- 2013: The City of Boston developed Street Bump, an app that let citizens report potholes, giving the city better roadway data to find and fix issues. This became a case study for collection bias, where people in lower income groups had less access to cars and phones, making their roadway issues invisible in this app. Developers worked with academics to address equitable access and digital divide issues for fairness.

- 2018: The MIT Gender Shades Study evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. In 2019, the Apple Card seemed to offer less credit to women than to men. Both illustrated issues in algorithmic fairness and discrimination.

- 2020: The Georgia Department of Public Health released COVID-19 charts that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates data misrepresentation, where honest data is presented dishonestly to support a desired narrative.

- 2020: Learning app ABCmouse paid $10M to settle an FTC complaint in which parents were trapped into paying for subscriptions they couldn't cancel. This highlights the illusion of free choice in algorithmic decision-making, and potential harms from dark patterns that exploit user insights.

- 2021: A Facebook data breach exposed data from 530M users. Facebook, which had already paid a $5B FTC penalty in 2019 over earlier privacy violations, refused to notify users of the breach, raising issues of data privacy, data security and accountability, including the right of affected users to redress.

Want to explore more case studies on your own? Check out these resources:

- Ethics Unwrapped - ethics dilemmas across diverse industries.

- Data Science Ethics course - landmark case studies in data ethics.

- Where things have gone wrong - deon checklist examples of ethical issues

3. Applied Ethics

We've learned about data ethics values, and the ethical challenges (+ moral questions) associated with adherence to these values. But how do we implement these ideas in real-world contexts? Here are some tools & practices that can help.

3.1 Have Professional Codes

Professional codes are moral guidelines for professional behavior, helping employees or members make decisions that align with organizational principles. Codes may not be legally enforceable, making them only as good as the willing compliance of members. An organization may inspire adherence by imposing incentives & penalties accordingly.

Professional codes of conduct are prescriptive rules and responsibilities that members must follow to remain in good standing with an organization. A professional code of ethics is more aspirational, defining the shared values and ideas of the organization. The terms are sometimes used interchangeably.

Examples include:

- Oxford Munich Code of Ethics

- Data Science Association Code of Conduct (created 2013)

- ACM Code of Ethics and Professional Conduct (since 1993)

3.2 Ask Moral Questions

Assuming you've already identified your shared values or ethical principles at a team or organization level, the next step is to identify the moral questions relevant to your specific use case and operational workflow.

Here are 6 basic questions about data ethics that you can build on:

- Is the data you're collecting fair and unbiased?

- Is the data being used ethically and fairly?

- Is user privacy being protected?

- To whom does data belong - the company or the user?

- What effects do the data and algorithms have on society (individual and collective)?

- Is the data manipulated or deceptive?

For larger teams or project scopes, you can choose to expand on questions that reflect a specific stage of the workflow. For example, here are 22 questions on ethics in data and AI, grouped into design, implementation & management, and systems & organization categories for convenience.

3.3 Adopt Ethics Checklists

While professional codes define required ethical behavior from practitioners, they have known limitations for implementation, particularly in large-scale projects. In Ethics and Data Science, experts instead advocate for ethics checklists that can connect principles to practices in more deterministic and actionable ways.

Checklists convert questions into "yes/no" tasks that can be tracked and validated before product release. Tools like deon make this frictionless, creating default checklists aligned to industry recommendations and enabling users to customize and integrate them into workflows using a command-line tool. Deon also provides real-world examples of ethical challenges to provide context for these decisions.
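The idea of turning moral questions into "yes/no" items that gate a release can be sketched in a few lines. This sketch does not use deon or reproduce its output format; the `ChecklistItem` class, the `release_gate` function and the item wording are hypothetical, loosely paraphrasing questions from this lesson.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    section: str
    question: str
    done: bool = False


# Hypothetical items, paraphrasing moral questions raised earlier in this lesson.
checklist = [
    ChecklistItem("Data collection", "Did users give informed consent for data capture?"),
    ChecklistItem("Data storage", "Is personal data secured and access-controlled?"),
    ChecklistItem("Modeling", "Did we test model accuracy across diverse groups for fairness?"),
    ChecklistItem("Deployment", "Can users request that their data be deleted?"),
]


def outstanding(items):
    """Return the items not yet answered 'yes'."""
    return [item for item in items if not item.done]


def release_gate(items):
    """Print any open items and return True only when every item is done."""
    pending = outstanding(items)
    for item in pending:
        print(f"[ ] {item.section}: {item.question}")
    return not pending


checklist[0].done = True
checklist[1].done = True
print(release_gate(checklist))  # prints the two open items, then False
```

Wiring a check like this into a build or review step is one way to make the checklist tracked and validated before release rather than aspirational; the judgment behind each "yes" still has to come from the team.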

3.4 Track Ethics Compliance

Ethics is about doing the right thing, even if there are no laws to enforce it. Compliance is about following the law, when defined and where applicable. Governance is the broader umbrella that covers all the ways in which an organization (company or government) operates to enforce ethical principles & comply with laws.

Companies are creating their own ethics frameworks (e.g., Microsoft, IBM, Google, Facebook, Accenture) for governance, while state and national governments tend to focus on regulations that protect the data privacy and rights of their citizens.

Here are some landmark data privacy regulations to know:

- 1974, US Privacy Act - regulates federal government collection, use and disclosure of personal information.

- 1996, US Health Insurance Portability & Accountability Act (HIPAA) - protects personal health data.

- 1998, US Children's Online Privacy Protection Act (COPPA) - protects the data privacy of children under 13.

- 2018, EU General Data Protection Regulation (GDPR) - provides user rights, data protection and privacy.

- 2018, California Consumer Privacy Act (CCPA) - gives consumers more rights over their personal data.

In Aug 2021, China passed the Personal Information Protection Law (effective Nov 1, 2021) which, together with its Data Security Law, will create one of the strongest online data privacy regulations in the world.

3.5 Establish Ethics Culture

There remains an intangible gap between compliance ("doing enough to meet the letter of the law") and addressing systemic issues (like ossification, information asymmetry and distributional unfairness) that can create self-fulfilling feedback loops that weaponize AI further. This is motivating calls for formalizing data ethics cultures in organizations, where everyone is empowered to [pull the Andon cord](https://en.wikipedia.org/wiki/Andon_(manufacturing)) to raise ethics concerns early, and for exploring collaborative approaches to defining this culture that build emotional connections and consistent beliefs across organizations and industries.

Challenge 🚀

Post-Lecture Quiz 🎯

Post-lecture quiz