diff --git a/1-Introduction/02-ethics/1-fundamentals.md b/1-Introduction/02-ethics/1-fundamentals.md

deleted file mode 100644

index f86dccac..00000000

--- a/1-Introduction/02-ethics/1-fundamentals.md

+++ /dev/null

@@ -1,109 +0,0 @@

-

-## 1. Ethics Fundamentals

-[Back To Introduction](README.md#introduction)

-

-### 1.1 What is Ethics?

-

-The term "ethics" [comes from](https://en.wikipedia.org/wiki/Ethics) the Greek term "ethikos" - and its root "ethos", meaning _character or moral nature_. Think of ethics as the set of **shared values** or **moral principles** that govern our behavior in society. Our code of ethics is based on widely accepted ideas on what is _right vs. wrong_, creating informal rules (or "norms") that we follow voluntarily to ensure the good of the community.

-

-Ethics is critical for scientific research and technology advancement. The [Research Ethics timeline](https://www.niehs.nih.gov/research/resources/bioethics/timeline/index.cfm) gives examples from the past four centuries - including Charles Babbage's 1830 [Reflections on the Decline of Science in England ..](https://books.google.com/books/about/Reflections_on_the_Decline_of_Science_in.html) where he discusses dishonesty in data science approaches including fabrication of data to support desired outcomes. Ethics became _guardrails_ to prevent data misuse and protect society from unintended consequences or harms.

-

-_Applied Ethics_ is about the practical adoption of ethical principles and practices when developing new processes or products. It's about asking moral questions ("is this right or wrong?"), evaluating tradeoffs ("does this help or harm society more?") and taking informed actions to ensure compliance at individual and organizational levels. Ethics are *not* laws. But they can influence the creation of legal or social frameworks that support governance such as:

-

- * **Professional codes of conduct.** | For users or groups e.g., The [Hippocratic Oath](https://en.wikipedia.org/wiki/Hippocratic_Oath) (460-370 BC) for medical ethics defined principles like data confidentiality (led to _doctor-patient privilege_ laws) and non-maleficence (popularly known as _first, do no harm_) that are still adopted today.

- * **Regulatory standards** | For organizations or industries e.g., The [1996 Health Insurance Portability and Accountability Act](https://en.wikipedia.org/wiki/Health_Insurance_Portability_and_Accountability_Act) (HIPAA) mandated theft and fraud protections for _personally identifiable information_ (PII) collected by the healthcare industry - and stipulated how that data could be used or disclosed.

-

-### 1.2 What is Data Ethics?

-

-Data ethics is the application of ethics considerations to the domain of big data and data-driven algorithms.

-

- * [Wikipedia](https://en.wikipedia.org/wiki/Big_data_ethics) defines big data ethics as _systemizing, defending, and recommending, concepts of right and wrong conduct in relation to data_ - focusing on implications for **personal data**.

- * A [Royal Society article](https://royalsocietypublishing.org/doi/full/10.1098/rsta.2016.0360#sec-1) defines data ethics as a new branch of ethics that _studies and evaluates moral problems related to **data, algorithms and corresponding practices** .. to formulate and support morally good solutions (right conduct or values)_.

-

-The first definition puts it in perspective of users ("personal data") while the second puts it in perspective of operations ("data, algorithms, practices") where:

-

-* `data` = generation, recording, curation, dissemination, sharing & usage

-* `algorithms` = AI, agents, machine learning, bots

-* `practices` = responsible innovation, ethical hacking, codes of conduct

-

-Based on this, we can define data ethics as the study and evaluation of _moral questions_ related to data collection, algorithm development, and industry-wide models for governance. We'll explore these questions in the "how" section, but first let's talk about the "why".

-

-### 1.3 Why Data Ethics?

-

-To answer this question, let's look at recent trends in the big data and AI industries:

-

- * [_Statista_](https://www.statista.com/statistics/871513/worldwide-data-created/) - By 2025, we will be creating and consuming over **180 zettabytes of data**.

- * _[Gartner](https://www.gartner.com/smarterwithgartner/gartner-top-10-trends-in-data-and-analytics-for-2020/)_ - By 2022, 35% of large orgs will buy & sell data in **online Marketplaces and Exchanges**

- * _[Gartner](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/)_ - AI **democratization and industrialization** are the new Hype Cycle megatrends.

-

-The first trend tells us that _data scientists_ will have unprecedented levels of access to personal data at global scale, building algorithms to fuel an AI-driven economy. The second trend tells us that economies of scale and efficiencies in distribution will make it easier and cheaper for _developers_ to integrate AI into more everyday consumer experiences.

-

-The potential for harm occurs when algorithms and AI get _weaponized_ against society in unforeseen ways. In [Weapons of Math Destruction](https://www.youtube.com/watch?v=TQHs8SA1qpk) author Cathy O'Neil talks about the three elements of AI algorithms that pose a danger to society: _opacity_, _scale_ and _damage_.

-

- * **Opacity** refers to the black box nature of many algorithms - do we understand why a specific decision was made, and can we _explain or interpret_ the data reasoning that drove the predictions behind it?

- * **Scale** refers to the speed with which algorithms can be deployed and replicated - how quickly can a minor algorithm design flaw get "baked in" with use, leading to irreversible societal harms to affected users?

- * **Damage** refers to the social and economic impact of poor algorithmic decision-making - how can bad or unrepresentative data lead to unfair algorithms that disproportionately harm specific user groups?

-

-So why does data ethics matter? Because democratization of AI can speed up weaponization, creating harms at scale in the absence of ethical guardrails. While industrialization of AI will motivate better governance - giving data ethics an important role in shaping policies and standards for developing responsible AI solutions.

-

-

-### 1.4 How To Apply Ethics?

-

-We know what data ethics is, and why it matters. But how do we _apply_ ethical principles or practices as data scientists or developers? It starts with us asking the right questions at every step of our data-driven pipelines and processes. These [Six questions about data science ethics](https://halpert3.medium.com/six-questions-about-data-science-ethics-252b5ae31fec) are a good starting point:

-

- 1. Is the data fair and unbiased?

- 2. Is the data being used fairly and ethically?

- 3. Is (user) privacy being protected?

- 4. To whom does data belong, company or user?

- 5. What effects do data and algorithms have on society?

- 6. Is the data manipulated or deceiving?

-

-The [22 questions for ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429) article expands this into a framework, grouping questions by stage of processing: _design_, _implementation & management_, _systems & organization_. The [O'Reilly Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964/) book advocates strongly for _checklists_, asking simple `have we done this? (y/n)` questions that improve ethics oversight without the overheads caused by analysis paralysis.

-

-And tools like [deon](https://deon.drivendata.org/)

-make it frictionless to integrate [ethics checklists](https://deon.drivendata.org/#data-science-ethics-checklist) into your project workflows. Deon builds on [industry practices](https://deon.drivendata.org/#checklist-citations), shares [real-world examples](https://deon.drivendata.org/examples/) that put the ethical challenges in context, and allows practitioners to derive custom checklists from the defaults, to suit specific scenarios or industries.

-

-### 1.5 Ethics Concepts

-

-Ethics checklists often revolve around yes/no questions related to core ethics concepts and challenges. Let's look at _a subset_ of these issues - inspired in part by the [deon ethics checklist](https://deon.drivendata.org/#data-science-ethics-checklist) - in two contexts: data (collection and storage) and algorithms (analysis and modeling).

-

-**Data Collection & Storage**

- * _Ownership_: Does the user own the data? Or the organization? Is there an agreement that defines this?

- * _Informed Consent_: Did human subjects give permission for data capture & understand purpose/usage?

- * _Collection Bias_: Is data representative of audience? Did we identify and mitigate biases?

- * _Data Security_: Is data stored and transmitted securely? Are valid access controls enforced?

- * _Data Privacy_: Does data contain personally identifiable information? Is anonymity preserved?

- * _Right to be Forgotten_: Does user have mechanism to request deletion of their personal information?

-

-**Data Modeling & Analysis**

-

- * _Data Validity_: Does data capture relevant features? Is it timeless? Is the data model valid?

- * _Misrepresentation_: Does analysis communicate honestly reported data in a deceptive manner?

- * _Auditability_: Is the data analysis or algorithm design documented well enough to be reproducible later?

- * _Explainability_: Can we explain why the data model or learning algorithm made a specific decision?

- * _Fairness_: Is the model fair (e.g., shows similar accuracy) across diverse groups of affected users?

-

-Finally, let's talk about two abstract concepts that often underlie users' ethics concerns around technology:

-

- * **Trust**: Can we trust an organization with our personal data? Can we trust that algorithmic decisions are fair and do no harm? Can we trust that information is not misrepresented?

- * **Choice**: Do I have free will when I make a choice in a consumer UI/UX? Are data-driven [choice architectures](https://en.wikipedia.org/wiki/Choice_architecture) nudging me towards good choices or are [dark patterns](https://www.darkpatterns.org/) working against my self-interest?

-

-

-### 1.6 Ethics History

-

-Knowing ethics concepts is one thing - understanding the intent behind them, and the potential harms or societal consequences they bring, is another. Let's look at some case studies that help frame ethics discussions in a more concrete way with real-world examples.

-

-| Historical Example | Ethics Issues |

-|---|---|

-| _[Facebook Data Breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users)_ exposes data for 530M users. Facebook pays $5B to FTC, does not notify users. | Data Privacy, Data Security, Transparency, Accountability |

-| [Tuskegee Syphillis Study](https://en.wikipedia.org/wiki/Tuskegee_syphilis_experiment) - African-American men were enrolled in study without being told its true purpose. Treatments were withheld. | Informed Consent, Fairness, Social / Economic Harms |

-| [MIT Gender Shades Study](http://gendershades.org/index.html) - evaluated accuracy of industry AI gender classification models (used by law enforcement), detected bias | Fairness, Social/ Economic Harms, Collection Bias |

-| [Learning app ABCmouse pays $10 million to settle FTC complaint it trapped parents in subscription they couldn’t cancel](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) - user experience masked context, nudged user towards choices with financial harms| Misrepresentation, Free Choice, Dark Patterns, Economic Harms |

-| [Netflix Prize Dataset de-anonymized by correlation](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) - showed how Netflix prize dataset of 500M users was easily de-anonymized by cross-correlation with public IMDb comments (and other such datasets) | Data Privacy, Anonymity, De-identification |

-| [Georgia COVID-19 cases not declining as quickly as data suggested](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) - graphs released had x-axis not ordered chronologically, misleading viewers| Misrepresentation, Social Harms |

-

-We covered just a subset of examples, but recommend you explore these resources for more:

-

- * [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - ethics dilemmas across diverse industries.

- * [Data Science Ethics course](https://www.coursera.org/learn/data-science-ethics#syllabus) - landmark case studies in data ethics.

- * [Where things have gone wrong](https://deon.drivendata.org/examples/) - deon checklist examples of ethical issues

diff --git a/1-Introduction/02-ethics/2-collection.md b/1-Introduction/02-ethics/2-collection.md

deleted file mode 100644

index 323b3087..00000000

--- a/1-Introduction/02-ethics/2-collection.md

+++ /dev/null

@@ -1,3 +0,0 @@

-

-## 2. Data Collection

-[Back To Introduction](README.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/3-privacy.md b/1-Introduction/02-ethics/3-privacy.md

deleted file mode 100644

index 93b6e22d..00000000

--- a/1-Introduction/02-ethics/3-privacy.md

+++ /dev/null

@@ -1,3 +0,0 @@

-

-## 3. Data Privacy

-[Back To Introduction](README.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/4-fairness.md b/1-Introduction/02-ethics/4-fairness.md

deleted file mode 100644

index edd8bf2b..00000000

--- a/1-Introduction/02-ethics/4-fairness.md

+++ /dev/null

@@ -1,3 +0,0 @@

-

-## 4. Algorithm Fairness

-[Back To Introduction](README.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/5-consequences.md b/1-Introduction/02-ethics/5-consequences.md

deleted file mode 100644

index 634f3ee5..00000000

--- a/1-Introduction/02-ethics/5-consequences.md

+++ /dev/null

@@ -1,3 +0,0 @@

-

-## 5. Societal Consequences

-[Back To Introduction](README.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/6-summary.md b/1-Introduction/02-ethics/6-summary.md

deleted file mode 100644

index 8837cd43..00000000

--- a/1-Introduction/02-ethics/6-summary.md

+++ /dev/null

@@ -1,3 +0,0 @@

-

-## 6. Summary & Resources

-[Back To Introduction](README.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/README.md b/1-Introduction/02-ethics/README.md

index b59130ea..a2eda93c 100644

--- a/1-Introduction/02-ethics/README.md

+++ b/1-Introduction/02-ethics/README.md

@@ -6,35 +6,174 @@

## Sketchnote 🖼

-| A Visual Guide to Data Ethics by [Nitya Narasimhan](https://twitter.com/nitya) / [(@sketchthedocs)](https://sketchthedocs.dev)|

-|---|

-|

|

+> A Visual Guide to Data Ethics by [Nitya Narasimhan](https://twitter.com/nitya) / [(@sketchthedocs)](https://sketchthedocs.dev)

-## Introduction

+## 1. Introduction

-What is ethics? What does data ethics mean, and how is it relevant to data scientists and developers in the context of big data, machine learning, and artificial intelligence? This lesson explores these ideas under the following sections:

+This lesson will look at the field of _data ethics_ - from core concepts (ethical challenges & societal consequences) to applied ethics (ethical principles, practices and culture). Let's start with the basics: definitions and motivations.

- * [**Fundamentals**](1-fundamentals) - Understand definitions, motivation and core concepts.

- * [**Data Collection**](2-collection) - Explore data ethics issues around data ownership, user consent and control.

- * [**Data Privacy**](3-privacy) - Understand degrees of privacy, challenges in anonymity and leakage, and user rights.

- * [**Algorithm Fairness**](4-fairness) - Explore consequences & harms of algorithm bias and data misrepresentation.

- * [**Societal Consequences**](5-consequences) - Explore socio-economic issues and case studies related to data ethics.

- * [**Summary & Resources**](6-summary) - Wrap-up with a review of current data ethics practices and resources.

+### 1.1 Definitions

----

+**Ethics** [comes from the Greek word "ethikos" and its root "ethos"](https://en.wikipedia.org/wiki/Ethics). It refers to the set of _shared values and moral principles_ that govern our behavior in society and is based on widely accepted ideas of _right vs. wrong_. Ethics are not laws! They can't be legally enforced, but they can influence corporate initiatives and government regulations that help with compliance and governance.

+

+**Data Ethics** is [defined as a new branch of ethics](https://royalsocietypublishing.org/doi/full/10.1098/rsta.2016.0360#sec-1) that "studies and evaluates moral problems related to _data, algorithms and corresponding practices_ ... to formulate and support morally good solutions", where:

+ * `data` = generation, recording, curation, dissemination, sharing and usage

+ * `algorithms` = AI, machine learning, bots

+ * `practices` = responsible innovation, ethical hacking, codes of conduct

+

+**Applied Ethics** is the [_practical application of moral considerations_](https://en.wikipedia.org/wiki/Applied_ethics). It focuses on understanding how ethical issues impact real-world actions, products and processes by asking moral questions, like _"is this fair?"_ and _"how can this harm individuals or society as a whole?"_, when working with big data and AI algorithms. Applied ethics practices can then focus on taking corrective measures, like employing checklists (_"did we test data model accuracy with diverse groups, for fairness?"_), to minimize or prevent any unintended consequences.

+

+**Ethics Culture**: Applied ethics focuses on identifying moral questions and adopting ethically motivated actions with respect to real-world scenarios and projects. Ethics culture is about _operationalizing_ these practices, collaboratively and at scale, to ensure governance across organizations and industries. [Establishing an ethics culture](https://hbr.org/2019/05/how-to-design-an-ethical-organization) requires identifying and addressing _systemic_ issues (historical or ingrained) and creating norms & incentives that keep members accountable for adherence to ethical principles.

+

+

+### 1.2 Motivation

+

+Let's look at some emerging trends in big data and AI:

+

+ * [By 2022](https://www.gartner.com/smarterwithgartner/gartner-top-10-trends-in-data-and-analytics-for-2020/) one-in-three large organizations will buy and sell data via online Marketplaces and Exchanges.

+ * [By 2025](https://www.statista.com/statistics/871513/worldwide-data-created/) we'll be creating and consuming over 180 zettabytes of data.

+

+**Data scientists** will have unprecedented access to personal and behavioral data, helping them develop the algorithms that fuel an AI-driven economy. This raises data ethics issues around _protection of data privacy_, with implications for individual rights around personal data collection and usage.

+

+**App developers** will find it easier and cheaper to integrate AI into everyday consumer experiences, thanks to the economies of scale and efficiencies of distribution in centralized exchanges. This raises ethical issues around the [_weaponization of AI_](https://www.youtube.com/watch?v=TQHs8SA1qpk), with implications for societal harms caused by unfairness, misrepresentation and systemic biases.

+

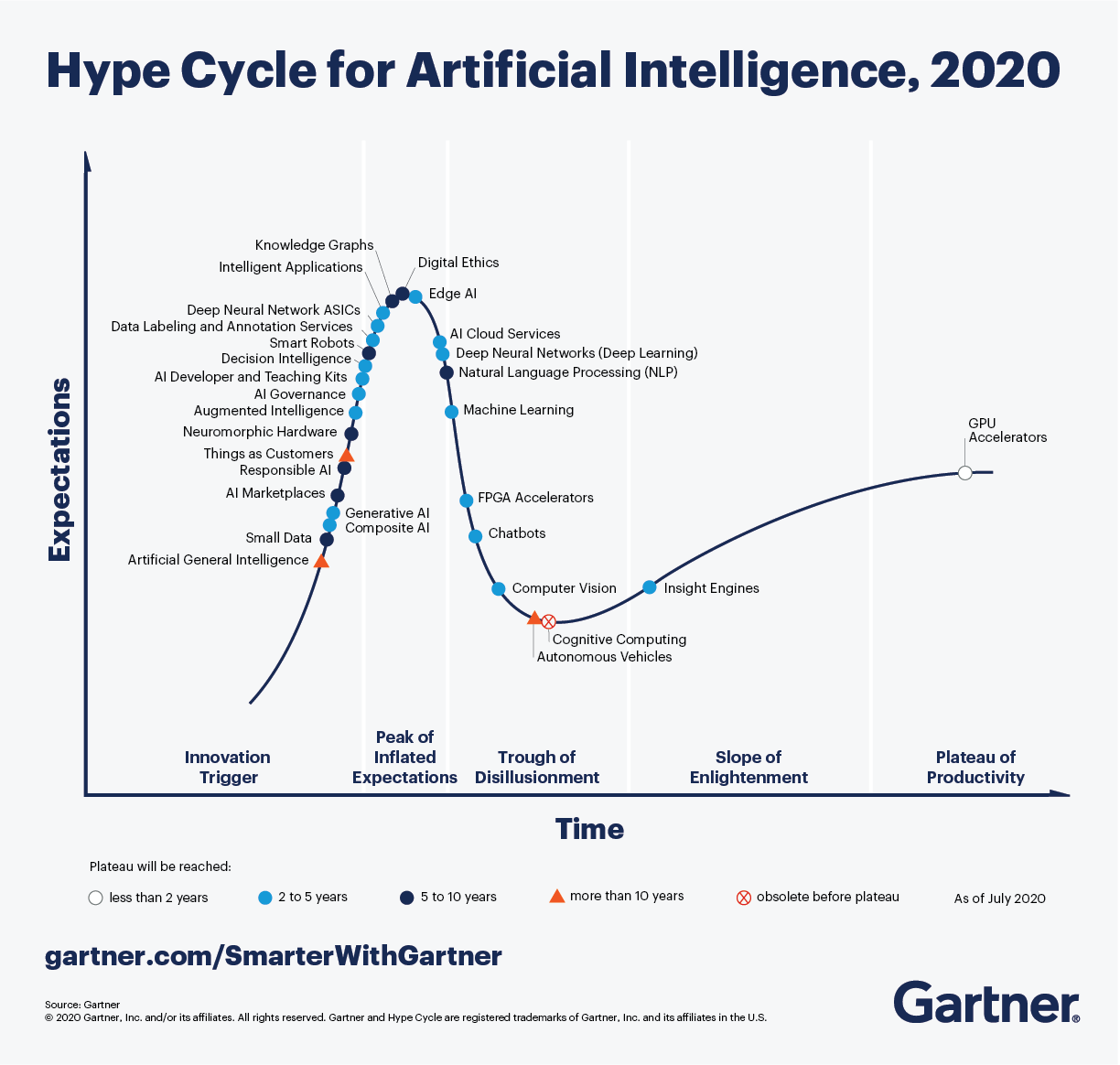

+**Democratization and Industrialization of AI** are seen as the two megatrends in Gartner's 2020 [Hype Cycle for AI](https://www.gartner.com/smarterwithgartner/2-megatrends-dominate-the-gartner-hype-cycle-for-artificial-intelligence-2020/), shown below. The first positions developers as a major force in driving increased AI adoption, while the second makes responsible AI and governance a priority for industries.

+

+

+

+

+Data ethics now provide **necessary guardrails**, ensuring developers ask the right moral questions and adopt the right practices to uphold ethical values. They also influence the regulations and frameworks that governments and organizations define for governance.

+

+

+## 2. Core Concepts

+

+A data ethics culture requires an understanding of three things: the _shared values_ we embrace as a society, the _moral questions_ we ask (to ensure adherence to those values), and the potential _harms & consequences_ (of non-adherence).

+

+### 2.1 Ethical AI Values

+

+Our shared values reflect our ideas of right vs. wrong when it comes to big data and AI. Different organizations have their own views of what responsible AI and ethical AI principles look like.

+

+For example, Microsoft's [Responsible AI Framework](https://docs.microsoft.com/en-gb/azure/cognitive-services/personalizer/media/ethics-and-responsible-use/ai-values-future-computed.png) defines six core ethics principles that all products and processes should follow when implementing AI solutions:

+

+ * **Accountability**: ensure AI designers & developers take _responsibility_ for its operation.

+ * **Transparency**: make AI operations and decisions _understandable_ to users.

+ * **Fairness**: understand biases and ensure AI _behaves comparably_ across target groups.

+ * **Reliability & Safety**: make sure AI behaves consistently, and _without malicious intent_.

+ * **Security & Privacy**: get _informed consent_ for data collection, provide data privacy controls.

+ * **Inclusiveness**: adapt AI behaviors to a _broad range of human needs_ and capabilities.

+

+

+

+Note that accountability and transparency are _cross-cutting_ concerns that are foundational to the other four values, and can be explored in each of their contexts. In the next sections we'll look at the ethical challenges (moral questions) raised in two core contexts:

+

+ * Data Privacy - focused on **personal data** collection & use, with consequences to individuals.

+ * Fairness - focused on **algorithm** design & use, with consequences to society at large.

+

+### 2.2 Ethics of Personal Data

+

+[Personal data](https://en.wikipedia.org/wiki/Personal_data) or personally identifiable information (PII) is _any data that relates to an identified or identifiable living individual_. It can also [extend to diverse pieces of non-personal data](https://ec.europa.eu/info/law/law-topic/data-protection/reform/what-personal-data_en) that collectively can lead to the identification of a specific individual. Examples include: participant data from research studies, social media interactions, mobile & web app data, online commerce transactions and more.
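+
+To make the "collectively identifiable" idea concrete, here is a minimal Python sketch of a _linkage attack_, assuming two hypothetical toy datasets: a "de-identified" one with names removed, and a public one (such as a voter roll) that shares the same quasi-identifiers (zip code, birth year, gender). The field names, people and the `link` helper are illustrative only. Any record whose quasi-identifiers match exactly one person in the public data is effectively re-identified.
+
+```python
+# Hypothetical toy data: a "de-identified" dataset (names removed) ...
+deidentified = [
+    {"zip": "02139", "birth_year": 1985, "gender": "F", "diagnosis": "asthma"},
+    {"zip": "02139", "birth_year": 1990, "gender": "M", "diagnosis": "diabetes"},
+    {"zip": "98052", "birth_year": 1985, "gender": "F", "diagnosis": "flu"},
+]
+# ... and a hypothetical public dataset that shares the same quasi-identifiers.
+public = [
+    {"name": "Alice", "zip": "02139", "birth_year": 1985, "gender": "F"},
+    {"name": "Bob", "zip": "98052", "birth_year": 1972, "gender": "M"},
+]
+
+QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")
+
+
+def key(record):
+    """Build the quasi-identifier tuple used to join the two datasets."""
+    return tuple(record[field] for field in QUASI_IDENTIFIERS)
+
+
+def link(deidentified, public):
+    """Return (name, diagnosis) pairs where the quasi-identifiers match exactly one person."""
+    index = {}
+    for person in public:
+        index.setdefault(key(person), []).append(person["name"])
+    matches = []
+    for record in deidentified:
+        names = index.get(key(record), [])
+        if len(names) == 1:  # a unique match means the record is re-identified
+            matches.append((names[0], record["diagnosis"]))
+    return matches
+
+
+print(link(deidentified, public))  # [('Alice', 'asthma')]
+```
+
+In this toy example, Alice's diagnosis is exposed even though the first dataset never contained her name. Generalizing or suppressing quasi-identifiers, so that each combination matches several people, is one common mitigation.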

+

+Here are _some_ ethical concepts and moral questions to explore in context:

+

+* **Data Ownership**. Who owns the data - user or organization? How does this impact users' rights?

+* **Informed Consent**. Did users give permission for data capture? Did they understand its purpose?

+* **Intellectual Property**. Does data have economic value? What are the users' rights & controls?

+* **Data Privacy**. Is data secured from hacks/leaks? Is anonymity preserved on data use or sharing?

+* **Right to be Forgotten**. Can users request that their data be deleted or removed to reclaim privacy?

+

+### 2.3 Ethics of Algorithms

+

+Algorithm design begins with collecting & curating datasets relevant to a specific AI problem or domain, then processing & analyzing them to create models that can help predict outcomes or automate decisions in real-world applications. Moral questions can arise in various contexts, at any one of these stages.

+

+Here are _some_ ethical concepts and moral questions to explore in context:

+

+* **Dataset Bias** - Is data representative of target audience? Have we checked for different [data biases](https://towardsdatascience.com/survey-d4f168791e57)?

+* **Data Quality** - Do the dataset and feature selection provide the required [data quality assurance](https://lakefs.io/data-quality-testing/)?

+* **Algorithm Fairness** - Does the data model [systematically discriminate](https://towardsdatascience.com/what-is-algorithm-fairness-3182e161cf9f) against some subgroups?

+* **Misrepresentation** - Are we [communicating honestly reported data in a deceptive manner?](https://www.sciencedirect.com/topics/computer-science/misrepresentation)

+* **Explainable AI** - Are the results of AI [understandable by humans](https://en.wikipedia.org/wiki/Explainable_artificial_intelligence)? White-box (vs. black-box) models.

+* **Free Choice** - Did the user exercise free will, or did the algorithm nudge them towards a desired outcome?
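+
+One way to start answering the **Algorithm Fairness** question is to compare model accuracy across subgroups. The minimal Python sketch below assumes hypothetical toy labels, predictions and group memberships; `accuracy_by_group`, `accuracy_gap` and the `MAX_GAP` tolerance are illustrative names, not part of any library. Similar accuracy across groups is only one of several possible fairness criteria.
+
+```python
+from collections import defaultdict
+
+# Hypothetical tolerance: the largest accuracy gap a team might accept.
+MAX_GAP = 0.05
+
+
+def accuracy_by_group(y_true, y_pred, groups):
+    """Compute accuracy separately for each group label."""
+    correct = defaultdict(int)
+    total = defaultdict(int)
+    for truth, pred, group in zip(y_true, y_pred, groups):
+        total[group] += 1
+        correct[group] += int(truth == pred)
+    return {g: correct[g] / total[g] for g in total}
+
+
+def accuracy_gap(per_group):
+    """Largest difference in accuracy between any two groups."""
+    return max(per_group.values()) - min(per_group.values())
+
+
+# Hypothetical toy data: true labels, model predictions and group membership.
+y_true = [1, 0, 1, 1, 0, 1, 0, 1]
+y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
+groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
+
+per_group = accuracy_by_group(y_true, y_pred, groups)
+print(per_group)                           # {'A': 1.0, 'B': 0.25}
+print(accuracy_gap(per_group) <= MAX_GAP)  # False: flag for review
+```
+
+A large gap is a signal to investigate dataset bias or model design further; it is not a complete fairness audit on its own.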

+

+### 2.4 Case Studies

+

+The above are a subset of the core ethical challenges posed for big data and AI. More organizations are defining and adopting _responsible AI_ or _ethical AI_ frameworks that may identify additional shared values and related ethical challenges for specific domains or needs.

+

+To understand the potential _harms and consequences_ of neglecting or violating these data ethics principles, it helps to explore this in a real-world context. Here are some famous case studies and recent examples to get you started:

+

+

+* `1972`: The [Tuskegee Syphilis Study](https://en.wikipedia.org/wiki/Tuskegee_Syphilis_Study) is a landmark case study for **informed consent** in data science. African American men who participated in the study were promised free medical care _but deceived_ by researchers who failed to inform subjects of their diagnosis or about the availability of treatment. Many subjects died; some partners or children were affected by complications. The study lasted 40 years.

+* `2007`: The Netflix Prize provided researchers with [_about 100M anonymized movie ratings from about 480K customers_](https://www.wired.com/2007/12/why-anonymous-data-sometimes-isnt/) to help improve recommendation algorithms. This became a landmark case study in **de-identification (data privacy)**: researchers were able to correlate the anonymized data with _other datasets_ (e.g., IMDb) that had personally identifiable information, helping them "de-anonymize" users.

+* `2013`: The City of Boston [developed Street Bump](https://www.boston.gov/transportation/street-bump), an app that let citizens report potholes, giving the city better roadway data to find and fix issues. This became a case study for **collection bias** where [people in lower income groups had less access to cars and phones](https://hbr.org/2013/04/the-hidden-biases-in-big-data), making their roadway issues invisible in this app. Developers worked with academics to address _equitable access and digital divide_ issues for fairness.

+* `2018`: The MIT [Gender Shades Study](http://gendershades.org/overview.html) evaluated the accuracy of gender classification AI products, exposing gaps in accuracy for women and persons of color. In 2019, the [Apple Card](https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/) appeared to offer less credit to women than to men. Both cases illustrate issues of **algorithmic fairness** and discrimination.

+* `2020`: The [Georgia Department of Public Health released COVID-19 charts](https://www.vox.com/covid-19-coronavirus-us-response-trump/2020/5/18/21262265/georgia-covid-19-cases-declining-reopening) that appeared to mislead citizens about trends in confirmed cases with non-chronological ordering on the x-axis. This illustrates **data misrepresentation** where honest data is presented dishonestly to support a desired narrative.

+* `2020`: Learning app [ABCmouse paid $10M to settle an FTC complaint](https://www.washingtonpost.com/business/2020/09/04/abcmouse-10-million-ftc-settlement/) where parents were trapped into paying for subscriptions they couldn't cancel. This highlights the **illusion of free choice** in algorithmic decision-making, and potential harms from dark patterns that exploit user insights.

+* `2021`: A Facebook [data breach](https://www.npr.org/2021/04/09/986005820/after-data-breach-exposes-530-million-facebook-says-it-will-not-notify-users) exposed data from 530M users. Facebook, which had already paid a $5B FTC settlement in 2019 over earlier privacy violations, refused to notify users of the breach, raising issues like **data privacy**, **data security** and **accountability**, including user rights to redress for those affected.

+

+Want to explore more case studies on your own? Check out these resources:

+

+ * [Ethics Unwrapped](https://ethicsunwrapped.utexas.edu/case-studies) - ethics dilemmas across diverse industries.

+ * [Data Science Ethics course](https://www.coursera.org/learn/data-science-ethics#syllabus) - landmark case studies in data ethics.

+ * [Where things have gone wrong](https://deon.drivendata.org/examples/) - deon checklist examples of ethical issues

+

+## 3. Applied Ethics

+

+We've learned about data ethics values, and the ethical challenges (and moral questions) associated with adherence to these values. But how do we _implement_ these ideas in real-world contexts? Here are some tools & practices that can help.

+

+### 3.1 Have Professional Codes

+

+Professional codes are _moral guidelines_ for professional behavior, helping employees or members _make decisions that align with organizational principles_. Codes may not be legally enforceable, making them only as good as the willing compliance of members. An organization may inspire adherence by imposing incentives & penalties accordingly.

+

+Professional _codes of conduct_ are prescriptive rules and responsibilities that members must follow to remain in good standing with an organization. A professional *code of ethics* is more [_aspirational_](https://keydifferences.com/difference-between-code-of-ethics-and-code-of-conduct.html), defining the shared values and ideas of the organization. The terms are sometimes used interchangeably.

+

+Examples include:

+

+ * [Oxford Munich](http://www.code-of-ethics.org/code-of-conduct/) Code of Ethics

+ * [Data Science Association](http://datascienceassn.org/code-of-conduct.html) Code of Conduct (created 2013)

+ * [ACM Code of Ethics and Professional Conduct](https://www.acm.org/code-of-ethics) (since 1993)

+

+

+### 3.2 Ask Moral Questions

+

+Assuming you've already identified your shared values or ethical principles at a team or organization level, the next step is to identify the moral questions relevant to your specific use case and operational workflow.

+

+Here are [6 basic questions about data ethics](https://halpert3.medium.com/six-questions-about-data-science-ethics-252b5ae31fec) that you can build on:

+

+* Is the data you're collecting fair and unbiased?

+* Is the data being used ethically and fairly?

+* Is user privacy being protected?

+* To whom does data belong - the company or the user?

+* What effects do the data and algorithms have on society (individual and collective)?

+* Is the data manipulated or deceptive?

+

+For a larger team or project scope, you can expand on questions that reflect a specific stage of the workflow. For example, here are [22 questions on ethics in data and AI](https://medium.com/the-organization/22-questions-for-ethics-in-data-and-ai-efb68fd19429), grouped into _design_, _implementation & management_, and _systems & organization_ categories for convenience.

+

+### 3.3 Adopt Ethics Checklists

+

+While professional codes define the _ethical behavior_ required of practitioners, they [have known limitations](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md) for implementation, particularly in large-scale projects. In [Ethics and Data Science](https://resources.oreilly.com/examples/0636920203964/blob/master/of_oaths_and_checklists.md), experts instead advocate for ethics checklists that can **connect principles to practices** in more deterministic and actionable ways.

+

+Checklists convert questions into "yes/no" tasks that can be tracked and validated before product release. Tools like [deon](https://deon.drivendata.org/) make this frictionless, creating default checklists aligned to [industry recommendations](https://deon.drivendata.org/#checklist-citations) and enabling users to customize and integrate them into workflows using a command-line tool. Deon also provides [real-world examples](https://deon.drivendata.org/examples/) of ethical challenges to provide context for these decisions.
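+
+As a rough illustration of how "yes/no" checklist items can gate a release, here is a minimal Python sketch. It is _not_ deon's file format or API; the `ChecklistItem` class, the helper functions and the questions are hypothetical, paraphrasing questions from this lesson.
+
+```python
+from dataclasses import dataclass
+
+
+@dataclass
+class ChecklistItem:
+    """One hypothetical yes/no ethics question and whether it is resolved."""
+    question: str
+    done: bool = False
+
+
+def unresolved(checklist):
+    """Return the questions that have not yet been answered 'yes'."""
+    return [item.question for item in checklist if not item.done]
+
+
+def ready_for_release(checklist):
+    """A release is allowed only when every item is resolved."""
+    return not unresolved(checklist)
+
+
+# Hypothetical items, paraphrasing questions from this lesson.
+checklist = [
+    ChecklistItem("Did users give informed consent for data capture?", done=True),
+    ChecklistItem("Did we test model accuracy across diverse groups?", done=False),
+    ChecklistItem("Can users request deletion of their personal data?", done=True),
+]
+
+print(ready_for_release(checklist))  # False
+print(unresolved(checklist))  # ['Did we test model accuracy across diverse groups?']
+```
+
+Keeping the checklist as data makes it easy to track in version control and to check automatically, for example as a step before each release.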

+

+### 3.4 Track Ethics Compliance

+

+**Ethics** is about doing the right thing, even if there are no laws to enforce it. **Compliance** is about following the law, when defined and where applicable.

+**Governance** is the broader umbrella that covers all the ways in which an organization (company or government) operates to enforce ethical principles & comply with laws.

-[1. Ethics Fundamentals](1-fundamentals.md ':include')

+Companies are creating their own ethics frameworks (e.g., [Microsoft](https://www.microsoft.com/en-us/ai/responsible-ai), [IBM](https://www.ibm.com/cloud/learn/ai-ethics), [Google](https://ai.google/principles), [Facebook](https://ai.facebook.com/blog/facebooks-five-pillars-of-responsible-ai/), [Accenture](https://www.accenture.com/_acnmedia/PDF-149/Accenture-Responsible-AI-Final.pdf#zoom=50)) for governance, while state and national governments tend to focus on regulations that protect the data privacy and rights of their citizens.

-[2. Data Collection](2-collection.md ':include')

+Here are some landmark data privacy regulations to know:

+ * `1974`, [US Privacy Act](https://www.justice.gov/opcl/privacy-act-1974) - regulates _federal govt._ collection, use and disclosure of personal information.

+ * `1996`, [US Health Insurance Portability & Accountability Act (HIPAA)](https://www.cdc.gov/phlp/publications/topic/hipaa.html) - protects personal health data.

+ * `1998`, [US Children's Online Privacy Protection Act (COPPA)](https://www.ftc.gov/enforcement/rules/rulemaking-regulatory-reform-proceedings/childrens-online-privacy-protection-rule) - protects data privacy of children under 13.

+ * `2018`, [General Data Protection Regulation (GDPR)](https://gdpr-info.eu/) - provides user rights, data protection and privacy.

+ * `2018`, [California Consumer Privacy Act (CCPA)](https://www.oag.ca.gov/privacy/ccpa) - gives consumers more _rights_ over their personal data.

-[3. Data Privacy](3-privacy.md ':include')

+In Aug 2021, China passed the [Personal Information Protection Law](https://www.reuters.com/world/china/china-passes-new-personal-data-privacy-law-take-effect-nov-1-2021-08-20/) (to go into effect Nov 1) which, with its Data Security Law, will create one of the strongest online data privacy regulations in the world.

-[4. Algorithm Fairness](4-fairness.md ':include')

-[5. Societal Consequences](5-consequences.md ':include')

+### 3.5 Establish Ethics Culture

-[6. Summary & Resources](6-summary.md ':include')

+There remains an intangible gap between compliance ("doing enough to meet the letter of the law") and addressing systemic issues ([like ossification, information asymmetry and distributional unfairness](https://www.coursera.org/learn/data-science-ethics/home/week/4)) that can create self-fulfilling feedback loops that further weaponize AI. This is motivating calls for [formalizing data ethics cultures](https://www.codeforamerica.org/news/formalizing-an-ethical-data-culture/) in organizations, where everyone is empowered to [pull the Andon cord](https://en.wikipedia.org/wiki/Andon_(manufacturing)) to raise ethics concerns early, and for exploring [collaborative approaches to defining this culture](https://towardsdatascience.com/why-ai-ethics-requires-a-culture-driven-approach-26f451afa29f) that build emotional connections and consistent beliefs across organizations and industries.

---

@@ -52,10 +191,10 @@ What is ethics? What does data ethics mean, and how is it relevant to data scien

---

# Assignment

-[Assignment Title](assignment.md ':include')

+[Assignment Title](assignment.md)

---

# Resources

-[Related Resources](resources.md ':include')

\ No newline at end of file

+[Related Resources](resources.md)

\ No newline at end of file

diff --git a/1-Introduction/02-ethics/megan-smith-algorithms.png b/1-Introduction/02-ethics/megan-smith-algorithms.png

new file mode 100644

index 00000000..8cc73489

Binary files /dev/null and b/1-Introduction/02-ethics/megan-smith-algorithms.png differ

diff --git a/1-Introduction/04-stats-and-probability/README.md b/1-Introduction/04-stats-and-probability/README.md

index 504b6c0f..f2fa0c22 100644

--- a/1-Introduction/04-stats-and-probability/README.md

+++ b/1-Introduction/04-stats-and-probability/README.md

@@ -51,7 +51,7 @@ To help us understand the distribution of data, it is helpful to talk about **qu

Graphically we can represent relationship between median and quartiles in a diagram called the **box plot**:

-

+

Here we also compute the **inter-quartile range** IQR=Q3-Q1, and so-called **outliers**: values that lie outside the boundaries [Q1-1.5*IQR,Q3+1.5*IQR].
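As an illustration of the IQR and the 1.5*IQR outlier rule, here is a minimal NumPy sketch on made-up numbers (not the baseball dataset). The helper name `iqr_outliers` is hypothetical, and `np.percentile` uses linear interpolation by default, so the quartiles can differ slightly from other quartile conventions.

```python
import numpy as np

def iqr_outliers(values):
    """Return (Q1, Q3, IQR, outliers) using the 1.5*IQR rule (hypothetical helper)."""
    a = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(a, [25, 75])  # first and third quartiles (linear interpolation)
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = a[(a < lower) | (a > upper)]  # values outside [Q1-1.5*IQR, Q3+1.5*IQR]
    return q1, q3, iqr, outliers

# Made-up weights, not taken from the real dataset
weights = [180, 185, 190, 195, 200, 205, 210, 215, 300]
q1, q3, iqr, outliers = iqr_outliers(weights)
print(q1, q3, iqr, outliers)
```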

@@ -92,6 +92,82 @@ If we plot the histogram of the generated samples we will see the picture very s

*Normal Distribution with mean=0 and std.dev=1*

+## Confidence Intervals

+

+When we talk about weights of baseball players, we assume that there is a certain **random variable W** that corresponds to the ideal probability distribution of weights of all baseball players (the **population**). Our sequence of weights corresponds to a subset of all baseball players, which we call a **sample**. An interesting question is: can we know the parameters of the distribution of W, i.e. its mean and variance?

+

+The easiest answer would be to calculate the mean and variance of our sample. However, our random sample might not accurately represent the complete population. Thus it makes sense to talk about a **confidence interval**.

+

+Suppose we have a sample X1, ..., Xn from our distribution. Each time we draw a new sample, we end up with a different sample mean, so the sample mean can be considered a random variable. A **confidence interval** with confidence p is a pair of values (Lp,Rp) computed from the sample, such that **P**(Lp≤μ≤Rp) = p, where μ is the true mean of W. In other words, if we repeated the sampling many times, the interval computed each time would contain the true mean in a fraction p of the cases.

+

+It goes beyond our short intro to discuss how those confidence intervals are calculated. Some more details can be found [on Wikipedia](https://en.wikipedia.org/wiki/Confidence_interval). An example of calculating confidence intervals for weights and heights is given in the [accompanying notebook](notebook.ipynb).

+

+| p | Weight mean |

+|-----|-----------|

+| 0.85 | 201.73±0.94 |

+| 0.90 | 201.73±1.08 |

+| 0.95 | 201.73±1.28 |

+

+Notice that the higher the confidence probability, the wider the confidence interval.
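The notebook calls a helper named `mean_confidence_interval`, whose implementation is not shown here. The following is only a sketch of one common way to write it, assuming a t-based interval for the mean (sample mean ± standard error × Student t critical value). The synthetic `sample` stands in for the real weights, so its numbers will not match the table.

```python
import numpy as np
import scipy.stats

def mean_confidence_interval(data, confidence=0.95):
    """Return (mean, half_width) of a t-based confidence interval for the mean.

    Hypothetical re-implementation; the notebook's own helper may differ.
    """
    a = np.asarray(data, dtype=float)
    n = len(a)
    m = a.mean()
    se = scipy.stats.sem(a)  # standard error of the mean (ddof=1)
    h = se * scipy.stats.t.ppf((1 + confidence) / 2, n - 1)  # two-sided critical value
    return m, h

rng = np.random.default_rng(0)
sample = rng.normal(loc=200, scale=20, size=1000)  # synthetic "weights"
for p in [0.85, 0.90, 0.95]:
    m, h = mean_confidence_interval(sample, p)
    print(f"p={p:.2f}: {m:.2f}±{h:.2f}")
```

As in the table, the half-width grows with the confidence level, because the critical value `t.ppf((1 + p) / 2, n - 1)` grows with p.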

+

+## Hypothesis Testing

+

+In our baseball players dataset, there are different player roles, summarized below (look at the [accompanying notebook](notebook.ipynb) to see how this table can be calculated):

+

+| Role | Mean height (in) | Mean weight (lb) | Count |

+|------|--------|--------|-------|

+| Catcher | 72.723684 | 204.328947 | 76 |

+| Designated_Hitter | 74.222222 | 220.888889 | 18 |

+| First_Baseman | 74.000000 | 213.109091 | 55 |

+| Outfielder | 73.010309 | 199.113402 | 194 |

+| Relief_Pitcher | 74.374603 | 203.517460 | 315 |

+| Second_Baseman | 71.362069 | 184.344828 | 58 |

+| Shortstop | 71.903846 | 182.923077 | 52 |

+| Starting_Pitcher | 74.719457 | 205.163636 | 221 |

+| Third_Baseman | 73.044444 | 200.955556 | 45 |
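A table like this can be produced with a pandas `groupby`. The sketch below uses a tiny made-up `players` DataFrame with the same column names as the notebook, so the numbers are illustrative only; the notebook's own code may differ.

```python
import pandas as pd

# Tiny made-up sample with the same column names as the notebook's DataFrame
players = pd.DataFrame({
    'Role':   ['Catcher', 'Catcher', 'Shortstop', 'Shortstop', 'Shortstop'],
    'Height': [74, 72, 72, 71, 73],
    'Weight': [215.0, 205.0, 180.0, 185.0, 190.0],
})

# Mean height and weight per role, plus the number of players in each role
summary = players.groupby('Role').agg(
    Height=('Height', 'mean'),
    Weight=('Weight', 'mean'),
    Count=('Height', 'count'),
)
print(summary)
```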

+

+We can notice that the mean height of first basemen is higher than that of second basemen. Thus, we may be tempted to conclude that **first basemen are taller than second basemen**.

+

+> This statement is called **a hypothesis**, because we do not know whether the fact is actually true or not.

+

+However, it is not always obvious whether we can make this conclusion. From the discussion above we know that each mean has an associated confidence interval, and thus this difference could just be the result of random sampling variation. We need a more formal way to test our hypothesis.

+

+Let's compute confidence intervals separately for heights of first and second basemen:

+

+| Confidence | First Basemen | Second Basemen |

+|------------|---------------|----------------|

+| 0.85 | 73.62..74.38 | 71.04..71.69 |

+| 0.90 | 73.56..74.44 | 70.99..71.73 |

+| 0.95 | 73.47..74.53 | 70.92..71.81 |

+

+We can see that at none of these confidence levels do the intervals overlap. This supports our hypothesis that first basemen are taller than second basemen.
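The overlap check itself is simple to express in code. This sketch copies the interval bounds from the table above; the helper `intervals_overlap` is hypothetical. Note that non-overlapping confidence intervals are a conservative rule of thumb rather than a formal test.

```python
def intervals_overlap(a, b):
    """True if closed intervals a=(lo, hi) and b=(lo, hi) share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]

# Bounds copied from the table above: (first basemen, second basemen)
intervals = {
    0.85: ((73.62, 74.38), (71.04, 71.69)),
    0.90: ((73.56, 74.44), (70.99, 71.73)),
    0.95: ((73.47, 74.53), (70.92, 71.81)),
}
for p, (first, second) in intervals.items():
    print(f"confidence {p:.2f}: overlap = {intervals_overlap(first, second)}")
```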

+

+More formally, the problem we are solving is to see if **two probability distributions are the same**, or at least have the same parameters. Depending on the distribution, we need to use different tests for that. If we know that our distributions are normal, we can apply the **[Student t-test](https://en.wikipedia.org/wiki/Student%27s_t-test)**.

+

+In the Student t-test, we compute the so-called **t-value**, which measures the difference between the means relative to their variance. It can be shown that, if the two means are equal, the t-value follows the **Student t-distribution**, which allows us to get the threshold value for a given confidence level **p** (this can be computed, or looked up in numerical tables). We then compare the t-value to this threshold to accept or reject the hypothesis.
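To make the t-value concrete, here is a sketch of Welch's version of the t-test, the variant that does not assume equal variances (it corresponds to `equal_var=False` in SciPy's `ttest_ind`). The heights are made up, and `welch_t` is a hypothetical helper; its t-value should match what `scipy.stats.ttest_ind(first, second, equal_var=False)` reports.

```python
import numpy as np
from scipy import stats

def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom (hypothetical helper)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    vx = x.var(ddof=1) / len(x)  # squared standard error of the first mean
    vy = y.var(ddof=1) / len(y)  # squared standard error of the second mean
    t = (x.mean() - y.mean()) / np.sqrt(vx + vy)
    dof = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, dof

# Made-up heights in inches, not the real dataset
first = [74, 75, 73, 76, 74, 75, 73, 74]
second = [71, 72, 70, 72, 71, 73, 71, 70]

t, dof = welch_t(first, second)
p = 0.95
threshold = stats.t.ppf((1 + p) / 2, dof)  # two-sided critical value for confidence p
print(f"t={t:.2f}, dof={dof:.1f}, threshold={threshold:.2f}, reject equal means: {abs(t) > threshold}")
```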

+

+In Python, we can use the **SciPy** package, which includes the `ttest_ind` function (in addition to many other useful statistical functions!). It computes the t-value for us, and also does the reverse lookup to give the corresponding p-value, so that we can just look at the p-value to draw a conclusion.

+

+For example, our comparison between heights of first and second basemen gives us the following results:

+```python

+from scipy.stats import ttest_ind

+

+# Welch's t-test (equal_var=False) comparing the heights of first and second basemen

+tval, pval = ttest_ind(df.loc[df['Role']=='First_Baseman',['Height']], df.loc[df['Role']=='Second_Baseman',['Height']],equal_var=False)

+print(f"T-value = {tval[0]:.2f}\nP-value: {pval[0]}")

+```

+```

+T-value = 7.65

+P-value: 9.137321189738925e-12

+```

+In our case, the p-value is very low, which is strong evidence that first basemen are taller.

+

+> **Challenge**: Use the sample code in the notebook to test other hypotheses: (1) First basemen are older than second basemen; (2) First basemen are taller than third basemen; (3) Shortstops are taller than second basemen.

+

+

+There are different types of hypotheses that we might want to test, for example:

+* To prove that a given sample follows some distribution. In our case we have assumed that heights are normally distributed, but that needs formal statistical verification.

+* To prove that a mean value of a sample corresponds to some predefined value

+* To prove that
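The first two kinds of tests in the list above can be sketched with SciPy's `normaltest` (D'Agostino and Pearson's normality test) and `ttest_1samp` (one-sample t-test). The heights are synthetic, and the reference value of 72 inches is an arbitrary assumption chosen for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
heights = rng.normal(loc=73.7, scale=2.3, size=500)  # synthetic heights, not the real data

# (1) Does the sample look normally distributed?
# A small p-value would be evidence against normality.
stat, p_normal = stats.normaltest(heights)
print(f"normaltest p-value: {p_normal:.3f}")

# (2) Does the sample mean differ from a predefined value (here 72 inches)?
t, p_mean = stats.ttest_1samp(heights, popmean=72)
print(f"one-sample t = {t:.2f}, p-value = {p_mean:.3g}")
```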

## Law of Large Numbers and Central Limit Theorem

diff --git a/1-Introduction/04-stats-and-probability/notebook.ipynb b/1-Introduction/04-stats-and-probability/notebook.ipynb

index c94d8b3a..8b83ab72 100644

--- a/1-Introduction/04-stats-and-probability/notebook.ipynb

+++ b/1-Introduction/04-stats-and-probability/notebook.ipynb

@@ -93,9 +93,9 @@

},

{

"cell_type": "code",

- "execution_count": 26,

+ "execution_count": 168,

"source": [

- "df = pd.read_csv(\"../../data/SOCR_MLB.tsv\",sep='\\t',header=None,names=['Name','Team','Rome','Height','Weight','Age'])\r\n",

+ "df = pd.read_csv(\"../../data/SOCR_MLB.tsv\",sep='\\t',header=None,names=['Name','Team','Role','Height','Weight','Age'])\r\n",

"df"

],

"outputs": [

@@ -103,7 +103,7 @@

"output_type": "execute_result",

"data": {

"text/plain": [

- " Name Team Rome Height Weight Age\n",

+ " Name Team Role Height Weight Age\n",

"0 Adam_Donachie BAL Catcher 74 180.0 22.99\n",

"1 Paul_Bako BAL Catcher 74 215.0 34.69\n",

"2 Ramon_Hernandez BAL Catcher 72 210.0 30.78\n",

@@ -139,7 +139,7 @@

"

"

+ ]

+ },

+ "metadata": {},

+ "execution_count": 175

+ }

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "markdown",

+ "source": [

+ "Let's test the hypothesis that first basemen are taller than second basemen. The simplest way to do it is to compare the confidence intervals:"

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 188,

+ "source": [

+ "for p in [0.85,0.9,0.95]:\r\n",

+ " m1, h1 = mean_confidence_interval(df.loc[df['Role']=='First_Baseman',['Height']],p)\r\n",

+ " m2, h2 = mean_confidence_interval(df.loc[df['Role']=='Second_Baseman',['Height']],p)\r\n",

+ " print(f'Conf={p:.2f}, 1st basemen height: {m1-h1[0]:.2f}..{m1+h1[0]:.2f}, 2nd basemen height: {m2-h2[0]:.2f}..{m2+h2[0]:.2f}')"

+ ],

+ "outputs": [

+ {

+ "output_type": "stream",

+ "name": "stdout",

+ "text": [

+ "Conf=0.85, 1st basemen height: 73.62..74.38, 2nd basemen height: 71.04..71.69\n",

+ "Conf=0.90, 1st basemen height: 73.56..74.44, 2nd basemen height: 70.99..71.73\n",

+ "Conf=0.95, 1st basemen height: 73.47..74.53, 2nd basemen height: 70.92..71.81\n"

+ ]

+ }

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "markdown",

+ "source": [

+ "We can see that intervals do not overlap.\r\n",

+ "\r\n",

+ "A more statistically rigorous way to test the hypothesis is to use the **Student t-test**:"

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 200,

+ "source": [

+ "from scipy.stats import ttest_ind\r\n",

+ "\r\n",

+ "tval, pval = ttest_ind(df.loc[df['Role']=='First_Baseman',['Height']], df.loc[df['Role']=='Second_Baseman',['Height']],equal_var=False)\r\n",

+ "print(f\"T-value = {tval[0]:.2f}\\nP-value: {pval[0]}\")"

+ ],

+ "outputs": [

+ {

+ "output_type": "stream",

+ "name": "stdout",

+ "text": [

+ "T-value = 7.65\n",

+ "P-value: 9.137321189738925e-12\n"

+ ]

+ }

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "markdown",

+ "source": [

+ "The two values returned by the `ttest_ind` function are:\r\n",

+ "* p-value is the probability of observing a difference in means at least this large if the two distributions actually had the same mean. In our case, it is very low, which is strong evidence that first basemen are taller\r\n",

+ "* t-value is the normalized mean difference computed by the t-test; it is compared against the threshold value for a given confidence level"

+ ],

+ "metadata": {}

+ },

{

"cell_type": "markdown",

"source": [

diff --git a/3-Data-Visualization/10-visualization-quantities/README.md b/3-Data-Visualization/10-visualization-quantities/README.md

index c136ea93..72f8e813 100644

--- a/3-Data-Visualization/10-visualization-quantities/README.md

+++ b/3-Data-Visualization/10-visualization-quantities/README.md

@@ -189,17 +189,16 @@ In this plot you can see the range, per category, of the Minimum Length and Maxi

-

## 🚀 Challenge

-

+This bird dataset offers a wealth of information about different types of birds within a particular ecosystem. Search around the internet and see if you can find other bird-oriented datasets. Practice building charts and graphs around these birds to discover facts you didn't realize.

## Post-Lecture Quiz

[Post-lecture quiz]()

## Review & Self Study

-

+This first lesson has given you some information about how to use Matplotlib to visualize quantities. Do some research around other ways to work with datasets for visualization. [Plotly](https://github.com/plotly/plotly.py) is one that we won't cover in these lessons, so take a look at what it can offer.

## Assignment

-[Assignment Title](assignment.md)

+[Lines, Scatters, and Bars](assignment.md)

diff --git a/3-Data-Visualization/10-visualization-quantities/assignment.md b/3-Data-Visualization/10-visualization-quantities/assignment.md

index b7af6412..6caa81fd 100644

--- a/3-Data-Visualization/10-visualization-quantities/assignment.md

+++ b/3-Data-Visualization/10-visualization-quantities/assignment.md

@@ -1,8 +1,11 @@

-# Title

+# Lines, Scatters and Bars

## Instructions

+In this lesson, you worked with line charts, scatterplots, and bar charts to show interesting facts about this dataset. In this assignment, dig deeper into the dataset to discover a fact about a given type of bird. For example, create a notebook visualizing all the interesting data you can uncover about Snow Geese. Use the three plots mentioned above to tell a story in your notebook.

+

## Rubric

Exemplary | Adequate | Needs Improvement

--- | --- | -- |

+A notebook is presented with good annotations, solid storytelling, and attractive graphs | The notebook is missing one of these elements | The notebook is missing two of these elements

\ No newline at end of file

diff --git a/3-Data-Visualization/11-visualization-distributions/README.md b/3-Data-Visualization/11-visualization-distributions/README.md

index 3231e497..2dacdb95 100644

--- a/3-Data-Visualization/11-visualization-distributions/README.md

+++ b/3-Data-Visualization/11-visualization-distributions/README.md

@@ -176,6 +176,7 @@ Perhaps it's worth researching whether the cluster of 'Vulnerable' birds accordi

## 🚀 Challenge

+Histograms are a more sophisticated type of chart than basic scatterplots, bar charts, or line charts. Search the internet to find good examples of the use of histograms. How are they used, what do they demonstrate, and in what fields or areas of inquiry do they tend to be used?

## Post-Lecture Quiz

@@ -183,7 +184,8 @@ Perhaps it's worth researching whether the cluster of 'Vulnerable' birds accordi

## Review & Self Study

+In this lesson, you used Matplotlib and started working with Seaborn to show more sophisticated charts. Do some research on `kdeplot` in Seaborn, a "continuous probability density curve in one or more dimensions". Read through [the documentation](https://seaborn.pydata.org/generated/seaborn.kdeplot.html) to understand how it works.

## Assignment

-[Assignment Title](assignment.md)

+[Apply your skills](assignment.md)

diff --git a/3-Data-Visualization/11-visualization-distributions/assignment.md b/3-Data-Visualization/11-visualization-distributions/assignment.md

index b7af6412..e3e9301b 100644

--- a/3-Data-Visualization/11-visualization-distributions/assignment.md

+++ b/3-Data-Visualization/11-visualization-distributions/assignment.md

@@ -1,8 +1,10 @@

-# Title

+# Apply your skills

## Instructions

+So far, you have worked with the Minnesota birds dataset to discover information about bird quantities and population density. Practice your application of these techniques by trying a different dataset, perhaps sourced from [Kaggle](https://www.kaggle.com/). Build a notebook to tell a story about this dataset, and make sure to use histograms when discussing it.

## Rubric

Exemplary | Adequate | Needs Improvement

--- | --- | -- |

+A notebook is presented with annotations about this dataset, including its source, and uses at least 5 histograms to discover facts about the data. | A notebook is presented with incomplete annotations or bugs | A notebook is presented without annotations and includes bugs

\ No newline at end of file

diff --git a/3-Data-Visualization/12-visualization-proportions/README.md b/3-Data-Visualization/12-visualization-proportions/README.md

index 9489b44d..69b20be2 100644

--- a/3-Data-Visualization/12-visualization-proportions/README.md

+++ b/3-Data-Visualization/12-visualization-proportions/README.md

@@ -6,6 +6,8 @@ In this lesson, you will use a different nature-focused dataset to visualize pro

- Donut charts 🍩

- Waffle charts 🧇

+> 💡 A very interesting project called [Charticulator](https://charticulator.com) by Microsoft Research offers a free drag and drop interface for data visualizations. In one of their tutorials they also use this mushroom dataset! So you can explore the data and learn the library at the same time: https://charticulator.com/tutorials/tutorial4.html

+

## Pre-Lecture Quiz

[Pre-lecture quiz]()

@@ -157,17 +159,26 @@ Using a waffle chart, you can plainly see the proportions of cap color of this m

In this lesson you learned three ways to visualize proportions. First, you need to group your data into categories and then decide which is the best way to display the data - pie, donut, or waffle. All are delicious and gratify the user with an instant snapshot of a dataset.

-

## 🚀 Challenge

-

+Try recreating these tasty charts in [Charticulator](https://charticulator.com).

## Post-Lecture Quiz

[Post-lecture quiz]()

## Review & Self Study

+Sometimes it's not obvious when to use a pie, donut, or waffle chart. Here are some articles to read on this topic:

+

+https://www.beautiful.ai/blog/battle-of-the-charts-pie-chart-vs-donut-chart

+

+https://medium.com/@hypsypops/pie-chart-vs-donut-chart-showdown-in-the-ring-5d24fd86a9ce

+

+https://www.mit.edu/~mbarker/formula1/f1help/11-ch-c6.htm

+

+https://medium.datadriveninvestor.com/data-visualization-done-the-right-way-with-tableau-waffle-chart-fdf2a19be402

+Do some research to find more information on this sticky decision.

## Assignment

-[Assignment Title](assignment.md)

+[Try it in Excel](assignment.md)

diff --git a/3-Data-Visualization/12-visualization-proportions/assignment.md b/3-Data-Visualization/12-visualization-proportions/assignment.md

index b7af6412..e3ab407c 100644

--- a/3-Data-Visualization/12-visualization-proportions/assignment.md

+++ b/3-Data-Visualization/12-visualization-proportions/assignment.md

@@ -1,8 +1,11 @@

-# Title

+# Try it in Excel

## Instructions

+Did you know you can create donut, pie and waffle charts in Excel? Using a dataset of your choice, create these three charts right in an Excel spreadsheet.

+

## Rubric

-Exemplary | Adequate | Needs Improvement

---- | --- | -- |

+| Exemplary | Adequate | Needs Improvement |

+| ------------------------------------------------------- | ------------------------------------------------- | ------------------------------------------------------ |

+| An Excel spreadsheet is presented with all three charts | An Excel spreadsheet is presented with two charts | An Excel spreadsheet is presented with only one chart  |

\ No newline at end of file

diff --git a/3-Data-Visualization/13-visualization-relationships/README.md b/3-Data-Visualization/13-visualization-relationships/README.md

index f9fadc95..b2494971 100644

--- a/3-Data-Visualization/13-visualization-relationships/README.md

+++ b/3-Data-Visualization/13-visualization-relationships/README.md

@@ -10,15 +10,165 @@ It will be interesting to visualize the relationship between a given state's pro

[Pre-lecture quiz]()

+In this lesson, you can use Seaborn, which you have used before, as a good library to visualize relationships between variables. Particularly interesting is the use of Seaborn's `relplot` function that allows scatter plots and line plots to quickly visualize '[statistical relationships](https://seaborn.pydata.org/tutorial/relational.html?highlight=relationships)', which allow the data scientist to better understand how variables relate to each other.

+

+## Scatterplots

+

+Use a scatterplot to show how the price of honey has evolved, year over year, per state. Seaborn, using `relplot`, conveniently groups the state data and displays data points for both categorical and numeric data.

+

+Let's start by importing the data and Seaborn:

+

+```python

+import pandas as pd

+import matplotlib.pyplot as plt

+import seaborn as sns

+honey = pd.read_csv('../../data/honey.csv')

+honey.head()

+```

+You'll notice that the honey data has several interesting columns, including year and price per pound. Let's explore this data, grouped by U.S. state:

+

+| state | numcol | yieldpercol | totalprod | stocks | priceperlb | prodvalue | year |

+| ----- | ------ | ----------- | --------- | -------- | ---------- | --------- | ---- |

+| AL | 16000 | 71 | 1136000 | 159000 | 0.72 | 818000 | 1998 |

+| AZ | 55000 | 60 | 3300000 | 1485000 | 0.64 | 2112000 | 1998 |

+| AR | 53000 | 65 | 3445000 | 1688000 | 0.59 | 2033000 | 1998 |

+| CA | 450000 | 83 | 37350000 | 12326000 | 0.62 | 23157000 | 1998 |

+| CO | 27000 | 72 | 1944000 | 1594000 | 0.7 | 1361000 | 1998 |

+

+

+Create a basic scatterplot to show the relationship between the price per pound of honey and its U.S. state of origin. Make the `y` axis tall enough to display all the states:

+

+```python

+sns.relplot(x="priceperlb", y="state", data=honey, height=15, aspect=.5);

+```

+

+

+Now, show the same data with a honey color scheme to show how the price evolves over the years. You can do this by adding a 'hue' parameter to show the change, year over year:

+

+> ✅ Learn more about the [color palettes you can use in Seaborn](https://seaborn.pydata.org/tutorial/color_palettes.html) - try a beautiful rainbow color scheme!

+

+```python

+sns.relplot(x="priceperlb", y="state", hue="year", palette="YlOrBr", data=honey, height=15, aspect=.5);

+```

+

+

+With this color scheme change, you can see a clear upward trend in honey price per pound over the years. Indeed, if you look at a sample set in the data to verify (pick a given state, Arizona for example), you can see a general pattern of price increases year over year, with some exceptions:

+

+| state | numcol | yieldpercol | totalprod | stocks | priceperlb | prodvalue | year |

+| ----- | ------ | ----------- | --------- | ------- | ---------- | --------- | ---- |

+| AZ | 55000 | 60 | 3300000 | 1485000 | 0.64 | 2112000 | 1998 |

+| AZ | 52000 | 62 | 3224000 | 1548000 | 0.62 | 1999000 | 1999 |

+| AZ | 40000 | 59 | 2360000 | 1322000 | 0.73 | 1723000 | 2000 |

+| AZ | 43000 | 59 | 2537000 | 1142000 | 0.72 | 1827000 | 2001 |

+| AZ | 38000 | 63 | 2394000 | 1197000 | 1.08 | 2586000 | 2002 |

+| AZ | 35000 | 72 | 2520000 | 983000 | 1.34 | 3377000 | 2003 |

+| AZ | 32000 | 55 | 1760000 | 774000 | 1.11 | 1954000 | 2004 |

+| AZ | 36000 | 50 | 1800000 | 720000 | 1.04 | 1872000 | 2005 |

+| AZ | 30000 | 65 | 1950000 | 839000 | 0.91 | 1775000 | 2006 |

+| AZ | 30000 | 64 | 1920000 | 902000 | 1.26 | 2419000 | 2007 |

+| AZ | 25000 | 64 | 1600000 | 336000 | 1.26 | 2016000 | 2008 |

+| AZ | 20000 | 52 | 1040000 | 562000 | 1.45 | 1508000 | 2009 |

+| AZ | 24000 | 77 | 1848000 | 665000 | 1.52 | 2809000 | 2010 |

+| AZ | 23000 | 53 | 1219000 | 427000 | 1.55 | 1889000 | 2011 |

+| AZ | 22000 | 46 | 1012000 | 253000 | 1.79 | 1811000 | 2012 |
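You can check this pattern in code. The sketch below copies the Arizona prices from the table above into a small DataFrame (with the full dataset you would filter with `honey[honey['state'] == 'AZ']` instead) and lists the years in which the price dropped compared with the previous year.

```python
import pandas as pd

# Arizona rows copied from the table above (year and price per pound only)
az = pd.DataFrame({
    'year': list(range(1998, 2013)),
    'priceperlb': [0.64, 0.62, 0.73, 0.72, 1.08, 1.34, 1.11, 1.04,
                   0.91, 1.26, 1.26, 1.45, 1.52, 1.55, 1.79],
})

# Year-over-year change in price; negative values are the exceptions to the upward trend
az['change'] = az['priceperlb'].diff()
print(az[az['change'] < 0][['year', 'priceperlb', 'change']])
```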

+

+

+Another way to visualize this progression is to use size, rather than color. For colorblind users, this might be a better option. Edit your visualization to encode the year as dot size, so that later years are drawn with larger dots:

+

+```python

+sns.relplot(x="priceperlb", y="state", size="year", data=honey, height=15, aspect=.5);

+```

+You can see the size of the dots gradually increasing.

+

+

+

+Is this a simple case of supply and demand? Due to factors such as climate change and colony collapse, is there less honey available for purchase year over year, and thus the price increases?

+

+To discover a correlation between some of the variables in this dataset, let's explore some line charts.

+

+## Line charts

+

+Question: Is there a clear rise in price of honey per pound year over year? You can most easily discover that by creating a single line chart:

+

+```python

+sns.relplot(x="year", y="priceperlb", kind="line", data=honey);

+```

+Answer: Yes, with some exceptions around the year 2003:

+

+

+

+✅ Because Seaborn is aggregating data around one line, it displays "the multiple measurements at each x value by plotting the mean and the 95% confidence interval around the mean". [source](https://seaborn.pydata.org/tutorial/relational.html). This time-consuming behavior can be disabled by adding `ci=None` (in seaborn 0.12 and later, use `errorbar=None` instead).
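Assuming the default `estimator` (the mean), the line Seaborn draws at each x value is just the per-group mean, which you can reproduce with pandas. The sketch below uses a made-up `toy` DataFrame rather than the honey data; the confidence band is omitted because Seaborn computes it by bootstrapping.

```python
import pandas as pd

# Made-up prices for two states over three years
toy = pd.DataFrame({
    'state': ['AZ', 'CA', 'AZ', 'CA', 'AZ', 'CA'],
    'year': [2001, 2001, 2002, 2002, 2003, 2003],
    'priceperlb': [0.70, 0.60, 1.00, 0.80, 1.30, 1.10],
})

# With the default estimator, the plotted line at each year is the mean over all states
per_year_mean = toy.groupby('year')['priceperlb'].mean()
print(per_year_mean)
```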

+

+Question: Well, in 2003 can we also see a spike in the honey supply? What if you look at total production year over year?

+

+```python

+sns.relplot(x="year", y="totalprod", kind="line", data=honey);

+```

+

+

+

+Answer: Not really. If you look at total production, it actually seems to have increased in that particular year, even though generally speaking the amount of honey being produced is in decline during these years.

+

+Question: In that case, what could have caused that spike in the price of honey around 2003?

+

+To discover this, you can explore a facet grid.

+

+## Facet grids

+

+A facet grid splits your dataset by the values of one variable (in our case, you can choose 'year' to avoid producing too many facets). Seaborn then draws a plot of your chosen x and y variables for each of those facets, for easier visual comparison. Does 2003 stand out in this type of comparison?

+

+Create a facet grid by continuing to use `relplot` as recommended by [Seaborn's documentation](https://seaborn.pydata.org/generated/seaborn.FacetGrid.html?highlight=facetgrid#seaborn.FacetGrid).

+

+```python

+sns.relplot(

+ data=honey,

+ x="yieldpercol", y="numcol",

+ col="year",

+ col_wrap=3,

+ kind="line"

+);

+```

+In this visualization, you can compare the yield per colony and number of colonies year over year, side by side with a wrap set at 3 for the columns:

+

+

+

+For this dataset, nothing particularly stands out with regard to the number of colonies and their yield, year over year and state over state. Is there a different way to look at finding a correlation between these two variables?

+

+## Dual-line Plots

+

+Try a multiline plot by superimposing two lineplots on top of each other, using Seaborn's `despine` to remove their top and right spines, and using `ax.twinx` [derived from Matplotlib](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.twinx.html). Twinx allows a chart to share the x axis and display two y axes. So, display the yield per colony and number of colonies, superimposed:

+

+```python

+fig, ax = plt.subplots(figsize=(12,6))

+lineplot = sns.lineplot(x=honey['year'], y=honey['numcol'], data=honey,

+ label = 'Number of bee colonies', legend=False)

+sns.despine()

+plt.ylabel('# colonies')

+plt.title('Honey Production Year over Year');

+

+ax2 = ax.twinx()

+lineplot2 = sns.lineplot(x=honey['year'], y=honey['yieldpercol'], ax=ax2, color="r",

+ label ='Yield per colony', legend=False)

+sns.despine(right=False)

+plt.ylabel('colony yield')

+ax.figure.legend();

+```

+

+

+While nothing jumps out to the eye around the year 2003, it does allow us to end this lesson on a happier note: while the overall number of colonies is declining, it may be stabilizing, and the yield per colony is actually increasing, even with fewer bees.

+

+Go, bees, go!

+

+🐝❤️

## 🚀 Challenge

+In this lesson, you learned a bit more about other uses of scatterplots and line plots, including facet grids. Challenge yourself to create a facet grid using a different dataset, maybe one you used prior to these lessons. Note how long they take to create and how careful you need to be about how many grids you draw using these techniques.

## Post-Lecture Quiz

[Post-lecture quiz]()

## Review & Self Study

-

+Line plots can be simple or quite complex. Do a bit of reading in the [Seaborn documentation](https://seaborn.pydata.org/generated/seaborn.lineplot.html) on the various ways you can build them. Try to enhance the line charts you built in this lesson with other methods listed in the docs.

## Assignment

-[Assignment Title](assignment.md)

+[Dive into the beehive](assignment.md)

diff --git a/3-Data-Visualization/13-visualization-relationships/assignment.md b/3-Data-Visualization/13-visualization-relationships/assignment.md

index b7af6412..5dca670a 100644

--- a/3-Data-Visualization/13-visualization-relationships/assignment.md

+++ b/3-Data-Visualization/13-visualization-relationships/assignment.md

@@ -1,8 +1,11 @@

-# Title

+# Dive into the beehive

## Instructions

+In this lesson you started looking at a dataset around bees and their honey production over a period of time that saw losses in the bee colony population overall. Dig deeper into this dataset and build a notebook that can tell the story of the health of the bee population, state by state and year by year. Do you discover anything interesting about this dataset?

+

## Rubric

-Exemplary | Adequate | Needs Improvement

---- | --- | -- |

+| Exemplary | Adequate | Needs Improvement |

+| ------------------------------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------- | ---------------------------------------- |

+| A notebook is presented with a story annotated with at least three different charts showing aspects of the dataset, state over state and year over year | The notebook lacks one of these elements | The notebook lacks two of these elements |

\ No newline at end of file

diff --git a/3-Data-Visualization/13-visualization-relationships/images/dual-line.png b/3-Data-Visualization/13-visualization-relationships/images/dual-line.png

new file mode 100644

index 00000000..9f56938e

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/dual-line.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/facet.png b/3-Data-Visualization/13-visualization-relationships/images/facet.png

new file mode 100644

index 00000000..ac6b606b

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/facet.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/line1.png b/3-Data-Visualization/13-visualization-relationships/images/line1.png

new file mode 100644

index 00000000..5945dba2

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/line1.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/line2.png b/3-Data-Visualization/13-visualization-relationships/images/line2.png

new file mode 100644

index 00000000..7380832c

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/line2.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/scatter1.png b/3-Data-Visualization/13-visualization-relationships/images/scatter1.png

new file mode 100644

index 00000000..b5581842

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/scatter1.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/scatter2.png b/3-Data-Visualization/13-visualization-relationships/images/scatter2.png

new file mode 100644

index 00000000..8a45eac6

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/scatter2.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/images/scatter3.png b/3-Data-Visualization/13-visualization-relationships/images/scatter3.png

new file mode 100644

index 00000000..1b186ceb

Binary files /dev/null and b/3-Data-Visualization/13-visualization-relationships/images/scatter3.png differ

diff --git a/3-Data-Visualization/13-visualization-relationships/solution/notebook.ipynb b/3-Data-Visualization/13-visualization-relationships/solution/notebook.ipynb

index ca53da06..5e61879d 100644

--- a/3-Data-Visualization/13-visualization-relationships/solution/notebook.ipynb

+++ b/3-Data-Visualization/13-visualization-relationships/solution/notebook.ipynb

@@ -6,12 +6,371 @@

"# Visualizing Honey Production 🍯 🐝"

],

"metadata": {}

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 177,

+ "source": [

+ "import pandas as pd\n",

+ "import matplotlib.pyplot as plt\n",

+ "import seaborn as sns\n",

+ "honey = pd.read_csv('../../../data/honey.csv')\n",

+ "honey.head()"

+ ],

+ "outputs": [

+ {

+ "output_type": "execute_result",

+ "data": {

+ "text/plain": [

+ " state numcol yieldpercol totalprod stocks priceperlb \\\n",

+ "0 AL 16000.0 71 1136000.0 159000.0 0.72 \n",

+ "1 AZ 55000.0 60 3300000.0 1485000.0 0.64 \n",

+ "2 AR 53000.0 65 3445000.0 1688000.0 0.59 \n",

+ "3 CA 450000.0 83 37350000.0 12326000.0 0.62 \n",

+ "4 CO 27000.0 72 1944000.0 1594000.0 0.70 \n",

+ "\n",

+ " prodvalue year \n",

+ "0 818000.0 1998 \n",

+ "1 2112000.0 1998 \n",

+ "2 2033000.0 1998 \n",

+ "3 23157000.0 1998 \n",

+ "4 1361000.0 1998 "

+ ]

+ },

+ "metadata": {},

+ "execution_count": 177

+ }

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "markdown",

+ "source": [

+ "Use a scatterplot to show the relationship between a state and its price per pound for local honey"

+ ],

+ "metadata": {}

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 178,

+ "source": [

+ "sns.relplot(x=\"priceperlb\", y=\"state\", data=honey, height=15, aspect=.5);\n"

+ ],

+ "outputs": [

+ {

+ "output_type": "display_data",

+ "data": {

+ "text/plain": [

+ ""

+ ],

+ "image/svg+xml": "\n\n\n